This topic describes how DDL statements are executed in PolarDB-X.

Background information

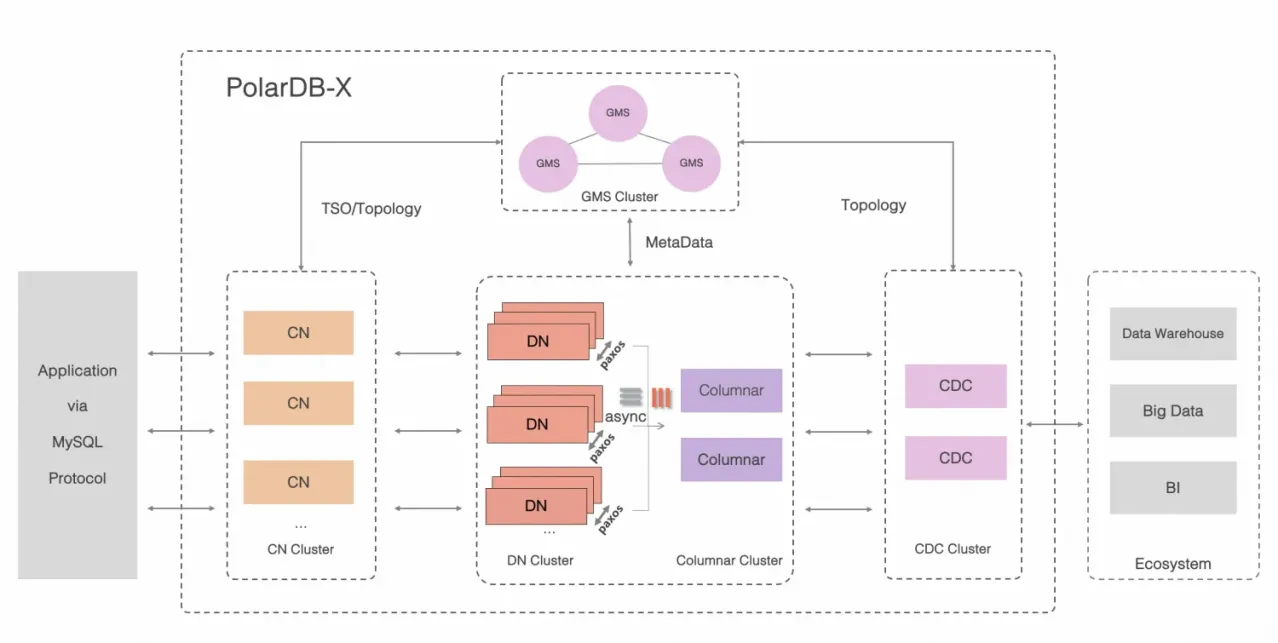

The following figure shows the architecture of PolarDB-X.

During the execution of DDL statements in PolarDB-X, almost all components are involved to ensure that the statements are correctly executed.

Global Meta Service (GMS) nodes maintain metadata information such as tables, schemas, and statistics.

Compute nodes (CNs) provide capabilities such as distributed DDL execution and global index maintenance.

Data nodes (DNs) maintain the physical data of all tables and schemas and execute DDL statements that are pushed down, such as ALTER TABLE.

For ease of description in this topic, DDL statements that are sent by users to CNs in PolarDB-X are referred to as user DDL statements. DDL statements that are pushed down by CNs to DNs during the execution of user DDL statements are referred to as physical DDL statements.

DDL execution methods

In PolarDB-X, DDL statements fall into the following two categories by execution mode: physically executed DDL statements and logically executed DDL statements.

Physical execution

Physically executed DDL statements are those that are pushed down to and executed on DNs. During the physical execution of DDL statements, CNs only maintain the metadata changes. The actual schema changes are performed by DNs. Such DDL statements include CREATE TABLE, DROP TABLE, CREATE LOCAL INDEX, and ALTER TABLE.

Atomicity

In PolarDB-X, a logical table corresponds to multiple physical tables, which are also known as shards. These shards are distributed across multiple DNs in most cases. If a DDL statement is physically executed on a logical table, a CN converts the user DDL statement to physical DDL statements and then pushes them down to the corresponding DNs for execution. The completion status on each shard is recorded. After the physical DDL statements are executed on all shards, the CN performs the final metadata change.

The physically executed DDL statements can be concurrently executed. Therefore, the CN not only records the execution status on each shard, but also needs to ensure the atomicity of changes to all shards. This ensures that all shards can be changed in an atomic manner. For more information, see DDL atomicity.

Execution algorithms

During the physical execution of DDL statements, whether tables are locked, whether tables need to be recreated, and whether only metadata is modified all depend on the execution algorithm that is used by DNs to execute the physical DDL statements. Table locking determines whether concurrent DML operations are allowed. Table recreation determines the execution duration of DDL statements. Metadata-only modification determines whether the DDL operations can be instantly complete.

The following three execution algorithms are available for DNs to execute physical DDL statements:

INSTANT algorithm: Only the metadata in the data dictionary is modified. No historical data needs to be modified or copied, and no physical table is recreated. The execution of physical DDL statements can be complete in seconds.

INPLACE algorithm: Data needs to be copied, and a physical table needs to be recreated. However, both the copy and recreate operations are complete within the storage engine. Generally, concurrent read and write operations can be performed, which minimizes the impact on your business.

COPY algorithm: All data needs to be copied from a physical table to a new table. When data is being copied, the table is locked and all write operations are blocked, which has a significant impact on your business.

Execution algorithms that are supported vary with DDL statements. For more information, see Online DDL.

Logical execution

Logically executed DDL statements are those that are executed by using the Online Schema Change (OSC) capability of CNs. Logical execution generally involves operations such as creating a temporary table, copying historical data, synchronizing incremental data, and performing metadata switching. The execution often takes a long time. However, table locking is not required throughout the process. Logically executed DDL statements include CREATE GLOBAL INDEX, DROP GLOBAL INDEX, ONLINE MODIFY COLUMN, ADD PRIMARY KEY, and partition change-related DDL statements. Some logically executed DDL statements, such as RENAME TABLE and RENAME GLOBAL INDEX, can be complete by directly modifying and synchronizing metadata.

Logically executed DDL statements generally have following characteristics:

Changes are made online without the need to lock tables. This minimizes the impact on your business.

Generally, data is copied and a table is recreated. The execution takes a relatively long period of time.

Atomicity is ensured. This prevents the situation in which the execution is successful on some shards but fails on other shards.

DOP adjustment

Generally, historical data must be copied for logically executed DDL statements, which is time-consuming. If CNs and DNs do not have CPU or I/O bottlenecks, you can adjust the degree of parallelism (DOP) and rate limit for backfilling of historical data to improve the efficiency of DDL statement execution.

For example, during the creation of a global secondary index (GSI), the historical data in the primary table needs to be backfilled to the GSI table. If CNs and DNs do not have CPU or I/O resource bottlenecks, you can adjust the DOP and rate limit for backfilling of historical data to accelerate the data backfilling.

-- Set the global DOP for data backfilling. Default value: 32, which does not need to be adjusted based on experience.

set global BACKFILL_PARALLELISM = 32;

-- Set the DOP for backfilling data to physical shards. Default value: 4. We recommend you adjust the DOP if the amount of such data is large.

set global PHYSICAL_TABLE_BACKFILL_PARALLELISM = 8;

-- Set the rate limit for data backfilling. Default value: 150000. Unit: row/s.

set global GSI_BACKFILL_SPEED_LIMITATION = 250000;Given that the schemas, topologies, and data sizes vary with tables, you can also adjust other parameters in addition to the preceding parameters. For more information, see Parallel DDL.

Obtain the execution modes of DDL statements

In PolarDB-X, you can execute the EXPLAIN statement to obtain the execution mode of a DDL statement. If the output is similar to that of the original DDL statement, the DDL statement is physically executed. If a statement similar to CREATE TABLE is included in the output, the DDL statement is logically executed.

Example

In a PolarDB-X instance, create a logical table named t1. The following sample code shows its schema:

+-------+--------------------------------------------------------------------------+

| Table | Create Table |

+-------+--------------------------------------------------------------------------+

| t1 | CREATE TABLE `t1` (

`a` int(11) DEFAULT NULL,

`b` int(11) DEFAULT NULL

) ENGINE = InnoDB DEFAULT CHARSET = utf8mb4 |

+-------+--------------------------------------------------------------------------+Execute the EXPLAIN statement to check whether the DDL statement for adding a local index to the logical table t1 is logically or physically executed. The result shows that the DDL statement is physically executed.

explain alter table t1 add local index idx(b);

+----------------------------------------------------------------------------------+

| EXECUTION PLAN |

+----------------------------------------------------------------------------------+

| ALTER_TABLE( tables="t1", shardCount=16, sql="ALTER TABLE ? ADD INDEX idx (b)" ) |

| EXCLUDE_RESOURCE( wumu.t1, wumu.tg17 ) |

| SHARE_RESOURCE( wumu.t1, wumu.tg17 ) |

+----------------------------------------------------------------------------------+Execute the EXPLAIN statement to check whether the DDL statement for changing the partitioning algorithm for the logical table t1 to

partition by key(a)is logically or physically executed. The result shows that the DDL statement is logically executed.

explain alter table t1 partition by key(a);

+----------------------------------------------------------------------------------+

| EXECUTION PLAN |

+----------------------------------------------------------------------------------+

| CREATE_TABLE( tables="t1_msfg", shardCount=16, sql="CREATE TABLE ? (

`a` int(11) DEFAULT NULL,

`b` int(11) DEFAULT NULL,

`_drds_implicit_id_` bigint(20) NOT NULL AUTO_INCREMENT,

PRIMARY KEY (`_drds_implicit_id_`),

INDEX `auto_shard_key_a` USING BTREE(`a`)

) ENGINE = InnoDB DEFAULT CHARSET = utf8mb4" ) |

| DROP_TABLE( tables="t1_msfg", shardCount=16, sql="DROP TABLE IF EXISTS ?" ) |

| EXCLUDE_RESOURCE( wumu.t1, wumu.t1_msfg, wumu.tg17 ) |

| SHARE_RESOURCE( wumu.t1, wumu.t1_msfg, wumu.tg17 ) |

+-----------------------------------------------------------------------------------+Asynchronously execute DDL statements

In PolarDB-X, DDL statements are synchronously executed by default. This is consistent with that in MySQL. Synchronous execution of a DDL statement may be suspended in the case of a client disconnection. If the execution of a DDL statement is expected to take long, we recommend that you asynchronously execute the DDL statement.

You can asynchronously execute a DDL statement by adding a hint to the DDL statement. Example:

/*+TDDL:cmd_extra(PURE_ASYNC_DDL_MODE=true)*/ alter table t1 add global index gsi_a(a) partition by key(a);