You can use the video classification component to train a video classification model for inference based on raw video data. This topic describes how to configure the video classification component and provides an example of how to use the component in Platform for AI (PAI).

Prerequisites

Object Storage Service (OSS) is activated, and Machine Learning Designer is authorized to access OSS. For more information, see Activate OSS and Grant the permissions that are required to use Machine Learning Designer.

Limits

The video classification component is available only in Machine Learning Designer of PAI.

You can use the video classification component with the computing resources of Deep Learning Containers (DLC).

Overview

The video classification component provides mainstream 3D convolutional neural network (CNN) and transformer models that can be used to run video classification training jobs. The supported X3D models are X3D-XS, X3D-M, and X3D-L. The supported transformer models are swin-t, swin-s, swin-b, and swin-t-bert. The swin-t-bert model supports dual-modal input based on video and text data.

You can find the video classification component in the Offline Training subfolder in the Video Algorithm folder of the component library.

Configure the component in the PAI console

Input ports

| Input port (from left to right) | Data type | Recommended upstream component | Required |
| --- | --- | --- | --- |
| train data | OSS | Read File Data | No. If you do not use this input port to pass the training data to the video classification component, you need to go to the Fields Setting tab of the component and configure the oss path to train file parameter. For more information, see the Component parameters table in this topic. |
| eval data | OSS | Read File Data | No. If you do not use this input port to pass the evaluation data to the video classification component, you need to go to the Fields Setting tab of the component and configure the oss path to evaluation file parameter. For more information, see the Component parameters table in this topic. |
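The rule for choosing between an input port and the corresponding oss path parameter can be sketched as follows. This is only an illustration of the behavior described in this topic (the input port takes precedence, and at least one source must be provided). The function name `resolve_data_path` and its arguments are hypothetical and are not part of PAI.

```python
from typing import Optional


def resolve_data_path(port_path: Optional[str],
                      param_path: Optional[str],
                      name: str) -> str:
    """Pick the data source the way this topic describes it.

    Hypothetical helper: data passed through the input port wins over the
    parameter on the Fields Setting tab; if neither is set, fail early.
    """
    if port_path:
        return port_path
    if param_path:
        return param_path
    raise ValueError(
        f"No {name} data: connect the input port or set the oss path parameter"
    )


# Both sources are set, so the input port value is used.
train_path = resolve_data_path(
    "oss://bucket-from-port/train.txt",       # placeholder path
    "oss://bucket-from-parameter/train.txt",  # placeholder path
    "train",
)
print(train_path)
```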

Component parameters

| Tab | Parameter | Required | Description | Default value |
| --- | --- | --- | --- | --- |
| Fields Setting | oss path to save checkpoint | Yes | The Object Storage Service (OSS) path in which the model is stored. Example: oss://pai-online-shanghai.oss-cn-shanghai-internal.aliyuncs.com/test/test_video_cls. | N/A |
| Fields Setting | oss path to data | No | The OSS directory in which the video files are stored. If a directory is specified, the video file path consists of the directory followed by the video file name in the labeling file. For example, if the OSS directory is oss://pai-vision-data-hz/EasyMM/DataSet/kinetics400/ and the video file name in the labeling file is video/1.mp4, the video file path is oss://pai-vision-data-hz/EasyMM/DataSet/kinetics400/video/1.mp4. | N/A |
| Fields Setting | oss path to train file | No | The OSS path in which the training data is stored. This parameter is required if you do not use the input port to pass the training data to the video classification component. Example: oss://pai-vision-data-hz/EasyMM/DataSet/kinetics400/train_pai.txt. If you use both an input port and this parameter to pass the training data to the video classification component, the training data that is passed by using the input port is used. If the labeling file does not contain text, separate the video file name and label in each row of the labeling file with a space. Example: Video file name Label. If the labeling file contains text, separate the video file name, text, and label in each row with \t. Example: Video file name\tText\tLabel. | N/A |
| Fields Setting | oss path to evaluation file | No | The OSS path in which the evaluation data is stored. This parameter is required if you do not use the input port to pass the evaluation data to the video classification component. Example: oss://pai-vision-data-hz/EasyMM/DataSet/kinetics400/train_pai.txt. If you use both an input port and this parameter to pass the evaluation data to the video classification component, the evaluation data that is passed by using the input port is used. | N/A |
| Fields Setting | oss path to pretrained model | No | The OSS path in which a pre-trained model is stored. We recommend that you use a pre-trained model to improve the model precision. | N/A |
| Parameters Setting | video classification network | Yes | The network that is used by the model. Valid values: x3d_xs, x3d_l, x3d_m, swin_t, swin_s, swin_b, and swin_t_bert. | x3d_xs |
| Parameters Setting | whether to use multilabel | No | Specifies whether to use multiple labels. This parameter is available only if you select the swin_t_bert network. | false |
| Parameters Setting | numclasses | Yes | The number of categories. | N/A |
| Parameters Setting | learning rate | Yes | The initial learning rate. For the x3d model, we recommend that you set the learning rate to 0.1. For the swin model, we recommend that you set the learning rate to 0.0001. | 0.1 |
| Parameters Setting | number of train epochs | Yes | The number of training epochs. For the x3d model, we recommend that you set the value to 300. For the swin model, we recommend that you set the value to 30. | 10 |
| Parameters Setting | warmup epoch | Yes | The number of warmup epochs. During warmup, training starts from a small learning rate, and the value of the learning rate parameter is reached only after the specified number of warmup epochs. This helps prevent gradient explosion. For example, if you set the warmup epoch parameter to 35, the learning rate of the model is gradually increased to the value specified by the learning rate parameter over 35 warmup epochs. | 35 |
| Parameters Setting | batch size | Yes | The size of a training batch. This parameter specifies the number of data samples that are used in a single training iteration. | 32 |
| Parameters Setting | model save interval | No | The epoch interval at which a checkpoint is saved. A value of 1 indicates that a checkpoint is saved each time an epoch is completed. | 1 |
| Tuning | use fp 16 | Yes | Specifies whether to enable FP16 to reduce memory usage during model training. | N/A |
| Tuning | single worker or distributed on dlc | No | The mode in which the component is run. Valid values: single_dlc (single worker on Deep Learning Containers (DLC)) and distribute_dlc (distributed workers on DLC). | single_dlc |
| Tuning | gpu machine type | No | The specification of the GPU-accelerated node that you want to use. | 8vCPU+60GB Mem+1xp100-ecs.gn5-c8g1.2xlarge |
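To illustrate how the warmup epoch and learning rate parameters interact, the following sketch computes a per-epoch learning rate under linear warmup. This topic does not state which warmup curve the component uses, so the linear shape and the `start_factor` value are assumptions made for illustration, and the function name `warmup_learning_rate` is hypothetical.

```python
def warmup_learning_rate(epoch: int,
                         base_lr: float,
                         warmup_epochs: int,
                         start_factor: float = 0.01) -> float:
    """Return the learning rate for a zero-based epoch index.

    Assumption: linear warmup from base_lr * start_factor up to base_lr.
    After the warmup epochs, base_lr is returned unchanged (a real
    training job may additionally apply a decay schedule).
    """
    if warmup_epochs <= 0 or epoch >= warmup_epochs:
        return base_lr
    start_lr = base_lr * start_factor
    progress = epoch / warmup_epochs  # 0.0 at the first epoch
    return start_lr + (base_lr - start_lr) * progress


# Defaults from the parameter table: learning rate 0.1, warmup epoch 35.
for epoch in (0, 10, 20, 34, 35):
    print(epoch, warmup_learning_rate(epoch, base_lr=0.1, warmup_epochs=35))
```

With these settings, the rate grows every epoch and reaches the configured learning rate at epoch index 35, which matches the behavior described for the warmup epoch parameter.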

Output ports

Output port (from left to right)

Data type

Downstream component

output model

The OSS path of the output model. The value is the same as that you specified for the oss path to save checkpoint parameter on the Fields Setting tab. The output model in the .pth format is stored in this OSS path.

Examples

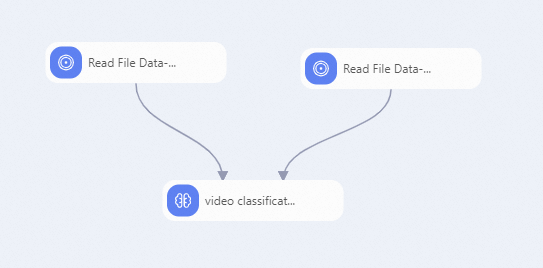

The following figure shows a sample pipeline in which the video classification component is used.  In this example, configure the components in the preceding figure by performing the following steps:

In this example, configure the components in the preceding figure by performing the following steps:

Use two Read File Data components as the upstream components of the video classification component to read video data files as the input training data and evaluation data of the component. To do this, set the OSS Data Path parameters of the two Read File Data components to the OSS paths of the video data files.

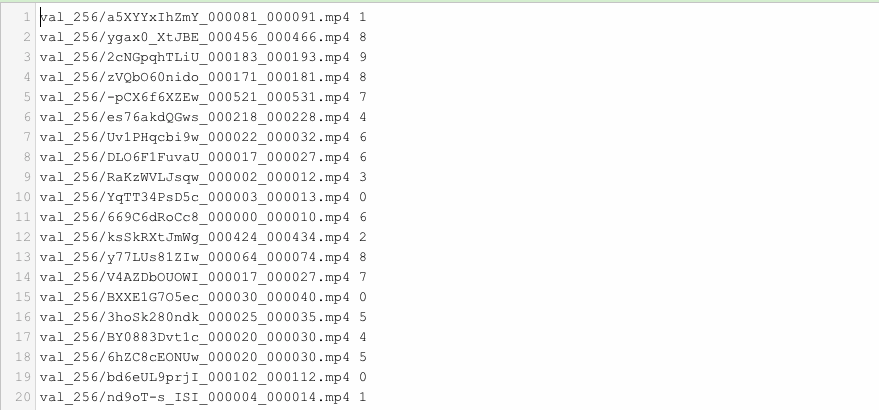

The following figure shows the format of a video labeling file.

Each row in the file contains a video file path and a category label that are separated by a space character.

Each row in the file contains a video file path and a category label that are separated by a space character. Specify the training data and evaluation data as the input of the video classification component and configure other parameters. For more information, see Configure the video classification component.
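As a quick check before you start a training job, the labeling file format can be validated with a short script. The following is a minimal sketch that assumes the two row layouts described in this topic: a file name and a label separated by a space, or a file name, text, and a label separated by tab characters. The names `LabeledVideo` and `parse_label_line` are hypothetical, the label is kept as a plain string because the label encoding is not specified here, and the sample rows are made up.

```python
from typing import NamedTuple, Optional


class LabeledVideo(NamedTuple):
    video_path: str
    text: Optional[str]  # None when the labeling file has no text column
    label: str


def parse_label_line(line: str, data_root: str = "") -> LabeledVideo:
    """Parse one row of a labeling file (hypothetical helper).

    Rows that contain a tab are read as: file name, text, label.
    Other rows are read as: file name, label (split at the last space).
    If data_root is given, it is prepended to the file name, mirroring
    the oss path to data parameter.
    """
    line = line.rstrip("\r\n")
    if "\t" in line:
        parts = line.split("\t")
        if len(parts) != 3:
            raise ValueError(f"expected 3 tab-separated fields: {line!r}")
        name, text, label = parts
    else:
        parts = line.rsplit(" ", 1)
        if len(parts) != 2:
            raise ValueError(f"expected 'file name label': {line!r}")
        name, label = parts
        text = None
    if data_root:
        name = data_root.rstrip("/") + "/" + name.lstrip("/")
    return LabeledVideo(name, text, label)


root = "oss://pai-vision-data-hz/EasyMM/DataSet/kinetics400/"
print(parse_label_line("video/1.mp4 0", root))
print(parse_label_line("video/2.mp4\tsample caption\t3"))
```

Splitting at the last space keeps the label intact even if a file name happens to contain spaces, which is a design choice of this sketch rather than documented behavior of the component.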

References

For information about Machine Learning Designer components, see Overview of Machine Learning Designer.

Machine Learning Designer provides various preset algorithm components. You can select a component for data processing based on your business requirements. For more information, see Component reference: Overview of all components.