If your business involves image classification, you can use the image classification (torch) component of Platform for AI (PAI) to build image classification models for inference. This topic describes how to configure the image classification (torch) component and provides an example on how to use the component.

Prerequisites

Object Storage Service (OSS) is activated, and Machine Learning Designer is authorized to access OSS. For more information, see Activate OSS and Grant the permissions that are required to use Machine Learning Designer.

Limits

The image classification (torch) component is available only in Machine Learning Designer of Platform for AI (PAI).

The image classification (torch) component can be used only with the computing resources of Deep Learning Containers (DLC) of PAI.

Background information

The image classification (torch) component provides the convolutional neural network (CNN) and transformer model types and supports the ResNet, ResNeXt, HRNet, ViT, SwinT, and MobileNet-v2 algorithms. The component also provides the pre-trained ImageNet model to facilitate model tuning.

You can find the image classification (torch) component in the Offline Training subfolder in the Video Algorithm folder of the component library.

Configure the component in the PAI console

Input ports

| Input port (from left to right) | Data type | Recommended upstream component | Required |
| --- | --- | --- | --- |
| data annotation path for training | OSS | | No |
| data annotation path for evaluation | OSS | | No |

Component parameters

Tab

Parameter

Required

Description

Default value

Fields Setting

model type

Yes

The model type used for model training. Only Classification is supported.

Classification

oss dir to save model

Yes

The Object Storage Service (OSS) directory in which the trained model is stored. Example: oss://examplebucket/yunji.cjy/designer_test.

N/A

oss annotation path for training data

No

If you did not use the data annotation path for training input port to specify the labeled training data, you must configure this parameter.

Note: If you use both an input port and this parameter to specify the labeled training data, the value specified by the input port takes precedence.

The OSS path of the labeled training data. Example: oss://examplebucket/yunji.cjy/data/imagenet/meta/train_labeled.txt. Each data record in the train_labeled.txt file is stored in the absolute path/image name.jpg label_id format.

Important: Separate the image storage path and label_id with a space.

Important: The component supports the following formats: ClsSourceImageList and ClsSourceItag. You can use the data labeled by iTAG as training input.
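The record format for a ClsSourceImageList file can be checked locally before the file is uploaded. The following is a minimal sketch, not part of PAI: `parse_annotation_line` is a hypothetical helper name, and it assumes that label_id is an integer and is the last space-separated token of each record.

```python
from typing import Tuple


def parse_annotation_line(line: str) -> Tuple[str, int]:
    """Split one record into (image_path, label_id).

    Assumption: label_id is an integer and is the last
    space-separated token, so paths that contain spaces still parse.
    """
    parts = line.strip().rsplit(" ", 1)
    if len(parts) != 2:
        raise ValueError(f"expected '<image path> <label_id>', got: {line!r}")
    image_path, label = parts
    return image_path, int(label)


# Hypothetical record; the path is illustrative only.
record = "oss://examplebucket/data/images/cat 001.jpg 2"
print(parse_annotation_line(record))
```

Splitting from the right keeps image names that contain spaces intact, which matters because the documented pattern itself shows a space in the image name.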

oss annotation path for evaluation data

No

If you did not use the data annotation path for evaluation input port to specify the labeled evaluation data, you must configure this parameter.

Note: If you use both an input port and this parameter to specify the labeled evaluation data, the value specified by the input port takes precedence.

The OSS path of the labeled evaluation data. Example: oss://examplebucket/yunji.cjy/data/imagenet/meta/val_labeled.txt. Each data record in the val_labeled.txt file is stored in the absolute path/image name.jpg label_id format.

Important: Separate the image storage path and label_id with a space.

Note: The component supports the following formats: ClsSourceImageList and ClsSourceItag. You can use the data labeled by iTAG as training input.

N/A

class list file

Yes

The class list file parameter specifies a list of image category names. You can specify category names or the path of a TXT file that stores category names.

If you specify category names, use the following format: [name1,name2,...]. Separate multiple category names with commas (,). Examples: [0, 1, 2] and [person, dog, cat].

If you specify the path of a TXT file, add category names to a TXT file, upload the file to an OSS bucket in the same region, and then specify the OSS path of the file. You can separate the category names in the TXT file with commas (,) or line breaks (\n). Examples: 0, 1, 2 and 0, \n 1, \n 2\n.

If you do not configure this parameter, category names ranging from str(0) to str(num_classes-1) are used. num_classes indicates the number of categories. For example, if the number of categories generated by image classification is 3, the category names are 0, 1, and 2.

N/A
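The accepted forms of the class list can be illustrated with a small parser. This is only a sketch of the rules described for this parameter, not the component's own code: `parse_class_list` is a hypothetical name, and it assumes that category names never contain commas or line breaks.

```python
from typing import List, Optional


def parse_class_list(text: Optional[str], num_classes: int) -> List[str]:
    """Return category names from an inline list or TXT file contents.

    Assumption: names never contain commas or line breaks themselves.
    """
    if not text or not text.strip():
        # Fallback when the parameter is not set: str(0) .. str(num_classes - 1).
        return [str(i) for i in range(num_classes)]
    cleaned = text.strip()
    if cleaned.startswith("[") and cleaned.endswith("]"):
        cleaned = cleaned[1:-1]
    # Treat commas and line breaks as equivalent separators.
    tokens = cleaned.replace("\n", ",").split(",")
    return [t.strip() for t in tokens if t.strip()]


print(parse_class_list("[person, dog, cat]", 3))
print(parse_class_list("0, \n 1, \n 2\n", 3))
print(parse_class_list(None, 3))
```

A check such as `len(parse_class_list(text, n)) == n` can catch a mismatch between the class list and the num classes parameter before a training job is submitted.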

Data Source Type

Yes

The type of input data. Valid values: ClsSourceImageList and ClsSourceItag.

ClsSourceItag

oss path for pretrained model

No

The OSS path in which your pre-trained model is stored. If you have a pre-trained model, set this parameter to the OSS path of your pre-trained model. If you do not configure this parameter, the default pre-trained model provided by PAI is used.

N/A

Parameters Setting

backbone

Yes

The backbone model that you want to use. Valid values:

resnet

resnext

hrnet

vit

swint

mobilenetv2

inceptionv4

resnet

num classes

Yes

The number of categories in the data.

N/A

image size after resizing

Yes

The length and width to which images are resized. By default, the length and width are the same.

224

optimizer

Yes

The optimization method for model training. Valid values:

SGD

Adam

SGD

initial learning rate

Yes

The initial learning rate.

0.05

learning rate policy

Yes

The policy that is used to adjust the learning rate. Only step is supported. A value of step indicates that the learning rate is manually adjusted at specific epochs.

step

lr step

Yes

The epochs at which the learning rate is adjusted. Separate multiple values with commas (,). Each time training completes an epoch specified by this parameter, the learning rate decays by 90%, which means that it is multiplied by 0.1.

For example, the initial learning rate is set to 0.1, the total number of epochs is set to 20, and the lr step parameter is set to 5,10. In this case, the learning rate is 0.1 during epochs 1 to 5. When training enters epoch 6, the learning rate decays to 0.01 and stays at that value until epoch 10 ends. When training enters epoch 11, the learning rate decays to 0.001 and stays at that value until the last epoch ends.

[30,60,90]
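The step policy can be written as a short function for checking a planned schedule. This is a minimal sketch based only on the description of the lr step parameter: `step_lr` is a hypothetical name, epochs are counted from 1, and each step is assumed to multiply the rate by 0.1.

```python
from typing import Sequence


def step_lr(initial_lr: float, lr_steps: Sequence[int], epoch: int) -> float:
    """Learning rate used during a 1-indexed epoch.

    Assumption: each value in lr_steps multiplies the rate by 0.1
    (a 90% decay) starting from the epoch after that value.
    """
    decays = sum(1 for step in lr_steps if epoch > step)
    return initial_lr * (0.1 ** decays)


# Schedule for an initial rate of 0.1, 20 epochs, and lr step set to 5,10.
for epoch in (1, 5, 6, 10, 11, 20):
    print(epoch, f"{step_lr(0.1, [5, 10], epoch):.3f}")
```

Because the values are floating-point numbers, compare them with a tolerance (for example, `math.isclose`) rather than with exact equality.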

train batch size

Yes

The size of a training batch. The value indicates the number of data samples used for model training in a single iteration.

2

eval batch size

Yes

The size of an evaluation batch. The value indicates the number of data samples used for model evaluation in each iteration.

2

total train epochs

Yes

The total number of epochs. An epoch ends when a round of training is complete on all data samples. The total number of epochs indicates the total number of training rounds conducted on data samples.

1

save checkpoint epoch

No

The frequency at which a checkpoint is saved. For example, a value of 1 indicates that a checkpoint is saved each time an epoch ends.

1

type of export model

Yes

The format in which the model is exported. Valid values:

raw

onnx

raw

Tuning

number process of reading data per gpu

No

The number of threads used to read the training data for each GPU.

4

use fp 16

No

Specifies whether to enable FP16 to reduce memory usage during model training.

N/A

single worker or distributed on DLC

Yes

The compute engine that is used to run the component. You can select a compute engine based on your business requirements. Valid values:

single_on_dlc

distribute_on_dlc

single_on_dlc

number of worker

No

This parameter is required if you set the single worker or distributed on DLC parameter to distribute_on_dlc.

The number of concurrent workers for computing.

1

cpu machine type

No

This parameter is required only if you set the single worker or distributed on DLC parameter to distribute_on_dlc.

The CPU type that you want to use.

16vCPU+64GB Mem-ecs.g6.4xlarge

gpu machine type

Yes

The GPU type that you want to use.

8vCPU+60GB Mem+1xp100-ecs.gn5-c8g1.2xlarge

Output ports

Output port

Data type

Downstream component

output model

The OSS directory in which the output model is stored. The value is the same as that you specified for the oss dir to save model parameter on the Fields Setting tab.

Examples

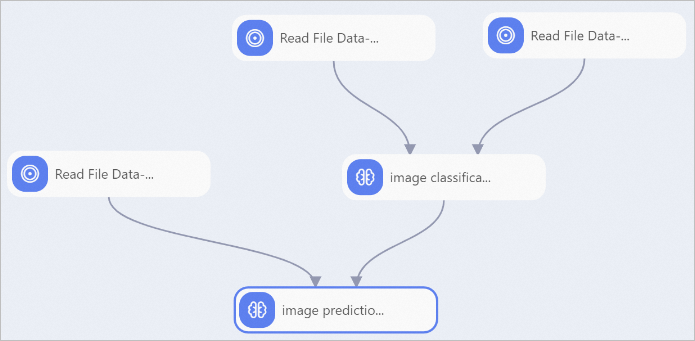

The following figure shows a sample pipeline in which the image classification (torch) component is used.  In this example, configure the components in the preceding figure by performing the following steps:

In this example, configure the components in the preceding figure by performing the following steps:

Prepare data. Label images by using iTAG. For more information, see iTAG.

Use the Read File Data-1 and Read File Data-2 components to read the labeled training data and labeled evaluation data. To do so, set the OSS Data Path parameter of these components to the OSS path of the data that you want to retrieve.

Important: Set the Data Source Type parameter to ClsSourceItag.

Draw lines from the preceding two components to the image classification (torch)-1 component and configure the image classification (torch)-1 component. For more information, see the "Configure the component in the PAI console" section of this topic.

Use the Read File Data-3 component to read prediction data. To do so, set the OSS Data Path parameter of the Read File Data-3 component to the OSS path of the prediction data that you want to retrieve.

Use the image prediction-1 component to conduct batch inference. To do so, configure the following parameters for the image prediction-1 component. For more information, see image prediction.

model type: Select torch_classifier.

oss path for model: Select the value specified for the oss dir to save model parameter of the image classification (torch)-1 component.