This topic describes how to use OpenTelemetry to report the trace data of Kong Gateway. Kong Gateway is a cloud-native, platform-independent, and extensible API gateway. It provides features such as load balancing, security, monitoring, and API management. It supports a variety of plug-ins to extend its functionality. You can use the Kong OpenTelemetry plug-in to collect the trace data of Kong Gateway and report the trace data to Managed Service for OpenTelemetry. You can enable tracing for Kong Gateway with only a few configurations.

Limits

Kong Gateway 3.1.x or later is used.

The tracing feature of Kong Gateway supports only HTTP and HTTPS. This feature does not support TCP or UDP.

The Kong OpenTelemetry plug-in reports trace data to Managed Service for OpenTelemetry only over HTTP. Reporting trace data over Google Remote Procedure Call (gRPC) is not supported.

Prerequisites

Procedure (automatic instrumentation)

You can use the Kong OpenTelemetry plug-in to collect the trace data of Kong Gateway and report the trace data to Managed Service for OpenTelemetry. To enable tracing, you only need to modify the configuration file of Kong Gateway.

Step 1: Enable tracing for Kong Gateway

Method 1: Enable tracing by using environment variables

KONG_TRACING_INSTRUMENTATIONS: enables tracing.

KONG_TRACING_SAMPLING_RATE: the sampling rate. Valid values: 0 to 1.0. A value of 1.0 indicates that all the data is sampled. A value of 0 indicates that no data is sampled.

KONG_TRACING_INSTRUMENTATIONS=all
KONG_TRACING_SAMPLING_RATE=1

Method 2: Enable tracing in the kong.conf file

tracing_instrumentations: enables tracing.

tracing_sampling_rate: the sampling rate. Valid values: 0 to 1.0. A value of 1.0 indicates that all the data is sampled. A value of 0 indicates that no data is sampled.

# kong.conf
tracing_instrumentations = all
tracing_sampling_rate = 1.0
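Both methods set the same two options: each environment variable is the kong.conf key in uppercase with a KONG_ prefix. The following Node.js sketch checks that a sampling rate falls in the valid range and renders the settings in both forms. The function name renderTracingSettings is hypothetical and is not part of Kong Gateway.

```javascript
"use strict";

// Hypothetical helper (not part of Kong Gateway): renders the Step 1
// settings as environment variables and as kong.conf lines.
function renderTracingSettings(instrumentations, samplingRate) {
  // The sampling rate must be a number from 0 to 1.0.
  if (
    typeof samplingRate !== "number" ||
    Number.isNaN(samplingRate) ||
    samplingRate < 0 ||
    samplingRate > 1
  ) {
    throw new RangeError("sampling rate must be between 0 and 1.0");
  }

  const settings = {
    tracing_instrumentations: instrumentations,
    tracing_sampling_rate: samplingRate,
  };

  const envLines = [];
  const confLines = [];
  for (const [key, value] of Object.entries(settings)) {
    // Environment variable form: KONG_ prefix plus the uppercase key.
    envLines.push(`KONG_${key.toUpperCase()}=${value}`);
    // kong.conf form: key = value.
    confLines.push(`${key} = ${value}`);
  }
  return { envLines, confLines };
}

const rendered = renderTracingSettings("all", 0.25);
console.log(rendered.envLines.join("\n"));
console.log(rendered.confLines.join("\n"));
```

A rate such as 0.25 samples roughly one request in four, which can be a reasonable starting point when full sampling produces too much data.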

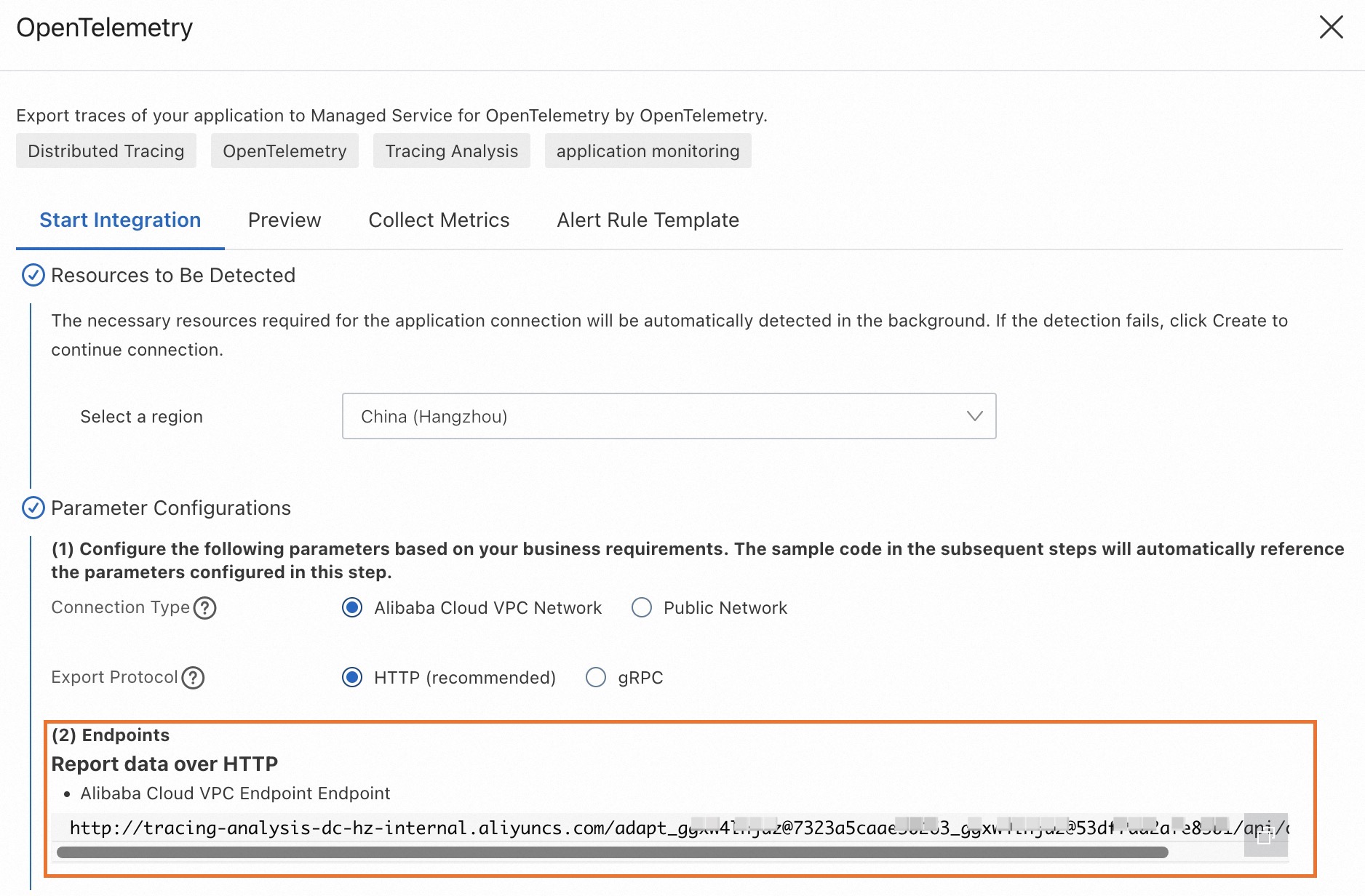

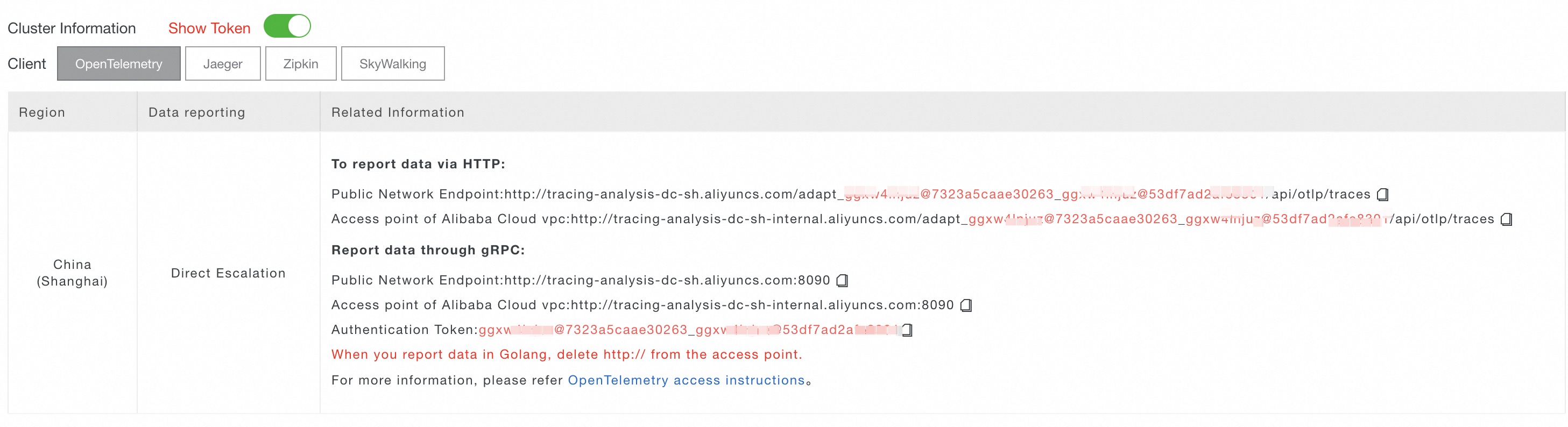

Step 2: Configure the parameters for reporting data by using OpenTelemetry

When you configure the parameters for reporting data by using OpenTelemetry, replace the following variables with the actual values:

${HTTP_ENDPOINT}: the HTTP endpoint. Replace this variable with the HTTP endpoint that you obtained in the Prerequisites section. Example: http://tracing-analysis-dc-hk.aliyuncs.com/adapt_xxxxx/api/otlp/traces.

${SERVICE_NAME}: the custom application name. This name is displayed as the name of Kong Gateway in the Managed Service for OpenTelemetry console.

For information about configuring OpenTelemetry reporting parameters, see OpenTelemetry.

Method 1: Configure the OpenTelemetry endpoint and application name in the kong.yaml configuration file

Kong Gateway ≥ V3.8

# kong.yaml
...
plugins:
  ...
  - name: opentelemetry
    config:
      traces_endpoint: ${HTTP_ENDPOINT}
      resource_attributes:
        service.name: ${SERVICE_NAME}

Kong Gateway < V3.8

# kong.yaml
...
plugins:
  ...
  - name: opentelemetry
    config:
      endpoint: ${HTTP_ENDPOINT}
      resource_attributes:
        service.name: ${SERVICE_NAME}

Method 2: Use the Kong Admin API to configure the OpenTelemetry endpoint and application name

Kong Gateway ≥ V3.8

curl -X POST http://localhost:8001/plugins/ \
  --header "accept: application/json" \
  --header "Content-Type: application/json" \
  --data '
  {
    "name": "opentelemetry",
    "config": {
      "traces_endpoint": "${HTTP_ENDPOINT}",
      "resource_attributes": {
        "service.name": "${SERVICE_NAME}"
      }
    }
  }
  '

Kong Gateway < V3.8

curl -X POST http://localhost:8001/plugins/ \
  --header "accept: application/json" \
  --header "Content-Type: application/json" \
  --data '
  {
    "name": "opentelemetry",
    "config": {
      "endpoint": "${HTTP_ENDPOINT}",
      "resource_attributes": {
        "service.name": "${SERVICE_NAME}"
      }
    }
  }
  '

Method 3: Create a KongClusterPlugin resource in the Kubernetes cluster and configure the OpenTelemetry endpoint and application name

apiVersion: configuration.konghq.com/v1
kind: KongClusterPlugin
metadata:
  name: <global-opentelemetry>
  annotations:
    kubernetes.io/ingress.class: kong
  labels:
    global: "true"
config:
  traces_endpoint: ${HTTP_ENDPOINT}
  resource_attributes:
    service.name: ${SERVICE_NAME}
plugin: opentelemetry

After you configure the OpenTelemetry endpoint and application name, you must restart Kong Gateway to enable OpenTelemetry tracing for Kong Gateway.
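The only difference between the two version-specific samples is the name of the endpoint field: traces_endpoint for Kong Gateway 3.8 and later, and endpoint for earlier versions. The following Node.js sketch builds the plug-in body for either case. The function name buildOtelPluginBody and the collector.example endpoint are hypothetical and used only for illustration.

```javascript
"use strict";

// Hypothetical helper (not part of Kong Gateway): builds the opentelemetry
// plug-in body and picks the endpoint field name by Kong Gateway version.
function buildOtelPluginBody(kongVersion, httpEndpoint, serviceName) {
  const [major, minor] = kongVersion.split(".").map(Number);
  if (!Number.isInteger(major) || !Number.isInteger(minor)) {
    throw new Error(`unrecognized Kong Gateway version: ${kongVersion}`);
  }

  // Trace data is reported over HTTP only, so reject other URL schemes
  // (for example, a gRPC endpoint) before they reach the gateway.
  const { protocol } = new URL(httpEndpoint);
  if (protocol !== "http:" && protocol !== "https:") {
    throw new Error(`endpoint must be an HTTP(S) URL: ${httpEndpoint}`);
  }

  // 3.8 and later use traces_endpoint; earlier versions use endpoint.
  const atLeast38 = major > 3 || (major === 3 && minor >= 8);
  const endpointField = atLeast38 ? "traces_endpoint" : "endpoint";

  return {
    name: "opentelemetry",
    config: {
      [endpointField]: httpEndpoint,
      resource_attributes: { "service.name": serviceName },
    },
  };
}

// The endpoint below is a placeholder, not a real reporting endpoint.
const exampleBody = buildOtelPluginBody(
  "3.4.0",
  "http://collector.example/api/otlp/traces",
  "kong-dev"
);
console.log(JSON.stringify(exampleBody, null, 2));
```

The returned object can be serialized and sent as the JSON body of the POST request to http://localhost:8001/plugins/ shown in Method 2.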

Step 3: View the trace data of Kong Gateway

After you complete the preceding configurations, you can create routes in Kong Gateway and use Kong Gateway to access backend services to generate traces. Then, you can log on to the Managed Service for OpenTelemetry console to view the traces generated for Kong Gateway.

On the Applications page, view the Kong Gateway application.

On the Trace Explorer page, open a trace of Kong Gateway and view information such as the request duration, client IP address, HTTP status code, and HTTP route.

Step 4: (Optional) Configure advanced settings

Configuration 1: Configure the trace pass-through protocol used by the Kong OpenTelemetry plug-in, which determines the trace context format

By default, the Kong OpenTelemetry plug-in uses W3C as the trace pass-through protocol. In this case, the plug-in identifies only the W3C request headers from an upstream node and passes the W3C request headers to a downstream node. If you want to use another protocol, modify the config.propagation.extract, config.propagation.inject, and config.propagation.default_format parameters.

In this example, the plug-in extracts the trace context from the HTTP request headers based on the following protocols in sequence: W3C (OpenTelemetry), b3 (Zipkin), Jaeger, and OpenTracing. A value of preserve for the inject parameter specifies that the extracted data is passed as is to downstream nodes. If no context is identified, the plug-in generates the trace data in the W3C format, which is defined by the default_format parameter.

# kong.yaml
...
plugins:
  ...
  - name: opentelemetry
    config:
      ...
      propagation:
        extract: [ w3c, b3, jaeger, ot ]
        inject: [ preserve ]
        default_format: "w3c"

Configuration 2: Specify the effective scope of the Kong OpenTelemetry plug-in

The Kong OpenTelemetry plug-in can take effect globally or on a specific service, route, or consumer. By default, the plug-in takes effect globally. The following sample code shows how to modify the effective scope of the plug-in.

Configure the Kong OpenTelemetry plug-in to take effect on a specific service

Replace ${SERVICE_NAME|ID} with the ID or name of the service on which the plug-in takes effect.

# kong.yaml
plugins:
  ...
  - name: opentelemetry
    service: ${SERVICE_NAME|ID}
    config:
      traces_endpoint: ${HTTP_ENDPOINT}
      resource_attributes:
        service.name: ${SERVICE_NAME}

Configure the Kong OpenTelemetry plug-in to take effect on a specific route

Replace ${ROUTE_NAME|ID} with the ID or name of the route on which the plug-in takes effect.

# kong.yaml
plugins:
  ...
  - name: opentelemetry
    route: ${ROUTE_NAME|ID}
    config:
      traces_endpoint: ${HTTP_ENDPOINT}
      resource_attributes:
        service.name: ${SERVICE_NAME}

Configure the Kong OpenTelemetry plug-in to take effect on a specific consumer

Replace ${CONSUMER_NAME|ID} with the ID or name of the consumer on which the plug-in takes effect.

# kong.yaml
plugins:
  ...
  - name: opentelemetry
    consumer: ${CONSUMER_NAME|ID}
    config:
      traces_endpoint: ${HTTP_ENDPOINT}
      resource_attributes:
        service.name: ${SERVICE_NAME}
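The three scoped samples differ from the global configuration by a single extra key next to name and config. The following Node.js sketch adds that key to an existing plug-in entry. The function name scopePlugin is hypothetical, and accepting exactly one scope key at a time is a simplification of this sketch that mirrors the samples, not a documented rule of Kong Gateway.

```javascript
"use strict";

const SCOPE_KEYS = ["service", "route", "consumer"];

// Hypothetical helper (not part of Kong Gateway): returns a copy of a plug-in
// entry limited to one service, route, or consumer. Without a scope argument,
// the entry is returned unchanged in meaning and stays global.
function scopePlugin(plugin, scope) {
  if (scope === undefined) {
    return { ...plugin };
  }
  const keys = Object.keys(scope);
  // Simplification of this sketch: exactly one scope key per entry.
  if (keys.length !== 1 || !SCOPE_KEYS.includes(keys[0])) {
    throw new Error(`scope must be exactly one of: ${SCOPE_KEYS.join(", ")}`);
  }
  const { name, ...rest } = plugin;
  // Place the scope key right after the name, as in the samples.
  return { name, ...scope, ...rest };
}

const globalPlugin = {
  name: "opentelemetry",
  config: { resource_attributes: { "service.name": "kong-dev" } },
};
console.log(JSON.stringify(scopePlugin(globalPlugin, { route: "main-route" }), null, 2));
```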

Configuration 3: Specify the types of Kong Gateway traces to be collected

You can specify the types of Kong Gateway traces to be collected by configuring the tracing_instrumentations parameter or the KONG_TRACING_INSTRUMENTATIONS environment variable. The following table describes the valid values of the parameter and environment variable.

| Value | Description |
| --- | --- |
| off | Disables tracing. |
| all | Collects all types of traces. |
| request | Collects traces for requests. |
| db_query | Collects traces for database queries. |
| dns_query | Collects traces for DNS queries. |
| router | Collects traces for router execution, including router rebuilds. |
| http_client | Collects traces for OpenResty HTTP client requests. |
| balancer | Collects traces for load balancing retries. |
| plugin_rewrite | Collects traces for the execution of the plug-in iterator in the rewrite phase. |
| plugin_access | Collects traces for the execution of the plug-in iterator in the access phase. |
| plugin_header_filter | Collects traces for the execution of the plug-in iterator in the header filter phase. |
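A mistyped value is easy to miss, so it can help to check a setting against the table before you apply it. The following Node.js sketch does that. It assumes that several values can be given as a comma-separated list, which the table above does not state, and the function name parseInstrumentations is hypothetical.

```javascript
"use strict";

// The valid values listed in the table above.
const VALID_INSTRUMENTATIONS = new Set([
  "off",
  "all",
  "request",
  "db_query",
  "dns_query",
  "router",
  "http_client",
  "balancer",
  "plugin_rewrite",
  "plugin_access",
  "plugin_header_filter",
]);

// Hypothetical helper (not part of Kong Gateway). Assumption: multiple
// values are written as a comma-separated list.
function parseInstrumentations(value) {
  const names = value
    .split(",")
    .map((name) => name.trim())
    .filter((name) => name !== "");
  if (names.length === 0) {
    throw new Error("no instrumentation specified");
  }
  const unknown = names.filter((name) => !VALID_INSTRUMENTATIONS.has(name));
  if (unknown.length > 0) {
    throw new Error(`unknown instrumentation(s): ${unknown.join(", ")}`);
  }
  return names;
}

console.log(parseInstrumentations("request, db_query,dns_query"));
```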

Example

In this example, the traces of Kong Gateway and a backend service are collected and reported to Managed Service for OpenTelemetry.

Prerequisites

Git, Docker, and Docker Compose are installed. The Docker version is 20.10.0 or later.

Project structure

The following structure shows only the files related to this example.

docker-kong/compose/
│
├── docker-compose.yml      # The configuration file of Docker Compose.
│
├── config/
│   └── kong.yaml           # The declarative configuration file of Kong Gateway.
│
└── backend/
    ├── Dockerfile          # The Dockerfile of the backend service.
    ├── main.js
    ├── package.json
    └── package-lock.json

Procedure

Download the Kong/docker-kong open source project.

git clone https://github.com/Kong/docker-kong.git && cd docker-kong/compose

Create a backend service. In this example, a Node.js application is created.

This step creates a Node.js application that uses the Express framework. The application listens on port 7001 and exposes the /hello endpoint.

Create an application directory.

mkdir backend && cd backend

Create the package.json file.

cat << EOF > package.json
{
  "name": "backend",
  "version": "1.0.0",
  "main": "main.js",
  "scripts": {},
  "keywords": [],
  "author": "",
  "license": "ISC",
  "description": "",
  "dependencies": {
    "@opentelemetry/api": "^1.9.0",
    "@opentelemetry/auto-instrumentations-node": "^0.52.0",
    "axios": "^1.7.7",
    "express": "^4.21.1"
  }
}
EOF

Create the main.js file.

cat << EOF > main.js
"use strict";

const axios = require("axios").default;
const express = require("express");

const app = express();
app.use(express.json());

// The root path calls the /hello endpoint of the same application,
// which produces a second span in the trace.
app.get("/", async (req, res) => {
  const result = await axios.get("http://localhost:7001/hello");
  return res.status(201).send(result.data);
});

// The /hello endpoint returns a fixed JSON response.
app.get("/hello", async (req, res) => {
  console.log("hello world!");
  res.json({ code: 200, msg: "success" });
});

app.listen(7001, () => {
  console.log("Listening on http://localhost:7001");
});
EOF

Create the Dockerfile.

Note: Replace ${HTTP_ENDPOINT} with the HTTP endpoint that you obtained in the Prerequisites section.

cat << EOF > Dockerfile
FROM node:20.16.0
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
ENV OTEL_TRACES_EXPORTER="otlp"
ENV OTEL_LOGS_EXPORTER=none
ENV OTEL_METRICS_EXPORTER=none
ENV OTEL_EXPORTER_OTLP_TRACES_ENDPOINT="${HTTP_ENDPOINT}"
ENV OTEL_NODE_RESOURCE_DETECTORS="env,host,os"
ENV OTEL_SERVICE_NAME="ot-nodejs-demo"
ENV NODE_OPTIONS="--require @opentelemetry/auto-instrumentations-node/register"
EXPOSE 7001
CMD ["node", "main.js"]
EOF

Enable tracing for Kong Gateway and add the backend service to Docker Compose.

Return from the backend directory to the upper-level directory.

cd ..

Modify the configuration file of Docker Compose.

vim docker-compose.yml

Add environment variables to the docker-compose.yml file to enable tracing for Kong Gateway.

...
services:
  kong:
    ...
    environment:
      ...
      # Add two environment variables.
      KONG_TRACING_INSTRUMENTATIONS: all
      KONG_TRACING_SAMPLING_RATE: 1.0
      ...
...

Define a Node.js backend service named backend-api in the docker-compose.yml file.

...
services:
  ...
  backend-api:
    build:
      context: ./backend
      dockerfile: Dockerfile
    environment:
      NODE_ENV: production
    ports:
      - "7001:7001"
    networks:
      - kong-net
...

Configure the backend service and routing rules in Kong Gateway, and enable the OpenTelemetry plug-in.

Go to the config directory.

cd config

Add the following content to the kong.yaml file:

Note: Replace ${HTTP_ENDPOINT} with the HTTP endpoint that you obtained in the Prerequisites section.

cat << EOF >> kong.yaml
services:
  - name: backend-api
    url: http://backend-api:7001
    routes:
      - name: main-route
        paths:
          - /hello
plugins:
  - name: opentelemetry
    config:
      traces_endpoint: ${HTTP_ENDPOINT} # Replace ${HTTP_ENDPOINT} with the obtained HTTP endpoint.
      headers:
        X-Auth-Token: secret-token
      resource_attributes:
        service.name: kong-dev
EOF

Start Kong Gateway and the backend service.

Return to the upper-level directory.

cd ..

Run the following command in the directory in which the docker-compose.yml file resides to start Kong Gateway and the backend service:

docker compose up -d

Access the backend service by using Kong Gateway.

curl http://localhost:8000/hello
# Expected output: {"code":200,"msg":"success"}

Log on to the Managed Service for OpenTelemetry console to view the traces of Kong Gateway and the backend service.

Note: In this example, the name of Kong Gateway displayed on the Applications page is kong-dev, and the name of the backend service is ot-nodejs-demo.
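If you prefer a scripted check over a manual curl call, the request through Kong Gateway can be repeated from Node.js. The following sketch compares the response body with the output expected in this example. The function names isExpectedHelloBody and checkHelloRoute are hypothetical, and the sketch assumes Node.js 18 or later, which provides a global fetch function.

```javascript
"use strict";

// Hypothetical helper: returns true if the response body matches the
// output expected in this example, {"code":200,"msg":"success"}.
function isExpectedHelloBody(bodyText) {
  try {
    const body = JSON.parse(bodyText);
    return body.code === 200 && body.msg === "success";
  } catch {
    // The body is not the expected JSON object.
    return false;
  }
}

// Hypothetical helper: sends one request through the Kong Gateway proxy
// port used in this example and checks the response body.
async function checkHelloRoute(baseUrl = "http://localhost:8000") {
  const response = await fetch(`${baseUrl}/hello`);
  return isExpectedHelloBody(await response.text());
}

// Run against the started example:
// checkHelloRoute().then((ok) => console.log(ok ? "route OK" : "unexpected response"));
```

Each successful call also generates one more trace, which is convenient when you want fresh data in the console.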

FAQs

What do I do if no Kong Gateway traces are displayed in the Managed Service for OpenTelemetry console after I enable the Kong OpenTelemetry plug-in?

Set the environment variable KONG_LOG_LEVEL to debug or add log_level=debug to the kong.conf file to set the Kong Gateway log level to debug. Send a request to Kong Gateway, and check whether the generated logs contain the Kong trace.

What do I do if the Kong Gateway traces cannot be associated with other applications?

Check whether the trace pass-through protocol used by other applications is the same as that of Kong Gateway. By default, Kong Gateway uses W3C as the trace pass-through protocol.
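One way to run this check is to inspect the request headers that an application sends or receives. The following Node.js sketch reports which trace context formats a set of headers carries and parses a W3C traceparent value. It is an illustration based on the commonly used header names of each format (traceparent, b3 or x-b3-traceid, uber-trace-id, and ot-tracer-traceid), not the implementation used by the Kong OpenTelemetry plug-in, and the function names are hypothetical.

```javascript
"use strict";

// Header names that indicate each trace context format.
const FORMAT_HEADERS = {
  w3c: ["traceparent"],
  b3: ["b3", "x-b3-traceid"],
  jaeger: ["uber-trace-id"],
  ot: ["ot-tracer-traceid"],
};

// Hypothetical helper: lists the formats whose headers are present.
function detectTraceFormats(headers) {
  const present = new Set(Object.keys(headers).map((h) => h.toLowerCase()));
  return Object.entries(FORMAT_HEADERS)
    .filter(([, names]) => names.some((name) => present.has(name)))
    .map(([format]) => format);
}

// Hypothetical helper: parses a W3C traceparent value of the form
// version-traceid-spanid-flags, or returns null if it is malformed.
function parseTraceparent(value) {
  const match = /^([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$/.exec(
    value.trim()
  );
  // All-zero trace IDs and span IDs are invalid.
  if (!match || /^0+$/.test(match[2]) || /^0+$/.test(match[3])) {
    return null;
  }
  return {
    version: match[1],
    traceId: match[2],
    spanId: match[3],
    // The lowest bit of the flags field is the sampled flag.
    sampled: (parseInt(match[4], 16) & 1) === 1,
  };
}

const incoming = {
  "X-B3-TraceId": "463ac35c9f6413ad48485a3953bb6124",
  "X-B3-SpanId": "a2fb4a1d1a96d312",
};
console.log(detectTraceFormats(incoming));
```

If an application sends only b3 headers, as in the sample input, a gateway that extracts only W3C headers starts a new trace instead of continuing the existing one, which is why the traces appear unrelated.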

Does the Kong OpenTelemetry plug-in affect the performance of Kong Gateway?

After you enable the Kong OpenTelemetry plug-in, the performance of Kong Gateway may be affected. To reduce the impact, we recommend that you adjust the trace sampling rate and the following parameters of the OpenTelemetry plug-in: batch size, number of retries, and retry interval. For more information about how to adjust the trace sampling rate, see the Step 1: Enable tracing for Kong Gateway section of this topic. For more information about the configurations of the plug-in, see Kong OpenTelemetry Configuration.