This topic provides answers to frequently asked questions about the graceful start and shutdown solution provided by Microservices Governance of Microservices Engine (MSE).

Where did the Advanced Features go, what is the impact on integrated applications, and how can I still enable or disable them?

If you enable the new version of Microservices Governance for your applications in the MSE console, you do not need to take note of this issue.

Advanced settings for the graceful start and shutdown solution are provided in the old version of Microservices Governance in the MSE console. However, the advanced settings involve numerous microservices concepts and are hidden in the new version of Microservices Governance for ease of use. In the old version of Microservices Governance, the advanced settings involve the following features:

Completion of service registration before readiness probe

This feature is still available and enabled by default in the new version. If this feature was not previously enabled for your application, it is automatically enabled after you re-enable the graceful start feature on the Graceful Start and Shutdown page in the console. If this feature was already enabled for your application, we recommend that you do not disable it. When this feature is enabled by default, it has no negative impact on your application and can prevent the risk of traffic dropping to zero in specific scenarios during a release. For more information, see Why configure 55199/readiness.

Completion of service prefetching before readiness probe

This feature is no longer provided in the new version. It was originally designed to ensure that the prefetching effect can meet expectations and prevent a sudden increase in the queries per second (QPS) curve for prefetched services. The implementation principle of this feature is to delay the time to pass a check performed by a Kubernetes readiness probe. This prolongs the overall version release time. This also ensures that the system provides sufficient time for new nodes to prefetch and allows old nodes to process specific amounts of traffic during the service prefetching. This ensures that the QPS value of prefetched services in the curve gradually increases over time. In the version release process, if all the old nodes are disabled during the prefetching of the new nodes, the new nodes need to process all online traffic. As a result, low-traffic service prefetching cannot be performed. Applications that have this feature enabled are not affected. However, you can no longer enable this feature for new applications when you configure rules. If you have previously enabled this feature, we do not recommend that you disable it. This feature has no negative impact on your application when it is enabled. For information about how to achieve the desired effect for low-traffic prefetching in the new version, see Best practices for low-traffic prefetching.

How does the low-traffic service prefetching feature work?

When a consumer calls a service, the consumer selects a provider of the service. If low-traffic service prefetching is enabled for providers, Microservices Governance optimizes the provider selection process. When a consumer selects a provider, the weight of each provider is calculated as a percentage value (0% to 100%). The consumer preferentially selects and calls a provider that has a high weight. If low-traffic service prefetching is enabled for a provider, when the provider starts, the weight of the provider calculated by the consumer is low, and the consumer calls the prefetched nodes with a low probability. The calculated weight gradually increases over time and finally reaches 100%. When the calculated weight reaches 100%, the prefetching is complete, and the prefetched nodes can receive traffic as normal nodes. The provider includes its startup time in the metadata of the service registration information. The consumer calculates the weight of the provider based on the startup time. This process requires that Microservices Governance is enabled for both consumers and providers.
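The weighting described above can be sketched as follows. This is a minimal illustration, not the MSE implementation: the linear ramp, the function names, and the weighted random selection are all assumptions, and the 120-second duration is the default prefetching duration mentioned in this topic.

```python
import random

WARMUP_SECONDS = 120  # default prefetching duration stated in this topic


def warmup_weight(uptime_seconds, warmup_seconds=WARMUP_SECONDS):
    """Return a provider weight in [0.0, 1.0].

    Assumption: the weight grows linearly with uptime. The real ramp
    used by Microservices Governance is not specified here.
    """
    if warmup_seconds <= 0 or uptime_seconds >= warmup_seconds:
        return 1.0
    return max(uptime_seconds, 0.0) / warmup_seconds


def pick_provider(providers, now, rng):
    """Pick one provider by weighted random choice.

    `providers` maps a node name to the startup timestamp (in seconds)
    that the node published in its registration metadata.
    """
    names = list(providers)
    weights = [warmup_weight(now - providers[name]) for name in names]
    if sum(weights) == 0:
        # Every node has just started: fall back to a uniform choice.
        return rng.choice(names)
    return rng.choices(names, weights=weights, k=1)[0]


# One fully warmed node and one node that started 10 seconds ago.
rng = random.Random(0)
providers = {"old-node": 0.0, "new-node": 990.0}
calls = [pick_provider(providers, now=1000.0, rng=rng) for _ in range(10_000)]
share = calls.count("new-node") / len(calls)
print(f"share of calls sent to new-node: {share:.3f}")
```

Under these assumptions, the new node has a weight of 10/120 against a weight of 1.0 for the old node, so it should receive roughly 7.7% of the calls, and that share grows as its uptime approaches the prefetching duration.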

Low-traffic service prefetching starts after the application receives the first request and ends after the configured prefetching duration elapses. The default prefetching duration is 120s. If the application does not receive external requests, the prefetching is not triggered.

To trigger low-traffic prefetching, you must make sure that service registration is complete. If you find that your application has started low-traffic prefetching but has not yet completed service registration (that is, you observe in the console that the prefetching start event appears before the service registration event), you can refer to Why are prefetching events of my application reported before service registration events? to resolve this issue.

Why is the prefetching data trend in the curve of the line chart not as expected? How do I resolve this issue?

Before you read this question, we recommend that you learn about the principles of low-traffic service prefetching.

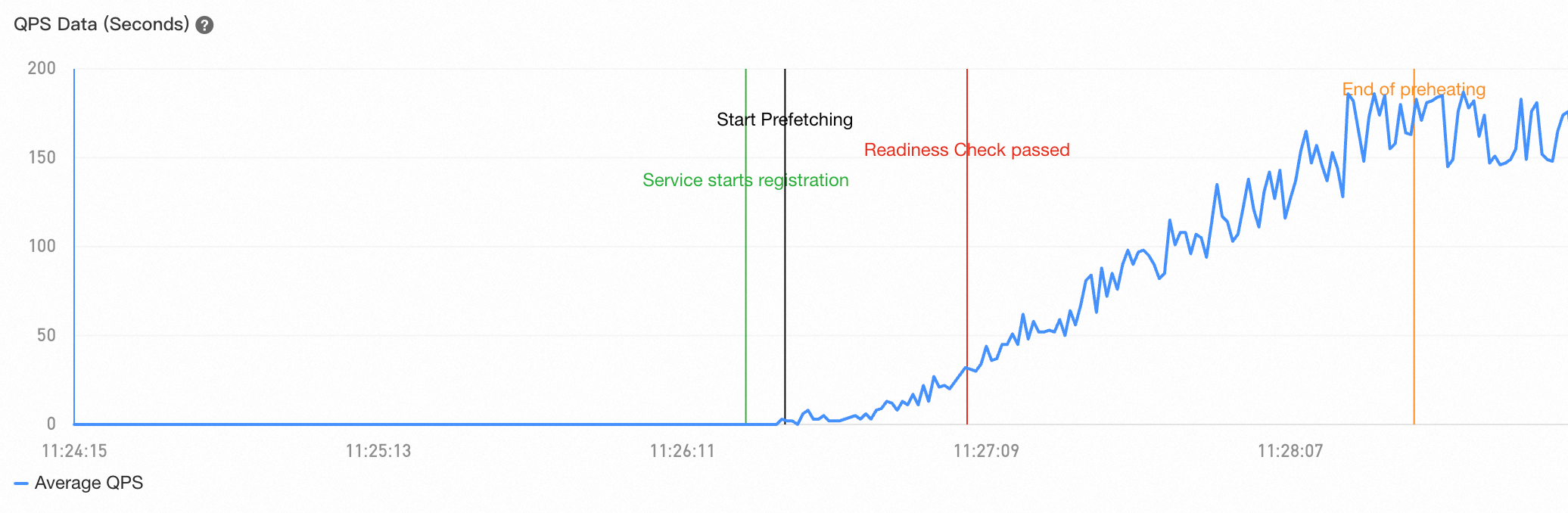

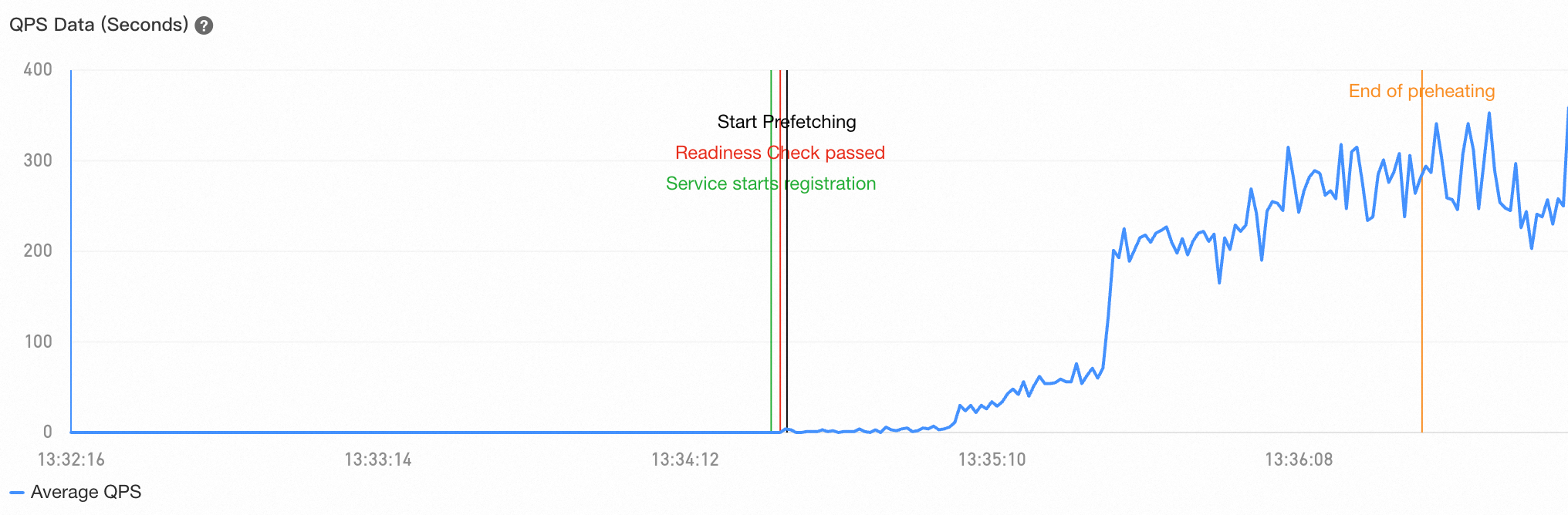

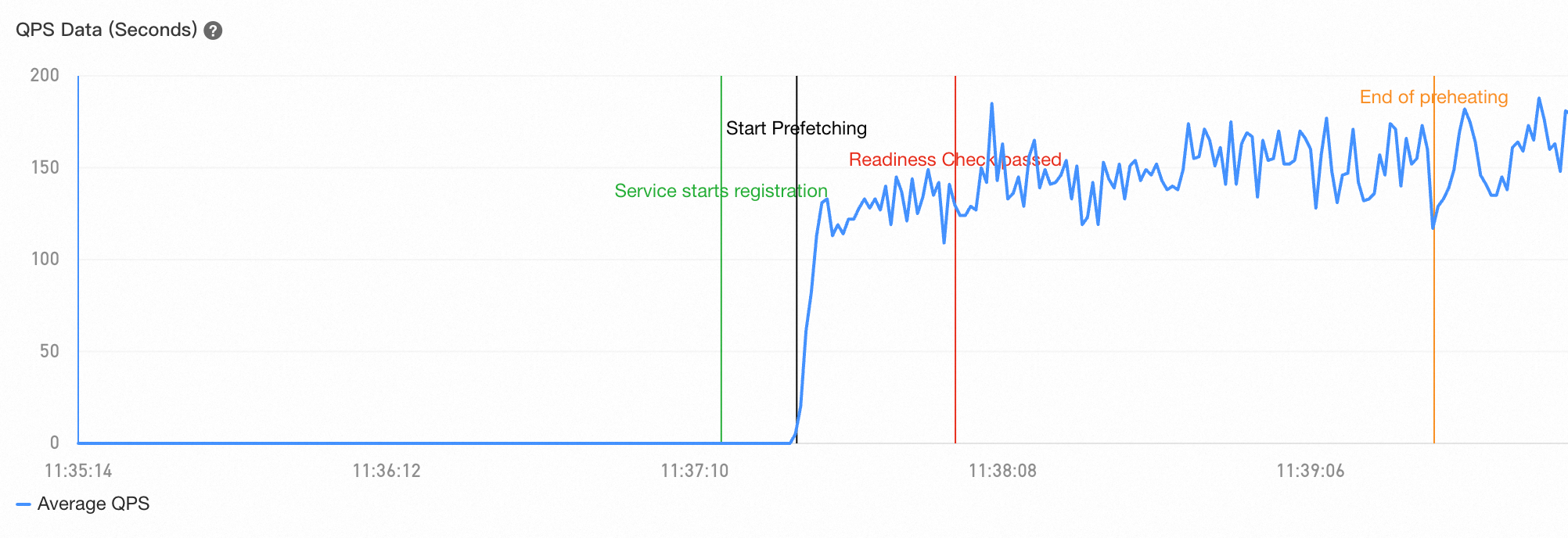

The following line chart shows an example of the QPS curve for low-traffic service prefetching in normal cases.

In some cases, however, the QPS value in the curve does not gradually increase over time for applications for which low-traffic service prefetching is performed. The following content describes the two common situations that you may encounter.

The QPS value surges at a point in time

In most cases, this situation occurs during service releases. If old nodes are taken offline before new nodes are completely prefetched, the new nodes are frequently called when the consumer selects a provider. As a result, the QPS line chart shows that the QPS of a new node slowly increases and then surges at a point in time after all the old nodes are taken offline. You can refer to Best practices for low-traffic prefetching to resolve this issue.
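The surge can be reproduced with a small calculation. The sketch below assumes that a consumer distributes calls in proportion to the relative weights of the nodes that are still available. This normalization is an illustrative assumption, and the node names and weight values are made up.

```python
def traffic_share(weights):
    """Normalize provider weights into the fraction of calls each node receives."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one provider must have a positive weight")
    return {name: weight / total for name, weight in weights.items()}


# Two fully warmed old nodes and one new node at 10% weight.
during_rollout = traffic_share({"old-1": 1.0, "old-2": 1.0, "new-1": 0.1})
# The old nodes are taken offline while the new node is still at 10% weight.
after_old_nodes_leave = traffic_share({"new-1": 0.1})

print(round(during_rollout["new-1"], 3))  # 0.048
print(after_old_nodes_leave["new-1"])     # 1.0
```

A low weight only limits traffic relative to other nodes. When no fully warmed node remains, the new node receives all calls regardless of its weight, which is what the sudden jump in the curve reflects.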

The QPS value does not gradually increase

If this situation occurs, check whether Microservices Governance is enabled for the consumer. If it is not, enable Microservices Governance for the consumer to resolve this issue. If the traffic of the application that you need to prefetch comes from an external service for which Microservices Governance is not enabled, such as a Java gateway, low-traffic service prefetching is not supported in this scenario.

What is the best practice for low-traffic service prefetching?

Incomplete prefetching often occurs during rolling deployment. You can refer to the following practice to ensure that service prefetching meets expectations.

Configure the minimum ready time (recommended): You can configure .spec.minReadySeconds for your workload to control the time interval between a pod becoming ready and it becoming available. Set the value of this parameter to be greater than the pod's low-traffic prefetching duration to ensure that Kubernetes waits for the pod to complete prefetching before continuing the rolling deployment. If you use ACK, you can find your application on the Container Platform and configure this setting directly under More > Upgrade Policy > Rolling Upgrade > Minimum Ready Time (minReadySeconds). (This setting ensures that the deployment continues only after a newly started pod is ready and has maintained this status for a specified period.)

Use the batch deployment method (recommended): You can use methods or tools such as OpenKruise to deploy workloads in batches. The deployment interval between the batches must be longer than the low-traffic service prefetching duration. After the new nodes in a batch are fully prefetched, you can continue to release the next batch of nodes.
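As a rough illustration of the minimum ready time setting, the following sketch builds a Deployment patch in which minReadySeconds exceeds the prefetching duration. The helper name and the 30-second safety margin are assumptions chosen for the example. Only the .spec.minReadySeconds field itself comes from Kubernetes.

```python
import json


def rolling_update_patch(warmup_seconds, margin_seconds=30):
    """Build a Deployment patch whose minReadySeconds exceeds the prefetching duration.

    `margin_seconds` is an arbitrary safety margin for this example,
    not a value recommended by MSE.
    """
    if warmup_seconds < 0 or margin_seconds <= 0:
        raise ValueError("warmup_seconds must be >= 0 and margin_seconds must be > 0")
    return {"spec": {"minReadySeconds": warmup_seconds + margin_seconds}}


patch = rolling_update_patch(warmup_seconds=120)
print(json.dumps(patch))  # {"spec": {"minReadySeconds": 150}}
```

The printed JSON could then be applied with a merge patch, for example by passing it to kubectl patch for the target Deployment. Adjust the duration if you changed the prefetching duration from its default.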

You can also increase the value of the initialDelaySeconds parameter to resolve the issue. However, we recommend that you do not use this method. The initialDelaySeconds parameter specifies the time to wait before the first readiness probe of the workload. You must make sure that the parameter value is greater than the sum of the low-traffic service prefetching duration, delayed registration duration, and application startup time. Note that you can obtain the application startup time from actual log outputs. The application startup time varies along with business development. If you delay the time to pass the readiness probe, the endpoints of the newly started nodes may fail to be added to the endpoints of the Kubernetes services. Therefore, if you want to ensure the optimal prefetching effect, we recommend that you do not use this method.

If you follow the best practice and the prefetching QPS curve still does not meet your expectations, check whether all traffic received by the application comes from consumers for which Microservices Governance is enabled. If Microservices Governance is not enabled for some consumers, or if part of the traffic arrives through external load balancers, the QPS curve during application prefetching does not meet expectations either.

What is 55199/readiness? If I do not configure 55199/readiness, traffic may drop to zero. Why?

55199/readiness is a built-in HTTP readiness check endpoint (port 55199, path /readiness) provided by MSE Microservices Governance. When an application's Kubernetes readiness probe is configured to use 55199/readiness, the endpoint returns a 500 status code if a new node has not yet completed service registration during startup, and a 200 status code if the node has completed service registration.

Based on the default Kubernetes deployment policy, old nodes are not taken offline until new nodes are ready. When the readiness probe is configured with 55199/readiness, a new node becomes ready only after it completes service registration. This means old nodes are taken offline only after new nodes have completed service registration. This ensures that the service registered with a registry always has available nodes. If you do not configure 55199/readiness, old nodes may be taken offline during a service release before new nodes have registered. As a result, the service is left with no available nodes in the registry. This causes all consumers of the service to receive errors when making calls, and the service's traffic to drop to zero. Therefore, we strongly recommend that you enable graceful start and configure the 55199/readiness readiness probe for your application.

If the probe version of your application is earlier than 4.1.10, you must configure the readiness check path as /health instead of /readiness. To view the probe version, navigate to MSE Console > Administration Center > Application Governance, click the corresponding application, and then select Node Details. The probe version is displayed on the right.
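The path selection above can be sketched as a small helper that produces the readinessProbe fragment of a container spec. The function names are hypothetical, and the sketch assumes that the probe version is a purely numeric dotted string such as 4.1.10. Other probe fields, such as periods and thresholds, are left out because this topic does not specify them.

```python
import json


def parse_version(version):
    """Turn a dotted version such as "4.1.10" into a comparable tuple of ints."""
    return tuple(int(part) for part in version.split("."))


def mse_readiness_probe(probe_version):
    """Return a readinessProbe fragment that targets port 55199.

    Versions earlier than 4.1.10 use /health; later versions use /readiness.
    """
    path = "/readiness" if parse_version(probe_version) >= (4, 1, 10) else "/health"
    return {"readinessProbe": {"httpGet": {"path": path, "port": 55199}}}


print(json.dumps(mse_readiness_probe("4.1.9")))
print(json.dumps(mse_readiness_probe("4.1.10")))
```

Comparing versions as integer tuples avoids the string comparison pitfall in which "4.1.9" would sort after "4.1.10".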

Why are prefetching events of my application reported before service registration events? What do I do if this issue occurs?

In the current version, when a service receives the first external request, the service starts the prefetching process and reports a prefetching start event. In some cases, however, the first request that is received by an application may not be a microservices call request. Such a request does not follow the business logic to trigger the prefetching. For example, you configure a Kubernetes liveness probe for the workload of an application. In this case, when a new node is started, even if the service registration operation is not performed on the node, the system considers that the prefetching has started when Kubernetes performs a check using the liveness probe.

To prevent this issue, you can configure the following parameter in the environment variables of the provider. This way, the system no longer triggers the prefetching logic for these requests.

# Ignore requests for paths /xxx or /yyy/zz to prevent them from triggering the prefetch process.
profile_micro_service_record_warmup_ignored_path="/xxx,/yyy/zz"

This parameter can also be configured as a Java virtual machine (JVM) startup parameter.

The value of this parameter does not support regular expression matching.
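To illustrate what the lack of regular expression support means in practice, the sketch below treats every entry in the parameter value as a literal path. It is a simplified model with hypothetical function names. Whether the agent compares whole paths or prefixes is not documented in this topic, so whole-path comparison is an assumption.

```python
def parse_ignored_paths(raw):
    """Split the comma-separated parameter value into a set of literal paths."""
    return {path.strip() for path in raw.split(",") if path.strip()}


def triggers_warmup(request_path, ignored):
    """Return True if a request to this path may start prefetching.

    Assumption: entries are compared to the whole request path as
    literal strings, so pattern characters have no special meaning.
    """
    return request_path not in ignored


literal = parse_ignored_paths("/xxx,/yyy/zz")
print(triggers_warmup("/xxx", literal))     # False: listed explicitly
print(triggers_warmup("/orders", literal))  # True: not listed

pattern = parse_ignored_paths("/yyy/.*")
print(triggers_warmup("/yyy/zz", pattern))  # True: "/yyy/.*" is not expanded
```

In this model, each probe or health check path that should not start prefetching has to be listed individually.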

What is proactive notification? When do I need to enable proactive notification?

Proactive notification is an advanced capability provided by the graceful shutdown module. This capability allows a Spring Cloud provider that is going offline to proactively send a network request that notifies the consumer of its offline state. After the consumer receives the notification, the consumer no longer calls the provider node. In normal cases, if both providers and consumers use the Spring Cloud framework, the consumers cache the provider node list locally. In specific scenarios, even if a consumer receives a notification from the registry, the local cache may not be refreshed promptly. As a result, the consumer still initiates calls to offline nodes. The proactive notification feature is introduced to resolve this issue.

By default, the proactive notification feature is disabled. Based on the default graceful shutdown solution, after Microservices Governance is enabled, if a provider that is going offline receives a request, the provider adds a special header to the response, and returns the response to the consumer. After the consumer receives the response, the consumer identifies the header and no longer calls the provider node. Therefore, if the consumer sends requests to a provider that is going offline, the consumer can detect that the provider is about to go offline and automatically remove the provider node from the provider node list. However, if the consumer does not send requests to the provider within the grace period (about 30 seconds) before the provider becomes offline, the consumer may not detect that the provider node is in the offline state and may send a request when the provider is about to go offline. As a result, a request error is reported. In this case, you can enable the proactive notification feature. We recommend that you enable the proactive notification feature for providers if a consumer sends few requests at long intervals to the providers.
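The difference between the default behavior and proactive notification can be modeled with a toy consumer that keeps a local provider list. Everything in this sketch is illustrative: the header name is hypothetical, and the class does not represent the actual Spring Cloud or MSE code.

```python
OFFLINE_HEADER = "x-provider-going-offline"  # hypothetical name, for illustration only


class Consumer:
    def __init__(self, providers):
        self.providers = set(providers)  # locally cached provider node list

    def handle_response(self, provider, headers):
        """Default path: drop the provider when its response carries the offline header."""
        if headers.get(OFFLINE_HEADER) == "true":
            self.providers.discard(provider)

    def handle_notification(self, provider):
        """Proactive notification: the provider tells the consumer directly."""
        self.providers.discard(provider)


# A consumer that called p1 during the grace period sees the header.
busy = Consumer({"p1", "p2"})
busy.handle_response("p1", {OFFLINE_HEADER: "true"})
print(sorted(busy.providers))  # ['p2']

# A consumer that sent no request in that window still lists p1.
idle = Consumer({"p1", "p2"})
print(sorted(idle.providers))  # ['p1', 'p2']

# With proactive notification, the idle consumer is informed anyway.
idle.handle_notification("p1")
print(sorted(idle.providers))  # ['p2']
```

The idle consumer is the case that the recommendation targets: without a request during the grace period, only a notification initiated by the provider removes the node from its list in time.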

After a graceful shutdown event is reported, traffic does not drop to zero immediately. Why?

In most cases, traffic drops to zero immediately after a graceful shutdown event is reported. If it does not, the following content describes the possible causes and their solutions.

The application receives requests from non-microservices objects such as external load balancers. Another possible cause is that the application receives requests that are initiated using call methods such as local scripts and scheduled tasks.

The graceful start and shutdown solution supports only requests from microservice applications for which Microservices Governance is enabled. The solution is not supported in the preceding scenarios. We recommend that you configure custom solutions based on the graceful shutdown features that are provided by the infrastructures and frameworks.

The proactive notification feature is not enabled for the application. (For more information, see What is proactive notification?)

We recommend that you check whether the curve in the line chart meets your expectations after you enable the proactive notification feature.

The version of the framework that is used by the application is not supported by the graceful start and shutdown solution. For more information about frameworks that are supported by the graceful start and shutdown solution of Microservices Governance, see Java frameworks supported by Microservices Governance.

If the framework version of your application is not supported, you can upgrade the framework version of your application.

The version release time becomes longer after the graceful start and shutdown solution is enabled for an application. What do I do?

Perform the following steps to check whether service prefetching before the readiness probe is enabled:

Log on to the MSE Administration Center console, and in the top menu bar, select a region.

In the navigation pane on the left, select Administration Center > Application Administration.

On the Application List page, click the target application, and select the Traffic Governance > Graceful Online/Offline tab.

In the Graceful Online/Offline tab, press F12 to open the developer tools. In the Network tab, search for the GetLosslessRuleByApp request. (If you cannot find the request, refresh the page.) In the Response, check whether the value of the Related field in Data is true. If the value is true, it indicates that the Complete service prefetch before passing the readiness probe feature was enabled for the application a long time ago. (This feature is no longer available.) When this feature is enabled, it may increase the release time. We recommend that you submit a ticket to disable this feature.
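If you copy the response body out of the developer tools, the check in the last step can be expressed as a short script. The sample payload below is a trimmed, hypothetical example that contains only the Data and Related fields named above. A real response is likely to contain more fields.

```python
import json


def prefetch_before_readiness_enabled(response_text):
    """Return True if Data.Related is true in a GetLosslessRuleByApp response body."""
    body = json.loads(response_text)
    data = body.get("Data") or {}
    return data.get("Related") is True


sample = '{"Data": {"Related": true}}'  # hypothetical, trimmed payload
print(prefetch_before_readiness_enabled(sample))  # True
```

A missing Data object or a missing Related field is treated as false here, which is a choice made for this sketch rather than documented behavior.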

What is 55199/readiness provided by MSE Microservices Governance? Why do MSE readiness probe failures persist in some scenarios?

Kubernetes provides the following types of probes:

Startup probe: Detects whether an application successfully starts during the pod startup process. If the probe fails multiple times during the pod startup process and the configured failure threshold is reached, the pod will be restarted.

Liveness probe: Detects whether an application is alive. The system starts a liveness probe after the startup probe succeeds. Liveness probes are performed throughout the lifecycle of a pod. If the probe fails multiple times and the configured failure threshold is reached, the pod will be restarted.

Readiness probe: Detects whether an application is ready. The system starts a readiness probe after the startup probe succeeds. Readiness probes are performed throughout the lifecycle of a pod. If the probe fails multiple times and the configured failure threshold is reached, Kubernetes marks the pod as unready, but does not restart the pod. When an application is released, you can use a readiness probe to control the release process. Based on the default release policy, if a new pod is not ready, Kubernetes will stop the release process until the new pod becomes ready.

After your service is integrated with MSE Microservices Governance, you can use the built-in 55199/readiness endpoint to configure a service readiness check. After the configuration is complete, the Kubernetes readiness probe passes only after the application completes service registration. This ensures that during the deployment process, newly started pods have completed registration with the service registry before the old pods are taken offline by Kubernetes and unregistered from the service registry. This process allows service callers to always have available nodes to make calls, which prevents errors caused by unavailable services. For more information about why you need to configure the MSE readiness probe, see Service Registration Status Check.

If MSE readiness probe failures persist, this issue may be caused by the following reasons:

Graceful start is not enabled for the application, and 55199/readiness does not take effect. As a result, the readiness probe fails.

The current application is not connected to service governance. You can check whether probe logs exist in the service governance probe directory. In a K8s environment, the probe directory is /home/admin/.opt/AliyunJavaAgent or /home/admin/.opt/ArmsAgent by default. If the directory does not contain a logs directory, it indicates that the application failed to connect to service governance. In this case, submit a ticket to contact us.

The application is repeatedly restarted because the startup or liveness probe failures reach the specified threshold. In this case, the MSE readiness probe always fails. You can query the Kubernetes events of the pod and check whether events related to startup or liveness probe failures exist.
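The directory check can be scripted and run inside the application container. The sketch below uses the two default probe directories named above. The function name is hypothetical, and the script only tests for the presence of a logs subdirectory, not for the content of the logs.

```python
from pathlib import Path

# Default probe directories in a K8s environment, as listed in this topic.
DEFAULT_AGENT_DIRS = (
    "/home/admin/.opt/AliyunJavaAgent",
    "/home/admin/.opt/ArmsAgent",
)


def agent_dirs_with_logs(candidates=DEFAULT_AGENT_DIRS):
    """Return the probe directories that contain a logs subdirectory."""
    return [d for d in candidates if (Path(d) / "logs").is_dir()]


found = agent_dirs_with_logs()
if found:
    print("probe logs found in:", ", ".join(found))
else:
    print("no logs directory found; the application may not be connected")
```

If the script reports no logs directory in either location, the application has probably not connected to service governance. If you installed the probe in a custom location, pass that path in `candidates` instead.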