Metadata migration is the process of moving the metadata of a self-managed Apache Kafka cluster to an ApsaraMQ for Kafka instance. You export the metadata from the self-managed cluster and import it to the ApsaraMQ for Kafka instance. The system then creates topics and groups on the instance based on the imported metadata.

Prerequisites

An ApsaraMQ for Kafka instance is purchased and deployed. For more information, see Step 2: Purchase and deploy an instance.

Before you migrate the metadata of a self-managed Apache Kafka cluster to an ApsaraMQ for Kafka instance, you can use the specification evaluation feature of ApsaraMQ for Kafka to evaluate the specifications of the cluster, such as the traffic usage, disk capacity, disk type, and partition number of the cluster. This helps you select an ApsaraMQ for Kafka instance that meets your business requirements. For more information about the specification evaluation feature, see Evaluate instance specifications.

Java Development Kit (JDK) 8 or later is downloaded. For more information, see Java Downloads.

Taobao System Activity Reporter (Tsar) is installed. For more information, see tsar.

To accurately obtain the configurations of the machine that is used for the self-managed Apache Kafka cluster, we recommend that you install Tsar before you migrate the metadata of the cluster. You can also configure the --installTsar parameter to automatically install Tsar when you run the migration tool. However, the automatic installation of Tsar requires a long period of time to complete and may fail if the environment is incompatible.

Serverless ApsaraMQ for Kafka instances do not support the metadata export tool used in this topic.
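The JDK and Tsar prerequisites above can be checked from the command line before you run the export tool. The following is a minimal sketch, not part of the migration tool: the function names java_major_version and check_prerequisites are hypothetical, and the script assumes that `java -version` prints the version as a quoted string on its first line, which is the usual behavior.

```shell
#!/bin/sh
# Sketch: check the prerequisites for the metadata export tool.
# java_major_version and check_prerequisites are hypothetical helper names.

# Convert a Java version string to its major version number.
# Legacy "1.x" strings (for example 1.8.0_292) use the second field.
java_major_version() {
    case "$1" in
        1.*) echo "$1" | cut -d. -f2 ;;
        *)   echo "$1" | cut -d. -f1 | sed 's/[^0-9].*$//' ;;
    esac
}

check_prerequisites() {
    if ! command -v java >/dev/null 2>&1; then
        echo "java not found in PATH" >&2
        return 1
    fi
    # java -version writes to stderr; take the quoted string on the first line.
    version=$(java -version 2>&1 | head -n 1 | sed 's/.*"\(.*\)".*/\1/')
    major=$(java_major_version "$version")
    if [ "${major:-0}" -lt 8 ]; then
        echo "JDK 8 or later is required, found: $version" >&2
        return 1
    fi
    # Tsar is recommended, not mandatory, so only print a warning.
    if ! command -v tsar >/dev/null 2>&1; then
        echo "tsar not found; install it or use --installTsar" >&2
    fi
    return 0
}
```

Calling check_prerequisites on the machine that hosts the self-managed cluster returns a non-zero status if Java is missing or older than version 8, and only prints a warning if Tsar is not installed.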

Background information

The metadata of a self-managed Apache Kafka cluster consists of the configurations of the topics and groups in the cluster and is stored in ZooKeeper. Each node of the cluster obtains the most recent metadata from ZooKeeper, so the metadata that is exported from any node is consistent and up to date. You can export the metadata to a JSON file and then import the JSON file to another self-managed Apache Kafka cluster to back up the metadata.

Step 1: Export the metadata

Perform the following steps to export the metadata of a self-managed Apache Kafka cluster by using the metadata export tool:

Click kafka-migration-assessment.jar to download the file that contains the metadata export tool.

Upload the file that contains the metadata export tool to your self-managed Apache Kafka cluster.

Run the following command in the directory in which the file is stored to allow the JAR file to be executed:

chmod 777 kafka-migration-assessment.jar

Run the following command to export the metadata of the self-managed Apache Kafka cluster:

java -jar kafka-migration-assessment.jar MigrationFromZk \
    --sourceZkConnect <host:port> \
    --sourceBootstrapServers <host:port> \
    --targetDirectory ../xxx/ \
    --fileName metadata.json \
    --commit

| Parameter | Description | Example |
| --- | --- | --- |
| sourceZkConnect | The IP address and port number of ZooKeeper on which the self-managed Apache Kafka cluster is deployed. If you do not configure this parameter, the metadata export tool automatically obtains the IP address and the port number. | 192.168.XX.XX:2181 |
| sourceBootstrapServers | The IP address and port number of the self-managed Apache Kafka cluster. If you do not configure this parameter, the metadata export tool automatically obtains the IP address and the port number. | 192.168.XX.XX:9092 |
| targetDirectory | The directory in which you want to store the exported metadata file. If you do not configure this parameter, the current directory is automatically used. | ../home/ |
| fileName | The name of the metadata file. If you do not configure this parameter, the default file name kafka-metadata-export.json is used. | metadata.json |
| commit | Commit and run the code. | commit |
| installTsar | Specifies whether to install Tsar. By default, Tsar is not automatically installed. Tsar can be used to obtain accurate information about the specifications of the machine that is used to run the self-managed Apache Kafka cluster and the recent memory usage, traffic usage, and configurations of the cluster. The automatic installation of Tsar requires a long period of time to complete and may fail if the environment is incompatible. | None |
| evaluate | Specifies whether to obtain information about the specifications of the machine that is used to run the self-managed Apache Kafka cluster and the recent memory usage, traffic usage, and configurations of the cluster for specification evaluation. Valid values: true and false. Default value: true, which specifies that the ApsaraMQ for Kafka console evaluates and recommends the specifications of the ApsaraMQ for Kafka instance to which the metadata is migrated. If you do not want to use the specification evaluation feature, set this parameter to false. | None |
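Because most parameters fall back to a default when they are omitted, the export command can be assembled from only the values you want to override. The following sketch illustrates this. The helper build_export_cmd is hypothetical and only prints the command instead of running it, and the sketch uses only the flags shown in the command above.

```shell
#!/bin/sh
# Sketch: assemble the export command from optional settings.
# build_export_cmd is a hypothetical helper; it prints the command only.
# Arguments: <zookeeper host:port> <broker host:port> <directory> <file name>
# Pass an empty string to leave a parameter at the tool's default.

build_export_cmd() {
    zk="$1"; brokers="$2"; dir="$3"; file="$4"
    cmd="java -jar kafka-migration-assessment.jar MigrationFromZk"
    if [ -n "$zk" ]; then cmd="$cmd --sourceZkConnect $zk"; fi
    if [ -n "$brokers" ]; then cmd="$cmd --sourceBootstrapServers $brokers"; fi
    if [ -n "$dir" ]; then cmd="$cmd --targetDirectory $dir"; fi
    if [ -n "$file" ]; then cmd="$cmd --fileName $file"; fi
    echo "$cmd --commit"
}
```

For example, `build_export_cmd "" "" "" metadata.json` prints a command that sets only the file name and leaves the ZooKeeper address, broker address, and target directory to the tool's defaults.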

After you export the metadata, a JSON file is generated in the directory that you specified to store the exported metadata file.

You can view the exported metadata file in the specified directory and download the file to your on-premises machine.
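Before you download the file, it can help to confirm that the export produced a usable file. The following sketch checks that the file exists and is not empty, and runs a best-effort JSON syntax check if jq happens to be installed. The helper check_export_file is hypothetical, and the sketch makes no assumption about the structure of the exported JSON.

```shell
#!/bin/sh
# Sketch: sanity-check the exported metadata file before uploading it.
# check_export_file is a hypothetical helper name.

check_export_file() {
    file="$1"
    # -s is true only if the file exists and is not empty.
    if [ ! -s "$file" ]; then
        echo "missing or empty: $file" >&2
        return 1
    fi
    # Optional syntax check; skipped when jq is not installed.
    if command -v jq >/dev/null 2>&1; then
        jq empty "$file" || return 1
    fi
    return 0
}
```

For example, `check_export_file ../home/metadata.json` returns a non-zero status if the export did not write the file.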

Step 2: Create a metadata import task

Create a task

Log on to the ApsaraMQ for Kafka console.

In the Resource Distribution section of the Overview page, select the region where the ApsaraMQ for Kafka instance that you want to manage resides.

In the left-side navigation pane, click Migration.

On the Migration page, click the Metadata Import tab and click Create Task.

In the Create Metadata Import Task panel, configure the parameters and click Create.

In the Create Task step, configure the Task Name and Destination Instance parameters and upload the metadata file that you obtained in Step 1: Export the metadata.

In the Edit Topic step, perform the following operations on the topics and groups that you want to migrate:

Add a topic: Click Add Topic. In the panel that appears, configure the Name, Description, Partitions, and Message Type parameters.

Modify a topic: Find the topic that you want to modify and click Modify in the Actions column. In the panel that appears, configure the Description, Partitions, Message Type, and Log Cleanup Policy parameters.

Delete a topic: Find the topic that you want to delete and click Delete in the Actions column. In the Note message, click OK.

In the Edit Group step, perform the following operations:

Add a group: Click Add Group. In the panel that appears, configure the Group ID and Description parameters.

Modify a group: Find the group that you want to modify and click Modify in the Actions column. In the panel that appears, configure the Description parameter.

Delete a group: Find the group that you want to delete and click Delete in the Actions column. In the Note message, click OK.

View the migration progress

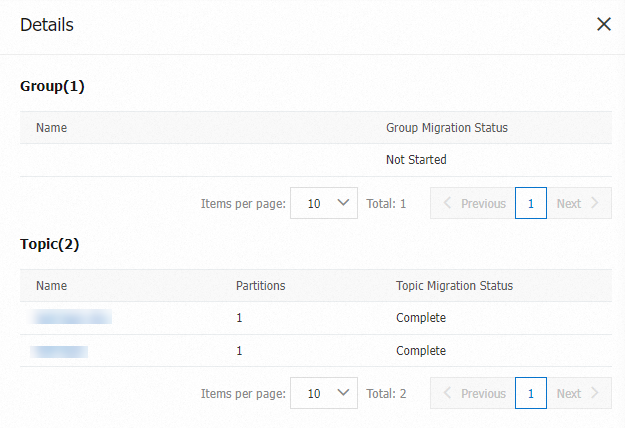

After you create a metadata import task, you can view the migration progress of topics and groups on the Metadata Import tab of the Migration page. You can also click Details in the Actions column of the task to view the task details.

The migration of metadata requires approximately 30 minutes to complete. After the metadata is migrated, you can use the topics and groups that are migrated to the ApsaraMQ for Kafka instance.

Step 3: Verify the migration result

On the Instances page, click the name of the instance that you want to manage.

View topics and groups on the destination instance.

In the left-side navigation pane, click Topics. On the Topics page, view the created topics on the instance.

In the left-side navigation pane, click Groups. On the Groups page, view the created groups on the instance.
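If the cluster has many topics, comparing names by eye in the console is error-prone. As a complement to the console check, the following sketch reports the topic names that appear in a source list but not in a destination list. It assumes that you have already saved both lists as text files with one topic name per line. The helper missing_topics and the file names are hypothetical.

```shell
#!/bin/sh
# Sketch: print topic names that are in the source list but not in the
# destination list. Each input file holds one topic name per line.
# missing_topics is a hypothetical helper name.

missing_topics() {
    src_sorted=$(mktemp)
    dst_sorted=$(mktemp)
    sort -u "$1" > "$src_sorted"
    sort -u "$2" > "$dst_sorted"
    # comm -23 prints only the lines that are unique to the first file.
    comm -23 "$src_sorted" "$dst_sorted"
    rm -f "$src_sorted" "$dst_sorted"
}
```

One way to produce the source list, assuming the Kafka command-line tools are available, is `kafka-topics.sh --bootstrap-server <host:port> --list`. Internal topics such as __consumer_offsets may be reported as missing because they are not user-created topics.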

Step 4: Delete the migration task

On the Metadata Import tab of the Migration page, find the migration task that you want to delete and click Delete in the Actions column.

What to do next

After you migrate metadata from a self-managed Apache Kafka cluster to an ApsaraMQ for Kafka instance, check whether you need to update the endpoint used by the client to access the instance. For more information, see View endpoints.
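If your clients read their connection settings from a properties file, updating the endpoint usually means changing the bootstrap.servers value. The following sketch shows one way to do that. The helper set_bootstrap_servers and the file layout are assumptions, and the endpoint value must come from the View endpoints page of your instance.

```shell
#!/bin/sh
# Sketch: replace the bootstrap.servers value in a client properties file.
# set_bootstrap_servers is a hypothetical helper name.
# Arguments: <properties file> <new endpoint, for example host:port>

set_bootstrap_servers() {
    file="$1"; endpoint="$2"
    tmp=$(mktemp)
    # Rewrite only the bootstrap.servers line; copy all other lines unchanged.
    # Writing back with cat keeps the permissions of the original file.
    sed "s|^bootstrap\.servers=.*|bootstrap.servers=$endpoint|" "$file" > "$tmp" &&
        cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

The sketch changes only an existing bootstrap.servers line. If the file has no such line, the file is left as it was.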