MaxCompute allows you to use the extract, transform, load (ETL) tool Kettle to schedule MaxCompute jobs. You can use Kettle to define a data transmission topology by dragging controls. This topic describes how to use a Java Database Connectivity (JDBC) driver to connect Kettle to a MaxCompute project and use Kettle to schedule MaxCompute jobs.

Background information

Kettle is an open source ETL tool that is written in Java. It can run on the Windows, UNIX, and Linux operating systems and provides a GUI for you. Kettle supports a wide range of input and output data sources, such as databases and open source big data systems. The databases include the Oracle, MySQL, and DB2 databases. The open source big data systems include the Hadoop Distributed File System (HDFS), HBase, Cassandra, and MongoDB systems.

You can create a job in Kettle to connect to a MaxCompute project and schedule the job based on the ETL workflow.

Prerequisites

A MaxCompute project is created.

For more information, see Create a MaxCompute project.

The AccessKey pair of your Alibaba Cloud account that is used to access the MaxCompute project is obtained.

You can click the profile picture in the upper-right corner of the MaxCompute console and select AccessKey Management to obtain the AccessKey pair.

The package of MaxCompute JDBC driver V3.2.8 or later that includes the

jar-with-dependenciespackage is downloaded.MaxCompute JDBC driver V3.2.9 is used in this topic.

The Kettle installation package is downloaded and decompressed to a local directory.

Kettle 8.2.0.0-342 is used in this topic.

Procedure

Step 1: Save the package of the MaxCompute JDBC driver

Save the package of the MaxCompute JDBC driver to the Kettle installation directory. Then, you can use this driver to connect Kettle to the MaxCompute project.

Step 2: Connect Kettle to the MaxCompute project

Configure connection parameters to connect Kettle to the MaxCompute project.

Step 3: Create a job scheduling workflow

Create a job scheduling workflow and configure jobs in Spoon.

Step 4: Run the job scheduling workflow

Run jobs based on the created workflow.

Step 5: View the job scheduling result

Use the SQL editor to query the job scheduling result.

Step 1: Save the package of the MaxCompute JDBC driver

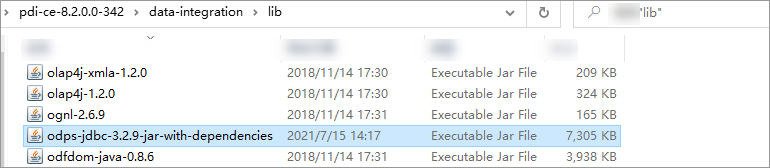

Save the package of the MaxCompute JDBC driver, such as odps-jdbc-3.2.9-jar-with-dependencies.jar, to the Kettle installation directory data-integration/lib.

Step 2: Connect Kettle to the MaxCompute project

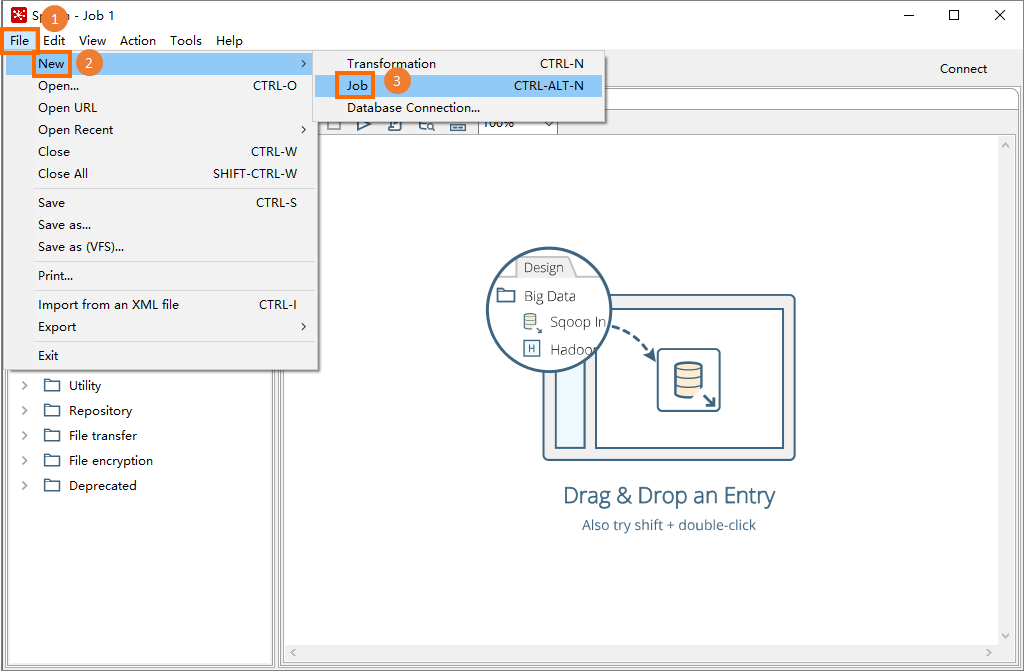

In the

data-integrationdirectory, double-clickSpoon.bat(Windows) or double-clickSpoon(macOS) to start Spoon.In the main menu bar of Spoon, choose to create a Kettle job. This job can be used to create a job scheduling workflow.

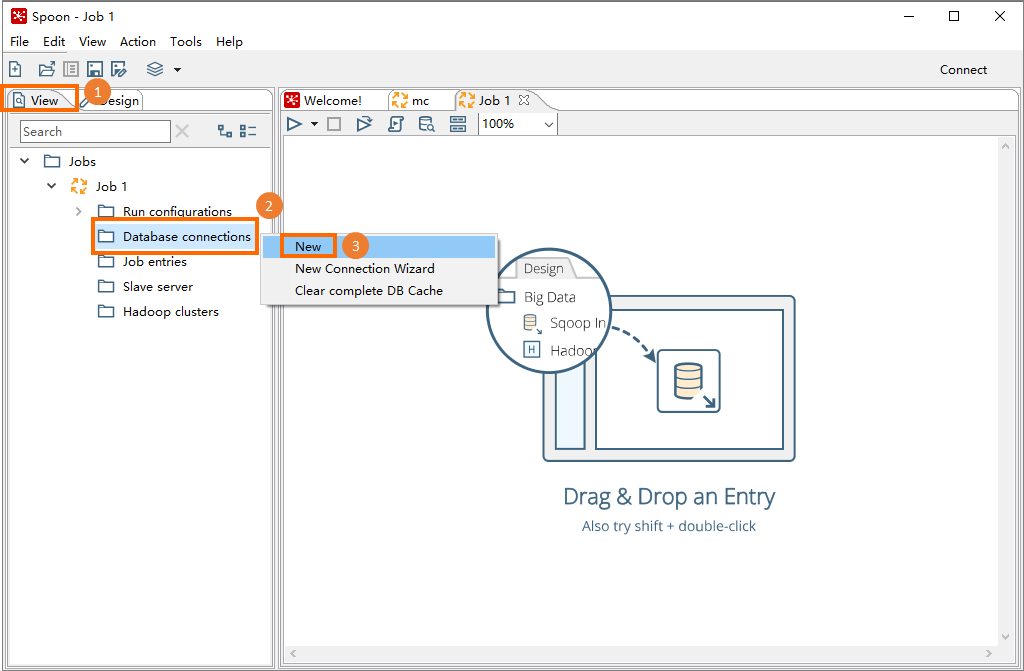

In the left-side pane, click the View tab. In the navigation tree that appears, right-click Database connections and select New.

In the dialog box that appears, click the General tab and configure connection parameters. The following table describes the parameters.

Parameter

Description

Connection name

The name of the data connection that you want to establish, such as MaxCompute. This parameter is used to distinguish connections to different databases.

Connection type

The type of the data connection that you want to establish. Select Generic database from the drop-down list.

Method of access

The connection method. Select Native (JDBC) from the drop-down list.

Dialect

The SQL dialect. Select Hadoop Hive 2 from the drop-down list.

Custom connection URL

The URL that is used to connect to the MaxCompute project. The format is

jdbc:odps:<MaxCompute_endpoint>?project=<MaxCompute_project_name>. Delete the angle brackets (<>) when you configure this parameter. Parameters:<MaxCompute_endpoint>: required. The endpoint of MaxCompute. Configure this parameter based on the region where the MaxCompute project resides.

For more information about the endpoints of MaxCompute in different regions, see Endpoints.

<MaxCompute_project_name>: required. The name of the MaxCompute project.

This parameter specifies the name of your MaxCompute project instead of the DataWorks workspace to which the MaxCompute project corresponds. You can log on to the MaxCompute console, select the region where your MaxCompute project resides in the top navigation bar, and then view the name of the MaxCompute project on the Project management tab.

Custom driver class name

The driver that is used to connect to the MaxCompute project. Set this parameter to com.aliyun.odps.jdbc.OdpsDriver.

Username

The AccessKey ID that is used to access the MaxCompute project.

You can click the profile picture in the upper-right corner of the MaxCompute console and select AccessKey Management to obtain the AccessKey ID.

Password

The AccessKey secret that corresponds to the AccessKey ID.

Click Test. If the connection tests successfully, click OK and Confirm.

Step 3: Create a job scheduling workflow

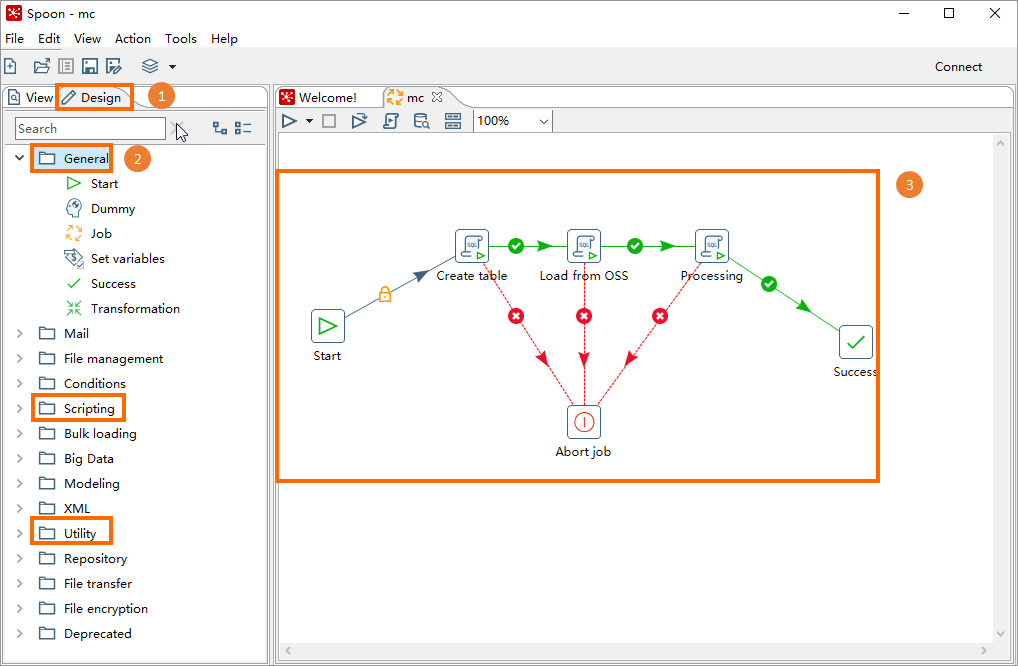

In Spoon, you can create and associate core objects (jobs) on the Design tab to create a job scheduling workflow.

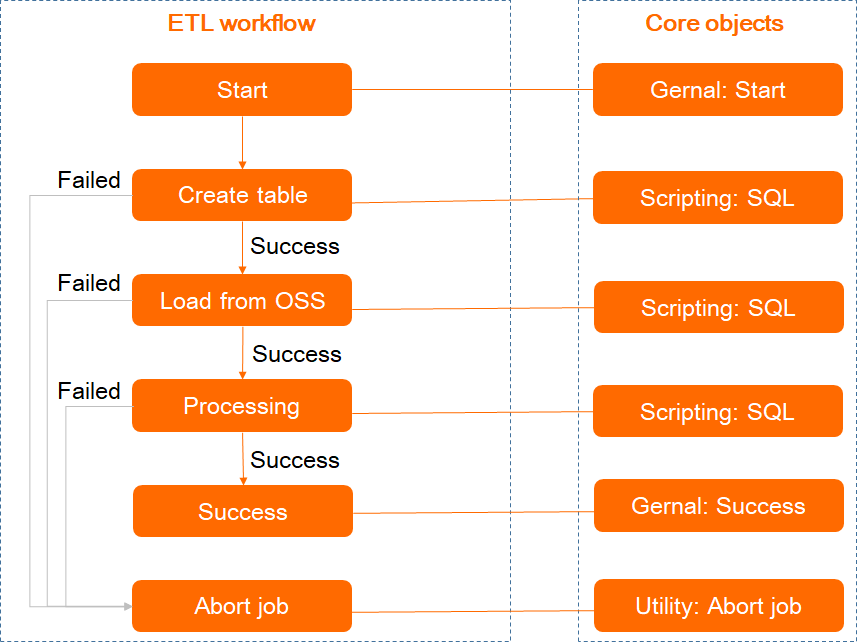

This step describes how to create an ETL workflow that uses the LOAD command to load data from Object Storage Service (OSS) and writes data to a MaxCompute internal table. For more information about the sample data, see Use a built-in extractor or a storage handler to import data. The following figure shows the core objects that are involved in the ETL workflow. These core objects are categorized based on their types.

In Spoon, click the Design tab.

In the left-side navigation pane, drag the core objects that are shown in the preceding figure to the right-side job pane and use lines to connect these core objects based on the structure shown in the following figure.

To connect two core objects, select a core object and press Shift to connect this object to the destination object.

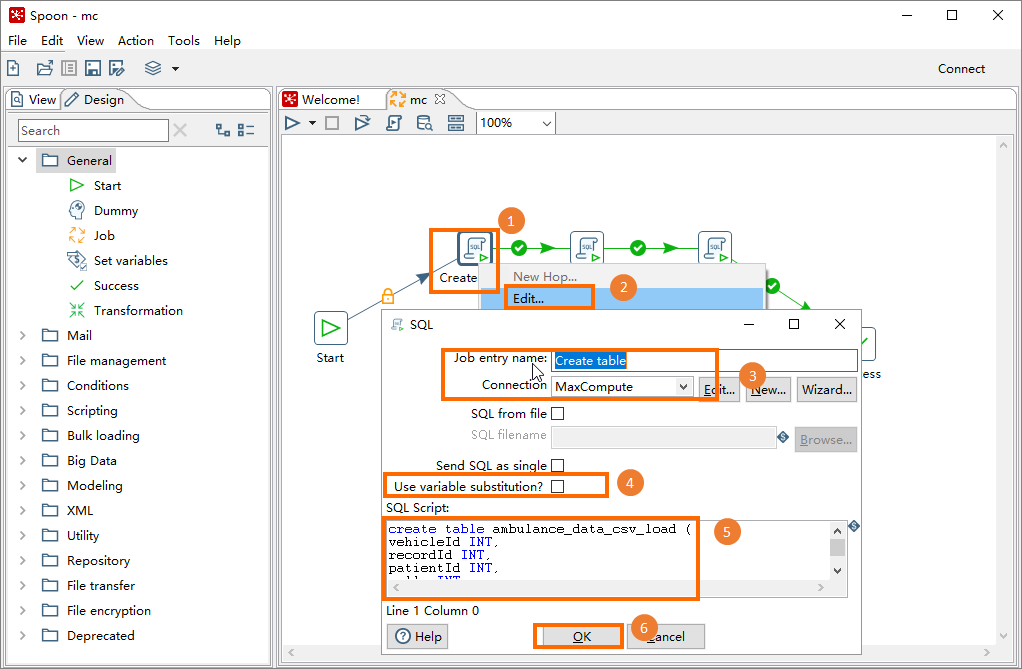

Right-click a core object (script) in the right-side pane and select Edit. In the SQL dialog box, configure parameters that are described in the following table and click OK. Configure all core objects (scripts) in sequence. The following table describes the parameters.

Parameter

Description

Job entry name

The name of the job that you want to schedule, such as Create table, Load from OSS, or Processing.

Connection

The name of the data connection, such as MaxCompute. In this topic, the data connection is the one that you create in Step 2. This connection is used to connect to the MaxCompute project.

Send SQL as single

Do not select this option.

SQL Script

The SQL script that corresponds to the job that you want to schedule. The following scripts are used in this topic:

Create table

CREATE TABLE ambulance_data_csv_load ( vehicleId INT, recordId INT, patientId INT, calls INT, locationLatitute DOUBLE, locationLongtitue DOUBLE, recordTime STRING, direction STRING);Load from OSS

LOAD OVERWRITE TABLE ambulance_data_csv_load FROM LOCATION 'oss://oss-cn-hangzhou-internal.aliyuncs.com/mc-test/data_location/' STORED BY 'com.aliyun.odps.CsvStorageHandler' WITH serdeproperties ( 'odps.properties.rolearn'='acs:ram::xxxxx:role/aliyunodpsdefaultrole', --The Alibaba Cloud Resource Name (ARN) of the AliyunODPSDefaultRole role. You can obtain the ARN from the Roles page of the Resource Access Management (RAM) console. 'odps.text.option.delimiter'=',' );Processing

INSERT OVERWRITE TABLE ambulance_data_csv SELECT * FROM ambulance_data_csv_load;

Step 4: Run the job scheduling workflow

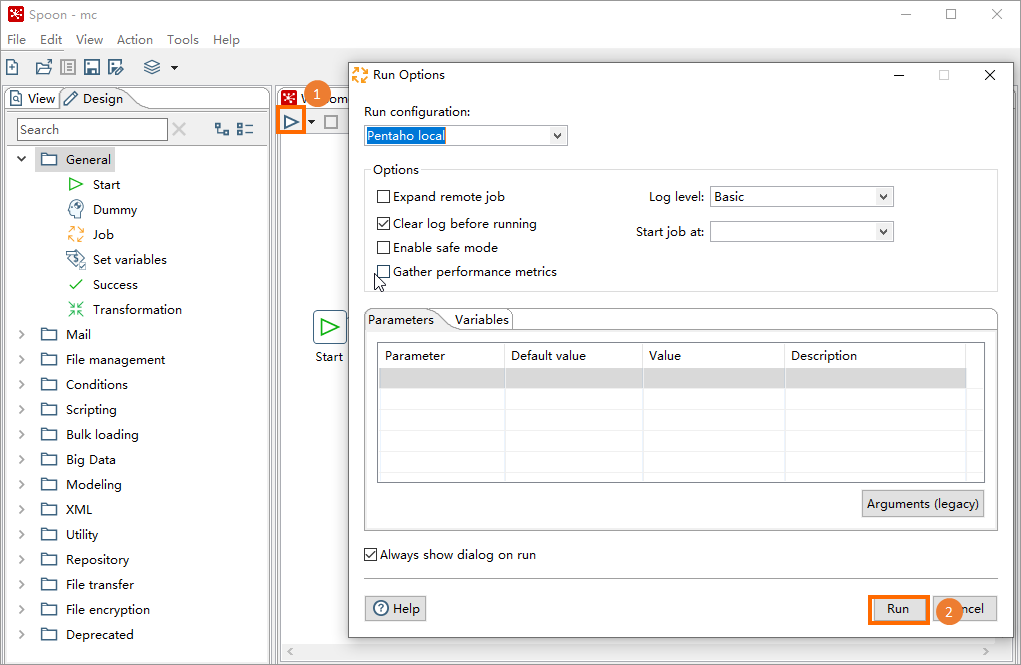

Click the

icon in the upper-left corner of the job tab. In the Run Options dialog box, click Run.

icon in the upper-left corner of the job tab. In the Run Options dialog box, click Run.

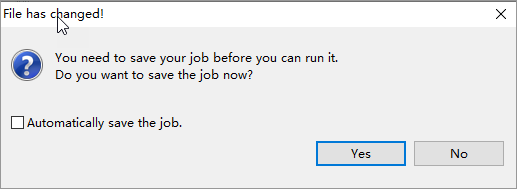

Optional:If the message shown in the following figure appears, click Yes and name the workflow as prompted, such as mc.

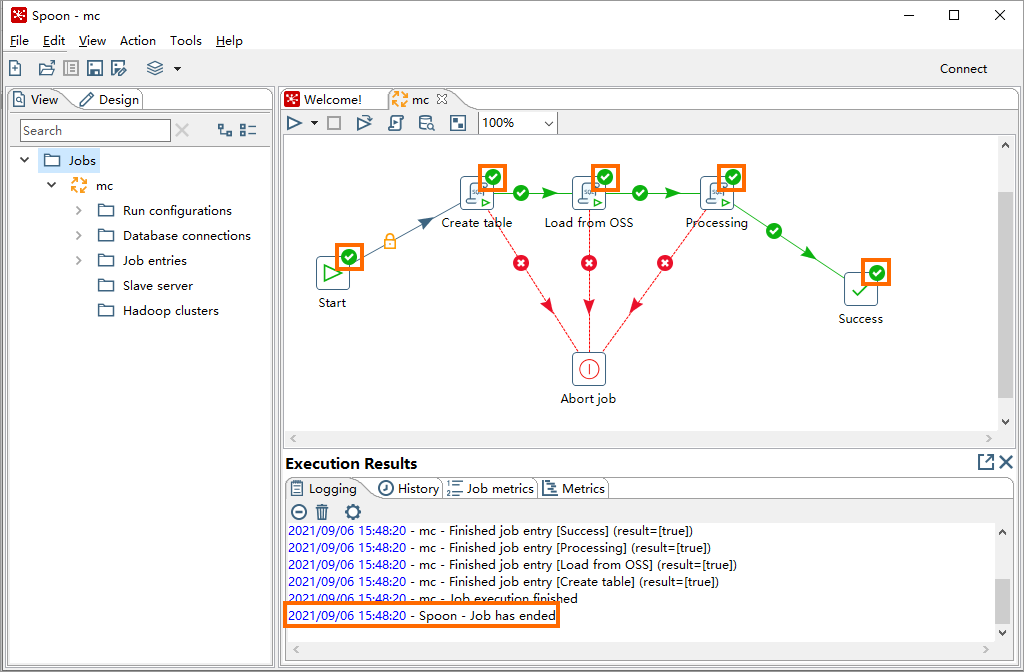

Query the status of the workflow based on the directed acyclic graph (DAG) or information in the Execution Results section. If the execution result shown in the following figure is displayed, the job scheduling workflow is completed.

Step 5: View the job scheduling result

After the job scheduling workflow is completed, use SQL scripts to check whether the data is written to a MaxCompute table.

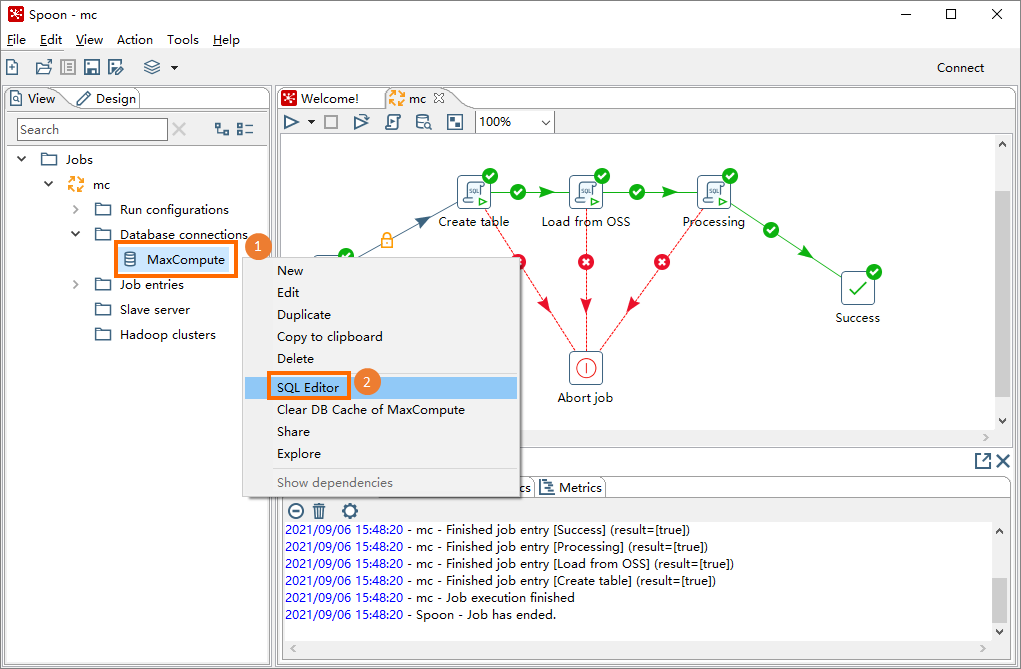

In Spoon, click the View tab, and click Database connections under the created Kettle job, such as mc.

Right-click the established data connection, such as MaxCompute, and select SQL Editor.

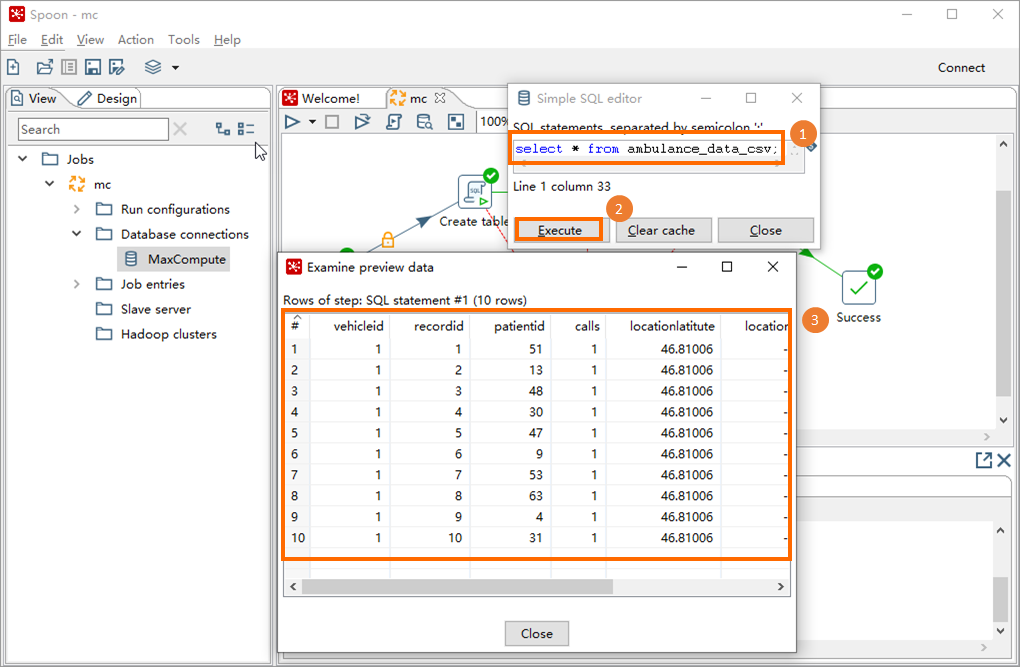

In the Simple SQL editor dialog box, enter the SQL script and click Execute. Then, you can view the query result in the Examine preview data dialog box.

Sample SQL script:

Sample SQL script:SELECT * FROM ambulance_data_csv;