When you use PyODPS DataFrame to develop data applications, executing code in different environments may lead to unexpected issues. This topic describes how to identify the runtime environment and how to resolve the issues in specific scenarios.

Overview

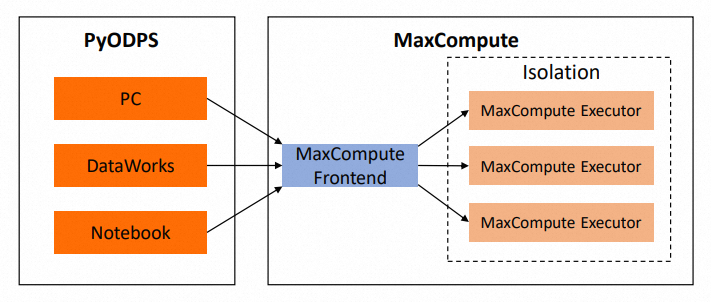

PyODPS is a Python package, not a Python implementation, and it runs in an ordinary Python environment. Therefore, code that uses PyODPS behaves in the same way as any other code that is run by a standard Python interpreter. The following sample code shows how PyODPS works and where each part of the code runs.

Sample code

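```python
from odps import ODPS, options
import numpy as np  # on-premises NumPy, used by the final print()

o = ODPS(...)
df = o.get_table('pyodps_iris').to_df()
coeffs = [0.1, 0.2, 0.4]  # external variable referenced by handle()

def handle(v):
    # The body of handle() runs in a MaxCompute executor, not on the on-premises system.
    import numpy as np  # imported in the body so that it is resolved in the executor
    return float(np.cosh(v)) * sum(coeffs)

options.df.supersede_libraries = True
# Everything outside the body of handle() runs on the on-premises system.
val = df.sepal_length.map(handle).sum().execute(libraries=['numpy.zip', 'other.zip'])
print(np.sinh(val))
```

Code execution systems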

The following figure shows the systems that are involved when the preceding sample code is executed. The systems that run the code outside MaxCompute are collectively referred to as the on-premises system in this topic.

Code analysis

References to third-party packages

All of the sample code except the body of the `handle` function is executed on the on-premises system. Note that the `handle` function is only passed to the `map` method; it is not called on the on-premises system. Therefore, the sample code behaves like ordinary Python code: when a third-party package is imported, the package installed on the on-premises system is used. In the sample code, the `other.zip` package that is referenced in `libraries=['numpy.zip', 'other.zip']` is not installed on the on-premises system. Therefore, if the code outside the `handle` function contains a statement such as `import other`, an error is reported. The error occurs even if `other.zip` has been uploaded to MaxCompute as a resource, because the package still does not exist on the on-premises system. In principle, if the code that runs on the on-premises system does not involve PyODPS, such an error is not caused by PyODPS, and you must check your own code to troubleshoot it.

handle function

When the MaxCompute backend is used, the `handle` function that is passed to the `map` method is processed in the following phases:

1. The cloudpickle module extracts the closure and bytecode of the function.

2. PyODPS DataFrame uses the closure and bytecode to generate a Python user-defined function (UDF), and then submits the UDF to MaxCompute.

3. When the job runs in MaxCompute as SQL statements, the generated Python UDF is called: the bytecode and closure in the UDF are unpickled, and the function is executed in a MaxCompute executor.
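To make phases 1 and 3 concrete, the following sketch imitates them with the standard library only. It is a simplified stand-in, not the cloudpickle or PyODPS implementation: `marshal` serializes the bytecode (and can only be loaded by the same Python version), and `pickle` copies the values of the module-level variables that the function refers to. It ignores default arguments, closure cells, and nested functions, and it does not show phase 2 (UDF generation and submission). The helper names `serialize_function` and `load_function` are hypothetical.

```python
import builtins
import marshal
import pickle
import types

coeffs = [0.1, 0.2, 0.4]  # external variable referenced by handle


def handle(v):
    import math  # imported in the body, so it is resolved where the function runs
    return math.cosh(v) * sum(coeffs)


def serialize_function(func):
    """Phase 1 (simplified): capture the bytecode and referenced global values."""
    code = func.__code__
    captured = {
        name: func.__globals__[name]
        for name in code.co_names
        # Modules are skipped: the function body is expected to import what it needs.
        if name in func.__globals__
        and not isinstance(func.__globals__[name], types.ModuleType)
    }
    # pickle.dumps turns the captured values into bytes, so the receiver gets copies.
    return pickle.dumps((marshal.dumps(code), captured))


def load_function(payload):
    """Phase 3 (simplified): unpickle the payload and rebuild a callable function."""
    code_bytes, captured = pickle.loads(payload)
    code = marshal.loads(code_bytes)
    namespace = {"__builtins__": builtins}
    namespace.update(captured)
    return types.FunctionType(code, namespace, code.co_name)


payload = serialize_function(handle)  # bytes that could be sent to another process
remote_handle = load_function(payload)
print(remote_handle(0.0))  # cosh(0) * sum(coeffs)
```

Because `coeffs` travels as a pickled copy, appending to `remote_handle.__globals__['coeffs']` leaves the local `coeffs` unchanged, which mirrors the behavior described in the Summary.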

Summary

The code in the body of the `handle` function is executed in a MaxCompute executor, not on the on-premises system.

Packages installed on the on-premises system cannot be referenced in the body of the `handle` function. Only packages that are available in MaxCompute executors can be referenced.

Any third-party package that you upload must be compatible with the Python 2.7 runtime in MaxCompute executors, which is built with UCS-2.
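For wheel packages, compatibility with a UCS-2 build of Python 2.7 can be read from the file name. The following sketch checks the Python and ABI tags defined by the wheel naming convention (PEP 425 and PEP 427): on CPython 2.7, the ABI tag `cp27m` marks a UCS-2 ("narrow Unicode") build, `cp27mu` marks a UCS-4 build, and `none` means the wheel has no ABI dependency. The function name is hypothetical, and the sketch does not check the platform tag, which must also match the executor.

```python
def is_py27_ucs2_wheel(filename):
    """Return True if a wheel's tags allow it to load on a UCS-2 build of CPython 2.7.

    Wheel file names end with -{python tag}-{abi tag}-{platform tag}.whl, and each
    tag may be a dot-separated set such as "py2.py3".
    """
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file name: %r" % filename)
    parts = filename[: -len(".whl")].split("-")
    if len(parts) < 5:
        raise ValueError("unexpected wheel file name: %r" % filename)
    python_tags = parts[-3].split(".")
    abi_tags = parts[-2].split(".")
    python_ok = any(tag in ("cp27", "py27", "py2") for tag in python_tags)
    # cp27m = narrow (UCS-2) build; cp27mu would require a wide (UCS-4) build.
    abi_ok = any(tag in ("cp27m", "none") for tag in abi_tags)
    return python_ok and abi_ok


print(is_py27_ucs2_wheel("numpy-1.16.6-cp27-cp27m-manylinux1_x86_64.whl"))  # True
print(is_py27_ucs2_wheel("numpy-1.16.6-cp27-cp27mu-manylinux1_x86_64.whl"))  # False
```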

If the body of the `handle` function modifies a referenced external variable, such as `coeffs` in the sample code, the change is not applied on the on-premises system; the variable keeps its original value there.

If the body of the `handle` function references a package that is imported outside the function, an error may be reported. This is because cloudpickle sends a reference to the on-premises package to the MaxCompute executor, and the structure of that package may not be supported in the executor. Therefore, we recommend that you import packages in the body of the `handle` function.
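The following heuristic, built on the standard library only, flags module names that a function reads from its enclosing module instead of importing in its own body. It inspects only the function's own bytecode (`co_names` and `co_varnames`), so nested functions are not checked and some aliasing patterns can be reported incorrectly. The helper and the two sample functions are hypothetical.

```python
import math
import types


def modules_imported_outside(func):
    """List module names that func reads from its globals instead of importing itself."""
    code = func.__code__
    flagged = []
    for name in code.co_names:
        # Names bound inside the function (for example by "import math") are locals.
        if name in code.co_varnames:
            continue
        if isinstance(func.__globals__.get(name), types.ModuleType):
            flagged.append(name)
    return flagged


def handle_outside(v):
    return math.cosh(v)  # relies on the module-level "import math"


def handle_inside(v):
    import math  # imported in the body, as recommended
    return math.cosh(v)


print(modules_imported_outside(handle_outside))  # ['math']
print(modules_imported_outside(handle_inside))  # []
```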

If the body of the `handle` function references code in another file, the package that contains that file must also exist in the MaxCompute executor. If you do not want to upload that code as a third-party package, keep all of the custom code that the function references in the same file.

The preceding rules for the `handle` function also apply to the custom functions and aggregation classes that you reference in apply, map_reduce, and custom aggregations. If you use the pandas backend, all code runs on the on-premises system, so you must install the required packages there. However, code that you debug on the pandas backend is usually run on MaxCompute afterward. Therefore, we recommend that you follow the MaxCompute backend rules during development, even though the packages are installed on the on-premises system.
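Before debugging on the pandas backend, it can help to confirm that every package the code imports can be found on the on-premises system. The following standard-library sketch reports which of the given top-level package names cannot be imported locally. The helper name `missing_local_packages` and the package names in the usage line are hypothetical; a package that is missing locally can still be used inside the `handle` function on the MaxCompute backend if it is uploaded through `libraries`.

```python
import importlib.util


def missing_local_packages(names):
    """Return the top-level package names that cannot be imported on this machine."""
    missing = []
    for name in names:
        # find_spec returns None when no importable module or package has this name.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


# Hypothetical check: "other" stands for the package inside other.zip.
print(missing_local_packages(["json", "other"]))
```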

Use a third-party package

Personal computer or on-premises server: If you want to use code in a third-party package, install the package for the Python version that runs your code.

Notebook: If you want to use code in a third-party package, contact the platform provider for technical support.

DataWorks: You cannot install third-party packages on the on-premises system, but you can reference third-party packages in DataWorks. To use code from other files on the on-premises system, read the content of the files and run it by using `exec`. For more information, see Use a PyODPS node to reference a third-party package.

Important: The directory structure in DataStudio is not an actual directory structure in the file system. If you import or open a file by using the path that is displayed in DataStudio, the execution fails.
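A minimal sketch of the read-then-`exec` approach, assuming that you have already obtained the other file's source code as a string (how you read it in DataWorks is outside this sketch). The helper name `load_code_as_module` and the module name `my_utils` are hypothetical. This only makes the code available on the on-premises system; code used inside the `handle` function must still be available in the MaxCompute executor.

```python
import sys
import types


def load_code_as_module(source, module_name):
    """Execute source code in a fresh module object and register it for import."""
    module = types.ModuleType(module_name)
    # Run the code with the module's namespace as its globals.
    exec(compile(source, module_name, "exec"), module.__dict__)
    # Registering the module lets later "import module_name" statements find it.
    sys.modules[module_name] = module
    return module


# Hypothetical content of another file.
source = "def scale(v, factor=2):\n    return v * factor\n"
my_utils = load_code_as_module(source, "my_utils")
print(my_utils.scale(21))  # 42
```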

After you upload resources in DataWorks, click Submit to ensure that the resources are uploaded to MaxCompute.

If you want to use your own NumPy version, upload the required wheel package and specify `options.df.supersede_libraries = True` in your code. Make sure that the NumPy package is the first item in the list that you pass to the libraries parameter.
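A small guard can keep the NumPy package at the front of the libraries list. The sketch below is hypothetical and assumes that the NumPy package file name starts with "numpy", as `numpy.zip` does in the sample code. It relies on the stability of Python's `sorted`, so the other packages keep their relative order.

```python
def numpy_first(libraries):
    """Return a copy of libraries with the NumPy package(s) moved to the front."""
    if not any(lib.lower().startswith("numpy") for lib in libraries):
        raise ValueError("no NumPy package found in libraries: %r" % (libraries,))
    # sorted() is stable: False (NumPy) sorts before True, and other items keep their order.
    return sorted(libraries, key=lambda lib: not lib.lower().startswith("numpy"))


# Pass the result to execute(libraries=...) together with
# options.df.supersede_libraries = True, as in the sample code.
print(numpy_first(["other.zip", "numpy.zip"]))  # ['numpy.zip', 'other.zip']
```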

Reference data in other MaxCompute tables

Personal computer, on-premises server, Notebook, or DataWorks: Access the MaxCompute table from on-premises code. If you can access the endpoint, use PyODPS or PyODPS DataFrame to access the table. If you cannot access the endpoint, contact the platform provider for technical support.

Map, apply, map_reduce, and custom aggregate functions: MaxCompute executors cannot access the endpoint or the Tunnel endpoint, and no PyODPS package is available in them. Therefore, you cannot use ODPS entry objects or PyODPS DataFrame to access other MaxCompute tables from inside these functions, and you cannot pass these objects into the UDFs from outside. If the table contains a small amount of data, we recommend that you pass it to the function as a DataFrame resource. For more information, see Reference resources. If the table contains a large amount of data, we recommend that you join the tables instead.
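The following plain-Python sketch illustrates the factory shape assumed here for functions that use resources: an outer function receives the resources and returns the function that is applied to each value, so a lookup structure is built once rather than once per row. In PyODPS, such an outer function would be passed together with a resources argument; check Reference resources for the exact signature. The names `make_handler` and `small_table` are hypothetical, and the sketch runs locally without PyODPS.

```python
def make_handler(resources):
    """Receive the resources and return the per-row function.

    resources[0] stands for a small table passed as a resource; each item is a row.
    """
    # Build the lookup once, instead of once for every row that is processed.
    weights = {row[0]: row[1] for row in resources[0]}

    def handler(species):
        # Keys that are missing from the small table get a default weight of 0.0.
        return weights.get(species, 0.0)

    return handler


# Simulated resource content; in MaxCompute the rows would come from the resource.
small_table = [("setosa", 1.0), ("versicolor", 2.0)]
handler = make_handler([small_table])
print([handler(s) for s in ["setosa", "virginica"]])  # [1.0, 0.0]
```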