Error code: ODPS-0130071: Semantic analysis exception

Error Message 1: the number of input partition columns (n) doesn't equal to table's partition columns (m)

Sample

FAILED: ODPS-0130071:[m,n] Semantic analysis exception - the number of input partition columns (n) doesn't equal to table's partition columns (m)

Description

The table into which data is inserted is a partitioned table. The table has m partition fields, but n (n < m) partition key columns are specified in the SQL statement that is executed to insert data. As a result, data fails to be written to a specific partition, and an error is returned.

Solutions

Modify the SQL statement to ensure that the partition key columns specified in the statement match the partition fields in the table.

Examples

-- Create a table.

create table if not exists mf_sale_detail

(

shop_name string,

customer_id string,

total_price double

)

partitioned by

(

sale_date string,

region string

);

-- Incorrect usage: The destination table has two levels of partitions. The partition clause specifies some or none of the partitions.

insert overwrite table mf_sale_detail

values ('s1','c1',100.1),('s2','c2',100.2),('s3','c3',100.3);

FAILED: ODPS-0130071:[1,24] Semantic analysis exception - the number of input partition columns (0) doesn't equal to table's partition columns (2)

-- Correct usage: The partition clause specifies complete partition information.

insert overwrite table mf_sale_detail partition(sale_date = '2013', region = 'china')

values ('s1', 'c1', 100.1), ('s2', 'c2', 100.2), ('s3', 'c3', 100.3);

OK

-- Correct usage: Dynamic partitioning is used.

insert overwrite table mf_sale_detail partition(sale_date = '2013', region)

values ('s1', 'c1', 100.1, 'china'), ('s2', 'c2', 100.2, 'china'), ('s3', 'c3', 100.3, 'china');

OKError Message 2: expect equality expression (i.e., only use '=' and 'AND') for join condition without mapjoin hint

Sample

ODPS-0130071:[m,n] Semantic analysis exception - expect equality expression (i.e., only use '=' and 'AND') for join condition without mapjoin hint

Description

In MaxCompute SQL, the sort-merge join is used as a physical algorithm for join operations. The join condition must include an equivalent expression. In actual query operations, shuffling is performed based on the columns of the left and right tables in the equivalent expression.

Solutions

(1) Make sure that the join condition contains an equivalent expression.

(2) Add a map join hint. (Note: If the ON condition contains only a non-equivalent expression, a large amount of data may be generated after a join operation. As a result, the query operation takes a longer time.)

Examples

-- Incorrect usage: The join condition contains only a non-equivalent expression.

odps>select t1. *

from src t1

join src t2

on t1.value > t2.value;

FAILED: ODPS-0130071:[4,4] Semantic analysis exception - expect equality expression (i.e., only use '=' and 'AND') for join condition without mapjoin hint

-- Correct usage: The join condition contains the equivalent expression t1.key = t2.key.

odps>select t1. *

from src t1

join src t2

on t1.key = t2.key and t1.value > t2.value;

-- Correct usage: A map join hint is added.

odps>select /*+mapjoin(t1)*/ t1. *

from src t1

join src t2

on t1.value > t2.value;Error Message 3: insert into HASH CLUSTERED table/partition xxx is not current supported

Sample

ODPS-0130071:[m,n] Semantic analysis exception - insert into HASH CLUSTERED table/partition xxx is not current supported

Description

The INSERT INTO statement cannot be used to write data to a clustered table.

Solutions

(1) Change the table to a common table.

(2) Change the INSERT INTO statement to the INSERT OVERWRITE statement.

Examples

-- Create a clustered table.

odps>create table sale_detail_hashcluster

(

shop_name STRING,

total_price decimal,

customer_id BIGINT

)

clustered by(customer_id)

sorted by(customer_id)

into 1024 buckets;

-- Incorrect usage: Insert data into the clustered table.

odps>insert into sale_detail_hashcluster

values ('a', 123, 'id123');

FAILED: ODPS-0130071:[1,13] Semantic analysis exception - insert into HASH CLUSTERED table/partition meta.sale_detail_hashcluster is not current supported

-- Correct usage: Insert data into a common table.

odps>create table sale_detail

(

shop_name STRING,

total_price decimal,

customer_id BIGINT

);

odps>insert into sale_detail

values ('a', 123, 'id123');

-- Correct usage: Execute the INSERT OVERWRITE statement for the clustered table.

odps>insert overwrite table sale_detail_hashcluster

values ('a', 123, 'id123');

Error Message 4: should appear in GROUP BY key

Sample

ODPS-0130071:[m,n] Semantic analysis exception - column reference xx.yy should appear in GROUP BY key

Description

The GROUP BY clause aggregates data in the input table based on the specified key. The following conclusions are obtained after the aggregate operation is performed:

For the column that corresponds to the aggregate key, you can directly obtain output values. You can also call common functions (non-aggregate functions) to further process and calculate data in the column.

For the columns that do not correspond to the aggregate key, you cannot directly obtain output values. You must call aggregate functions such as SUM, COUNT, and AVG to calculate the aggregate results.

Solutions

For the columns that do not correspond to the aggregate key, call aggregate functions such as SUM, COUNT, AVG, and ANY_VALUE to calculate the aggregate result.

Query examples

-- Incorrect usage: Column c does not correspond to the key of GROUP BY. No aggregate function is used.

odps> select a, sum(b), c

from values (1L, 2L, 3L) as t(a, b, c)

group by a;

FAILED: ODPS-0130071:[1,19] Semantic analysis exception - column reference t.c should appear in GROUP BY key

-- Correct usage: Use the aggregate function ANY_VALUE to calculate the aggregate value of Column c.

odps> select a, sum(b), any_value(c)

from values (1L, 2L, 3L) as t(a, b, c)

group by a;Error Message 5: Invalid partition value

Sample

ODPS-0130071:[m,n] Semantic analysis exception - Invalid partition value: 'xxx'

Description

The value of a partition field is invalid. The values of partition fields in a MaxCompute table must comply with the following rules:

The value of a partition field cannot contain double-byte characters such as Chinese characters. The value must be 1 to 128 bytes in length, and can contain letters, digits, and supported special characters. The value must start with a letter.

The following special characters are supported: spaces, colons (:), underscores (_), dollar signs ($), number signs (#), periods (.), exclamation points (!), and at signs (@). The behavior of other characters, such as escaped characters

\t,\n, and/, is not defined.

Solutions

Change the value of the partition field to a valid value.

Query examples

-- Create a table.

odps> create table mc_test

(

a bigint

)

partitioned by

(

ds string

);

-- Incorrect usage: The value '${today}' is invalid for the partition field.

odps> alter table mc_test add partition(ds = '${today}');

FAILED: ODPS-0130071:[1,40] Semantic analysis exception - Invalid partition value: '${today}'

-- Correct usage: Change the value of the partition field to the valid value '20221206'.

odps> alter table mc_test add partition(ds='20221206');Error Message 6: only oss external table support msck repair syntax

Sample

ODPS-0130071:[m,n] Semantic analysis exception - only oss external table support msck repair syntax

Description

Only Object Storage Service (OSS) external tables support the MSCK REPAIR TABLE statement. For more information, see Create an OSS external table.

Method 1 (recommended): MaxCompute automatically parses the OSS directory structure, identifies partitions, and then adds partition data to the OSS external table.

This way, MaxCompute automatically supplements the partitions of the OSS external table based on the partition directory that you specify when you create the OSS external table, instead of adding the partitions one by one based on the names of partition key columns and partition names. This method is suitable for supplementing all missing historical partitions at a time.

msck repair TABLE <mc_oss_extable_name> ADD partitions [ WITH properties (key:VALUE, key: VALUE ...)];NoteThis method is not suitable for scenarios in which partitions are continuously added, especially in scenarios in which the OSS directory contains a large number of partitions. For example, if the OSS directory contains more than 1,000 partitions, we recommend that you do not use this method. If the number of new partitions is far less than the number of existing partitions, frequent use of the

msckcommand results in a large number of repeated scans and metadata update requests for the OSS directory. This significantly reduces the command execution efficiency. Therefore, we recommend that you use method 2 if you want to update data in new partitions.Method 2: Manually run the following commands to add partition data to the OSS external table:

If specific partitions have been created and you need to periodically add partitions, we recommend that you use this method to create partitions before you run tasks for data writing. After a partition is created, the external table can read the latest data from the OSS directory without the need to update the related partition even if new data is written to the OSS directory.

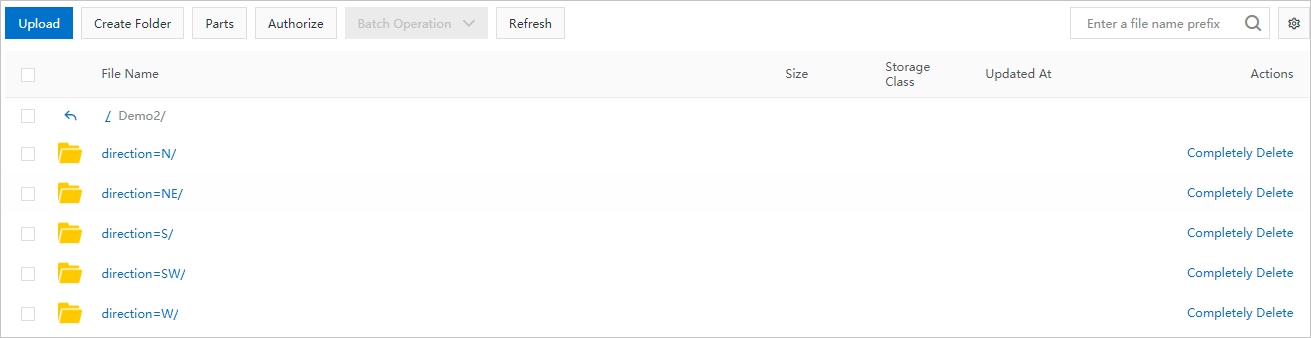

ALTER TABLE < mc_oss_extable_name > ADD PARTITION (< col_name >= < col_value >)[ ADD PARTITION (< col_name >= < col_value >)...][location URL];The values of col_name and col_value must be consistent with the names of the OSS subdirectories where the partition data file is stored. If the OSS directory structure of the partition data file shown in the following figure is used, the value of col_name is

direction, and the values of col_value areN, NE, S, SW, and W. Oneadd partitionclause in the ALTER TABLE statement is used to add a partition that is mapped to an OSS subdirectory. The number ofadd partitionclauses must be the same as that of OSS subdirectories.

Sample statement

Create a directory named

demo8in OSS and create two partition folders under the directory to store the related files.The file

demo8-pt1.txtis stored in the partition folder$pt1=1/$pt2=2.The file

demo8-pt2.txtis stored in the partition folder$pt1=3/$pt2=4.

Create an external table and specify

partitionfields.-- Create an OSS external table. CREATE EXTERNAL TABLE mf_oss_spe_pt (id int, name string) partitioned BY (pt1 string, pt2 string) stored AS TEXTFILE location "oss://oss-cn-beijing-internal.aliyuncs.com/mfoss*******/demo8/"; -- Specify partition fields. MSCK REPAIR TABLE mf_oss_spe_pt ADD PARTITIONS WITH PROPERTIES ('odps.msck.partition.column.mapping'='pt1:$pt1,pt2:$pt2'); -- Query data from the external table. SELECT * FROM mf_oss_spe_pt WHERE pt1=1 AND pt2=2; -- The following result is returned: +------------+------------+------------+------------+ | id | name | pt1 | pt2 | +------------+------------+------------+------------+ | 1 | kyle | 1 | 2 | | 2 | nicole | 1 | 2 | +------------+------------+------------+------------+ -- Query data from the external table. SELECT * FROM mf_oss_spe_pt WHERE pt1=3 AND pt2=4; +------------+------------+------------+------------+ | id | name | pt1 | pt2 | +------------+------------+------------+------------+ | 3 | john | 3 | 4 | | 4 | lily | 3 | 4 | +------------+------------+------------+------------+If the names of partition key columns in the OSS external table are inconsistent with the OSS directory structure, specify OSS directories.

-- Mappings between MaxCompute partitions and OSS directories. --pt1=8-->8 --pt2=8-->$pt2=8 -- Add partitions. ALTER TABLE mf_oss_spe_pt ADD PARTITION (pt1=8,pt2=8) location 'oss://oss-cn-beijing-internal.aliyuncs.com/mfosscostfee/demo8/8/$pt2=8/'; -- Disable the commit mode. -- Insert data into the external table. SET odps.sql.unstructured.oss.commit.mode=false; INSERT INTO mf_oss_spe_pt PARTITION (pt1=8,pt2=8) VALUES (1,'tere'); -- Query data from the external table. SET odps.sql.unstructured.oss.commit.mode=false; SELECT * FROM mf_oss_spe_pt WHERE pt1=8 AND pt2=8; +------+------+-----+-----+ | id | name | pt1 | pt2 | +------+------+-----+-----+ | 1 | tere | 8 | 8 | +------+------+-----+-----+

Solutions

Follow the instructions in the documentation to create an OSS external table before you execute the MSCK REPAIR TABLE statement.

Query examples

-- Create a common table.

odps> create table mc_test

(

a bigint

)

partitioned by

(

ds string

);

-- Incorrect usage: The MSCK REPAIR TABLE statement cannot be executed for a common table.

odps> msck table mc_test add partitions;

FAILED: ODPS-0130071:[1,12] Semantic analysis exception - only oss external table support msck repair syntax

Error Message 7: column xx in source has incompatible type yy with destination column zz, which has type ttt

Sample

ODPS-0130071:[m,n] Semantic analysis exception - column xx in source has incompatible type yy with destination column zz, which has type ttt

Description

When data is inserted into a table, the data type of the destination table must match the data type of the inserted data, or the data type of the inserted data can be implicitly converted into the data type of the destination table. Otherwise, an error is returned.

Solutions

Modify the query statement to ensure that the data type of the inserted data matches the data type of the destination table.

Query examples

-- Create a table.

odps> create table mc_test

(

a datetime

);

-- Incorrect usage: A data type mismatch issue occurs.

odps> insert overwrite table mc_test

values (1L);

FAILED: ODPS-0130071:[2,9] Semantic analysis exception - column __value_col0 in source has incompatible type BIGINT with destination column a, which has type DATETIME

-- Correct usage: Data of a valid data type is inserted.

odps> insert overwrite table mc_test

values (datetime '2022-12-06 14:23:45');

Error Message 8: function datediff cannot match any overloaded functions with (STRING, STRING, STRING), candidates are BIGINT DATEDIFF(DATE arg0, DATE arg1, STRING arg2); BIGINT DATEDIFF(DATETIME arg0, DATETIME arg1, STRING arg2); BIGINT DATEDIFF(TIMESTAMP arg0, TIMESTAMP arg1, STRING arg2); INT DATEDIFF(DATE arg0, DATE arg1); INT DATEDIFF(STRING arg0, STRING arg1); INT DATEDIFF(TIMESTAMP arg0, TIMESTAMP arg1)

Sample

ODPS-0130071:[m,n] Semantic analysis exception - function datediff cannot match any overloaded functions with (STRING, STRING, STRING), candidates are BIGINT DATEDIFF(DATE arg0, DATE arg1, STRING arg2); BIGINT DATEDIFF(DATETIME arg0, DATETIME arg1, STRING arg2); BIGINT DATEDIFF(TIMESTAMP arg0, TIMESTAMP arg1, STRING arg2); INT DATEDIFF(DATE arg0, DATE arg1); INT DATEDIFF(STRING arg0, STRING arg1); INT DATEDIFF(TIMESTAMP arg0, TIMESTAMP arg1)

Description

The data types of the input parameters of the function DATEDIFF do not match. A common type mismatch issue is caused because implicit data type conversions are not allowed after the MaxCompute V2.0 data type edition is enabled.

Solutions

Use one of the following methods:

(1) Add set odps.sql.type.system.odps2=false; before the SQL statement that you want to execute and commit them together to disable the MaxCompute V2.0 data type edition. After the MaxCompute V2.0 data type edition is disabled, implicit data type conversions are allowed.

(2) Change the data types of the input parameters.

Error Message 9: The role not exists: acs:ram::xxxxxx:role/aliyunodpsdefaultrole

Sample

ODPS-0130071:[1,1] Semantic analysis exception - external table checking failure, error message: java.lang.RuntimeException: {"RequestId":"A7BFAD2F-8982-547A-AB5E-93DAF5061FBD","HostId":"sts.aliyuncs.com","Code":"EntityNotExist.Role","Message":"The role not exists: acs:ram::xxxxxx:role/aliyunodpsdefaultrole. ","Recommend":"https://next.api.aliyun.com/troubleshoot?q=EntityNotExist.Role&product=Sts"}

Description

When you create an OSS external table, you need to specify a RAM role that is used to access OSS. In this case, a non-existing RAM role is used. As a result, role authentication fails.

Solutions

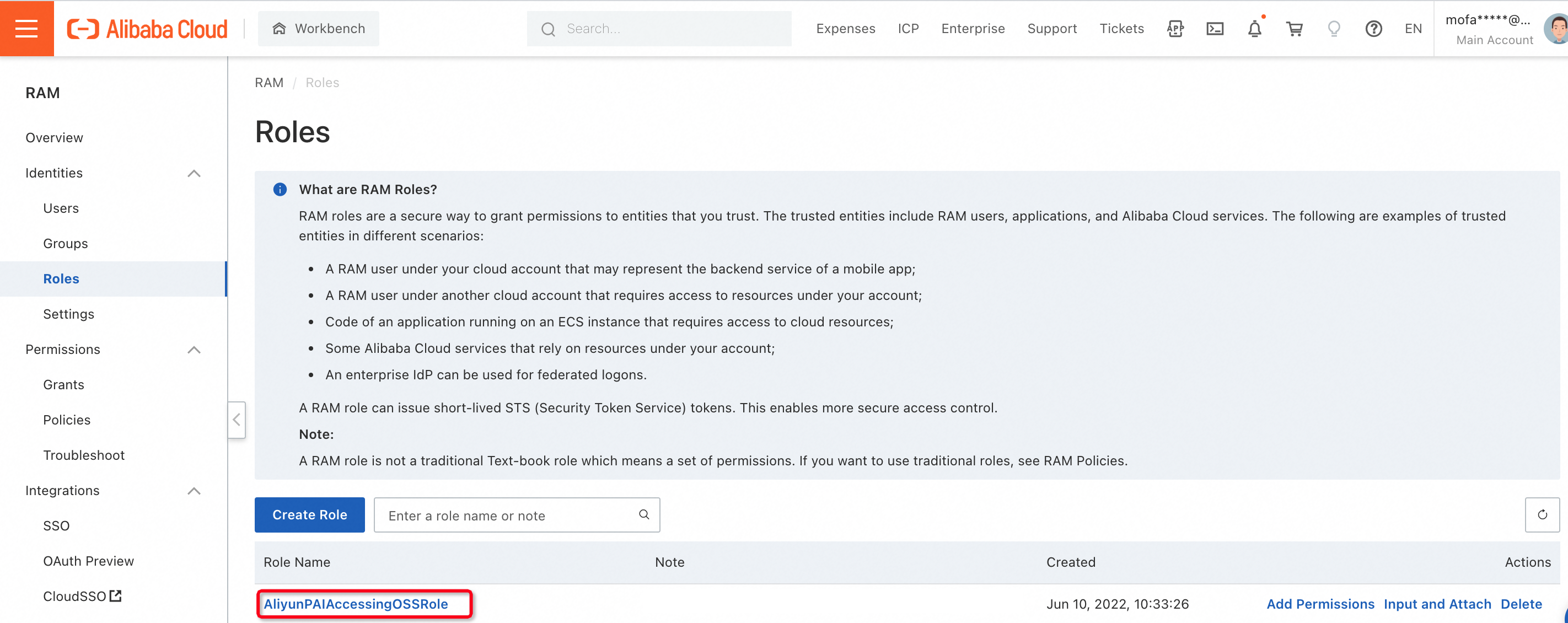

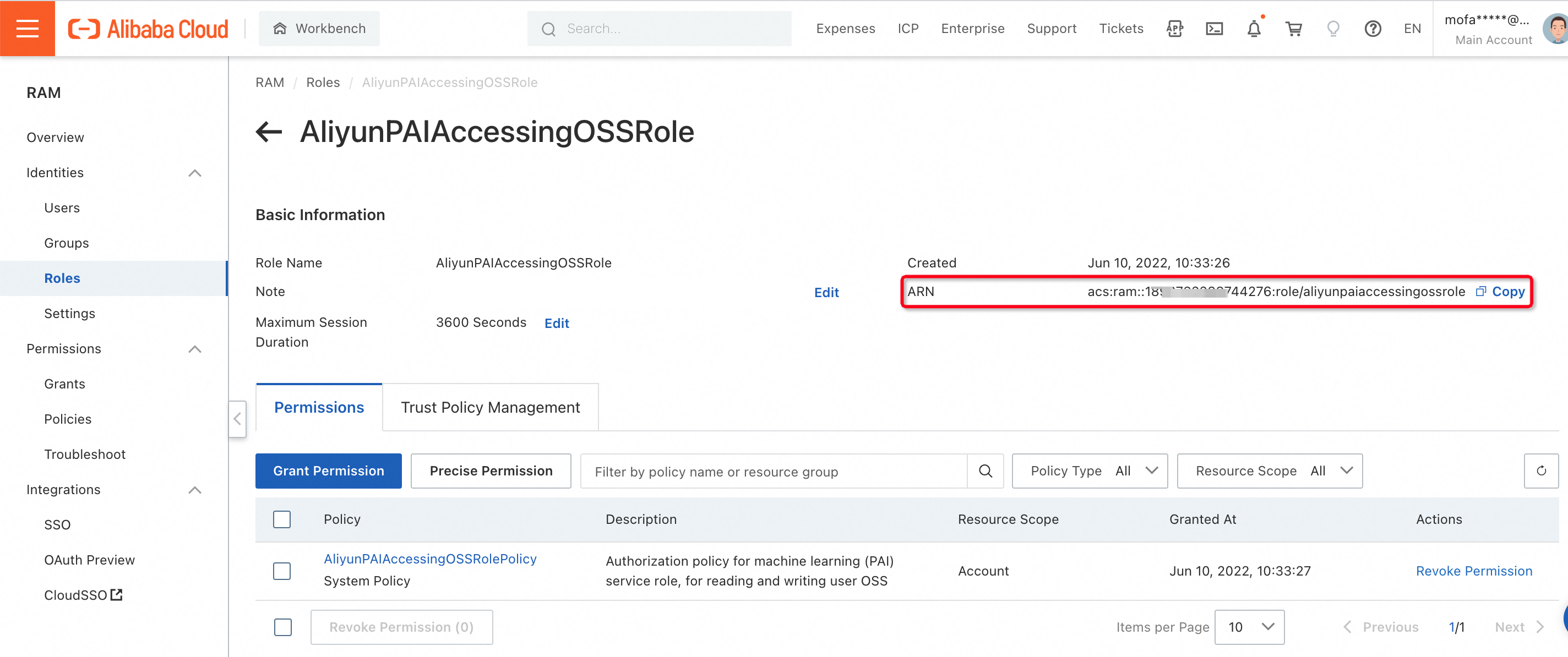

Change the value of the odps.properties.rolearn parameter that specifies the Alibaba Cloud Resource Name (ARN). The ARN is in the following format:

"acs:ram::<UID>:role/<Role>"

Take note of the following information:

UID: a 16-digit number.

Role: the name of a role that is configured in the Resource Access Management (RAM) console.

Examples

'odps.properties.rolearn'='acs:ram::189xxxxxxx76:role/aliyunpaiaccessingossrole'

To obtain the ARN, go to the RAM console. On the Roles page, click the role name to view the ARN, as shown in the following figures.

Error Message 10: encounter runtime exception while evaluating function MAX_PT

Sample

FAILED: ODPS-0130071:[33,26] Semantic analysis exception - encounter runtime exception while evaluating function MAX_PT, detailed message: null

Description

When an SQL statement is executed, the partition that is specified by max_pt is changed. As a result, an error is returned due to data inconsistency.

Solutions

1. Do not execute SQL statements in which max_pt is specified for new partitions.

2. Enable the system to rerun a configuration task if the error is reported for the configuration task

Error Message 11: column xxx cannot be resolved

Sample

ODPS-0130071:[73,12] Semantic analysis exception - column xxx cannot be resolved

Description

The xxx column does not exist in the table.

Solutions

Check the SQL script and update xxx to a valid column name.

Error Message 12: evaluate function in class XX for user defined function YY does not match annotation ZZ

Sample

FAILED: ODPS-0130071:[1,8] Semantic analysis exception - evaluate function in class test.MyPlus for user defined function my_plus does not match annotation bigint->bigint

Description

The code of the Java or Python user-defined function (UDF) does not comply with UDF writing standards. The information in the function signature annotation is not the same as that in the code.

Solutions

Modify the UDF code to ensure that the information in the function signature annotation is the same as that in the code.

Query examples

-- The following sample code is the invalid code of a Python UDF. Two input parameters are included in the code, but only one input parameter is included in the annotation.

from odps.udf import annotate

@annotate("bigint->bigint")

class MyPlus(object):

def evaluate(self, arg0, arg1):

if None in (arg0, arg1):

return None

return arg0 + arg1

-- The following sample code is the invalid code of another Python UDF. The self parameter is not included in the evaluate function.

from odps.udf import annotate

@annotate("bigint,bigint->bigint")

class MyPlus(object):

def evaluate(arg0, arg1):

if None in (arg0, arg1):

return None

return arg0 + arg1

-- The following sample code is the valid code of a Python UDF.

from odps.udf import annotate

@annotate("bigint,bigint->bigint")

class MyPlus(object):

def evaluate(self, arg0, arg1):

if None in (arg0, arg1):

return None

return arg0 + arg1Error Message 13: Vpc white list: , Vpc id: vpc-xxxx is not allowed to access

Sample

FAILED: ODPS-0130071:[0,0] Semantic analysis exception - physical plan generation failed: com.aliyun.odps.lot.cbo.plan.splitting.impl.vpc.AliHyperVpcRuntimeException: Vpc white list: , Vpc id: vpc-xxxx is not allowed to access. Contact project owner to set allowed accessed vpc id list.=

Description

When you access services in a VPC by using an external table, the error message appears due to invalid VPC configurations.

Solutions

Configure an IP address whitelist in the VPC and ensure that MaxCompute can access services in the VPC from an IP address in the whitelist. For more information, see the "Access over a VPC (dedicated connection)" section in Network connection process.

Error Message 14: Semantic analysis exception - physical plan generation failed:

Sample

FAILED: ODPS-0130071:[0,0] Semantic analysis exception - physical plan generation failed: com.aliyun.odps.common.table.na.NativeException: kNotFound:The role_arn you provide not exists in HOLO auth service. Please check carefully.

Description

When you access an Alibaba Cloud service, the service-linked role (SLR) that is linked to the service is not assigned to your Alibaba Cloud account. As a result, you cannot access the data of the service by using the SLR.

Solutions

Click RAM Quick Authorization to assign the SLR to your Alibaba Cloud account.