This topic explains how to implement screen sharing on iOS.

Feature introduction

Screen sharing enables a user to present their screen content to other users in a channel during a video call or live stream. It facilitates instant information sharing and enhances visual communication.

How it works

iOS does not allow the main app process to capture the system-wide screen. To implement screen sharing, use a Broadcast Upload Extension that works with the main app process to publish the stream. The system launches this extension when a screen sharing session begins. The extension uses the native iOS ReplayKit framework to capture the screen and sends the captured frames to the main app process, which then publishes the stream.

Because of iOS app sandboxing, apps and processes in separate sandboxes cannot share data directly. To share data between the main app and the extension, use Apple's App Group feature and configure the same App Group ID for both the main app process and the extension.
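To illustrate what a shared App Group provides, the following Swift sketch writes a value on one side and reads it on the other through `UserDefaults(suiteName:)`, which both targets open with the same App Group ID. It only demonstrates the shared container: AliScreenShare handles the frame transfer itself once both sides are configured with the App Group ID, and the key name used here is hypothetical. A check like this can help confirm that both targets actually have the App Group entitlement.

```swift
import Foundation

// Hypothetical key, used only for this illustration.
let heartbeatKey = "screenShareHeartbeat"

// Both the main app and the extension open the same suite by passing the App Group ID.
func sharedDefaults(appGroup: String) -> UserDefaults? {
    return UserDefaults(suiteName: appGroup)
}

// Extension side: record a timestamp, for example when the broadcast starts.
// In the Objective-C extension, the equivalent is [[NSUserDefaults alloc] initWithSuiteName:].
func writeHeartbeat(appGroup: String, timestamp: TimeInterval) {
    sharedDefaults(appGroup: appGroup)?.set(timestamp, forKey: heartbeatKey)
}

// Main app side: read the value back; nil means nothing has been written yet.
func readHeartbeat(appGroup: String) -> TimeInterval? {
    guard let defaults = sharedDefaults(appGroup: appGroup),
          defaults.object(forKey: heartbeatKey) != nil else { return nil }
    return defaults.double(forKey: heartbeatKey)
}
```

In a real project, call `writeHeartbeat` in the extension and `readHeartbeat` in the main app, passing your registered App Group ID (the sample code in this topic uses `group.com.aliyun.video`).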

Sample code

Alibaba Real-Time Communication (ARTC) provides open-source sample code: Implement screen sharing on iOS.

Prerequisites

Before you implement screen sharing, make sure you meet the following requirements:

Create an ARTC application and obtain the AppID and AppKey from the ApsaraVideo Live console.

Integrate the ARTC SDK into your project and implement basic audio and video call features.

Screen sharing requires iOS 12.0 or later. Ensure you run the application on a compatible device.

Notes

This is a resource-intensive feature. We recommend using an iPhone X or later model.

When a user starts screen sharing on an iOS device, the audio route automatically switches to the earpiece due to system limitations.

Project configuration

(Optional) Create an app group

Although screen sharing may work in some scenarios without a configured app group (using an unregistered ID), this approach is unstable, and the extension process may fail to access shared data or behave unpredictably.

To ensure reliable data synchronization and communication between your main app and the extension, configure and use a valid App Group ID registered in the Apple Developer Portal.

To register an app group, see Register an app group.

Create a Broadcast Upload Extension

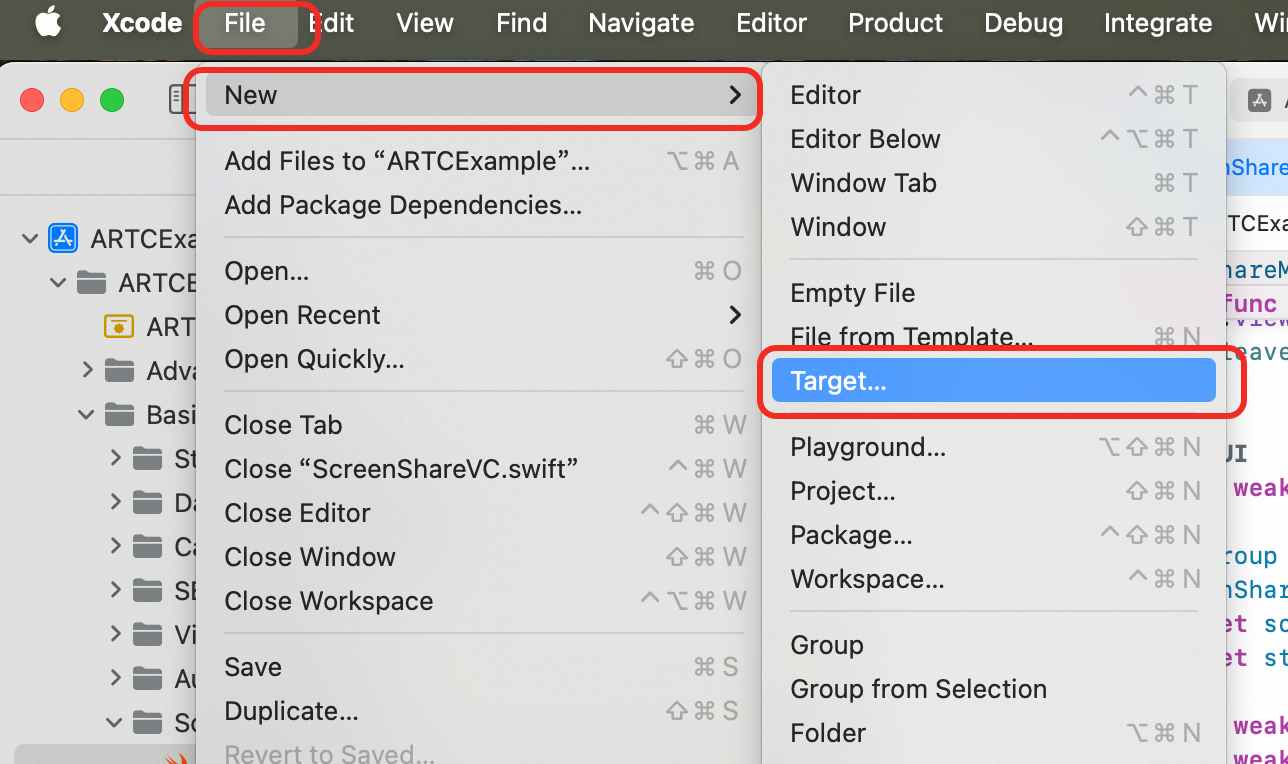

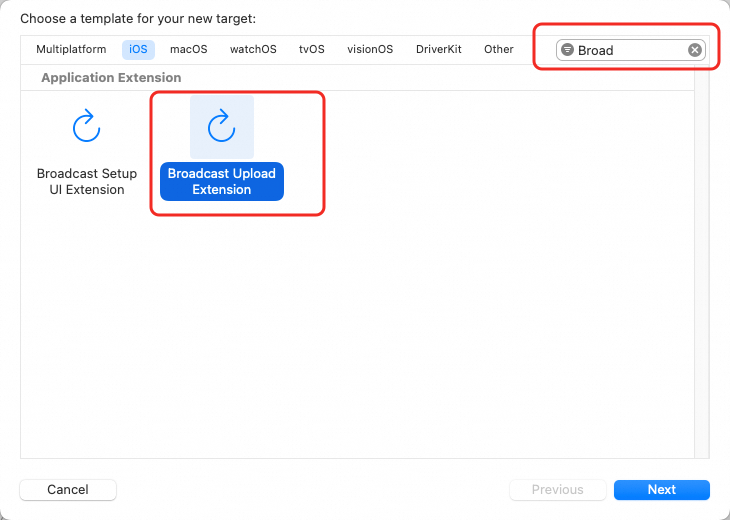

Open your project in Xcode. Go to File > New > Target..., select the iOS platform, choose Broadcast Upload Extension, then click Next.

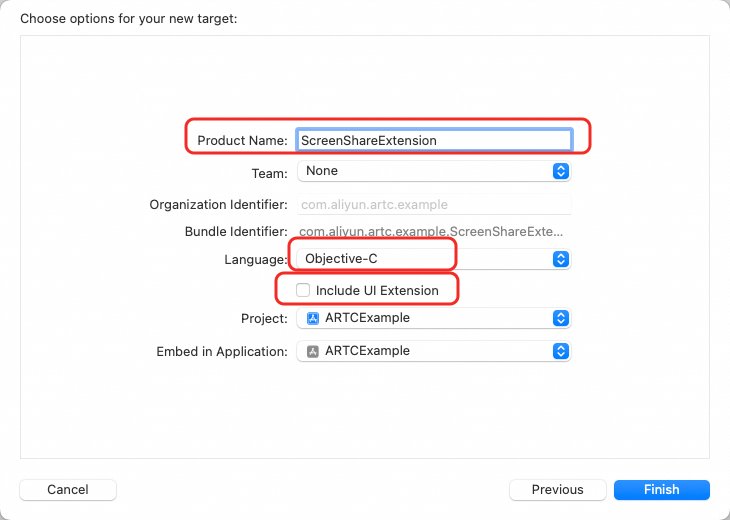

In the dialog that appears, set the Product Name (this topic uses ScreenShareExtension), select Objective-C as the language, clear the Include UI Extension checkbox, then click Finish. Xcode automatically creates a new folder for the extension that contains the SampleHandler files.

Integrate AliScreenShare.framework.

Automatically integrate with CocoaPods

Open Terminal and install CocoaPods. If you have already installed it, skip this step.

```shell
sudo gem install cocoapods
```

Open Terminal, navigate to your project's root directory, and run the following command to create a Podfile.

```shell
pod init
```

Open the generated Podfile and add the AliScreenShare dependency for the ScreenShareExtension target you created.

```ruby
# Uncomment the next line to define a global platform for your project
platform :ios, '11.0'

target 'ARTCExample' do
  # Pods for ARTCExample
  pod 'AliVCSDK_ARTC', '~> 7.5.0'
end

# Add the AliScreenShare library dependency for the ScreenShareExtension target
target 'ScreenShareExtension' do
  # Pods for ScreenShareExtension
  pod 'AliScreenShare', '7.5.0'
end
```

Run the following command in Terminal to install the dependencies.

```shell
pod install
```

This command creates a .xcworkspace file in your project folder. From now on, use this file to open your project in Xcode.

Manually integrate

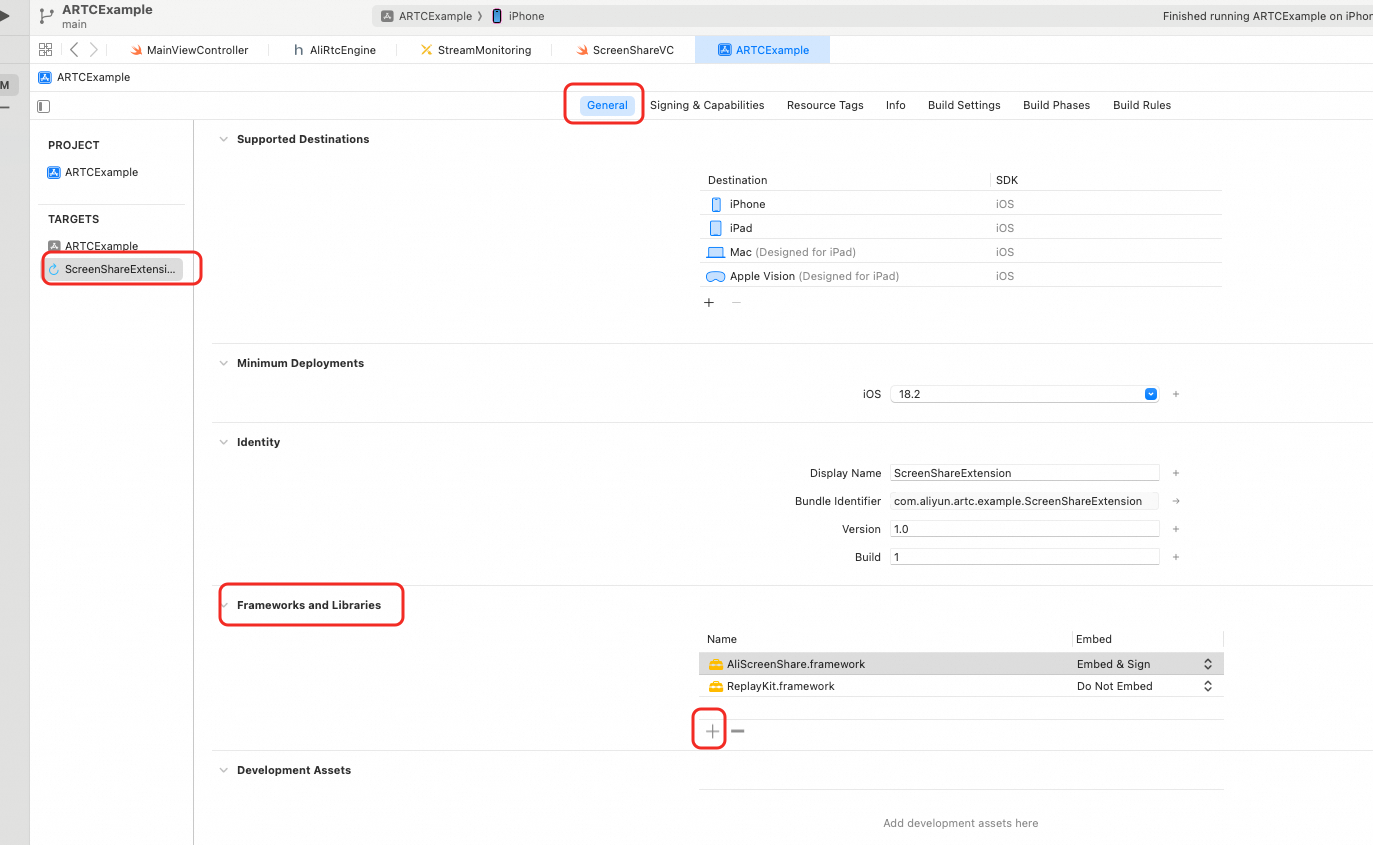

Click Download SDK and copy the downloaded

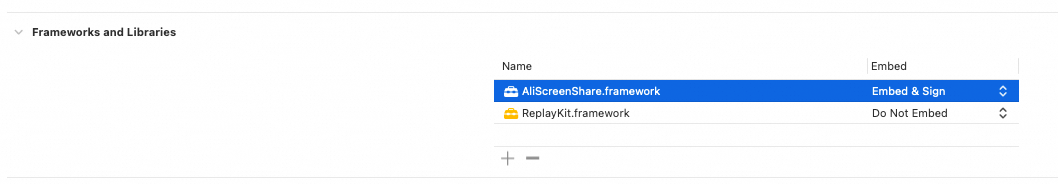

AliScreenShare.frameworkfile to your project directory. Select the Extension target you just created. Go to General > Frameworks and Libraries, click the plus sign (+), click Add Files, and importAliScreenShare.framework.

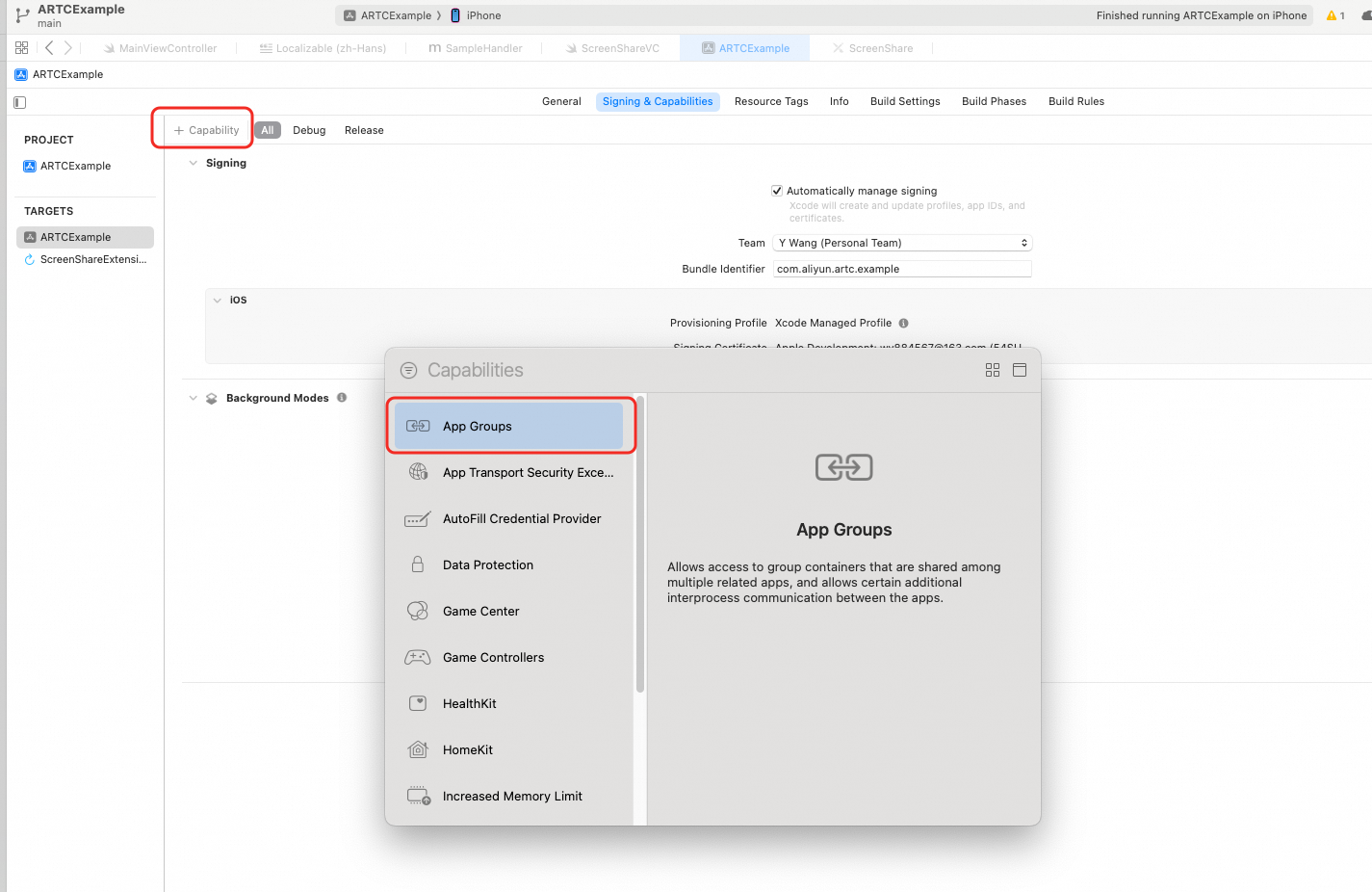

The extension and the main app run in different processes with separate sandboxes. To enable inter-process communication, use Apple's App Group feature. For both your main app target and the extension target, configure the same app group: In the project editor, select the target and go to the Signing & Capabilities tab. Click + Capability and add an app group.

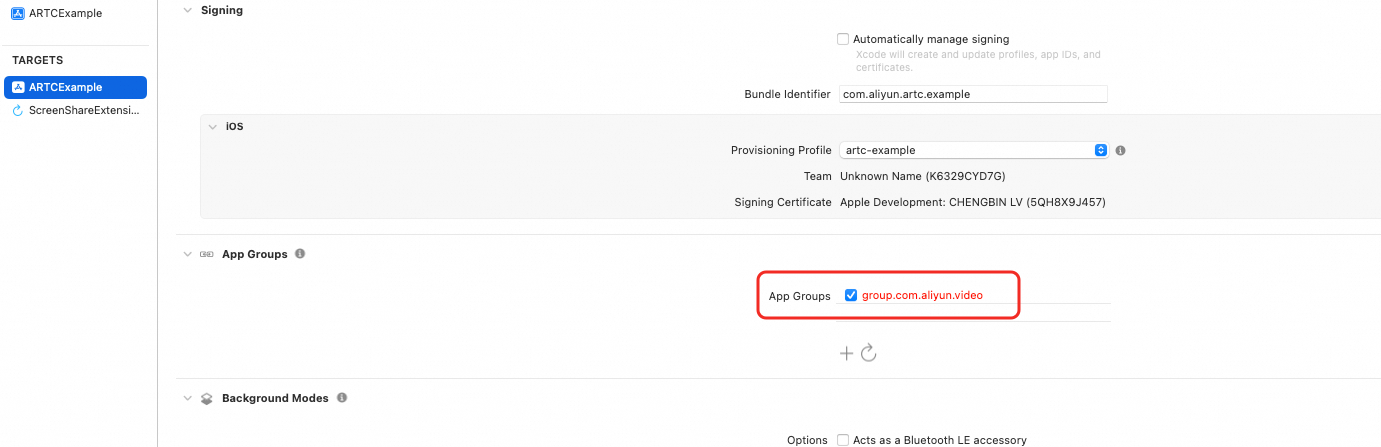

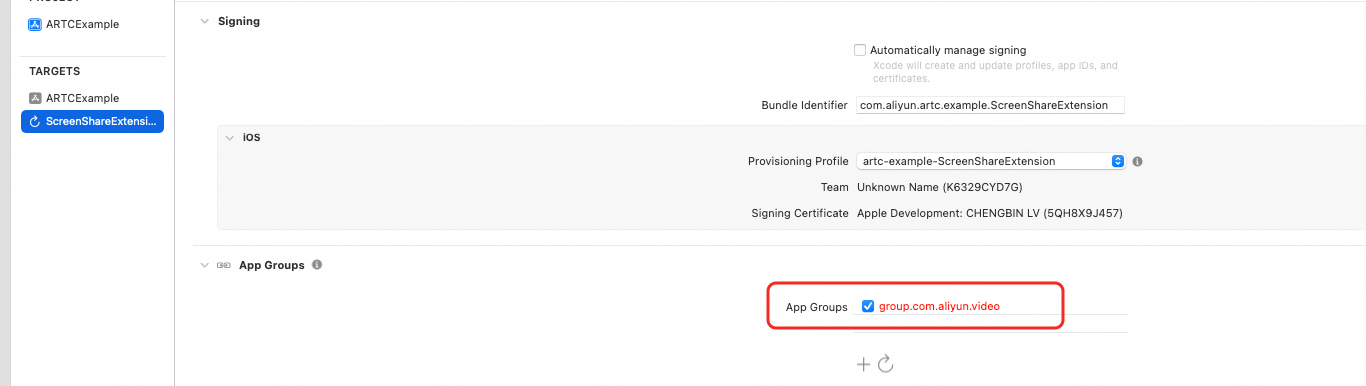

Make sure that both targets use the same App Group ID.

Select the extension's target, click General, and set the supported iOS version (Minimum Deployments) to 12.0 or higher.

The extension's Bundle ID must be prefixed with the main app's Bundle ID (for example, com.example.ARTCExample.ScreenShareExtension), and both targets must be signed with the same certificate.
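The Bundle ID and deployment target rules above are easy to get wrong when targets are renamed. The following Swift sketch expresses them as two checks you could run in a unit test; the helper names are hypothetical and are not part of the ARTC SDK.

```swift
import Foundation

// Hypothetical helper: an app extension's Bundle ID must start with the
// containing app's Bundle ID followed by a dot and a non-empty suffix,
// e.g. com.example.ARTCExample.ScreenShareExtension.
func isValidExtensionBundleID(_ extensionID: String, containingAppID: String) -> Bool {
    guard !containingAppID.isEmpty else { return false }
    let prefix = containingAppID + "."
    return extensionID.hasPrefix(prefix) && extensionID.count > prefix.count
}

// Minimum iOS major version required for screen sharing, per this topic.
let minimumScreenShareOSMajorVersion = 12

// Hypothetical helper: checks a target's Minimum Deployments major version.
func meetsScreenShareDeploymentTarget(major: Int) -> Bool {
    return major >= minimumScreenShareOSMajorVersion
}
```

The dot in the prefix check matters: `com.example.ARTCExampleX` starts with `com.example.ARTCExample` but is not a valid extension ID for that app.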

In the new target, Xcode automatically creates a class named SampleHandler. Replace the contents of its .m file with the following code.

Note: Replace the App Group ID (kAppGroup) in the code with your own App Group ID.

```objectivec
#import "SampleHandler.h"
#import <AliScreenShare/AliScreenShareExt.h>

static NSString * _Nonnull kAppGroup = @"group.com.aliyun.video"; // The App Group shared by the main app and the extension.

@interface SampleHandler() <AliScreenShareExtDelegate>
@property (nonatomic, assign) int32_t frameNum;
@end

@implementation SampleHandler

- (void)broadcastStartedWithSetupInfo:(NSDictionary<NSString *,NSObject *> *)setupInfo {
    // User has requested to start the broadcast. Setup info from the UI extension can be supplied but optional.
    NSLog(@"SampleHandler SEND broadcastStartedWithSetupInfo");
    [[AliScreenShareExt sharedInstance] setupWithAppGroup:kAppGroup delegate:self];
}

- (void)broadcastPaused {
    // User has requested to pause the broadcast. Samples will stop being delivered.
    NSLog(@"SampleHandler SEND broadcastPaused");
}

- (void)broadcastResumed {
    // User has requested to resume the broadcast. Samples delivery will resume.
    NSLog(@"SampleHandler SEND broadcastResumed");
}

- (void)broadcastFinished {
    // User has requested to finish the broadcast.
    NSLog(@"SampleHandler SEND broadcastFinished");
}

- (void)processSampleBuffer:(CMSampleBufferRef)sampleBuffer withType:(RPSampleBufferType)sampleBufferType {
    @autoreleasepool {
        // Forward every video and audio sample to the main app through the App Group.
        [[AliScreenShareExt sharedInstance] sendSampleBuffer:sampleBuffer type:sampleBufferType];
    }
}

#pragma mark - AliScreenShareExtDelegate

- (void)finishBroadcastWithError:(AliScreenShareExt *)broadcast error:(NSError *)error {
    [self finishBroadcastWithError:error];
}

@end
```

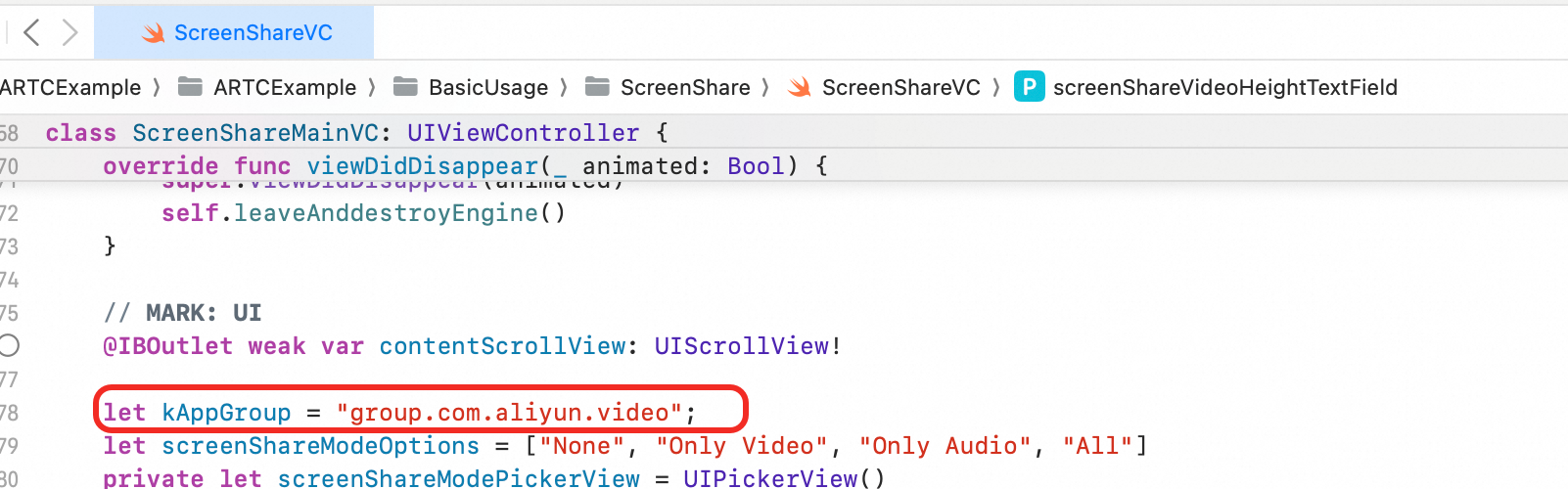

Modify the kAppGroup parameter in the ARTCExample/BasicUsage/ScreenShare/ScreenShareVC.swift file to match the App Group ID you set.

Feature implementation

1. Configure camera and screen sharing streams

ARTC supports ingesting camera and screen sharing streams. Configure them based on your use case.
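The two configurations described in 1.1 and 1.2 differ only in the value passed to publishLocalVideoStream; the call order is the same. The following Swift sketch captures that order against a hypothetical `ScreenShareEngine` protocol and a recording mock, so the sequence can be tested without the SDK. `joinChannel()` and `startScreenShare(appGroup:)` are simplified stand-ins, not the real ARTC signatures (the sample code in this topic calls `startScreenShare(kAppGroup, mode:)`).

```swift
import Foundation

// Hypothetical protocol mirroring only the ARTC calls named in this topic.
// It is NOT the real AliRtcEngine interface.
protocol ScreenShareEngine {
    func publishLocalVideoStream(_ enabled: Bool)
    func joinChannel()
    func startScreenShare(appGroup: String)
}

// Records each call in order, standing in for the real engine.
final class RecordingEngine: ScreenShareEngine {
    private(set) var calls: [String] = []
    func publishLocalVideoStream(_ enabled: Bool) { calls.append("publishLocalVideoStream(\(enabled))") }
    func joinChannel() { calls.append("joinChannel") }
    func startScreenShare(appGroup: String) { calls.append("startScreenShare(\(appGroup))") }
}

// includeCamera == false matches section 1.1; true matches section 1.2.
func startSession(engine: ScreenShareEngine, includeCamera: Bool, appGroup: String) {
    // The camera stream is published by default, so set it explicitly before joining.
    engine.publishLocalVideoStream(includeCamera)
    engine.joinChannel()
    // Screen capture starts only after the channel has been joined.
    engine.startScreenShare(appGroup: appGroup)
}
```

Calling `publishLocalVideoStream(true)` is strictly needed only if the camera stream was disabled earlier; the sketch sets it unconditionally so both cases share one code path.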

1.1. Push only the screen sharing stream

To ingest only the screen sharing stream, you must explicitly disable the camera stream, as it is enabled by default.

Before joining a channel, call publishLocalVideoStream(false) to disable camera stream ingest.

After joining a channel, call startScreenShare to start screen capture and ingest the screen sharing stream.

```swift
engine.publishLocalVideoStream(false)
```

1.2. Push both the camera and screen sharing streams

To ingest both the camera and screen sharing streams, perform the following steps:

Camera stream ingest is enabled by default. If it has been disabled, call publishLocalVideoStream(true) to enable it.

After joining a channel, call startScreenShare to start screen capture and ingest the screen sharing stream.

```swift
engine.publishLocalVideoStream(true)
```

2. (Optional) Configure the screen sharing stream encoder

To customize the encoding properties of the screen sharing stream, call the setScreenShareEncoderConfiguration method. You can configure properties such as resolution, frame rate, bitrate, keyframe interval (GOP), and video orientation.

You can call this method both before and after joining a channel. To set the encoding properties only once per session, call this method before joining.

To update the configuration, you can call this method multiple times.

The following table describes the configuration parameters:

| Parameter | Description | Values |
| --- | --- | --- |
| dimensions | The video resolution. | Default value: 0×0, which indicates that the ingested stream's resolution matches the screen capture resolution. Maximum value: 3840×2160. |
| frameRate | The video frame rate, in frames per second (fps). | Default value: 5. Maximum value: 30. |
| bitrate | The video encoding bitrate, in Kbps. Note: Set the bitrate within a reasonable range for the resolution and frame rate. Otherwise, the SDK automatically adjusts it to a valid value. | Default value: 512. |
| keyFrameInterval | The keyframe interval (GOP), in milliseconds. | Default value: 0, which indicates that the SDK controls the keyframe interval internally. |
| forceStrictKeyFrameInterval | Specifies whether to force the encoder to generate keyframes strictly at the set interval. | Default value: false. Valid values: true, false. |
| rotationMode | The orientation of the ingested stream. | Default value: AliRtcRotationMode_0. Valid values: 0, 90, 180, or 270 degrees. |
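Before passing values to setScreenShareEncoderConfiguration, you may want to check them against the limits in the table. The following Swift sketch uses a hypothetical value type that mirrors the documented fields (it is not `AliRtcScreenShareEncoderConfiguration`) and reports values outside the documented ranges. Bitrate is only checked for being positive, because the SDK adjusts unreasonable bitrates itself. Two rules are assumptions not stated in the table: that the 3840×2160 limit also applies to portrait sizes, and that the frame rate must be at least 1.

```swift
import Foundation

// Hypothetical mirror of the documented encoder fields; not the SDK class.
struct ScreenShareEncoderSettings {
    var width = 0                 // 0 x 0 means "match the screen capture resolution"
    var height = 0
    var frameRate = 5             // documented default; maximum 30
    var bitrateKbps = 512         // documented default
    var keyFrameIntervalMs = 0    // 0 lets the SDK control the GOP
    var forceStrictKeyFrameInterval = false
    var rotationDegrees = 0       // 0, 90, 180, or 270

    // Returns a description of every value outside the documented limits.
    func validationIssues() -> [String] {
        var issues: [String] = []
        if !(width == 0 && height == 0) {
            if width <= 0 || height <= 0 {
                issues.append("dimensions must both be positive, or both 0")
            } else if max(width, height) > 3840 || min(width, height) > 2160 {
                // Assumption: the limit applies to portrait sizes as well.
                issues.append("dimensions exceed 3840 x 2160")
            }
        }
        // Assumption: frame rates below 1 are rejected.
        if !(1...30).contains(frameRate) { issues.append("frameRate must be between 1 and 30") }
        if bitrateKbps <= 0 { issues.append("bitrate must be positive") }
        if keyFrameIntervalMs < 0 { issues.append("keyFrameInterval must be 0 or positive") }
        if ![0, 90, 180, 270].contains(rotationDegrees) { issues.append("rotation must be 0, 90, 180, or 270") }
        return issues
    }
}
```

The sample configuration used in this topic (720×1280, 15 fps, 512 Kbps, 2,000 ms keyframe interval) passes all of these checks.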

Sample code:

```swift
var screenShareConfig = AliRtcScreenShareEncoderConfiguration()
// 720 x 1280 portrait output at 15 fps and 512 Kbps.
screenShareConfig.dimensions = CGSize(width: 720, height: 1280)
screenShareConfig.frameRate = 15
screenShareConfig.bitrate = 512
// Generate a keyframe every 2,000 ms.
screenShareConfig.keyFrameInterval = 2000
alirtcEngine.setScreenShareEncoderConfiguration(screenShareConfig)
```

3. Start screen capture

Call startScreenShare to start screen capture, and set the parameters based on your use case:

kAppGroup: The App Group ID. The screen capture extension and the main app must use the same App Group.

mode: The screen sharing mode. Valid values: disabled, video only, system audio only, or both video and system audio.
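The mode parameter combines two independent choices: whether to share video and whether to share system audio. The following Swift sketch derives the mode from two UI switches. `ShareMode` is a hypothetical stand-in, because the SDK's own enum and case names are not listed in this topic; map its cases to the SDK's screen sharing mode type in your own code.

```swift
import Foundation

// Hypothetical stand-in for the SDK's screen sharing mode type.
enum ShareMode {
    case disabled
    case videoOnly
    case systemAudioOnly
    case videoAndSystemAudio
}

// Derives the mode from two UI switches; every combination is handled.
func shareMode(shareVideo: Bool, shareSystemAudio: Bool) -> ShareMode {
    switch (shareVideo, shareSystemAudio) {
    case (false, false): return .disabled
    case (true, false): return .videoOnly
    case (false, true): return .systemAudioOnly
    case (true, true): return .videoAndSystemAudio
    }
}
```

Switching on the tuple lets the compiler verify that all four combinations are covered, so adding a new switch later forces the mapping to be revisited.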

```swift
// The main app must use the same App Group as the extension.
let kAppGroup = "group.com.aliyun.video"

@IBAction func onStartScreenShareBtnClicked(_ sender: UIButton) {
    guard let alirtcEngine = self.rtcEngine else { return }
    // Configure the screen sharing stream encoder.
    alirtcEngine.setScreenShareEncoderConfiguration(screenShareConfig)
    // Launch the picker view.
    startBroadcastPicker()
    // Start screen sharing.
    alirtcEngine.startScreenShare(kAppGroup, mode: screenShareMode)
}
```

Enable the extension process.

Apple requires a user action to start screen capture. The startBroadcastPicker function below uses RPSystemBroadcastPickerView, introduced in iOS 12.0, to display a pop-up that prompts the user to start screen recording.

```swift
import ReplayKit // Required for RPSystemBroadcastPickerView.

// Create and configure a system broadcast picker view. Apple requires users to explicitly trigger screen sharing.
func startBroadcastPicker() {
    if #available(iOS 12.0, *) {
        let broadcastPickerView = RPSystemBroadcastPickerView(frame: CGRect(x: 0, y: 0, width: 44, height: 44))
        // Locate the embedded extension. "ScreenShareExtension" must match the extension's product name (the .appex file name).
        guard let bundlePath = Bundle.main.path(forResource: "ScreenShareExtension", ofType: "appex", inDirectory: "PlugIns") else {
            self.showErrorAlertView("Cannot find the extension bundle at path", code: 0, forceShow: false)
            return
        }
        guard let bundle = Bundle(path: bundlePath) else {
            self.showErrorAlertView("Cannot load the extension bundle", code: 0, forceShow: false)
            return
        }
        // Preselect this extension in the system picker.
        broadcastPickerView.preferredExtension = bundle.bundleIdentifier
        // Traverse the subviews to find the button and trigger it, so the user does not have to tap the system view.
        // This approach is not officially recommended by Apple and may stop working in future system updates.
        for subView in broadcastPickerView.subviews {
            if let button = subView as? UIButton {
                button.sendActions(for: .allEvents)
            }
        }
    } else {
        self.showErrorAlertView("This feature only supports iOS 12 or later", code: 0, forceShow: false)
        return
    }
}
```

4. Stop screen sharing

Call stopScreenShare to stop screen sharing in the channel.

```swift
@IBAction func onStopScreenShareBtnClicked(_ sender: UIButton) {
    guard let alirtcEngine = self.rtcEngine else { return }
    // Stop only if a screen sharing stream is currently published.
    if alirtcEngine.isScreenSharePublished() {
        alirtcEngine.stopScreenShare()
    }
}
```

5. View the shared screen

When a remote user publishes a stream, the local client receives the onRemoteTrackAvailableNotify callback. Use the videoTrack parameter in this callback to determine which video streams (camera, screen sharing, or both) are available and display them accordingly.
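Because the videoTrack parameter reports which video streams the user currently publishes, the views to add and remove can be computed by comparing the existing views with the reported state. The following Swift sketch shows that comparison with hypothetical enums that mirror `AliRtcVideoTrack` and the sample's `StreamType`; it has no SDK dependency, so the logic can be tested on its own. Note that a transition such as `.both` to `.camera` must also remove the screen sharing view.

```swift
import Foundation

// Hypothetical mirrors of AliRtcVideoTrack and the sample's StreamType.
enum RemoteVideoTrack { case no, camera, screen, both }
enum StreamType { case camera, screen }

// The set of views a remote user should have for a given track state.
func desiredStreams(for track: RemoteVideoTrack) -> Set<StreamType> {
    switch track {
    case .no: return []
    case .camera: return [.camera]
    case .screen: return [.screen]
    case .both: return [.camera, .screen]
    }
}

// Compares existing views with the desired set and returns what to add and remove.
func viewChanges(current: Set<StreamType>, track: RemoteVideoTrack)
    -> (add: Set<StreamType>, remove: Set<StreamType>) {
    let desired = desiredStreams(for: track)
    return (add: desired.subtracting(current), remove: current.subtracting(desired))
}
```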

```swift
// Save all views, keyed by "<uid>_<streamType>".
var videoSeatViewMap: [String: SeatView] = [:]

// Set up the views based on the callback.
func onRemoteTrackAvailableNotify(_ uid: String, audioTrack: AliRtcAudioTrack, videoTrack: AliRtcVideoTrack) {
    print("onRemoteTrackAvailableNotify uid: \(uid) audioTrack: \(audioTrack) videoTrack: \(videoTrack)")
    // Update the UI on the main thread based on the remote user's stream status.
    DispatchQueue.main.async {
        switch videoTrack {
        case .no:
            // Remove all views for this user.
            self.removeSeatView(uid: uid, streamType: .camera)
            self.removeSeatView(uid: uid, streamType: .screen)
        case .camera:
            // Add the camera view and remove a stale screen sharing view.
            self.createOrUpdateSeatView(uid: uid, streamType: .camera)
            self.removeSeatView(uid: uid, streamType: .screen)
        case .screen:
            // Add the screen sharing view and remove a stale camera view.
            self.createOrUpdateSeatView(uid: uid, streamType: .screen)
            self.removeSeatView(uid: uid, streamType: .camera)
        case .both:
            // Add both the camera and screen sharing views.
            self.createOrUpdateSeatView(uid: uid, streamType: .camera)
            self.createOrUpdateSeatView(uid: uid, streamType: .screen)
        @unknown default:
            break
        }
    }
}

// Remove the specified view for the specified user. Does nothing if the view does not exist.
func removeSeatView(uid: String, streamType: StreamType) {
    let key = "\(uid)_\(streamType)"
    guard let seatView = videoSeatViewMap.removeValue(forKey: key) else { return }
    // 1. Remove the view from the UI.
    seatView.removeFromSuperview()
    // 2. Clean up video resources.
    rtcEngine?.setRemoteViewConfig(nil, uid: uid, for: streamType == .camera ? .camera : .screen)
    // 3. Check whether this user has other views.
    let hasOtherViews = videoSeatViewMap.keys.contains { $0.hasPrefix("\(uid)_") }
    if !hasOtherViews {
        // Remove the user container (findUserContainer is defined elsewhere in the sample).
        if let container = findUserContainer(for: uid) {
            container.removeFromSuperview()
        }
    } else {
        // Re-lay out the remaining views (updateLayoutForUser is defined elsewhere in the sample).
        updateLayoutForUser(uid: uid)
    }
}

// Create or update a video render view and add it to contentScrollView.
@discardableResult
func createOrUpdateSeatView(uid: String, streamType: StreamType) -> SeatView {
    let key = "\(uid)_\(streamType)"
    // 1. If a view already exists, return it directly.
    if let existingView = videoSeatViewMap[key] {
        return existingView
    }
    // 2. Create a new view.
    let seatView = SeatView(frame: .zero)
    seatView.seatInfo = SeatInfo(uid: uid, streamType: streamType)
    if uid != self.userId {
        // 3. Configure the video canvas for the remote user.
        let canvas = AliVideoCanvas()
        canvas.view = seatView.canvasView
        canvas.renderMode = .fill
        // Do not mirror shared screen content; mirror the camera view.
        canvas.mirrorMode = streamType == .screen ? .allDisabled : .allEnabled
        canvas.rotationMode = ._0
        rtcEngine?.setRemoteViewConfig(canvas, uid: uid, for: streamType == .camera ? .camera : .screen)
    }
    // 4. Add the view to the management dictionary.
    videoSeatViewMap[key] = seatView
    // 5. Update the layout.
    updateLayoutForUser(uid: uid)
    return seatView
}
```

6. (Optional) Configure screen sharing audio volume

To control the volume of shared system audio, call setAudioShareAppVolume.

```swift
@IBAction func onShareAudioVolumeSliderChanged(_ sender: UISlider) {
    // Convert the slider value to the Int32 volume passed to the SDK.
    let volume = Int32(sender.value)
    rtcEngine?.setAudioShareAppVolume(volume)
}
```
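`Int32(sender.value)` truncates the slider value, so 49.9 becomes 49, and it traps if the value is NaN or out of the `Int32` range. The following Swift sketch rounds and clamps instead. The 0 to 100 range is an assumption; confirm the range accepted by setAudioShareAppVolume in the ARTC API reference and set the slider's minimum and maximum to match.

```swift
// Assumption: setAudioShareAppVolume accepts 0...100. Check the ARTC API reference.
let shareAudioVolumeRange: ClosedRange<Int32> = 0...100

// Rounds a slider value to the nearest integer and clamps it to the assumed range.
func shareAudioVolume(fromSliderValue value: Float) -> Int32 {
    // Treat NaN or infinity as silence instead of trapping on conversion.
    guard value.isFinite else { return shareAudioVolumeRange.lowerBound }
    let lower = Float(shareAudioVolumeRange.lowerBound)
    let upper = Float(shareAudioVolumeRange.upperBound)
    let clamped = min(max(value.rounded(), lower), upper)
    return Int32(clamped)
}
```

In the action method, you would then pass `shareAudioVolume(fromSliderValue: sender.value)` to setAudioShareAppVolume.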