This tutorial describes how to use the face search and video editing features of Intelligent Media Services (IMS) to create and edit face compilation videos. It covers media asset retrieval with face search, automated editing with Script-to-Video, Timeline configuration, and editing based on advanced templates.

Introduction

Face compilations are an effective way to showcase a person's activities across different scenes. They are widely used in film production, news reporting, and social entertainment. To create such videos, you need to quickly find and organize the video clips in which key people appear. This tutorial shows you how to do this with IMS.

Use cases

Use case 1: Athlete highlight reels at sports events, such as marathons

At sports events such as marathons, organizers or media outlets often need to create highlight videos of athletes. These videos showcase key moments in the competition, such as sprints or overtaking opponents, and are important for event promotion, recaps, and the athletes' personal promotion.

Use case 2: Personal highlight reels for amusement park visitors

Amusement parks often create personalized highlight reels for visitors to enhance their experience. These reels capture exciting moments on various rides, such as screams on a roller coaster or laughter on a carousel. These compilations can serve as souvenirs for visitors or be used for the park's social media promotion.

Use case 3: Behind-the-scenes videos for banquets, such as wedding receptions

In the wedding industry, wedding videos are an important way to record a couple's happy moments and preserve memories. Both behind-the-scenes footage from the wedding day and travel videos of the couple require careful editing and arrangement to showcase the couple's love story and the romantic atmosphere of the wedding.

Use case 4: Fan face compilations at concerts

At large concerts, organizers often create face compilations of the audience to show their enthusiasm and engagement. These compilations can be displayed on large screens to enhance the atmosphere. They can also be used as material for social media promotion.

Face compilations can also be used in many other areas, such as personal memoirs, family commemorative videos, tourism promotions, and corporate annual meetings or event recaps.

Procedure

Before you begin

Install the IMS server-side SDK. For more information, see Preparations. If you have already installed the SDK, skip this step.

Step 1: Retrieve video clip information using face search

Use the face search feature of IMS to search your video materials and retrieve the media clips in which a specific face appears. For each matched media asset, the results include the start and end times of every occurrence of the face, which the SDK examples in Step 2 use as in and out points. For details, see the Search for faces in a large number of media assets tutorial.

Step 2: Edit the face video clips

The following sections describe three methods for creating face compilation videos. Each method covers its features and an SDK call example. The case studies at the end show sample results.

Method 1: Use Script-to-Video

Features

Convenient and efficient: Script-to-Video generates face compilation videos automatically. With a few configuration parameters, you can generate multiple similar videos in one batch job.

Easy to start: You do not need video editing experience. The one-click video creation feature generates the videos for you.

SDK call example

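The following example calls SubmitBatchMediaProducingJob. It uses the face clips as the main material group, adds a public intro, outro, and background music, and generates two 18-second videos.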

```java
package com.example;

import java.util.*;

import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import com.aliyun.ice20201109.Client;
import com.aliyun.ice20201109.models.*;
import com.aliyun.teaopenapi.models.Config;

/**
 * You need to add the following Maven dependencies:
 * <dependency>
 *   <groupId>com.aliyun</groupId>
 *   <artifactId>ice20201109</artifactId>
 *   <version>2.3.0</version>
 * </dependency>
 * <dependency>
 *   <groupId>com.alibaba</groupId>
 *   <artifactId>fastjson</artifactId>
 *   <version>1.2.9</version>
 * </dependency>
 */
public class SubmitFaceEditingJobService {

    static final String regionId = "cn-shanghai";
    static final String bucket = "<your-bucket>";

    /*** Submit a face compilation job based on face search results ****/
    public static void submitBatchEditingJob(String mediaId, SearchMediaClipByFaceResponse response) throws Exception {
        Client iceClient = initClient();
        // In and out points of the clips in which the face appears
        JSONArray intervals = buildIntervals(response);

        // Public intro and outro materials
        String openingMediaId = "icepublic-9a2df29956582a68a59e244a5915228c";
        String endingMediaId = "icepublic-1790626066bee650ac93bd12622a921c";
        String mainGroupName = "main";
        String openingGroupName = "opening";
        String endingGroupName = "ending";

        JSONObject inputConfig = buildInputConfig(mediaId, mainGroupName, openingMediaId, openingGroupName, endingMediaId, endingGroupName);
        JSONObject editingConfig = buildEditingConfig(mediaId, mainGroupName, intervals, openingMediaId, openingGroupName, endingMediaId, endingGroupName);

        JSONObject outputConfig = new JSONObject();
        // {index} distinguishes the output files of the batch job
        outputConfig.put("MediaURL", "https://" + bucket + ".oss-" + regionId + ".aliyuncs.com/testBatch/" + System.currentTimeMillis() + "{index}.mp4");
        outputConfig.put("Width", 1280);
        outputConfig.put("Height", 720);
        // Duration of each output video, in seconds
        outputConfig.put("FixedDuration", 18);
        // Number of videos to generate
        outputConfig.put("Count", 2);

        SubmitBatchMediaProducingJobRequest request = new SubmitBatchMediaProducingJobRequest();
        request.setInputConfig(inputConfig.toJSONString());
        request.setEditingConfig(editingConfig.toJSONString());
        request.setOutputConfig(outputConfig.toJSONString());

        SubmitBatchMediaProducingJobResponse jobResponse = iceClient.submitBatchMediaProducingJob(request);
        System.out.println("JobId: " + jobResponse.getBody().getJobId());
    }

    public static Client initClient() throws Exception {
        // An Alibaba Cloud account AccessKey has full permissions for all APIs. We recommend using a Resource Access Management (RAM) user for API calls and daily O&M.
        // This example shows how to store the AccessKey ID and AccessKey secret in environment variables. For configuration instructions, see: https://www.alibabacloud.com/help/en/sdk/developer-reference/v2-manage-access-credentials
        com.aliyun.credentials.Client credentialClient = new com.aliyun.credentials.Client();
        Config config = new Config();
        config.setCredential(credentialClient);
        // To hard-code the AccessKey ID and AccessKey secret, use the following code. However, we strongly recommend that you do not store your AccessKey ID and AccessKey secret in your project code. This can lead to an AccessKey leak and compromise the security of all resources in your account.
        // config.accessKeyId = <The AccessKey ID you created in Step 2>;
        // config.accessKeySecret = <The AccessKey secret you created in Step 2>;
        config.endpoint = "ice." + regionId + ".aliyuncs.com";
        config.regionId = regionId;
        return new Client(config);
    }

    public static JSONArray buildIntervals(SearchMediaClipByFaceResponse response) {
        JSONArray intervals = new JSONArray();
        if (response.getBody().getMediaClipList() == null || response.getBody().getMediaClipList().isEmpty()) {
            return intervals; // No matched clips
        }
        // This example uses only the first matched media asset
        List<SearchMediaClipByFaceResponseBodyMediaClipListOccurrencesInfos> occurrencesInfos =
                response.getBody().getMediaClipList().get(0).getOccurrencesInfos();
        for (SearchMediaClipByFaceResponseBodyMediaClipListOccurrencesInfos occurrence : occurrencesInfos) {
            Float startTime = occurrence.getStartTime();
            Float endTime = occurrence.getEndTime();
            // You can adjust the filtering logic as needed
            // Filter out clips shorter than 2s
            if (endTime - startTime < 2) {
                continue;
            }
            // Truncate clips longer than 6s
            if (endTime - startTime > 6) {
                endTime = startTime + 6;
            }
            intervals.add(buildSingleInterval(startTime, endTime));
        }
        return intervals;
    }

    public static JSONObject buildSingleInterval(Float in, Float out) {
        JSONObject interval = new JSONObject();
        interval.put("In", in);
        interval.put("Out", out);
        return interval;
    }

    public static JSONObject buildMediaMetaData(String mediaId, String groupName, JSONArray intervals) {
        JSONObject mediaMetaData = new JSONObject();
        mediaMetaData.put("Media", mediaId);
        mediaMetaData.put("GroupName", groupName);
        mediaMetaData.put("TimeRangeList", intervals);
        return mediaMetaData;
    }

    public static JSONObject buildInputConfig(String mediaId, String mainGroupName, String openingMediaId, String openingGroupName, String endingMediaId, String endingGroupName) {
        JSONObject inputConfig = new JSONObject();
        JSONArray mediaGroupArray = new JSONArray();
        if (openingMediaId != null) {
            // Configure the intro
            JSONObject openingGroup = new JSONObject();
            openingGroup.put("GroupName", openingGroupName);
            openingGroup.put("MediaArray", Arrays.asList(openingMediaId));
            mediaGroupArray.add(openingGroup);
        }
        JSONObject mediaGroupMain = new JSONObject();
        mediaGroupMain.put("GroupName", mainGroupName);
        mediaGroupMain.put("MediaArray", Arrays.asList(mediaId));
        mediaGroupArray.add(mediaGroupMain);
        if (endingMediaId != null) {
            // Configure the outro
            JSONObject endingGroup = new JSONObject();
            endingGroup.put("GroupName", endingGroupName);
            endingGroup.put("MediaArray", Arrays.asList(endingMediaId));
            mediaGroupArray.add(endingGroup);
        }
        inputConfig.put("MediaGroupArray", mediaGroupArray);
        // Custom background music
        inputConfig.put("BackgroundMusicArray", Arrays.asList("icepublic-0c4475c3936f3a8743850f2da942ceee"));
        return inputConfig;
    }

    public static JSONObject buildEditingConfig(String mediaId, String mainGroupName, JSONArray intervals, String openingMediaId, String openingGroupName, String endingMediaId, String endingGroupName) {
        JSONObject editingConfig = new JSONObject();
        JSONObject mediaConfig = new JSONObject();
        JSONArray mediaMetaDataArray = new JSONArray();
        if (openingMediaId != null) {
            // Configure the in and out points of the intro material as needed
            JSONArray openingIntervals = new JSONArray();
            openingIntervals.add(buildSingleInterval(1.5f, 5.5f));
            mediaMetaDataArray.add(buildMediaMetaData(openingMediaId, openingGroupName, openingIntervals));
        }
        // Configure the in and out points of the main material (clips where faces appear)
        mediaMetaDataArray.add(buildMediaMetaData(mediaId, mainGroupName, intervals));
        if (endingMediaId != null) {
            // Configure the in and out points of the outro material as needed
            JSONArray endingIntervals = new JSONArray();
            endingIntervals.add(buildSingleInterval(1.5f, 5.5f));
            mediaMetaDataArray.add(buildMediaMetaData(endingMediaId, endingGroupName, endingIntervals));
        }
        mediaConfig.put("MediaMetaDataArray", mediaMetaDataArray);
        editingConfig.put("MediaConfig", mediaConfig);
        return editingConfig;
    }
}
```
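The buildIntervals method drops face occurrences shorter than 2 seconds and truncates occurrences longer than 6 seconds; the Method 3 example uses the same rule. To unit-test or tune these thresholds without calling the API, you can isolate the rule in plain Java, as in the following minimal sketch. FaceClipFilter and its Interval type are hypothetical names introduced for illustration and are not part of the IMS SDK; only the 2-second and 6-second limits come from the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper that mirrors the filtering rule in buildIntervals. */
final class FaceClipFilter {

    /** An in/out pair in seconds (hypothetical type, not an IMS SDK class). */
    static final class Interval {
        final float in;
        final float out;

        Interval(float in, float out) {
            this.in = in;
            this.out = out;
        }
    }

    private FaceClipFilter() {
    }

    /**
     * Drops intervals shorter than minSeconds and truncates intervals longer
     * than maxSeconds so that they end maxSeconds after their start time.
     */
    static List<Interval> filter(List<Interval> occurrences, float minSeconds, float maxSeconds) {
        List<Interval> result = new ArrayList<>();
        for (Interval occurrence : occurrences) {
            float duration = occurrence.out - occurrence.in;
            if (duration < minSeconds) {
                continue; // Too short to be useful in a compilation
            }
            float out = duration > maxSeconds ? occurrence.in + maxSeconds : occurrence.out;
            result.add(new Interval(occurrence.in, out));
        }
        return result;
    }
}
```

For example, with limits of 2 and 6 seconds, an occurrence from 10 s to 11 s is dropped, one from 20 s to 24 s is kept unchanged, and one from 30 s to 40 s is cut to end at 36 s.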

Use the console

If you choose to create videos using the console, you can skip "Step 1: Retrieve video clip information using face search" and follow these steps.

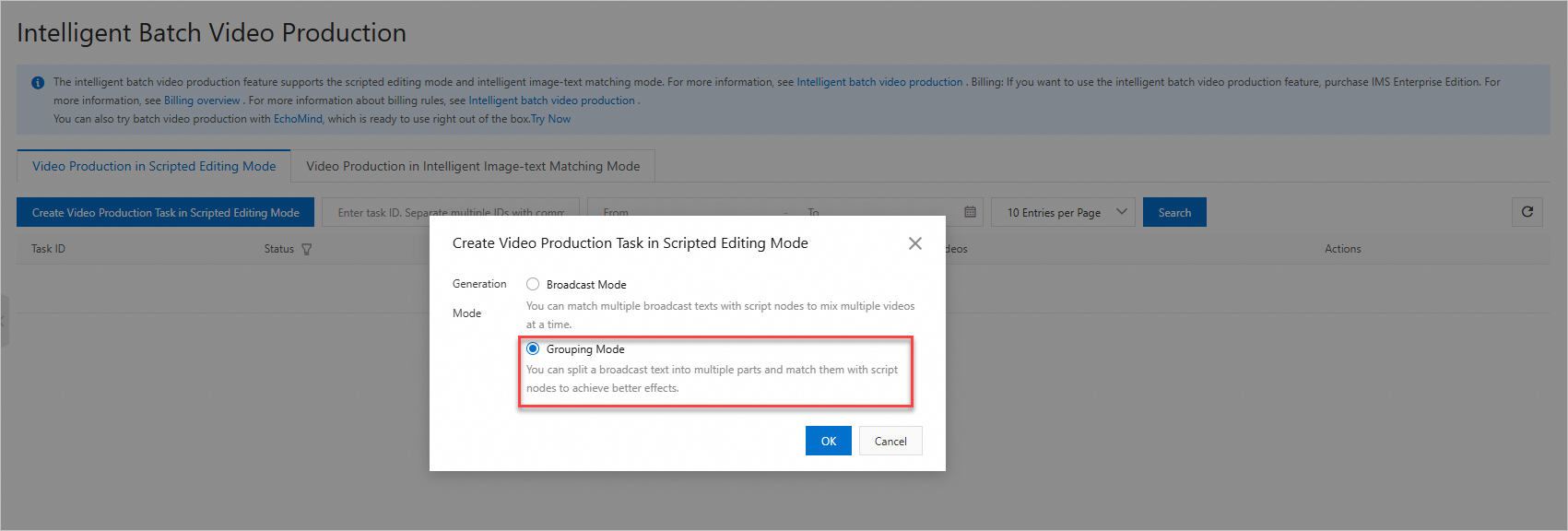

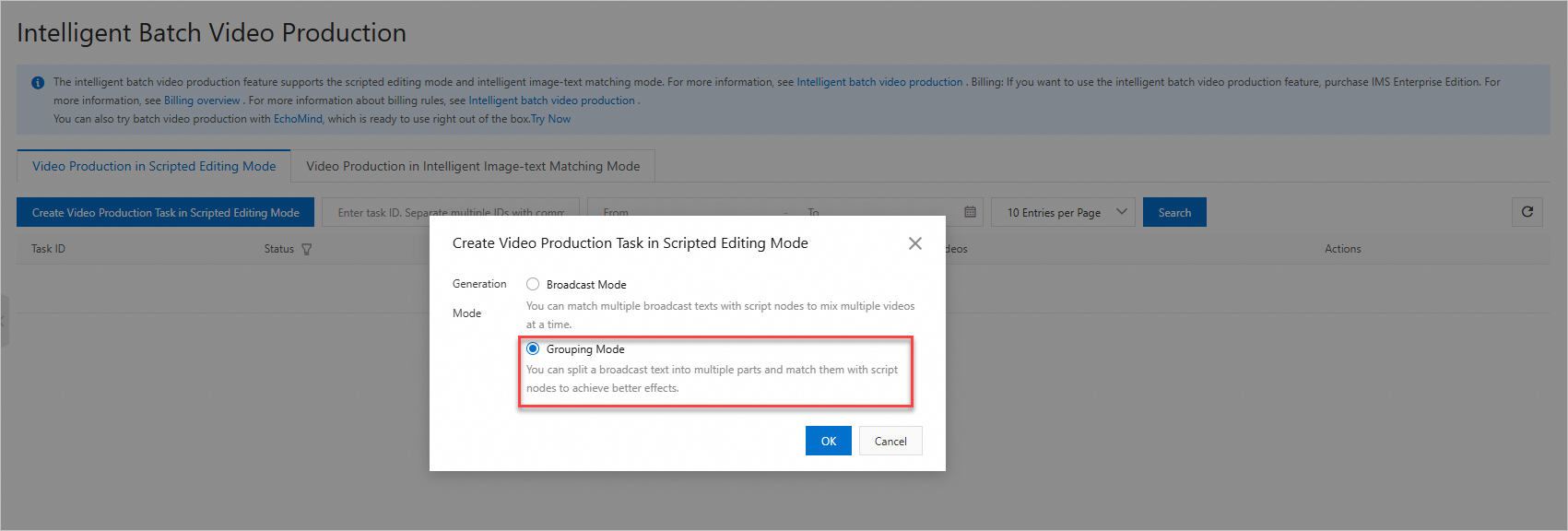

Step 1: In the left-side navigation pane of the console, click Intelligent Batch Video Production. Under Script-to-Video, click Create Task and select a generation mode. Both the Global Scripts and Segmented Scripts modes are applicable. This example uses Segmented Scripts.

Step 2: In the Script Node Configuration section, click Add Script Node > Add Material. In the dialog box that appears, select Face Retrieval and upload a face image to search for matching materials.

Step 3: In the search results list, view the matched clips. Then, click Import Matched Clips and click OK. The matched materials are then added to the script node.

Step 4: To view or adjust the clips matched by face search, hover the mouse pointer over the material and click the settings button. In the dialog box, you can manually adjust the matched clips under Trimming. You can skip this step if you do not need to adjust the in and out points.

After completing these steps, follow the standard procedure for Script-to-Video to configure the title, background music, stickers, logos, and synthesis settings. Then, submit the job.

Method 2: Use Timeline editing

Features

Customizable video content: Timeline provides a high degree of customization, which lets you precisely control every edit point, effect, transition, and audio track. You can freely combine and adjust materials according to your creative needs to produce unique videos.

High flexibility: Timeline editing is not limited by templates. You can change the editing plan at any time as project requirements change, for example to reorder clips, add effects, or process audio.

Steps

Based on the face search results from Step 1, use the Timeline parameter of the SubmitMediaProducingJob operation to edit the face compilation video. For an example, see the sample Timeline parameter below. For more advanced editing, such as effects, transitions, or audio processing, see Basic parameters for video editing and Timeline configuration.

SDK call example
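The following is a minimal sketch, not an official sample, that turns a list of in and out points into a Timeline string with the same shape as the sample Timeline parameter in this section: one video track whose clips are muted with a Volume effect, plus an optional looping background music track. It builds the JSON with StringBuilder only so that it has no dependencies; in a real project you can build the same structure with fastjson, as the other examples do. You would then pass the string as the Timeline parameter of SubmitMediaProducingJob (in the Java SDK, through the request's setTimeline setter) together with an output configuration like the one in Method 3. TimelineBuilder is a hypothetical name, and the sketch assumes that media IDs contain no characters that need JSON escaping.

```java
import java.util.List;

/** Hypothetical helper: builds an IMS Timeline JSON string from face clip intervals. */
final class TimelineBuilder {

    private TimelineBuilder() {
    }

    /**
     * @param mediaId   ID of the source media asset (assumed to need no JSON escaping)
     * @param intervals each element is {in, out} in seconds
     * @param musicId   ID of the background music, or null for no audio track
     */
    static String build(String mediaId, List<double[]> intervals, String musicId) {
        StringBuilder sb = new StringBuilder("{\"VideoTracks\":[{\"VideoTrackClips\":[");
        for (int i = 0; i < intervals.size(); i++) {
            double[] interval = intervals.get(i);
            if (i > 0) {
                sb.append(',');
            }
            // One clip per face occurrence; a Volume effect with Gain 0 mutes its original audio
            sb.append("{\"MediaId\":\"").append(mediaId)
              .append("\",\"In\":").append(interval[0])
              .append(",\"Out\":").append(interval[1])
              .append(",\"Effects\":[{\"Type\":\"Volume\",\"Gain\":0}]}");
        }
        sb.append("]}]");
        if (musicId != null) {
            // Background music on a separate audio track, with LoopMode enabled
            sb.append(",\"AudioTracks\":[{\"AudioTrackClips\":[{\"LoopMode\":true,\"MediaId\":\"")
              .append(musicId)
              .append("\"}]}]");
        }
        return sb.append('}').toString();
    }
}
```

The in and out points could come, for example, from the intervals that buildIntervals produces in the Method 1 example.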


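The following is a sample Timeline parameter. Each clip on the video track is a face clip from the same source media asset, and its Volume effect with a Gain of 0 mutes the clip's original audio. The audio track plays a public background music asset with LoopMode enabled.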

```json
{
  "VideoTracks": [
    {
      "VideoTrackClips": [
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 54.106018,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 56.126015
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 271.47302,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 277.393
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 326.03903,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 331.959
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 372.20602,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 375.126
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 383.03903,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 388.959
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 581.339,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 587.25903
        },
        {
          "MediaId": "b5a003f0cd3f71ed919fe7e7c45b****",
          "In": 602.339,
          "Effects": [{ "Type": "Volume", "Gain": 0 }],
          "Out": 607.293
        }
      ]
    }
  ],
  "AudioTracks": [
    {
      "AudioTrackClips": [
        {
          "LoopMode": true,
          "MediaId": "icepublic-0c4475c3936f3a8743850f2da942ceee"
        }
      ]
    }
  ]
}
```
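To estimate how long the resulting video is, you can add up the clip durations (Out minus In). The following sketch does this arithmetic for the seven clips in the sample Timeline parameter. It assumes that the clips are placed one after another on the track because the sample sets no TimelineIn or TimelineOut values; TimelineDuration is a hypothetical helper, not part of the IMS SDK.

```java
import java.util.Locale;

/** Hypothetical helper: sums the clip durations of a Timeline video track. */
final class TimelineDuration {

    // In and Out points (seconds) copied from the sample Timeline parameter
    static final double[][] SAMPLE_CLIPS = {
        {54.106018, 56.126015},
        {271.47302, 277.393},
        {326.03903, 331.959},
        {372.20602, 375.126},
        {383.03903, 388.959},
        {581.339, 587.25903},
        {602.339, 607.293},
    };

    private TimelineDuration() {
    }

    /** Returns the sum of (Out - In), assuming the clips are placed back to back. */
    static double totalSeconds(double[][] clips) {
        double total = 0;
        for (double[] clip : clips) {
            total += clip[1] - clip[0];
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.printf(Locale.ROOT, "Total: %.2f s%n", totalSeconds(SAMPLE_CLIPS));
    }
}
```

Running main prints `Total: 33.57 s`, so under this assumption the sample produces a video of roughly 33.6 seconds.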

Method 3: Use advanced templates

Features

Efficient and high-quality: The service provides ready-made templates that you can fill with your own materials to create a video. This process significantly reduces production time. You can also design your own templates to improve video quality.

Consistent video style: Templates give your videos a consistent, professional style, so users without professional editing experience can also create appealing videos.

Steps

Based on the face search results from Step 1, you can produce a face compilation video by using an advanced template and the ClipsParam parameter of the SubmitMediaProducingJob operation. The following SDK call example uses the public template IceSys_VETemplate_s100241 and fills its media slots with the clips returned by face search. For more information about using your own advanced templates, see Advanced templates.

SDK call example


```java
package com.example;

import java.util.*;

import com.alibaba.fastjson.JSONArray;
import com.alibaba.fastjson.JSONObject;
import com.aliyun.ice20201109.Client;
import com.aliyun.ice20201109.models.*;
import com.aliyun.teaopenapi.models.Config;

/**
 * You need to add the following Maven dependencies:
 * <dependency>
 *   <groupId>com.aliyun</groupId>
 *   <artifactId>ice20201109</artifactId>
 *   <version>2.3.0</version>
 * </dependency>
 * <dependency>
 *   <groupId>com.alibaba</groupId>
 *   <artifactId>fastjson</artifactId>
 *   <version>1.2.9</version>
 * </dependency>
 */
public class SubmitFaceEditingJobService {

    static final String regionId = "cn-shanghai";
    static final String bucket = "<your-bucket>";

    /*** Submit a face compilation editing job based on face search results ****/
    public static void submitEditingJob(String mediaId, SearchMediaClipByFaceResponse response) throws Exception {
        Client iceClient = initClient();
        JSONArray intervals = buildIntervals(response);

        // Submit an advanced template job. This example uses the public template IceSys_VETemplate_s100241 and configures video segments based on face search results.
        JSONObject clipParams = buildClipParams(mediaId, intervals);
        SubmitMediaProducingJobRequest request = new SubmitMediaProducingJobRequest();
        request.setTemplateId("IceSys_VETemplate_s100241");
        request.setClipsParam(clipParams.toJSONString());
        request.setOutputMediaTarget("oss-object");

        JSONObject outputConfig = new JSONObject();
        outputConfig.put("MediaURL",
                "https://" + bucket + ".oss-" + regionId + ".aliyuncs.com/testTemplate/" + System.currentTimeMillis() + ".mp4");
        request.setOutputMediaConfig(outputConfig.toJSONString());

        SubmitMediaProducingJobResponse jobResponse = iceClient.submitMediaProducingJob(request);
        System.out.println("JobId: " + jobResponse.getBody().getJobId());
    }

    public static Client initClient() throws Exception {
        // An Alibaba Cloud account AccessKey has full permissions for all APIs. We recommend using a RAM user for API calls and daily O&M.
        // This example shows how to store the AccessKey ID and AccessKey secret in environment variables. For configuration instructions, see: https://www.alibabacloud.com/help/en/sdk/developer-reference/v2-manage-access-credentials
        com.aliyun.credentials.Client credentialClient = new com.aliyun.credentials.Client();
        Config config = new Config();
        config.setCredential(credentialClient);
        // To hard-code the AccessKey ID and AccessKey secret, use the following code. However, we strongly recommend that you do not store your AccessKey ID and AccessKey secret in your project code. This can lead to an AccessKey leak and compromise the security of all resources in your account.
        // config.accessKeyId = <The AccessKey ID you created in Step 2>;
        // config.accessKeySecret = <The AccessKey secret you created in Step 2>;
        config.endpoint = "ice." + regionId + ".aliyuncs.com";
        config.regionId = regionId;
        return new Client(config);
    }

    public static JSONArray buildIntervals(SearchMediaClipByFaceResponse response) {
        JSONArray intervals = new JSONArray();
        if (response.getBody().getMediaClipList() == null || response.getBody().getMediaClipList().isEmpty()) {
            return intervals; // No matched clips
        }
        // This example uses only the first matched media asset
        List<SearchMediaClipByFaceResponseBodyMediaClipListOccurrencesInfos> occurrencesInfos =
                response.getBody().getMediaClipList().get(0).getOccurrencesInfos();
        for (SearchMediaClipByFaceResponseBodyMediaClipListOccurrencesInfos occurrence : occurrencesInfos) {
            Float startTime = occurrence.getStartTime();
            Float endTime = occurrence.getEndTime();
            // You can adjust the filtering logic as needed
            // Filter out clips shorter than 2s
            if (endTime - startTime < 2) {
                continue;
            }
            // Truncate clips longer than 6s
            if (endTime - startTime > 6) {
                endTime = startTime + 6;
            }
            JSONObject interval = new JSONObject();
            interval.put("In", startTime);
            interval.put("Out", endTime);
            intervals.add(interval);
        }
        return intervals;
    }

    public static JSONObject buildClipParams(String mediaId, JSONArray intervals) {
        JSONObject clipParams = new JSONObject();
        for (int i = 0; i < intervals.size(); i++) {
            JSONObject interval = intervals.getJSONObject(i);
            // Fill media slot i with the source media; only its start point (clip_start) is set here
            clipParams.put("Media" + i, mediaId);
            clipParams.put("Media" + i + ".clip_start", interval.getFloat("In"));
        }
        return clipParams;
    }
}
```

The following is an example of the ClipsParam parameter:

```json
{
  "Media0": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media0.clip_start": 54.066017,
  "Media1": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media1.clip_start": 67.33301,
  "Media2": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media2.clip_start": 271.47302,
  "Media3": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media3.clip_start": 326.03903,
  "Media4": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media4.clip_start": 372.20602,
  "Media5": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media5.clip_start": 383.03903,
  "Media6": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media6.clip_start": 581.339,
  "Media7": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media7.clip_start": 587.25903,
  "Media8": "b5a003f0cd3f71ed919fe7e7c45b****",
  "Media8.clip_start": 602.339
}
```
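Both SDK examples in this tutorial write the output video to an OSS URL of the form `https://<bucket>.oss-<regionId>.aliyuncs.com/<object key>`. If you build these URLs in several places, a small helper keeps the format consistent and avoids mistakes such as a missing `.aliyuncs.com` suffix. This is a sketch under stated assumptions: OssUrls is a hypothetical name, and it assumes the public endpoint of a bucket in the same region as the IMS client.

```java
/** Hypothetical helper that builds the public endpoint URL of an OSS object. */
final class OssUrls {

    private OssUrls() {
    }

    /** Returns https://{bucket}.oss-{regionId}.aliyuncs.com/{objectKey}. */
    static String objectUrl(String bucket, String regionId, String objectKey) {
        // Strip a leading slash so that the URL does not contain "//"
        String key = objectKey.startsWith("/") ? objectKey.substring(1) : objectKey;
        return "https://" + bucket + ".oss-" + regionId + ".aliyuncs.com/" + key;
    }
}
```

For example, `OssUrls.objectUrl(bucket, regionId, "testTemplate/" + System.currentTimeMillis() + ".mp4")` produces the same kind of MediaURL value as the Method 3 example.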

Case studies

| Method | Result |
|---|---|
| Create a video with Timeline editing | This video is a face compilation of the athlete in the white jersey with the number 5. |
| Create a video with an advanced template | The final video uses transitions and speed effects from the template. |
| Create a video automatically with Script-to-Video (SDK) | This video is a face compilation of the athlete in the white jersey with the number 5. |
| Create a video automatically with Script-to-Video (console) | This video is a face compilation of the athlete in the white jersey with the number 17. |