This topic provides answers to some frequently asked questions about the monitoring and alerting feature and logs.

How do I configure parameters at the log level for a single class?

How do I enable GC logging in the console of fully managed Flink?

What do I do if the TaskManager logs of a DataStream deployment contain a NullPointerException error but do not provide the details of the error stack?
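
As background, the missing stack is most likely caused by the HotSpot option OmitStackTraceInFastThrow, which is enabled by default. After a method that repeatedly throws an implicit exception such as NullPointerException is JIT-compiled, the JVM may throw a preallocated exception that carries no stack trace. The following minimal sketch tries to reproduce this behavior on a local JVM. The class name FastThrowDemo and the iteration count are hypothetical and are not part of the product. Whether and when the stack trace disappears depends on the JVM version and JIT settings, so no particular output is guaranteed.

```java
final class FastThrowDemo {
    // Dereferences the argument; passing null makes the JVM raise an implicit NullPointerException.
    static int lengthOf(String s) {
        return s.length();
    }

    // Returns the first iteration at which a NullPointerException arrived with an
    // empty stack trace, or -1 if every exception carried a stack trace.
    static int firstOmittedTrace(int iterations) {
        for (int i = 0; i < iterations; i++) {
            try {
                lengthOf(null);
            } catch (NullPointerException e) {
                if (e.getStackTrace().length == 0) {
                    return i;
                }
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int hit = firstOmittedTrace(1_000_000); // hypothetical iteration count
        System.out.println(hit < 0
                ? "every NullPointerException carried a stack trace"
                : "stack trace first omitted at iteration " + hit);
    }
}
```

If the same program is run with -XX:-OmitStackTraceInFastThrow, every exception should carry a stack trace, which is the effect that the configuration in the following solution has on the TaskManager JVM.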

On the Deployments page, click the name of the desired deployment. On the Configuration tab, click Edit in the upper-right corner of the Parameters section and add the following code to the Other Configuration field:

env.java.opts: "-XX:-OmitStackTraceInFastThrow"

How do I configure parameters at the log level for a single class?

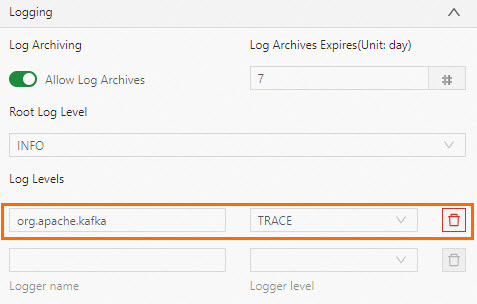

Configure class-level log levels in the Log Levels field in the Logging section of the Configuration tab, not in the Other Configuration field of the Parameters section. For example, when you use the Kafka connector, you can specify log4j.logger.org.apache.kafka.clients.consumer=trace for an ApsaraMQ for Kafka source table and log4j.logger.org.apache.kafka.clients.producer=trace for an ApsaraMQ for Kafka result table in the Log Levels field.

How do I enable GC logging in the console of fully managed Flink?

On the Deployments page, click the name of the desired deployment. On the Configuration tab, click Edit in the upper-right corner of the Parameters section and add the following code to the Other Configuration field:

env.java.opts: >-
  -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:/flink/log/gc.log
  -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=2 -XX:GCLogFileSize=50M

What do I do if a DataStream deployment is not delayed but the values of delay-related metrics for output data indicate a delay in the deployment?

Problem description

The deployment continuously reads data from a Flink source table, and the Kafka connector continuously writes the data to each partition of an ApsaraMQ for Kafka physical table. However, the values of the CurrentEmitEventTimeLag and CurrentFetchEventTimeLag metrics for the DataStream deployment indicate that the deployment is delayed by 52 years.

Cause

The Kafka connector in the DataStream deployment is provided by the Apache Flink community and is not a built-in connector that is supported by Ververica Platform (VVP). Connectors that are provided by the Apache Flink community do not support metric-based monitoring. As a result, the values of the metrics are abnormal.
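
The figure of 52 years is consistent with a lag that is computed against a timestamp of 0. The following worked example is a sketch under that assumption, because this topic does not state how the community connector populates the metrics. If the lag is the current time minus the event time of a record, and a missing event time is treated as 0 (the Unix epoch, 1970-01-01), a reading taken in 2022 yields about 52 years. The class name EventTimeLagDemo, the sample date, and the zero event time are all hypothetical.

```java
import java.time.Duration;
import java.time.Instant;

final class EventTimeLagDemo {
    // Lag defined as observation time minus event time, both in epoch milliseconds.
    static long lagMillis(long nowMillis, long eventTimeMillis) {
        return nowMillis - eventTimeMillis;
    }

    // Converts a lag in milliseconds to whole years of 365.25 days each.
    static long wholeYears(long lagMillis) {
        long millisPerYear = Duration.ofDays(1).toMillis() * 36525 / 100;
        return lagMillis / millisPerYear;
    }

    public static void main(String[] args) {
        // Hypothetical observation date in 2022.
        long now = Instant.parse("2022-06-01T00:00:00Z").toEpochMilli();
        // Assumption: the record carries no usable event time, so 0 is used.
        long missingEventTime = 0L;
        System.out.println(wholeYears(lagMillis(now, missingEventTime)) + " years"); // prints "52 years"
    }
}
```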

Solution

Use the dependencies of connectors that are supported by VVP. For more information, see Ververica Maven Repository.

How do I resolve the issue that the logs generated by using a non-static method cannot be exported to Simple Log Service?

Problem description

Simple Log Service relies on the Logger and Appender logic of Log4j Appender. As a result, logs that are generated by using a non-static method cannot be exported to Simple Log Service.

Solution

Use a static logger, declared as follows:

private static final Logger LOG = LoggerFactory.getLogger(xxx.class);

What do I do if Kafka can receive data that is written from Realtime Compute for Apache Flink but the value in the Records Received column on the Status tab of the related deployment is 0?

Problem description

The deployment has only one operator. A source operator has only output and no input, and a sink operator has only input and no output. In this case, I cannot view the amount of data that is read and written in the deployment topology.

Solution

Split the operators to view the amount of data in the deployment topology. Split the source and sink operators as independent operators from the topology. Then, separately connect the source operator and sink operator with other operators to form a new topology. You can view the data flow and traffic in the new topology.

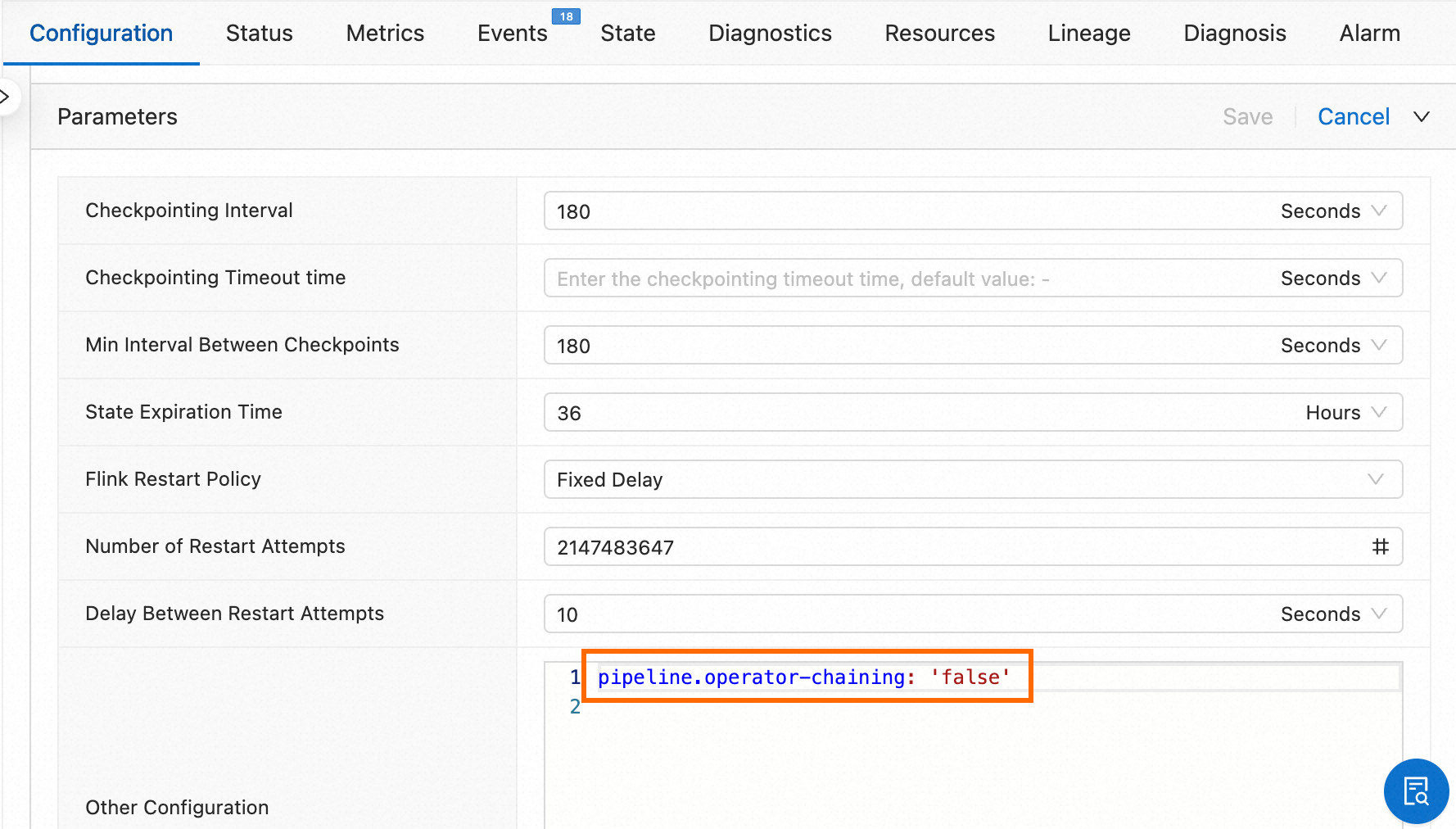

On the Deployments page, click the name of the desired deployment. On the Configuration tab, click Edit in the upper-right corner of the Parameters section and add the following code to the Other Configuration field:

pipeline.operator-chaining: 'false'

What do I do if a deployment startup error is reported after I configure parameters to export the logs of the deployment to Simple Log Service?

Problem description

After I configure parameters to export the logs of the deployment to Simple Log Service, the error message "Failed to start the deployment. Try again." appears during the startup of the deployment and the following error message is also reported:

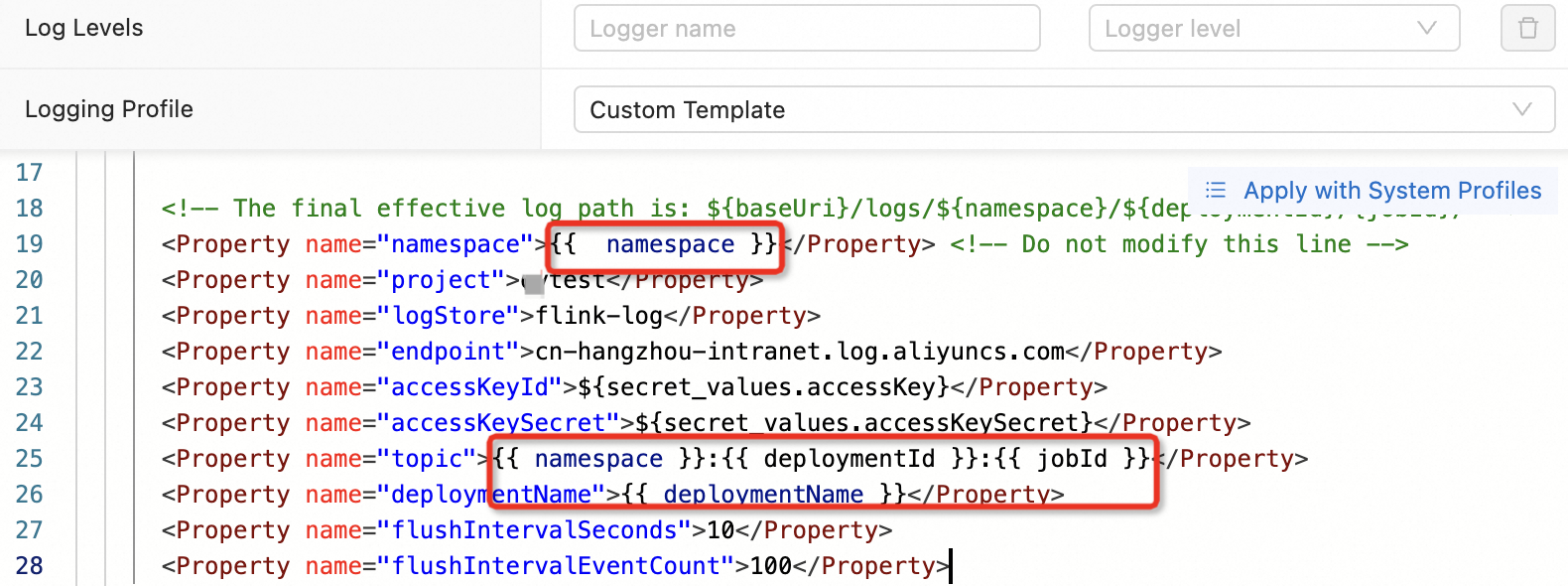

Unknown ApiException {exceptionType=com.ververica.platform.appmanager.controller.domain.TemplatesRenderException, exceptionMessage=Failed to render {userConfiguredLoggers={}, jobId=3fd090ea-81fc-4983-ace1-0e0e7b******, rootLoggerLogLevel=INFO, clusterName=f7dba7ec27****, deploymentId=41529785-ab12-405b-82a8-1b1d73******, namespace=flinktest-default, priorityClassName=flink-p5, deploymentName=test}} 029999 202312121531-8SHEUBJUJU

Cause

The values of the variables in Twig templates, such as namespace and deploymentId, are changed when you configure the parameters to export logs of the deployment to Simple Log Service.

Solution

Reconfigure the parameters that are described in the table in Configure parameters to export logs of a deployment based on your business requirements.