There are two usage modes of instances in Function Compute: on-demand mode and provisioned mode. In both modes, you can configure auto scaling rules based on the limits regarding the number and scaling speed of instances. For provisioned instances, you can configure scheduled scaling and metric-based scaling rules.

Instance scaling limits

Scaling limits for on-demand instances

Function Compute preferentially uses existing instances to process requests. When the existing instances are at full capacity, Function Compute creates new ones to process requests. As the number of requests increases, Function Compute continues to create new instances until enough instances are created to handle incoming requests or the number of instances reaches the upper limit. The scaling of on-demand instances is constrained by the following factors:

Maximum number of instances allowed: By default, each Alibaba Cloud account can run up to 100 instances in a region, including both on-demand and provisioned instances. The actual quota displayed on the General Quotas page in the Quota Center console prevails.

The scaling speed of running instances is limited by both the maximum number of burstable instances allowed and the maximum rate at which the number of instances can increase. For more information about the limits in different regions, see Limits on the scaling speed of instances in different regions.

Burstable instances: instances that can be created immediately. The default upper limit for burstable instances is either 100 or 300, depending on the region.

Instance growth rate: the speed at which the number of instances can increase once the upper limit for burstable instances is reached. The default upper limit for the instance growth rate is either 100 per minute or 300 per minute, depending on the region.

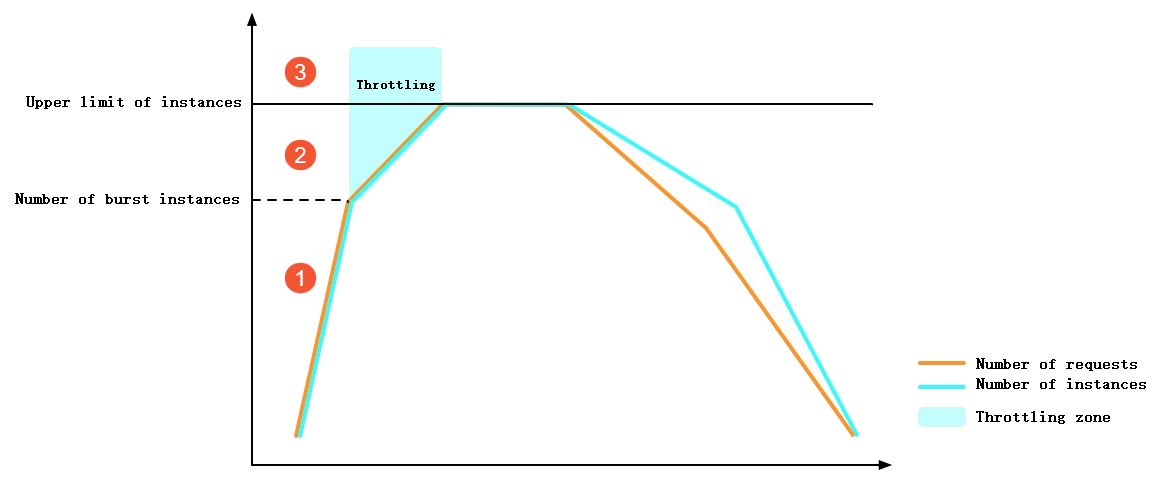

If the instance number or scaling speed goes beyond the limit, Function Compute returns an HTTP 429 status code, indicating that a throttling error has occurred. The following figure shows how Function Compute applies throttling when invocations surge.

①: Function Compute immediately creates instances to handle the surge in requests. Cold starts occur during this process. No throttling errors are reported because the number of burstable instances has not reached the upper limit.

②: The increase in the number of instances is now limited by the instance growth rate, as the upper limit for burstable instances has been reached. Throttling errors are reported for some requests.

③: The maximum number of instances has been reached, resulting in throttling errors for some requests.
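The three phases above can be approximated with a small calculation. The following Python sketch is an illustration only and does not describe how Function Compute implements scaling. The function names and the linear per-minute growth model are assumptions, and the quota of 1,000 instances in the usage lines is a hypothetical adjusted quota, not a default.

```python
def instance_ceiling(minutes_elapsed, burst_limit, growth_per_minute, max_instances):
    """Upper bound on running instances some time after a surge starts.

    Assumed model: up to burst_limit instances are created immediately
    (phase 1), the ceiling then rises by growth_per_minute (phase 2),
    and it never exceeds max_instances (phase 3).
    """
    if minutes_elapsed < 0:
        raise ValueError("minutes_elapsed must be non-negative")
    ceiling = burst_limit + growth_per_minute * minutes_elapsed
    return min(int(ceiling), max_instances)


def is_throttled(instances_needed, minutes_elapsed, burst_limit,
                 growth_per_minute, max_instances):
    """True if demand exceeds the ceiling, which would surface as HTTP 429."""
    ceiling = instance_ceiling(
        minutes_elapsed, burst_limit, growth_per_minute, max_instances
    )
    return instances_needed > ceiling


# Region with a burst limit of 300 and a growth rate of 300 per minute,
# and a hypothetical account quota of 1,000 instances.
print(instance_ceiling(0, 300, 300, 1000))   # 300
print(instance_ceiling(1, 300, 300, 1000))   # 600
print(instance_ceiling(3, 300, 300, 1000))   # 1000
print(is_throttled(500, 0, 300, 300, 1000))  # True
```

Under this model, a surge that needs 500 instances is throttled at first and is fully served about one minute later, once the growth rate has raised the ceiling to 600.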

By default, all functions within an Alibaba Cloud account in the same region share the same scaling limits. To configure the maximum number of instances for a specific function, see Overview of configuring the maximum number of on-demand instances. When the number of running instances exceeds the configured maximum, Function Compute returns a throttling error.
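Because throttling surfaces as an HTTP 429 status code, callers often retry throttled requests after a delay. The following Python sketch shows one common client-side pattern, exponential backoff with jitter. This topic does not prescribe it: the function name `call_with_backoff`, the `invoke` callable, and the delay values are all assumptions for illustration.

```python
import random
import time


def call_with_backoff(invoke, max_attempts=5, base_delay=0.5, sleep=time.sleep):
    """Call invoke() until it returns a status other than 429.

    invoke is any zero-argument callable that returns an HTTP status code.
    Returns the last status code observed, which is still 429 if every
    attempt was throttled.
    """
    status = None
    for attempt in range(max_attempts):
        status = invoke()
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Double the delay on each retry and add up to 10% jitter so
            # that many clients do not retry at the same moment.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    return status


# Simulated invocation: throttled twice, then accepted.
responses = iter([429, 429, 200])
print(call_with_backoff(lambda: next(responses), sleep=lambda seconds: None))
```

The `sleep` parameter is injectable only so that the example can run without waiting. In real use, leave it at the default.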

Scaling limits for provisioned instances

When the number of sudden invocations is too large, throttling errors become inevitable. In addition, the creation of new instances introduces cold starts. Both increase the request handling latency. To mitigate latency, you can reserve instances in advance in Function Compute. These reserved instances are called provisioned instances. The scaling of provisioned instances is unaffected by the limits imposed on on-demand instances. Instead, it is constrained by the following factors.

Maximum number of instances allowed: By default, each Alibaba Cloud account can run up to 100 instances in a region, including both on-demand and provisioned instances. The actual quota displayed on the General Quotas page in the Quota Center console prevails.

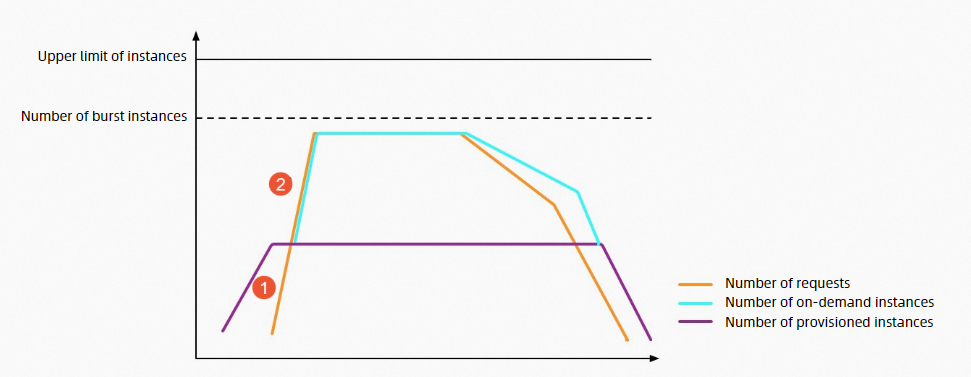

Maximum scaling speed: The default upper limit for the scaling speed of provisioned instances is either 100 per minute or 300 per minute, depending on the region. For more information about the limits in different regions, see Limits on the scaling speed of instances in different regions. The following figure shows how Function Compute applies throttling when provisioned instances are configured in the same loading scenario as the preceding figure.

①: All incoming requests are immediately processed, until the provisioned instances reach their full capacity. During this process, no cold starts occur, and no throttling errors are reported.

②: The provisioned instances are now fully utilized. Function Compute starts to create on-demand instances to handle subsequent requests until the number of burstable instances reaches the upper limit. During this process, cold starts occur, but no throttling errors are reported.
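The order in which capacity is consumed in phases ① and ② can be sketched as follows. This is a simplified model, not the service's scheduler: the function name `split_demand` and the idea of measuring demand as a number of required instances are assumptions for illustration.

```python
def split_demand(instances_needed, provisioned, burst_limit):
    """Split demand across provisioned and on-demand capacity.

    Provisioned instances are used first (no cold starts). The overflow
    goes to on-demand instances, which can be created immediately up to
    burst_limit (cold starts, but no throttling). Anything beyond that is
    subject to the instance growth rate and may be throttled.
    """
    if min(instances_needed, provisioned, burst_limit) < 0:
        raise ValueError("all arguments must be non-negative")
    on_provisioned = min(instances_needed, provisioned)
    overflow = instances_needed - on_provisioned
    on_demand = min(overflow, burst_limit)
    return {
        "provisioned": on_provisioned,
        "on_demand": on_demand,
        "beyond_burst": overflow - on_demand,
    }


print(split_demand(40, 50, 300))   # phase 1: provisioned instances only
print(split_demand(200, 50, 300))  # phase 2: on-demand instances added
print(split_demand(500, 50, 300))  # burst limit exceeded
```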

Limits on the scaling speed of instances in different regions

Region | Maximum number of burstable instances | Maximum instance growth rate |

China (Hangzhou), China (Shanghai), China (Beijing), China (Zhangjiakou), and China (Shenzhen) | 300 | 300 per minute |

Other regions | 100 | 100 per minute |

In the same region, the scaling speed limits do not distinguish between provisioned and on-demand instances.

By default, each Alibaba Cloud account can run up to 100 instances in a region. The actual quota displayed on the General Quotas page in the Quota Center console prevails. You can also apply for a quota adjustment in the Quota Center console.

GPU-accelerated instances have a slower scaling speed compared to CPU instances. Therefore, we recommend that you reserve GPU-accelerated instances in advance using provisioned mode.

Configure auto scaling rules

Create an auto scaling rule

Log on to the Function Compute console. In the left-side navigation pane, click Services & Functions.

In the top navigation bar, select a region. On the Services page, click the desired service.

On the Functions page, click the function that you want to modify.

On the Function Details page, click the Auto Scaling tab and click Create Rule.

On the page that appears, configure the following parameters and click Create.

For on-demand instances

Set the Minimum Number of Instances parameter to 0 and the Maximum Number of Instances parameter to a value that best suits your business requirements. If you leave the Maximum Number of Instances parameter unset, the maximum allowed will be based on the limits applicable to your Alibaba Cloud account and the current region.

Note: The Idle Mode, Scheduled Setting Modification, and Metric-based Setting Modification parameters take effect for provisioned instances.

For provisioned instances

Parameter | Description |

Basic Settings

Version or Alias | Select the version or alias for which you want to create provisioned instances. Note: You can create provisioned instances only for the LATEST version. |

Minimum Number of Instances | Enter the number of provisioned instances that you want to create. The minimum number of instances equals the number of provisioned instances to be created. Note: Setting a minimum number of function instances reduces cold starts and the response time of function invocation requests. This helps improve the performance of your online services, especially those that are sensitive to response latency. |

Idle Mode | Enable or disable the idle mode feature based on your business requirements. By default, this feature is disabled. If you enable this feature, provisioned instances are allocated vCPU resources only while they are processing requests. When they stop processing requests, the vCPU resources are frozen. Function Compute keeps sending incoming requests to the same instance based on the instance concurrency setting until the instance is at capacity. For example, if you set the instance concurrency to 50 and have 10 idle provisioned instances, 40 incoming requests are all sent to the same instance, and only that instance transitions to the active state. If you disable this feature, provisioned instances remain active at all times: they are allocated vCPU resources regardless of whether they are processing requests. |

Maximum Number of Instances | Set a maximum number of instances that best suits your business requirements. Both provisioned and on-demand instances are counted: the maximum number of instances equals the number of provisioned instances to be created plus the maximum number of on-demand instances allowed. Note: Setting a maximum number of instances prevents a single function from occupying too many instances due to excessive invocations. This helps protect backend resources and avoids unexpected charges. If you leave this parameter unset, the maximum number of instances allowed is based on the limits applicable to your Alibaba Cloud account and the current region. |

(Optional) Scheduled Setting Modification: You can create scheduled scaling rules to configure provisioned instances more flexibly. A scheduled scaling policy automatically adjusts the number of provisioned instances to a specified value at a designated time, meeting the specific concurrency requirements of your service. For more information about the configuration principles and examples, see Scheduled Setting Modification.

Policy Name | Enter a policy name. |

Minimum Number of Instances | Enter the number of provisioned instances that you want for the specified time range. |

Schedule Expression (UTC) | Enter the expression of the schedule. Example: cron(0 0 20 * * *). For more information, see Parameter description. |

Effective Time (UTC) | Specify the start and end time for the scheduled scaling rule to take effect. |

(Optional) Metric-based Setting Modification: A metric-based scaling policy automatically adjusts the number of provisioned instances every minute based on the utilization of instance concurrency and various function resources. For more information about the configuration principles and examples, see Metric-based Setting Modification.

Policy Name | Enter a policy name. |

Minimum Range of Instances | Specify the range for the minimum number of provisioned instances. |

Utilization Type | This parameter is available only for GPU-accelerated instances. Select the metric type that determines how the scaling policy works. For more information about the auto scaling policies of GPU-accelerated instances, see Create an auto scaling policy for provisioned GPU-accelerated instances. |

Concurrency Usage Threshold | Configure the threshold that triggers scaling. When the utilization of instance concurrency or of the specified function resources falls below the threshold, Function Compute scales in the provisioned instances. When the utilization exceeds the threshold, Function Compute scales out the provisioned instances. |

Effective Time (UTC) | Specify the start and end time for the metric-based scaling rule to take effect. |

Go to the Auto Scaling tab of a function to view the auto scaling rules that you create for it.
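The Idle Mode example in the parameter descriptions above (instance concurrency of 50, 10 idle provisioned instances, 40 incoming requests) can be checked with a short calculation. The following Python sketch assumes that requests fill one instance to its concurrency limit before the next instance is used, as that description states. The function name `active_instances` is hypothetical.

```python
def active_instances(concurrent_requests, instance_concurrency, provisioned_instances):
    """Number of provisioned instances that become active in idle mode.

    Requests are packed onto one instance until it reaches
    instance_concurrency, then onto the next one, and so on.
    """
    if instance_concurrency < 1:
        raise ValueError("instance_concurrency must be at least 1")
    if concurrent_requests < 0 or provisioned_instances < 0:
        raise ValueError("counts must be non-negative")
    # Integer ceiling division avoids floating-point rounding.
    needed = -(-concurrent_requests // instance_concurrency)
    return min(needed, provisioned_instances)


print(active_instances(40, 50, 10))   # 1: all 40 requests fit on one instance
print(active_instances(120, 50, 10))  # 3: two full instances and one partly used
```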

Modify or delete an auto scaling rule

On the Auto Scaling tab, find the rule that you want to manage, and click Modify or Delete in the Actions column to modify or delete the rule.

Set the Minimum Number of Instances parameter to 0 if you no longer need any provisioned instances.

Auto scaling of provisioned instances

In addition to setting a fixed number of provisioned instances, you can make flexible adjustments by configuring the Scheduled Setting Modification and Metric-based Setting Modification parameters. This approach helps improve instance utilization.

Scheduled Setting Modification

Definition: A scheduled scaling policy automatically adjusts the number of provisioned instances to a specified value at a designated time, meeting the specific concurrency requirements of your service.

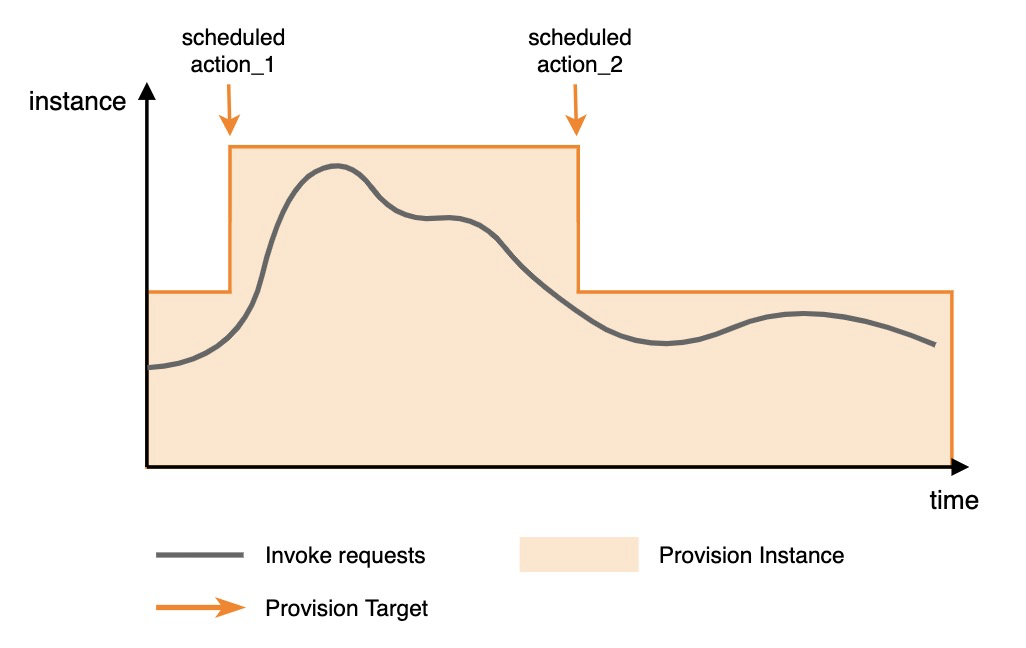

Applicable scenarios: Choose scheduled scaling when your service has distinct periodic patterns or predictable traffic peaks. When the number of concurrent invocations exceeds the capacity defined by the scheduled scaling policy, all excess requests will be directed to on-demand instances for processing. For more information, see Instance types and usage modes.

Example: The following figure shows two scheduled actions for instance scaling. The first scheduled action scales out the provisioned instances before the traffic peak, and the second scheduled action scales in the provisioned instances after the traffic peak.

The following sample code shows how to configure scheduled scaling by using the PutProvisionConfig operation. In this example, a function named function_1 in a service named service_1 is configured to automatically scale in and out. The configurations take effect from 10:00:00 on November 1, 2022 to 10:00:00 on November 30, 2022 (UTC). The number of provisioned instances is adjusted to 50 at 20:00 and to 10 at 22:00 (UTC) every day.

{
  "ServiceName": "service_1",
  "FunctionName": "function_1",
  "Qualifier": "alias_1",
  "ScheduledActions": [
    {
      "Name": "action_1",
      "StartTime": "2022-11-01T10:00:00Z",
      "EndTime": "2022-11-30T10:00:00Z",
      "TargetValue": 50,
      "ScheduleExpression": "cron(0 0 20 * * *)"
    },
    {
      "Name": "action_2",
      "StartTime": "2022-11-01T10:00:00Z",
      "EndTime": "2022-11-30T10:00:00Z",
      "TargetValue": 10,
      "ScheduleExpression": "cron(0 0 22 * * *)"
    }
  ]
}

The following table describes the parameters in the sample code.

Parameter | Description |

Name | The name of the scheduled auto scaling task. |

StartTime | The time when the scaling policy starts to take effect, in UTC. |

EndTime | The time when the scaling policy expires, in UTC. |

TargetValue | The target number of instances. |

ScheduleExpression | The expression that specifies when to run the scheduled scaling task. The following formats are supported:

|

The following table describes the fields of the cron expression in the format of Seconds Minutes Hours Day-of-month Month Day-of-week.

Table 1. Field description

Field | Valid values | Allowed special characters |

Seconds | 0 to 59 | None |

Minutes | 0 to 59 | , - * / |

Hours | 0 to 23 | , - * / |

Day-of-month | 1 to 31 | , - * ? / |

Month | 1 to 12 or JAN to DEC | , - * / |

Day-of-week | 1 to 7 or MON to SUN | , - * ? |

Table 2. Special character description

Character | Description | Example |

* | Indicates any or each. | In the |

, | Specifies a list of values. | In the |

- | Specifies a range. | In the |

? | Indicates an uncertain value. | This character is used together with specified values. For example, when you specify a date without tying it to a particular day of the week, you can use this character in the |

/ | Specifies increments. n/m indicates an increment of m starting from the position of n. | In the |
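To make the six-field layout concrete, the following Python sketch splits a ScheduleExpression value such as cron(0 0 20 * * *) into named fields. It is a minimal illustration, not a validator used by Function Compute: the function name `parse_cron` and the field keys are assumptions, and the sketch does not check value ranges or special characters.

```python
import re

# Field order taken from the cron format described above.
FIELD_NAMES = ("seconds", "minutes", "hours", "day_of_month", "month", "day_of_week")


def parse_cron(schedule_expression):
    """Split a cron(...) schedule expression into its six named fields."""
    match = re.fullmatch(r"cron\((.*)\)", schedule_expression.strip())
    if match is None:
        raise ValueError("expected an expression of the form cron(...)")
    fields = match.group(1).split()
    if len(fields) != len(FIELD_NAMES):
        raise ValueError(f"expected {len(FIELD_NAMES)} fields, got {len(fields)}")
    return dict(zip(FIELD_NAMES, fields))


print(parse_cron("cron(0 0 20 * * *)"))
# {'seconds': '0', 'minutes': '0', 'hours': '20', 'day_of_month': '*', 'month': '*', 'day_of_week': '*'}
```

For the expression used in the scheduled scaling example, the hours field is 20 and every date field is a wildcard, which corresponds to 20:00:00 UTC every day.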

Metric-based Setting Modification

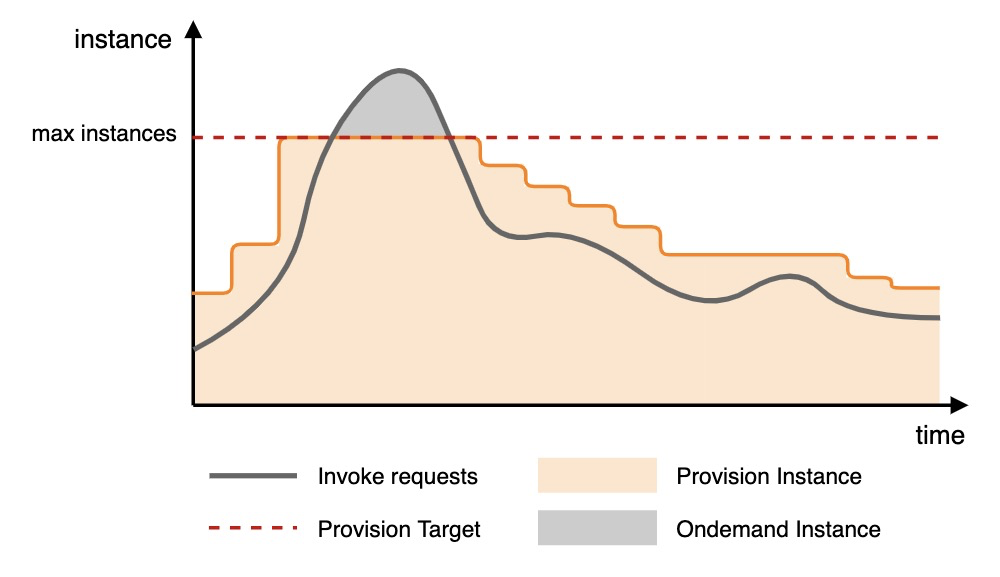

Definition: A metric-based auto scaling policy dynamically adjusts the number of provisioned instances based on the metrics it tracks.

Functionality: After you configure a metric-based scaling policy, Function Compute periodically collects the concurrency utilization metrics or resource utilization metrics for the provisioned instances. It uses these metrics in conjunction with the scaling trigger value you specify to control the scaling of the instances, ensuring that the number of instances better matches the actual resource usage.

Principle: The number of provisioned instances is adjusted every minute based on the metric values.

When the metric value exceeds the trigger value, or threshold, you specify for scale-out, Function Compute rapidly increases the number of provisioned instances to the preset target for scale-out.

Conversely, when the metric value falls below the threshold you set for scale-in, Function Compute gradually adjusts the number of provisioned instances toward the preset target for scale-in.

If the maximum and minimum numbers of provisioned instances are configured, Function Compute scales the instances within that range. When the number of instances reaches either the maximum or minimum, scaling stops.

Example: The following figure shows an example of auto scaling based on the utilization of instance concurrency.

When the traffic volume increases, the scale-out threshold is triggered and Function Compute starts to increase the number of provisioned instances. The scale-out stops when the number reaches the upper limit. Excess requests are sent to on-demand instances for processing.

When the traffic volume decreases, the scale-in threshold is triggered and Function Compute starts to reduce the number of provisioned instances.

The concurrency utilization metric only includes the concurrency of provisioned instances and does not include the concurrency of on-demand instances.

The metric is calculated using the following formula: The number of concurrent requests handled by provisioned instances/The maximum number of concurrent requests that all provisioned instances can handle. The metric value ranges from 0 to 1.

The maximum number of concurrent requests that all provisioned instances can handle, or maximum concurrency, is determined by the instance concurrency setting. For more information, see Configure instance concurrency.

Each instance processes a single request at a time: Maximum concurrency = Number of instances.

Each instance concurrently processes multiple requests: Maximum concurrency = Number of instances × Number of requests concurrently processed by one instance.
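The metric formula and the two maximum concurrency cases above translate directly into code. The following Python sketch is an illustration with hypothetical function names. It raises an error when the request count exceeds the capacity, on the assumption that provisioned instances cannot handle more requests than their maximum concurrency.

```python
def max_concurrency(provisioned_instances, instance_concurrency=1):
    """Maximum concurrent requests that all provisioned instances can handle."""
    return provisioned_instances * instance_concurrency


def concurrency_utilization(concurrent_requests, provisioned_instances,
                            instance_concurrency=1):
    """Concurrency utilization of provisioned instances, from 0 to 1."""
    capacity = max_concurrency(provisioned_instances, instance_concurrency)
    if capacity <= 0:
        raise ValueError("there must be at least one provisioned instance")
    if not 0 <= concurrent_requests <= capacity:
        raise ValueError("concurrent_requests must be between 0 and the capacity")
    return concurrent_requests / capacity


# One request per instance: 5 requests on 10 instances.
print(concurrency_utilization(5, 10))        # 0.5
# 50 requests per instance: 300 requests on 10 instances.
print(concurrency_utilization(300, 10, 50))  # 0.6
```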

Target values for scaling:

The values are determined by the current metric value, metric target, current number of provisioned instances, and scale-in coefficient.

Calculation principle: Function Compute scales in provisioned instances based on the scale-in coefficient, which ranges from 0 (excluded) to 1. The scale-in coefficient is a system parameter used to slow down the scale-in speed. It does not require manual configuration. The target values for scaling tasks are the smallest integers that are greater than or equal to the following calculation results:

Scale-out target value = Current provisioned instances × (Current metric value/Metric target)

Scale-in target value = Current provisioned instances × Scale-in coefficient × (1 - Current metric value/Metric target)

Example: If the current metric value is 80%, the metric target is 40%, and the current number of provisioned instances is 100, the scale-out target value is calculated as follows: 100 × (80%/40%) = 200. The number of provisioned instances is increased to 200 so that the metric value returns to a level near the 40% target.
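The scale-out formula above can be reproduced in a few lines. The following Python sketch covers only scale-out, because the scale-in coefficient is a system parameter whose value is not given here. The function name `scale_out_target` and the optional `max_capacity` cap are assumptions for illustration. Percentages are passed as integers so that the arithmetic is exact.

```python
import math
from fractions import Fraction


def scale_out_target(current_instances, metric_percent, target_percent,
                     max_capacity=None):
    """Smallest integer >= current_instances * (metric value / metric target).

    If max_capacity is given, the result is capped at that value, because
    scaling stops when the configured maximum is reached.
    """
    if target_percent <= 0:
        raise ValueError("target_percent must be positive")
    raw = Fraction(current_instances) * Fraction(metric_percent, target_percent)
    target = math.ceil(raw)
    if max_capacity is not None:
        target = min(target, max_capacity)
    return target


print(scale_out_target(100, 80, 40))                    # 200
print(scale_out_target(100, 80, 40, max_capacity=150))  # 150
print(scale_out_target(7, 70, 60))                      # 9, rounded up from 8.17
```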

The following sample code shows how to configure metric-based scaling by using the PutProvisionConfig operation. In this example, a function named function_1 in a service named service_1 is configured to automatically scale in and out based on the ProvisionedConcurrencyUtilization metric. The configurations take effect from 10:00:00 on November 1, 2022 to 10:00:00 on November 30, 2022 (UTC). When concurrency utilization exceeds 60%, the number of provisioned instances is increased, with an upper limit of 100. When concurrency utilization falls below 60%, the number of provisioned instances is reduced, with a lower limit of 10.

{
  "ServiceName": "service_1",
  "FunctionName": "function_1",
  "Qualifier": "alias_1",
  "TargetTrackingPolicies": [
    {
      "Name": "action_1",
      "StartTime": "2022-11-01T10:00:00Z",
      "EndTime": "2022-11-30T10:00:00Z",
      "MetricType": "ProvisionedConcurrencyUtilization",
      "MetricTarget": 0.6,
      "MinCapacity": 10,
      "MaxCapacity": 100
    }
  ]
}

The following table describes the parameters in the sample code.

Parameter | Description |

Name | The name of the configured metric-based auto scaling task. |

StartTime | The time when the scaling policy starts to take effect, in UTC. |

EndTime | The time when the scaling policy expires, in UTC. |

MetricType | The metric that is tracked. In this example, the value is set to ProvisionedConcurrencyUtilization. |

MetricTarget | The threshold for metric-based auto scaling. |

MinCapacity | The minimum number of provisioned instances for scale-in. |

MaxCapacity | The maximum number of provisioned instances for scale-out. |
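When you generate this configuration programmatically, a few checks before sending it can catch common mistakes such as a lower limit that exceeds the upper limit. The following Python sketch only builds and prints the JSON body shown in the sample code and does not call PutProvisionConfig. The helper name `target_tracking_policy` and its validation rules are assumptions for illustration, not documented API constraints.

```python
import json


def target_tracking_policy(name, start_time, end_time, metric_target,
                           min_capacity, max_capacity,
                           metric_type="ProvisionedConcurrencyUtilization"):
    """Build one TargetTrackingPolicies entry after basic sanity checks."""
    # Assumed check: the metric ranges from 0 to 1, so the target should too.
    if not 0 < metric_target <= 1:
        raise ValueError("metric_target must be greater than 0 and at most 1")
    # Assumed check: the lower limit must not exceed the upper limit.
    if not 0 <= min_capacity <= max_capacity:
        raise ValueError("min_capacity must be between 0 and max_capacity")
    return {
        "Name": name,
        "StartTime": start_time,
        "EndTime": end_time,
        "MetricType": metric_type,
        "MetricTarget": metric_target,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }


payload = {
    "ServiceName": "service_1",
    "FunctionName": "function_1",
    "Qualifier": "alias_1",
    "TargetTrackingPolicies": [
        target_tracking_policy(
            "action_1", "2022-11-01T10:00:00Z", "2022-11-30T10:00:00Z",
            metric_target=0.6, min_capacity=10, max_capacity=100,
        )
    ],
}
print(json.dumps(payload, indent=2))
```

Serializing with `json.dumps` also guarantees syntactically valid JSON, which avoids errors such as a trailing comma after the last field.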

References

For more information about the basic concepts and billing methods of on-demand and provisioned instances, see Instance types and usage modes.

After you configure auto scaling policies for provisioned instances, you can check the FunctionProvisionedCurrentInstance metric to see how many provisioned instances are occupied in function executions. For more information, see Function-specific metrics.