Data Lake Formation (DLF) is a fully managed service for storing and managing Paimon metadata and data. It supports multiple storage optimization policies to provide secure and high-performance data lake management. This topic describes how to use an Alibaba Cloud DLF catalog in EMR Serverless StarRocks.

Background

Alibaba Cloud Data Lake Formation (DLF) is a fully managed platform that provides unified metadata, data storage, and management services.

Use DLF

Prerequisites

You have created a Serverless StarRocks instance. For more information, see Create an instance.

The instance must be version 3.3 or later, with a Minor Version of 3.3.8-1.99 or later.

Note: You can view the minor version in the Version Information section on the Instance Details page. If the minor version is earlier than 3.3.8-1.99, you must update it. For more information, see Update the minor version.

You have created a data catalog in DLF.

Example: Use a DLF Catalog

Step 1: Add a user in Serverless StarRocks

DLF uses Resource Access Management (RAM) for access control. By default, StarRocks users do not have any permissions on DLF resources. You must add an existing RAM user and grant the required permissions to that user. If you have not created a RAM user, see Create a RAM user.

Go to the EMR Serverless StarRocks instance list page.

Log on to the E-MapReduce console.

In the navigation pane on the left, choose the entry that opens the EMR Serverless StarRocks instance list.

In the top menu bar, select the required region.

On the Instance List page, find your instance and click Connect in the Actions column. For more information, see Connect to a StarRocks instance using EMR StarRocks Manager.

You can connect to the StarRocks instance using the admin user or a StarRocks super administrator account.

In the left-side menu, open the page where StarRocks users are managed, and then click Create User.

In the Create User dialog box, configure the following parameters and click OK.

User Source: Select RAM User.

Username: Select the RAM user that you want to add (dlf-test in this example).

Password and Confirm Password: Enter a custom password.

Roles: Keep the default value public.

Step 2: Grant permissions on the catalog in DLF

Log on to the Data Lake Formation console.

On the Catalogs page, click the name of your catalog.

Click the Permissions tab, and then click Grant Permissions.

From the Select DLF User drop-down list, select the RAM user (dlf-test).

Set Preset Permission Type to Custom and grant the ALL permission on the current data catalog and all its resources to the user.

Click OK.

Step 3: Create a DLF Catalog in Serverless StarRocks

Paimon Catalog

Connect to the instance. For more information, see Connect to a StarRocks instance using EMR StarRocks Manager.

Important: Reconnect to the StarRocks instance using the RAM user that you added in Step 1 (dlf-test). You will use this user to create an SQL query to access the DLF foreign table.

To create an SQL query, go to the Queries page in SQL Editor and click the icon for creating a query.

Create a Paimon Catalog. Enter the following SQL statement and click Run.

CREATE EXTERNAL CATALOG `dlf_catalog`
PROPERTIES (
    'type' = 'paimon',
    'uri' = 'http://cn-hangzhou-vpc.dlf.aliyuncs.com',
    'paimon.catalog.type' = 'rest',
    'paimon.catalog.warehouse' = 'StarRocks_test',
    'token.provider' = 'dlf'
);

Read and write data.

Create a database.

CREATE DATABASE IF NOT EXISTS dlf_catalog.sr_dlf_db;Create a data table.

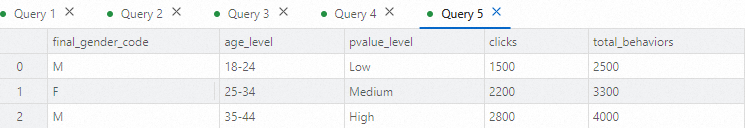

CREATE TABLE dlf_catalog.sr_dlf_db.ads_age_pvalue_analytics( final_gender_code STRING COMMENT 'Gender', age_level STRING COMMENT 'Age level', pvalue_level STRING COMMENT 'Consumption level', clicks INT COMMENT 'Number of clicks', total_behaviors INT COMMENT 'Total number of behaviors' );Insert data.

INSERT INTO dlf_catalog.sr_dlf_db.ads_age_pvalue_analytics (final_gender_code, age_level, pvalue_level, clicks, total_behaviors) VALUES ('M', '18-24', 'Low', 1500, 2500), ('F', '25-34', 'Medium', 2200, 3300), ('M', '35-44', 'High', 2800, 4000);Query data.

SELECT * FROM dlf_catalog.sr_dlf_db.ads_age_pvalue_analytics;The following figure shows the query result.
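As an optional check, the catalog and table from this example can be inspected with standard StarRocks statements. The following is a minimal sketch, assuming that dlf_catalog, sr_dlf_db, and ads_age_pvalue_analytics exist as created above and that the connected user holds the permissions granted in Step 2. The aggregate query at the end is only an illustration and is not part of the original procedure.

```sql
-- List the catalogs visible to the current user; dlf_catalog should be among them.
SHOW CATALOGS;

-- Display the statement that was used to create the catalog.
SHOW CREATE CATALOG dlf_catalog;

-- Switch the session to the DLF catalog and database so that
-- table names no longer need to be fully qualified.
SET CATALOG dlf_catalog;
USE sr_dlf_db;
SHOW TABLES;

-- Illustrative aggregate over the sample rows inserted above.
SELECT final_gender_code,
       SUM(clicks)          AS total_clicks,
       SUM(total_behaviors) AS total_behaviors
FROM ads_age_pvalue_analytics
GROUP BY final_gender_code;
```

Switching the session catalog is a convenience only; the fully qualified names used in the steps above work without it.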

Iceberg Catalog

Connect to the instance. For more information, see Connect to a StarRocks instance using EMR StarRocks Manager.

Important: Reconnect to the StarRocks instance using the RAM user that you added in Step 1 (dlf-test). You will use this user to create an SQL query to access the DLF foreign table.

On the Queries page of the SQL Editor, click the icon for creating a query to create an SQL query.

Create an Iceberg Catalog. Enter the following SQL statement and click Run.

CREATE EXTERNAL CATALOG `iceberg_catalog`
PROPERTIES (
    'type' = 'iceberg',
    'iceberg.catalog.type' = 'dlf_rest',
    'uri' = 'http://cn-hangzhou-vpc.dlf.aliyuncs.com/iceberg',
    'warehouse' = 'iceberg_test',
    'rest.signing-region' = 'cn-hangzhou'
);

Query data.

Note: Iceberg foreign tables are read-only in StarRocks. You can execute SELECT queries, but you cannot write data to Iceberg tables from StarRocks.

SELECT * FROM iceberg_catalog.`default`.test_iceberg;

The following figure shows the query result.
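Because the Iceberg catalog is read-only from StarRocks, it can help to browse its metadata before querying. The following is a minimal sketch, assuming the iceberg_catalog, the default database, and the test_iceberg table from this example already exist in DLF.

```sql
-- List the databases that the Iceberg catalog exposes.
SHOW DATABASES FROM iceberg_catalog;

-- List the tables in the `default` database of that catalog.
SHOW TABLES FROM iceberg_catalog.`default`;

-- Inspect the column names and types of the example table.
DESC iceberg_catalog.`default`.test_iceberg;
```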

Use DLF 1.0 (legacy)

Prerequisites

You have created a Serverless StarRocks instance. For more information, see Create an instance.

You have created a data catalog in DLF 1.0 (legacy). For more information, see Data Catalog.

Create a catalog

Create a Hive Catalog

Syntax

CREATE EXTERNAL CATALOG <catalog_name>
[COMMENT <comment>]
PROPERTIES
(
    "type" = "hive",
    GeneralParams,
    MetastoreParams
)

Parameters

catalog_name: The name of the Hive catalog. This parameter is required. The name must meet the following requirements:

It must start with a letter and can contain only letters (a-z or A-Z), digits (0-9), and underscores (_).

The total length cannot exceed 64 characters.

comment: The description of the Hive catalog. This parameter is optional.

type: The type of the data source. Set this to hive.

GeneralParams: A set of parameters for general settings. GeneralParams includes the following parameter.

| Parameter | Required | Description |
| --- | --- | --- |
| enable_recursive_listing | No | Specifies whether StarRocks recursively reads data from files in a table or partition directory, including its subdirectories. Valid values: true (default): recursively traverses the directory. false: reads data only from files at the current level of the table or partition directory. |

MetastoreParams: Parameters related to how StarRocks accesses the metadata of the Hive cluster.

| Property | Description |
| --- | --- |
| hive.metastore.type | The type of metadata service used by Hive. Set this to dlf. |
| dlf.catalog.id | The ID of an existing data catalog in DLF 1.0. This parameter takes effect only when hive.metastore.type is set to dlf. If the dlf.catalog.id parameter is not specified, the system uses the default DLF Catalog. |

Example

CREATE EXTERNAL CATALOG hive_catalog
PROPERTIES
(
    "type" = "hive",
    "hive.metastore.type" = "dlf",
    "dlf.catalog.id" = "sr_dlf"
);

For more information about Hive Catalogs, see Hive Catalog.
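The optional comment and the enable_recursive_listing parameter described above can be combined with the metastore parameters in one statement. The following is a minimal sketch: the catalog name hive_catalog_flat and the comment text are hypothetical, and sr_dlf is reused from the example above as the DLF 1.0 catalog ID.

```sql
-- Hypothetical catalog name and comment; adjust both to your environment.
CREATE EXTERNAL CATALOG hive_catalog_flat
COMMENT "Hive catalog on DLF 1.0 without recursive listing"
PROPERTIES
(
    "type" = "hive",
    -- Read only files at the current level of each table or partition directory.
    "enable_recursive_listing" = "false",
    "hive.metastore.type" = "dlf",
    "dlf.catalog.id" = "sr_dlf"
);

-- Confirm that the databases of the DLF catalog are visible through the new catalog.
SHOW DATABASES FROM hive_catalog_flat;
```

Leaving enable_recursive_listing out keeps the default of true, which is usually what you want when partitions contain nested subdirectories.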

Create an Iceberg Catalog

Syntax

CREATE EXTERNAL CATALOG <catalog_name>
[COMMENT <comment>]
PROPERTIES
(
    "type" = "iceberg",
    MetastoreParams
)

Parameters

catalog_name: The name of the Iceberg catalog. This parameter is required. The name must meet the following requirements:

It must consist of letters (a-z or A-Z), digits (0-9), or underscores (_), and must start with a letter.

The total length cannot exceed 64 characters.

The catalog name is case-sensitive.

comment: The description of the Iceberg catalog. This parameter is optional.

type: The type of the data source. Set this to iceberg.

MetastoreParams: The parameters for StarRocks to access the metadata service of the Iceberg cluster.

| Property | Description |
| --- | --- |
| iceberg.catalog.type | The type of catalog in Iceberg. The value must be dlf. |
| dlf.catalog.id | The ID of an existing data catalog in DLF. If you do not configure the dlf.catalog.id parameter, the system uses the default DLF catalog. |

Example

CREATE EXTERNAL CATALOG iceberg_catalog_hms
PROPERTIES
(
    "type" = "iceberg",
    "iceberg.catalog.type" = "dlf",
    "dlf.catalog.id" = "sr_dlf"
);

For more information about Iceberg Catalogs, see Iceberg Catalog.
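As noted in the parameter description, dlf.catalog.id can be omitted, in which case the system uses the default DLF catalog. The following is a minimal sketch of that variant; the catalog name iceberg_catalog_default is hypothetical.

```sql
-- Hypothetical catalog name. No dlf.catalog.id is set, so the
-- default DLF catalog is used, as described in the parameter table.
CREATE EXTERNAL CATALOG iceberg_catalog_default
PROPERTIES
(
    "type" = "iceberg",
    "iceberg.catalog.type" = "dlf"
);

-- Check which databases the default DLF catalog exposes.
SHOW DATABASES FROM iceberg_catalog_default;
```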

Create a Paimon Catalog

Syntax

CREATE EXTERNAL CATALOG <catalog_name>
[COMMENT <comment>]
PROPERTIES
(
    "type" = "paimon",
    CatalogParams,
    StorageCredentialParams
);

Parameters

catalog_name: The name of the Paimon catalog. This parameter is required. The name must meet the following requirements:

It must start with a letter and can contain only letters (a-z or A-Z), digits (0-9), or underscores (_).

The total length cannot exceed 64 characters.

comment: The description of the Paimon catalog. This parameter is optional.

type: The type of the data source. Set this parameter to paimon.

CatalogParams: The parameters for StarRocks to access the metadata of the Paimon cluster.

| Property | Required | Description |
| --- | --- | --- |
| paimon.catalog.type | Yes | The type of the data source. The value is dlf. |
| paimon.catalog.warehouse | Yes | The storage path of the warehouse where Paimon data is stored. HDFS, OSS, and OSS-HDFS are supported. The format for OSS or OSS-HDFS is oss://<yourBucketName>/<yourPath>. Important: If you use OSS or OSS-HDFS as the warehouse, you must configure the aliyun.oss.endpoint parameter. For more information, see StorageCredentialParams: Parameters for StarRocks to access the file storage of the Paimon cluster. |
| dlf.catalog.id | No | The ID of an existing data catalog in DLF. If you do not configure the dlf.catalog.id parameter, the system uses the default DLF catalog. |

StorageCredentialParams: The parameters for StarRocks to access the file storage of the Paimon cluster.

If you use HDFS as the storage system, you do not need to configure StorageCredentialParams.

If you use OSS or OSS-HDFS, you must configure StorageCredentialParams.

"aliyun.oss.endpoint" = "<YourAliyunOSSEndpoint>"

The parameters are described in the following table.

| Property | Description |
| --- | --- |
| aliyun.oss.endpoint | The endpoint of OSS or OSS-HDFS. OSS: Go to the Overview page of your bucket and find the endpoint in the Port section. You can also see OSS regions and endpoints to view the endpoint of the corresponding region. For example, oss-cn-hangzhou.aliyuncs.com. OSS-HDFS: Go to the Overview page of your bucket and find the endpoint for OSS-HDFS in the Port section. For example, the endpoint for the China (Hangzhou) region is cn-hangzhou.oss-dls.aliyuncs.com. |

Important: After you configure this parameter, you must also go to the Parameter Configuration page in the EMR Serverless StarRocks console. Then, modify the fs.oss.endpoint parameter in core-site.xml and jindosdk.cfg to be consistent with the value of aliyun.oss.endpoint.

Example

CREATE EXTERNAL CATALOG paimon_catalog
PROPERTIES
(
    "type" = "paimon",
    "paimon.catalog.type" = "dlf",
    "paimon.catalog.warehouse" = "oss://<yourBucketName>/<yourPath>",
    "dlf.catalog.id" = "paimon_dlf_test"
);

For more information about Paimon Catalogs, see Paimon Catalog.
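Because the warehouse in the example above is on OSS, the parameter description indicates that aliyun.oss.endpoint must also be set. The following is a minimal sketch of the same catalog with that property added. The catalog name paimon_catalog_oss is hypothetical, and the endpoint is the China (Hangzhou) OSS example value from the table above, so replace it with the endpoint of your own bucket's region.

```sql
-- Hypothetical catalog name; the bucket name and path placeholders are kept as-is.
CREATE EXTERNAL CATALOG paimon_catalog_oss
PROPERTIES
(
    "type" = "paimon",
    "paimon.catalog.type" = "dlf",
    "paimon.catalog.warehouse" = "oss://<yourBucketName>/<yourPath>",
    "dlf.catalog.id" = "paimon_dlf_test",
    -- Example OSS endpoint for China (Hangzhou); for OSS-HDFS the table above
    -- gives cn-hangzhou.oss-dls.aliyuncs.com instead.
    "aliyun.oss.endpoint" = "oss-cn-hangzhou.aliyuncs.com"
);
```

Remember that fs.oss.endpoint in core-site.xml and jindosdk.cfg must match whatever value is used here.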

References

For more information about Paimon Catalogs, see Paimon Catalog.