When you create an E-MapReduce (EMR) cluster, the EMR Doctor environment is installed automatically, and the job collection feature that health status evaluation relies on is enabled by default. Some client settings can override the job collection configurations and make them ineffective. This topic describes how to append the EMR Doctor settings for each engine type to your client configuration so that EMR Doctor can collect jobs as expected.

EMR Doctor settings

In most cases, you do not need to configure EMR Doctor parameters, because the client settings are configured automatically when an EMR cluster is created. However, if you set or modify any of the parameters in the following table for a job, your value overrides the cluster default for that parameter, and the EMR Doctor setting that the default contains is lost. In this case, you must manually append the EMR Doctor setting listed in the table to the value that you set.

| Engine name | Parameter | Appended EMR Doctor setting |

|---|---|---|

| MapReduce | yarn.app.mapreduce.am.command-opts |

|

| mapreduce.map.java.opts | ||

| mapreduce.reduce.java.opts | ||

| Tez | tez.task.launch.cmd-opts |

|

| tez.am.launch.cmd-opts | ||

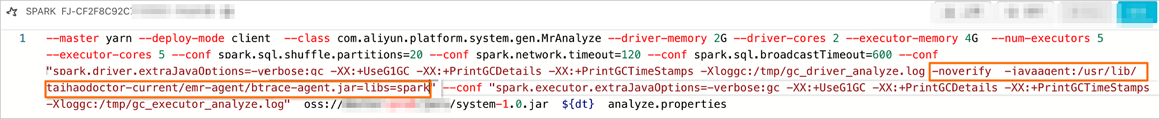

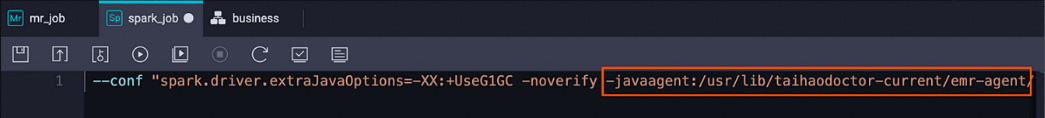

| Spark | spark.driver.extraJavaOptions |

|

| spark.executor.extraJavaOptions | ||

| spark.yarn.am.extraJavaOptions |
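The append rule described above can be sketched in a few lines of Python. This is a minimal illustration, not an EMR tool: `append_doctor_setting` and `job_conf` are hypothetical names, and `EMR_DOCTOR_SETTING` is a placeholder that you would replace with the actual value from the Appended EMR Doctor setting column for your engine.

```python
# Placeholder only: copy the real value from the
# "Appended EMR Doctor setting" column for your engine.
EMR_DOCTOR_SETTING = "<appended-EMR-Doctor-setting>"


def append_doctor_setting(custom_opts: str, doctor_setting: str) -> str:
    """Return custom_opts with doctor_setting appended exactly once."""
    custom_opts = custom_opts.strip()
    if doctor_setting in custom_opts:
        return custom_opts  # already present, do not add it twice
    if not custom_opts:
        return doctor_setting  # nothing custom was set, use the setting alone
    return f"{custom_opts} {doctor_setting}"


# Hypothetical job-level override of one parameter from the table.
job_conf = {"mapreduce.map.java.opts": "-Xmx2048m"}
job_conf["mapreduce.map.java.opts"] = append_doctor_setting(
    job_conf["mapreduce.map.java.opts"], EMR_DOCTOR_SETTING
)
print(job_conf["mapreduce.map.java.opts"])
```

The helper keeps your own JVM options first and adds the EMR Doctor setting after them, so the custom value and the collection setting are both passed to the job.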

Use the job collection feature of EMR Doctor for EMR nodes in DataWorks

If you set any of the parameters listed in the preceding table on EMR nodes that you schedule in DataWorks, you must append the corresponding EMR Doctor settings to those parameters.

Use the job collection feature of EMR Doctor in DolphinScheduler

We recommend that you run the scheduling system in an EMR gateway environment, because a scheduling system that runs there has access to the information that job collection requires, such as the EMR Doctor software packages.

If DolphinScheduler is deployed in the gateway environment, you can add the EMR Doctor settings that are described in the preceding table to the parameters that you configure in the Optional Parameter section when you define a workflow. This way, EMR Doctor can collect jobs in the workflow when the workflow runs and analyze the jobs later.
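As a rough sketch of what such an Optional Parameter value might look like for a Spark task, the following Python snippet builds `--conf key=value` arguments in the form that `spark-submit` accepts. It assumes that the field is passed through to `spark-submit` on a shell command line, which you should verify for your DolphinScheduler version. `spark_conf_args` is a hypothetical helper, and `EMR_DOCTOR_SETTING` is a placeholder for the real value from the preceding table.

```python
import shlex
from typing import Mapping

# Placeholder only: copy the real value from the preceding table.
EMR_DOCTOR_SETTING = "<appended-EMR-Doctor-setting>"


def spark_conf_args(custom_opts: Mapping[str, str], doctor_setting: str) -> str:
    """Build --conf arguments with doctor_setting appended to each value."""
    parts = []
    for key, value in custom_opts.items():
        merged = f"{value} {doctor_setting}".strip()
        # Quote key=value so the space-separated JVM options stay one argument.
        parts.append("--conf " + shlex.quote(f"{key}={merged}"))
    return " ".join(parts)


# Hypothetical custom JVM options set for the workflow's Spark task.
print(spark_conf_args(
    {"spark.driver.extraJavaOptions": "-Xss4m"},
    EMR_DOCTOR_SETTING,
))
```

Quoting matters here because each Java options value contains spaces; without it, the shell would split the appended setting into a separate argument.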

Use the job collection feature of EMR Doctor when you develop data by using Data Platform in the old EMR console