OSS-HDFS (JindoFS) lets you use the JindoFS CLI to configure RootPolicy, which maps a custom prefix to OSS-HDFS based on your business requirements. This way, you can run jobs on OSS-HDFS without modifying the original hdfs:// access prefix. This topic describes how to use RootPolicy to access OSS-HDFS.

Background information

JindoFS CLI is an executable program used to access OSS-HDFS. Its functions are similar to those of ossutil, the command-line tool provided by Alibaba Cloud Object Storage Service (OSS). You can use the JindoFS CLI to perform common operations, such as reading metadata, reading or writing streams, creating directories, and copying files. The JindoFS CLI also supports functions that are specific to OSS-HDFS, such as exporting inventories. In addition to common HDFS Shell commands, it provides OSS-HDFS-specific methods that the JindoSDK CLI does not support. The JindoFS CLI can be used only with OSS-HDFS.

Prerequisites

An E-MapReduce (EMR) cluster that contains the OSS-HDFS service is created. For more information, see Create a cluster.

OSS-HDFS is activated. For more information, see Enable OSS-HDFS.

Procedure

Log on to the master node of the created cluster. For more information, see Log on to a cluster.

Configure the AccessKey pair.

Download the JindoFS CLI.

Upload the downloaded package to a directory of your choice on the EMR cluster and decompress the package.

The following sample code shows how to decompress the jindofs-sdk-6.2.5-linux.tar.gz package and go to its bin directory. If you use a different version of JindoFS, replace the package name in the code accordingly.

```shell
tar zxf jindofs-sdk-6.2.5-linux.tar.gz
cd jindofs-sdk-6.2.5-linux/bin
```

Create a configuration file named jindofs.cfg in the bin directory and configure the AccessKey pair of your Alibaba Cloud account or the AccessKey pair of a RAM user that has the required permissions.

```ini
[client]
# Configure the AccessKey ID and AccessKey secret that are used to access OSS-HDFS.
fs.oss.accessKeyId = <access key>
fs.oss.accessKeySecret = <access secret>
# Configure the endpoint that is used to access OSS-HDFS. Example: cn-hangzhou.oss-dls.aliyuncs.com.
fs.oss.endpoint = <OSS-HDFS endpoint>
fs.oss.data.lake.storage.enable = true
# EMR Credential provider for password-free access
# fs.oss.provider.format = JSON
```

Note: After you decompress the package, you can find the sample configuration file jindofs.cfg.template in the conf/ directory.
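When you prepare more than one node, editing jindofs.cfg by hand is easy to get wrong. The following is a minimal sketch, not an official tool, that writes the same [client] keys from environment variables. The variable names OSS_ACCESS_KEY_ID, OSS_ACCESS_KEY_SECRET, and OSS_HDFS_ENDPOINT and the function name write_jindofs_cfg are hypothetical choices for this example; JindoFS itself does not read them. The optional commented-out lines of the sample file are omitted.

```shell
#!/usr/bin/env bash
# Minimal sketch: generate jindofs.cfg from environment variables.
# Assumption: OSS_ACCESS_KEY_ID, OSS_ACCESS_KEY_SECRET, and OSS_HDFS_ENDPOINT are
# hypothetical variable names used only by this script, not by JindoFS.

write_jindofs_cfg() {
  local target_dir="${1:-}"
  if [ -z "$target_dir" ]; then
    echo "usage: write_jindofs_cfg <target_dir>" >&2
    return 2
  fi

  # Refuse to write a partial configuration if any required value is missing.
  local var
  for var in OSS_ACCESS_KEY_ID OSS_ACCESS_KEY_SECRET OSS_HDFS_ENDPOINT; do
    if [ -z "${!var:-}" ]; then
      echo "error: $var is not set" >&2
      return 1
    fi
  done

  mkdir -p "$target_dir" || return 1
  local cfg="$target_dir/jindofs.cfg"

  # Same keys as the sample [client] section. umask 077 keeps a newly created
  # file private, because it holds an AccessKey secret.
  (
    umask 077
    cat > "$cfg" <<EOF
[client]
fs.oss.accessKeyId = ${OSS_ACCESS_KEY_ID}
fs.oss.accessKeySecret = ${OSS_ACCESS_KEY_SECRET}
fs.oss.endpoint = ${OSS_HDFS_ENDPOINT}
fs.oss.data.lake.storage.enable = true
EOF
  ) || return 1

  # Also tighten the mode if the file already existed before this run.
  chmod 600 "$cfg"
}
```

For example, running `OSS_ACCESS_KEY_ID=... OSS_ACCESS_KEY_SECRET=... OSS_HDFS_ENDPOINT=cn-hangzhou.oss-dls.aliyuncs.com write_jindofs_cfg "$PWD"` from the bin directory writes jindofs.cfg there, readable only by the current user.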

Configure the environment variable.

```shell
export JINDOSDK_CONF_DIR=<JINDOSDK_CONF_DIR>
```

Replace <JINDOSDK_CONF_DIR> with the absolute path of the directory that contains the jindofs.cfg configuration file.

Configure RootPolicy.

You can run the setRootPolicy command to register an access address that contains a custom prefix for a bucket.

```shell
./jindofs admin -setRootPolicy oss://<bucket_name>.<dls_endpoint>/ hdfs://<ns_name>/
```

Parameters in the command:

<bucket_name>: the name of the bucket for which OSS-HDFS is enabled. In this example, only the root directory of the bucket is supported.

<ns_name>: the name of the namespace in which OSS-HDFS resides. The value is a non-empty string, such as dir.

<dls_endpoint>: the endpoint of OSS-HDFS. For example, the endpoint of OSS-HDFS in the China (Hangzhou) region is cn-hangzhou.oss-dls.aliyuncs.com.

If you do not want to specify <dls_endpoint> every time you run the setRootPolicy command, you can add the endpoint to the core-site.xml file of Hadoop by using one of the following methods. Method 1 sets the endpoint for all buckets, and Method 2 sets it only for the bucket named <bucket_name>.

Method 1

```xml
<configuration>
  <property>
    <name>fs.oss.endpoint</name>
    <value><dls_endpoint></value>
  </property>
</configuration>
```

Method 2

```xml
<configuration>
  <property>
    <name>fs.oss.bucket.<bucket_name>.endpoint</name>
    <value><dls_endpoint></value>
  </property>
</configuration>
```
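The placeholders in the setRootPolicy command are easy to mistype, for example by dropping the dot between the bucket name and the endpoint or a trailing slash. The following minimal sketch assembles the command from its three parameters. It assumes that it runs from the bin directory of the decompressed package and always includes the endpoint. The function name set_root_policy and the DRY_RUN switch, which prints the command instead of running it, are conventions of this example and not JindoFS options.

```shell
#!/usr/bin/env bash
# Minimal sketch: build the setRootPolicy command from its parameters.
# Assumptions: run from the bin directory of the JindoFS package; DRY_RUN=1 is a
# convention of this script (not a JindoFS option) that only prints the command.

set_root_policy() {
  local bucket="${1:-}" endpoint="${2:-}" ns="${3:-}"
  if [ -z "$bucket" ] || [ -z "$endpoint" ] || [ -z "$ns" ]; then
    echo "usage: set_root_policy <bucket_name> <dls_endpoint> <ns_name>" >&2
    return 2
  fi

  # oss://<bucket_name>.<dls_endpoint>/ is the bucket root;
  # hdfs://<ns_name>/ is the custom prefix registered for it.
  local -a cmd=(./jindofs admin -setRootPolicy "oss://${bucket}.${endpoint}/" "hdfs://${ns}/")

  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

With a hypothetical bucket named examplebucket, `DRY_RUN=1 set_root_policy examplebucket cn-hangzhou.oss-dls.aliyuncs.com dir` prints `./jindofs admin -setRootPolicy oss://examplebucket.cn-hangzhou.oss-dls.aliyuncs.com/ hdfs://dir/`, so you can review the command before running it without DRY_RUN.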

Configure the discovery address of an access policy and the implementation class of a scheme

To integrate Hadoop with OSS through OSS-HDFS, you must add the following configuration items to the core-site.xml file of Hadoop-Common on the Services tab of the desired cluster.

```xml
<configuration>
  <property>
    <name>fs.accessPolicies.discovery</name>
    <value>oss://<bucket_name>.<dls_endpoint>/</value>
  </property>
  <property>
    <name>fs.AbstractFileSystem.hdfs.impl</name>
    <value>com.aliyun.jindodata.hdfs.HDFS</value>
  </property>
  <property>
    <name>fs.hdfs.impl</name>
    <value>com.aliyun.jindodata.hdfs.JindoHdfsFileSystem</value>
  </property>
</configuration>
```

If you want to configure the discovery address of the access policy for multiple buckets, separate the oss://<bucket_name>.<dls_endpoint>/ addresses in the value of fs.accessPolicies.discovery with commas (,).

Run the following command to check whether RootPolicy is successfully configured:

```shell
hadoop fs -ls hdfs://<ns_name>/
```

If results similar to the following are returned, RootPolicy is successfully configured:

```
drwxr-x--x - hdfs hadoop 0 2023-01-05 12:27 hdfs://<ns_name>/apps
drwxrwxrwx - spark hadoop 0 2023-01-05 12:27 hdfs://<ns_name>/spark-history
drwxrwxrwx - hdfs hadoop 0 2023-01-05 12:27 hdfs://<ns_name>/tmp
drwxrwxrwx - hdfs hadoop 0 2023-01-05 12:27 hdfs://<ns_name>/user
```

Use a custom prefix to access OSS-HDFS.

After you restart services such as Hive and Spark, you can access OSS-HDFS by using a custom prefix.
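When several buckets need custom prefixes, the value of fs.accessPolicies.discovery in core-site.xml holds one oss://<bucket_name>.<dls_endpoint>/ address per bucket, separated by commas, while the two implementation classes stay the same. The following minimal sketch prints these properties for a list of buckets. The function name discovery_properties is hypothetical, and the sketch assumes that all buckets share one OSS-HDFS endpoint.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the core-site.xml properties for one or more buckets.
# Assumption: every bucket uses the same OSS-HDFS endpoint (<dls_endpoint>).

discovery_properties() {
  if [ "$#" -lt 2 ]; then
    echo "usage: discovery_properties <dls_endpoint> <bucket_name> [<bucket_name>...]" >&2
    return 2
  fi
  local endpoint="$1"
  shift

  # One oss://<bucket_name>.<dls_endpoint>/ address per bucket.
  local -a addresses=()
  local bucket
  for bucket in "$@"; do
    addresses+=("oss://${bucket}.${endpoint}/")
  done

  # Join the addresses with commas; IFS is changed only inside the subshell.
  local joined
  joined="$(IFS=,; printf '%s' "${addresses[*]}")"

  cat <<EOF
<property>
  <name>fs.accessPolicies.discovery</name>
  <value>${joined}</value>
</property>
<property>
  <name>fs.AbstractFileSystem.hdfs.impl</name>
  <value>com.aliyun.jindodata.hdfs.HDFS</value>
</property>
<property>
  <name>fs.hdfs.impl</name>
  <value>com.aliyun.jindodata.hdfs.JindoHdfsFileSystem</value>
</property>
EOF
}
```

The printed property elements go inside the existing configuration element of core-site.xml. Generating the list in one place keeps the discovery value consistent with the buckets for which you ran setRootPolicy.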

Other features of RootPolicy

Delete registered access addresses that contain a custom prefix specified for a bucket

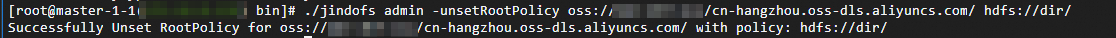

You can run the

unsetRootPolicycommand to delete all registered access addresses that contain a custom prefix specified for a bucket../jindofs admin -unsetRootPolicy oss://<bucket_name>.<dls_endpoint>/ hdfs://<ns_name>/Example:

Query registered access addresses that contain a custom prefix specified for a bucket

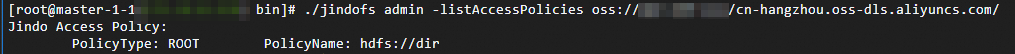

You can run the

listAccessPoliciescommand to query all registered access addresses that contain a custom prefix specified for a bucket../jindofs admin -listAccessPolicies oss://<bucket_name>.<dls_endpoint>/Example:
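The unsetRootPolicy and listAccessPolicies commands take the same oss://<bucket_name>.<dls_endpoint>/ bucket address, and only unsetRootPolicy also needs the hdfs://<ns_name>/ prefix. The following minimal sketch wraps both commands. It assumes that it runs from the bin directory of the decompressed package; the function name root_policy_admin and the DRY_RUN switch, which prints the command instead of running it, are conventions of this example and not JindoFS options.

```shell
#!/usr/bin/env bash
# Minimal sketch: wrap the unsetRootPolicy and listAccessPolicies commands.
# Assumptions: run from the bin directory of the JindoFS package; DRY_RUN=1 is a
# convention of this script (not a JindoFS option) that only prints the command.

root_policy_admin() {
  local action="${1:-}" bucket="${2:-}" endpoint="${3:-}" ns="${4:-}"
  if [ -z "$bucket" ] || [ -z "$endpoint" ]; then
    echo "usage: root_policy_admin <unset|list> <bucket_name> <dls_endpoint> [ns_name]" >&2
    return 2
  fi

  local bucket_root="oss://${bucket}.${endpoint}/"
  local -a cmd
  case "$action" in
    unset)
      # Deleting a registered address also needs the custom prefix hdfs://<ns_name>/.
      if [ -z "$ns" ]; then
        echo "error: unset requires <ns_name>" >&2
        return 2
      fi
      cmd=(./jindofs admin -unsetRootPolicy "$bucket_root" "hdfs://${ns}/")
      ;;
    list)
      cmd=(./jindofs admin -listAccessPolicies "$bucket_root")
      ;;
    *)
      echo "error: unknown action '$action' (expected unset or list)" >&2
      return 2
      ;;
  esac

  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, with a hypothetical bucket named examplebucket, `root_policy_admin list examplebucket cn-hangzhou.oss-dls.aliyuncs.com` runs the query, and adding DRY_RUN=1 in front of an unset call lets you review the deletion before it takes effect.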