This topic describes the node label feature of YARN and how to use the feature. This topic also provides answers to some frequently asked questions about the feature.

Overview

The node label feature allows you to manage the nodes on which NodeManagers are deployed in a cluster based on different partitions. One node can belong to only one partition. You can use the node label feature to divide the cluster into multiple partitions that contain different nodes. By default, the partition parameter is set to an empty string for a node (partition=""). This means that the node belongs to the DEFAULT partition.

The partitions can be classified into the following types:

Exclusive partition: Containers can be allocated to a node of an exclusive partition only if the partition is exactly matched.

Non-exclusive partition: Containers can be allocated to a node of a non-exclusive partition when the partition is exactly matched. If no partition is specified or the DEFAULT partition is specified as the partition to which containers are allocated, containers can be allocated to a node of a non-exclusive partition that has idle resources.
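As a minimal sketch of how the two partition types are declared: in Apache Hadoop, `yarn rmadmin -addToClusterNodeLabels` accepts an optional `(exclusive=true)` or `(exclusive=false)` suffix for each label, and a label is exclusive if the suffix is omitted. The helper name `label_spec` and the partition names BATCH and SHARED are hypothetical. The function only builds the argument string and does not contact ResourceManager.

```shell
# Build one label entry for `yarn rmadmin -addToClusterNodeLabels`.
# Usage: label_spec NAME [true|false]
# The second argument is the exclusivity and defaults to true.
label_spec() {
  printf '%s(exclusive=%s)\n' "$1" "${2:-true}"
}

# Example, to be run as the hadoop user on a cluster node:
#   yarn rmadmin -addToClusterNodeLabels "$(label_spec BATCH),$(label_spec SHARED false)"
```

After the labels are added, `yarn cluster --list-node-labels` should list them together with their exclusivity.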

Only Capacity Scheduler supports the node label feature. You can configure schedulers or the node-label-expression parameters of a compute engine to allocate containers in a queue to partitions that are accessible to the queue.

For more information about node labels, see YARN Node Labels.

Limits

If your cluster is of a minor version earlier than E-MapReduce (EMR) V5.11.1 or EMR V3.45.1, and the yarn.node-labels.configuration-type configuration item in the yarn-site.xml file is set to centralized, you can follow the instructions in this topic to use the node label feature.

If your cluster is of EMR V5.11.1, EMR V3.45.1, or a later minor version, you can manage YARN partitions in the EMR console. For more information, see Manage YARN partitions in the EMR console.

Use the node label feature

Enable the node label feature in the EMR console

On the Configure tab of the YARN service page, click the yarn-site.xml tab, add the configuration items that are described in the following table, and then click Save. Then, restart ResourceManager to make the configurations take effect.

Key | Value | Description |

yarn.node-labels.enabled | true | Specifies whether to enable the node label feature. |

yarn.node-labels.fs-store.root-dir | /tmp/node-labels | Specifies the directory in which the data of node labels is stored. By default, node labels are configured in centralized mode. In this mode, you can specify the storage directory of node labels. |

To illustrate how the node label feature works, this topic describes how to configure node labels in centralized mode. In EMR V5.11.1, EMR V3.45.1, or a later minor version, the yarn.node-labels.configuration-type parameter is set to distributed by default. In this case, you do not need to run the commands manually. Instead, you can manage YARN partitions in the EMR console. For more information, see Manage YARN partitions in the EMR console. For more information about node labels, see "YARN Node Labels" in Apache Hadoop documentation.

If you set the yarn.node-labels.fs-store.root-dir parameter to a directory instead of a URL, the file system specified by the fs.defaultFS parameter is used. This is equivalent to setting the parameter to ${fs.defaultFS}/tmp/node-labels. In most cases, the file system used in EMR is Hadoop Distributed File System (HDFS).
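The equivalence between a plain directory and a ${fs.defaultFS}-prefixed URL can be sketched as follows. The helper name `resolve_store_dir` is hypothetical, and the function only mimics the resolution rule described above for absolute directories. It does not read any cluster configuration.

```shell
# Print the location that a yarn.node-labels.fs-store.root-dir value refers to.
# $1: value of fs.defaultFS
# $2: value of yarn.node-labels.fs-store.root-dir
resolve_store_dir() {
  case "$2" in
    *://*) printf '%s\n' "$2" ;;             # already a full URL: used as-is
    /*)    printf '%s%s\n' "${1%/}" "$2" ;;  # absolute directory: resolved against fs.defaultFS
    *)     echo "expected a URL or an absolute directory: $2" >&2; return 1 ;;
  esac
}
```

On a cluster node, the first argument can be obtained with `hdfs getconf -confKey fs.defaultFS`, for example `resolve_store_dir "$(hdfs getconf -confKey fs.defaultFS)" /tmp/node-labels`.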

Run commands to create partitions and map nodes to partitions

If you want the system to automatically specify a partition for each of the nodes on which NodeManagers reside in a node group that is scaled out, you can create one or more partitions and add the yarn rmadmin -replaceLabelsOnNode command to the bootstrap script of the node group based on your business requirements. This way, the commands are run after NodeManagers are started.

Sample code:

    # Log on as the default administrator hadoop of YARN.
    sudo su - hadoop

    # Create partitions in your cluster.
    yarn rmadmin -addToClusterNodeLabels "DEMO"
    yarn rmadmin -addToClusterNodeLabels "CORE"

    # Query the YARN nodes in the cluster.
    yarn node -list

    # Map each node to a partition.
    yarn rmadmin -replaceLabelsOnNode "core-1-1.c-XXX.cn-hangzhou.emr.aliyuncs.com=DEMO"
    yarn rmadmin -replaceLabelsOnNode "core-1-2.c-XXX.cn-hangzhou.emr.aliyuncs.com=DEMO"

After the commands are run, check the configuration results on the web UI of ResourceManager.

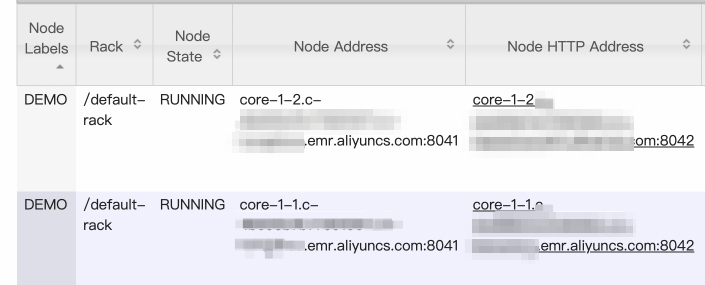

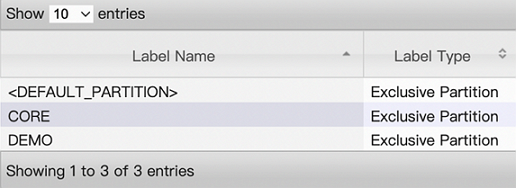

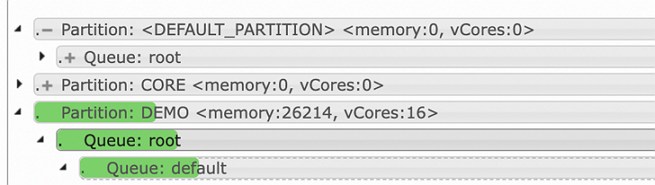

Configurations of nodes

Configurations of node labels
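For the scale-out case described at the start of this section, the bootstrap step might look like the following sketch. It assumes that the script runs as a user with YARN administrator permissions (such as hadoop) and that the partition already exists. The variables `YARN_BIN` and `PARTITION`, the function names, and the retry values are hypothetical.

```shell
YARN_BIN="${YARN_BIN:-yarn}"     # hypothetical override, useful for dry runs
PARTITION="${PARTITION:-DEMO}"   # hypothetical: the partition for this node group

# Succeeds once a NodeManager on host $1 is listed by ResourceManager.
# Node-Ids in the listing have the form host:port.
node_registered() {
  "$YARN_BIN" node -list -all 2>/dev/null | grep -qF "$1:"
}

# Poll up to $2 times (default 60, 5 seconds apart) until the NodeManager on
# host $1 is registered, then map the host to the partition.
label_this_node() {
  host="$1"
  attempts="${2:-60}"
  while [ "$attempts" -gt 0 ]; do
    if node_registered "$host"; then
      "$YARN_BIN" rmadmin -replaceLabelsOnNode "$host=$PARTITION"
      return
    fi
    attempts=$((attempts - 1))
    sleep 5
  done
  echo "NodeManager on $host did not register in time" >&2
  return 1
}
```

A bootstrap script would end with a call such as `label_this_node "$(hostname -f)"`. Polling is used because the mapping is only meaningful after the NodeManager has registered with ResourceManager.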

Use Capacity Scheduler to configure partitions that are accessible to queues and jobs

Before you use Capacity Scheduler to configure partitions, make sure that the yarn.resourcemanager.scheduler.class parameter in the yarn-site.xml file is set to org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler.

To specify the accessible partitions and the available capacity of resources in each partition for a queue, add the configuration items that are described in the following table to the capacity-scheduler.xml file. Replace <queue-path> with the path of a queue and <label> with the name of a partition. If you want the DEFAULT partition to be accessible to a queue, use the default values of the yarn.scheduler.capacity.<queue-path>.accessible-node-labels.<label>.capacity and yarn.scheduler.capacity.<queue-path>.accessible-node-labels.<label>.maximum-capacity configuration items.

Configuration item | Value | Description |

yarn.scheduler.capacity.<queue-path>.accessible-node-labels | The names of partitions. Separate multiple partitions with commas (,). | The partitions that are accessible to a specific queue. |

yarn.scheduler.capacity.<queue-path>.accessible-node-labels.<label>.capacity | For more information about valid values, refer to the yarn.scheduler.capacity.<queue-path>.capacity configuration item. | The capacity of resources in a specific partition that are available for a specific queue. Important: For this configuration to take effect for a queue, you must also specify the resource capacity for all ancestor queues of the queue. |

yarn.scheduler.capacity.<queue-path>.accessible-node-labels.<label>.maximum-capacity | For more information about valid values, refer to the yarn.scheduler.capacity.<queue-path>.maximum-capacity configuration item. Default value: 100. | The maximum capacity of resources in a specific partition that are available for a specific queue. |

yarn.scheduler.capacity.<queue-path>.default-node-label-expression | The name of a partition. The default value is an empty string, which indicates the DEFAULT partition. | The default partition that is allocated to containers in jobs submitted by a specific queue if no partition is specified for the containers. |

To set the actual resource capacity of a partition that is available for a queue to a value greater than zero, you must also specify the resource capacity for all ancestor queues of the queue. This is because the default value of the yarn.scheduler.capacity.<queue-path>.accessible-node-labels.<label>.capacity configuration item is 0 for all queues. You must set the yarn.scheduler.capacity.root.accessible-node-labels.<label>.capacity configuration item to 100 for the root queue. This way, the actual resource capacity of the partition that is available for all descendant queues can be greater than zero. In theory, you only need to set the configuration item to a value greater than 0 for the root queue. However, if you set it to a value smaller than 100, the actual resource capacity for descendant queues is smaller than their configured values suggest, so such a setting serves no purpose. If the capacity of a partition is left at the default value 0 for any ancestor queue of a queue, the actual resource capacity available for the queue in the partition is still zero.

If you set the yarn.scheduler.capacity.root.accessible-node-labels.<label>.capacity configuration item to a value greater than 0 for the root queue, the sum of the resource capacity for its child queues in the same partition must be 100. The same rule applies to the yarn.scheduler.capacity.<queue-path>.capacity configuration item.

The following sample code provides an example on how to configure partitions and resource capacity:

    <configuration>
      <!-- Add configuration items related to node labels to the capacity-scheduler.xml file. -->
      <property>
        <!-- Required. Specify that the DEMO partition is accessible to the default queue. -->
        <name>yarn.scheduler.capacity.root.default.accessible-node-labels</name>
        <value>DEMO</value>
      </property>
      <property>
        <!-- Required. Specify the resource capacity available for all ancestor queues of the default queue in the DEMO partition. -->
        <name>yarn.scheduler.capacity.root.accessible-node-labels.DEMO.capacity</name>
        <value>100</value>
      </property>
      <property>
        <!-- Required. Specify the resource capacity available for the default queue in the DEMO partition. -->
        <name>yarn.scheduler.capacity.root.default.accessible-node-labels.DEMO.capacity</name>
        <value>100</value>
      </property>
      <property>
        <!-- Optional. Specify the maximum resource capacity available for the default queue in the DEMO partition. The default value is 100. -->
        <name>yarn.scheduler.capacity.root.default.accessible-node-labels.DEMO.maximum-capacity</name>
        <value>100</value>
      </property>
      <property>
        <!-- Optional. Specify the default partition to which containers in jobs submitted by the default queue are allocated. The default value is an empty string, which indicates the DEFAULT partition. -->
        <name>yarn.scheduler.capacity.root.default.default-node-label-expression</name>
        <value>DEMO</value>
      </property>
    </configuration>
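The effect of ancestor capacities can be checked with simple arithmetic. Following the rule above, the share of a partition that a queue actually receives is the product of the percentage capacities along its path from the root queue. The helper name `effective_capacity` is hypothetical, and the sketch assumes that all capacities are given as percentages.

```shell
# Multiply the per-level percentage capacities from the root queue down to the
# target queue and print the resulting percentage of the partition.
# Usage: effective_capacity ROOT_PCT [CHILD_PCT ...]
effective_capacity() {
  printf '%s\n' "$@" |
    awk 'BEGIN { share = 1 } { share *= $1 / 100 } END { printf "%g\n", share * 100 }'
}

effective_capacity 100 100   # root=100, root.default=100 -> 100
effective_capacity 50 100    # root=50,  root.default=100 -> 50
effective_capacity 0 100     # root left at the default 0 -> 0
```

The last call shows why a queue cannot obtain resources in a partition while the capacity of an ancestor queue is still 0 for that partition.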

Save the capacity-scheduler.xml file. On the Status tab of the YARN service page, update the configurations of scheduler queues in real time by using the refreshQueues feature of ResourceManager. After the update is complete, check the configuration results on the web UI of ResourceManager.
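Outside the console, Apache Hadoop exposes the same refresh as `yarn rmadmin -refreshQueues`, and `yarn queue -status <queue>` prints the state of a queue, which should include its accessible node labels and default node label expression. The following sketch assumes that the updated capacity-scheduler.xml file is already in place on the ResourceManager nodes and that the commands are run as the hadoop user. Whether the console performs additional steps is not covered here. `YARN_BIN` and the function name are hypothetical.

```shell
YARN_BIN="${YARN_BIN:-yarn}"   # hypothetical override, e.g. YARN_BIN=echo for a dry run

# Reload the scheduler queue configuration, then print the status of queue $1.
refresh_and_show_queue() {
  "$YARN_BIN" rmadmin -refreshQueues && "$YARN_BIN" queue -status "$1"
}
```

For example, `refresh_and_show_queue default` reloads the queues and prints the status of the default queue.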

In addition to the default partitioning feature for scheduler queues, compute engines (excluding Tez) also support the node label feature. The following table describes the parameters that are required for different engines.

Engine | Configuration item | Description |

MapReduce | mapreduce.job.node-label-expression | The default partition that contains the nodes used by all containers of jobs. |

MapReduce | mapreduce.job.am.node-label-expression | The partition that contains the nodes used by ApplicationMaster. |

MapReduce | mapreduce.map.node-label-expression | The partition that contains the nodes used by map tasks. |

MapReduce | mapreduce.reduce.node-label-expression | The partition that contains the nodes used by reduce tasks. |

Spark | spark.yarn.am.nodeLabelExpression | The partition that contains the nodes used by ApplicationMaster. |

Spark | spark.yarn.executor.nodeLabelExpression | The partition that contains the nodes used by Executor. |

Flink | yarn.application.node-label | The default partition that contains the nodes used by all containers of jobs. |

Flink | yarn.taskmanager.node-label | The partition that contains the nodes used by TaskManager. This parameter is supported only if Apache Flink 1.15.0 or later is used in a cluster of EMR V3.44.0, EMR V5.10.0, or a later minor version. |
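As a sketch of how these configuration items are typically passed at submission time, the following hypothetical helper `node_label_opts` prints command-line options for one engine and one partition. The configuration item names come from the preceding table. The helper itself and the choice of which items to set per engine are assumptions made for illustration.

```shell
# Print submit-time options that direct a job to partition $2 for engine $1.
# Usage: node_label_opts spark|mapreduce|flink LABEL
node_label_opts() {
  case "$1" in
    spark)
      printf '%s\n' "--conf spark.yarn.am.nodeLabelExpression=$2 --conf spark.yarn.executor.nodeLabelExpression=$2" ;;
    mapreduce)
      printf '%s\n' "-Dmapreduce.job.node-label-expression=$2" ;;
    flink)
      printf '%s\n' "-Dyarn.application.node-label=$2" ;;
    *)
      echo "unsupported engine: $1" >&2
      return 1 ;;
  esac
}
```

For example, `spark-submit $(node_label_opts spark DEMO) ...` relies on unquoted expansion to split the options into separate arguments. For MapReduce, `-D` options are honored only by jobs that parse generic options, for example through ToolRunner.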

FAQ

Why is the data of node labels configured in centralized mode in a high availability cluster stored in a distributed file system?

In a high availability cluster, ResourceManager is deployed on multiple nodes. By default, open source Hadoop stores the data of node labels in the local path file:///tmp/hadoop-yarn-${user}/node-labels/. If a failover occurs, the ResourceManager that becomes active runs on a different node and cannot read the data of node labels from the local path of the previous node. Therefore, you must set the yarn.node-labels.fs-store.root-dir parameter to a directory in a distributed file system, such as /tmp/node-labels or ${fs.defaultFS}/tmp/node-labels. The default file system of EMR is HDFS. For more information, see the Use the node label feature section in this topic.

Before you set the yarn.node-labels.fs-store.root-dir parameter to a directory in a distributed file system, make sure that the file system works as expected and that the hadoop user can read data from and write data to the file system. Otherwise, ResourceManager fails to start.

Why is the port of NodeManager not specified when I map a node to a partition?

Only one NodeManager resides on a node in an EMR cluster. Therefore, you do not need to specify the port of NodeManager. If you specify an invalid port of NodeManager or a random port, the specified node cannot be mapped to the specified partition when you run the yarn rmadmin -replaceLabelsOnNode command. You can run the yarn node -list -showDetails command to query the partition to which a node belongs.
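Because `yarn node -list` prints each Node-Id in the form host:port, a copied Node-Id needs its port removed before it is used in a mapping. A minimal sketch, in which the helper name `host_mapping` and the port 8041 are hypothetical:

```shell
# Turn a Node-Id such as "host:port" (or a bare host name) into "host=LABEL",
# the form passed to `yarn rmadmin -replaceLabelsOnNode`.
# Usage: host_mapping NODE_ID LABEL
host_mapping() {
  printf '%s=%s\n' "${1%%:*}" "$2"
}

# Example, to be run as the hadoop user:
#   yarn rmadmin -replaceLabelsOnNode "$(host_mapping core-1-1.c-XXX.cn-hangzhou.emr.aliyuncs.com:8041 DEMO)"
```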

What are the use scenarios of node labels?

In most cases, the node label feature is not enabled for EMR clusters. This is because the statistics collected by the open source Hadoop YARN service only account for the status of the DEFAULT partition, rather than the partition mapping of nodes, which increases O&M complexity. In addition, partition scheduling may not make full use of the resources in a cluster, which wastes resources. However, node labels are still suitable for specific scenarios. For example, you can separate batch jobs from streaming jobs so that they run in different partitions of the same cluster at the same time. After partition mapping is performed, you can run important jobs on nodes that are not elastically added. If the specifications of nodes vary in a cluster, you can run jobs on nodes in different partitions to prevent resource imbalance.