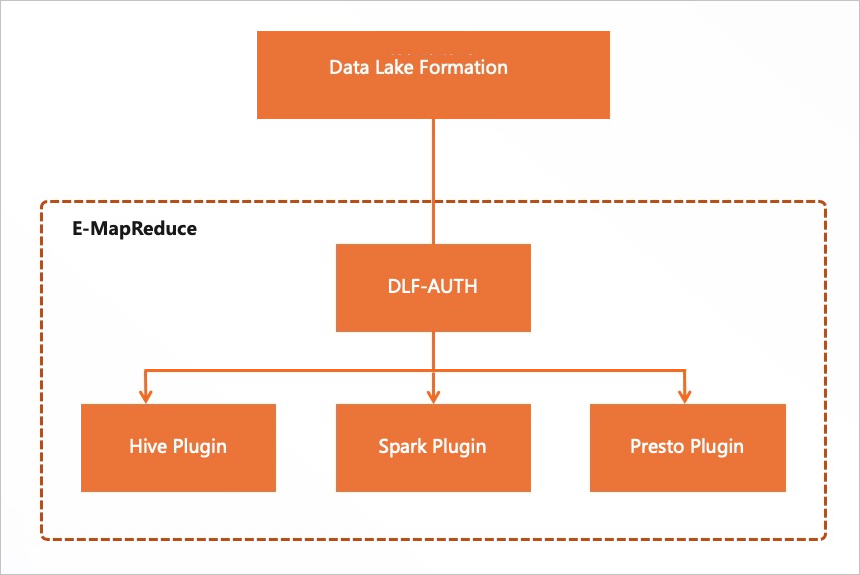

The DLF-Auth component is provided by Data Lake Formation (DLF). You can use DLF-Auth to enable the data permission management feature of DLF. DLF-Auth allows you to implement fine-grained permission management on databases, tables, columns, and functions. This way, you can manage data permissions on your data lake in a centralized manner. This topic describes how to enable DLF-Auth to manage permissions.

Background information

DLF is a fully managed service that helps you build cloud-based data lakes. DLF provides centralized permission management and metadata management for cloud-based data lakes. For more information about DLF, see What is Data Lake Formation.

Prerequisites

An E-MapReduce (EMR) cluster that contains the OpenLDAP service is created. For more information, see Create a cluster.

When you create the EMR cluster, you must select DLF Unified Metadata for Metadata in the Software Configuration step.

Limits

DLF allows only RAM users to manage permissions. Therefore, you must use the user management feature to add a RAM user in the EMR console.

For more information about the regions in which you can use the data permission management feature of DLF, see Supported regions and endpoints.

If you enable Hive or Spark for DLF-Auth, you cannot enable or disable Hive or Spark for Ranger. If you enable Hive or Spark for Ranger, you cannot enable or disable Hive or Spark for DLF-Auth.

The following table describes the EMR versions and compute engines that are supported by DLF-Auth.

| Major version | EMR version | Hive | Spark | Presto | Impala |
| --- | --- | --- | --- | --- | --- |
| EMR V3.X | EMR V3.39.0 and earlier | Not supported | Not supported | Not supported | Not supported |
| EMR V3.X | EMR V3.40.0 | Supported | Supported | Supported | Not supported |
| EMR V3.X | EMR V3.41.0 to EMR V3.43.1 | Supported | Supported | Not supported | Not supported |
| EMR V3.X | EMR V3.44.0 and later | Supported | Supported | Supported | Supported |
| EMR V5.X | EMR V5.5.0 and earlier | Not supported | Not supported | Not supported | Not supported |
| EMR V5.X | EMR V5.6.0 | Supported | Supported | Supported | Not supported |
| EMR V5.X | EMR V5.7.0 to EMR V5.9.1 | Supported | Supported | Not supported | Not supported |
| EMR V5.X | EMR V5.10.0 and later | Supported | Supported | Supported | Supported |
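If you check many clusters, it can help to encode the support table as data. The following is a minimal sketch, not an EMR API: the helper names (`parse_version`, `supported_engines`, `SUPPORT_MATRIX`) are hypothetical, and the rows are transcribed directly from the table above with inclusive bounds. Versions that the table does not list (for example, V3.40.1) return `None` rather than a guess.

```python
from typing import Optional

Version = tuple[int, int, int]

def parse_version(text: str) -> Version:
    """Parse 'EMR V3.44.0', 'V5.10.0', or '5.10.0' into (major, minor, patch)."""
    cleaned = text.upper().replace("EMR", "").strip().lstrip("V")
    major, minor, patch = (int(part) for part in cleaned.split("."))
    return (major, minor, patch)

ALL = frozenset({"Hive", "Spark", "Presto", "Impala"})
NONE: frozenset = frozenset()

# Rows transcribed from the support table: (major, lowest, highest, engines).
# Bounds are inclusive; None means unbounded within the same major version.
SUPPORT_MATRIX = [
    (3, None, (3, 39, 0), NONE),
    (3, (3, 40, 0), (3, 40, 0), frozenset({"Hive", "Spark", "Presto"})),
    (3, (3, 41, 0), (3, 43, 1), frozenset({"Hive", "Spark"})),
    (3, (3, 44, 0), None, ALL),
    (5, None, (5, 5, 0), NONE),
    (5, (5, 6, 0), (5, 6, 0), frozenset({"Hive", "Spark", "Presto"})),
    (5, (5, 7, 0), (5, 9, 1), frozenset({"Hive", "Spark"})),
    (5, (5, 10, 0), None, ALL),
]

def supported_engines(version: str) -> Optional[frozenset]:
    """Return the engines DLF-Auth supports, or None if the table does not list the version."""
    v = parse_version(version)
    for major, low, high, engines in SUPPORT_MATRIX:
        if v[0] != major:
            continue
        # Tuple comparison orders versions by major, then minor, then patch.
        if (low is None or v >= low) and (high is None or v <= high):
            return engines
    return None
```

For example, `supported_engines("EMR V3.42.5")` returns `{"Hive", "Spark"}`, so Presto and Impala on that cluster would not be covered by DLF-Auth.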

Procedure

This section describes how to enable DLF-Auth to implement fully managed and centralized permission management on data lakes.

Step 1: Enable Hive in DLF-Auth

Go to the DLF-Auth service page.

Log on to the EMR console. In the left-side navigation pane, click EMR on ECS.

In the top navigation bar, select the region in which your cluster resides and select a resource group based on your business requirements.

On the EMR on ECS page, find the desired cluster and click Services in the Actions column.

On the Services tab, find DLF-Auth and click Status.

Enable Hive in DLF-Auth.

In the Service Overview section of the Status tab, turn on enableHive.

In the message that appears, click OK.

Restart HiveServer.

On the Services tab, find Hive and click Status.

In the Components section of the Status tab, find HiveServer and click Restart in the Actions column.

In the dialog box that appears, configure the Execution Reason parameter and click OK.

In the Confirm message, click OK.

Step 2: Add a RAM user

You can add a RAM user by using the user management feature.

Go to the Users tab.

Log on to the EMR console. In the left-side navigation pane, click EMR on ECS.

In the top navigation bar, select the region in which your cluster resides and select a resource group based on your business requirements.

On the EMR on ECS page, find the desired cluster and click Services in the Actions column.

Click the Users tab.

On the Users tab, click Add User.

In the Add User dialog box, select an existing RAM user from the Username drop-down list to use as the EMR user account, and enter a password in the Password and Confirm Password fields.

Click OK.

Step 3: Verify the permissions of the RAM user

If the AliyunDLFDssFullAccess or AdministratorAccess policy is attached to the RAM user, the RAM user has the required permissions to access all fine-grained resources in DLF. You do not need to grant permissions to the RAM user.

Verify the permissions of the RAM user before you grant permissions to the RAM user.

Log on to your cluster in SSH mode. For more information, see Log on to a cluster.

Run the following command to access HiveServer2:

```shell
beeline -u jdbc:hive2://master-1-1:10000 -n <user> -p <password>
```

Note: Replace <user> and <password> with the username and password that you set in Step 2: Add a RAM user.
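If you script this check, building the beeline command as an argument list avoids shell-quoting problems with passwords. The following is a minimal sketch that assumes the `master-1-1:10000` endpoint shown above; the function names and the sample user `alice` are hypothetical. It uses only the `-u`, `-n`, `-p`, and `-e` options of beeline, and masks the password when the command is displayed, because a password passed with `-p` is visible in logs and in the process list.

```python
import shlex
from typing import Optional

def beeline_argv(user: str, password: str, host: str = "master-1-1",
                 port: int = 10000, query: Optional[str] = None) -> list[str]:
    """Build the beeline argument list for connecting to HiveServer2."""
    argv = ["beeline", "-u", f"jdbc:hive2://{host}:{port}", "-n", user, "-p", password]
    if query is not None:
        # -e runs a single statement non-interactively and then exits.
        argv += ["-e", query]
    return argv

def display_command(argv: list[str]) -> str:
    """Render the command for logging, replacing the value after -p with a mask."""
    masked = list(argv)
    if "-p" in masked:
        i = masked.index("-p")
        if i + 1 < len(masked):
            masked[i + 1] = "****"
    return shlex.join(masked)
```

Pass the list returned by `beeline_argv` directly to `subprocess.run` instead of joining it into a shell string.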

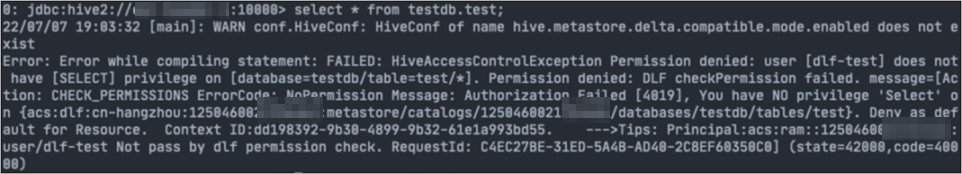

Query the information about an existing table.

For example, run the following command to query the test table. Replace testdb.test with the actual name of the table.

```sql
select * from testdb.test;
```

If the RAM user does not have permissions on the table, the query fails and an error message indicating insufficient permissions is returned.
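The same query is run again after permissions are granted, so the before and after check can be automated. The following is a minimal sketch under stated assumptions: it assumes that beeline exits with a non-zero status when the statement fails (for example, because of missing permissions) and does not parse the error text. The function names, the `limit 1` variant of the query, and the injectable `runner` parameter are hypothetical choices for illustration; the table name is interpolated without validation, so pass only trusted names.

```python
import subprocess
from typing import Callable, Optional

def beeline_argv(user: str, password: str, host: str = "master-1-1",
                 port: int = 10000, query: Optional[str] = None) -> list[str]:
    """Build the beeline argument list for connecting to HiveServer2."""
    argv = ["beeline", "-u", f"jdbc:hive2://{host}:{port}", "-n", user, "-p", password]
    if query is not None:
        argv += ["-e", query]
    return argv

def can_select(table: str, user: str, password: str,
               runner: Callable[..., subprocess.CompletedProcess] = subprocess.run) -> bool:
    """Return True if a SELECT on `table` succeeds for this user.

    Assumption: beeline returns a non-zero exit status when the statement fails.
    `runner` defaults to subprocess.run and can be replaced in tests.
    """
    argv = beeline_argv(user, password, query=f"select * from {table} limit 1;")
    result = runner(argv, capture_output=True, text=True)
    return result.returncode == 0
```

Run `can_select("testdb.test", "<user>", "<password>")` once before you grant permissions (expected `False`) and once after (expected `True`).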

Grant permissions to the RAM user.

Log on to the DLF console.

In the left-side navigation pane, go to the Data Permissions page.

On the Data Permissions page, click Add Permission.

On the Add Permission page, configure the parameters. The following table describes the parameters.

| Section | Parameter | Description |
| --- | --- | --- |
| Principal | Principal Type | The type of the principal. Default value: RAM User/Role. |
| Principal | Choose Principal | The RAM user to which you want to grant permissions. Select the user that you added in Step 2: Add a RAM user from the Choose Principal drop-down list. |
| Resources | Authorization Method | The authorization method. Default value: Resource Authorization. |
| Resources | Resource Type | The type of resources. Select a type based on your business requirements. In this example, a metadata table is used. |
| Permissions | Data Permission | The data permission to grant. In this example, Select is used. |
| Permissions | Granted Permission | |

Click OK.

Verify the permissions that are granted to the RAM user.

Run the query from the earlier verification step again. The query now succeeds because the RAM user has been granted the Select permission.

Step 4: (Optional) Enable LDAP authentication for Hive

After you enable DLF-Auth to manage permissions, we recommend that you enable Lightweight Directory Access Protocol (LDAP) authentication for Hive. This way, every user who connects to Hive must pass LDAP authentication before running scripts.

Go to the Services tab.

Log on to the EMR console. In the left-side navigation pane, click EMR on ECS.

In the top navigation bar, select the region in which your cluster resides and select a resource group based on your business requirements.

On the EMR on ECS page, find the desired cluster and click Services in the Actions column.

Enable LDAP authentication.

On the Services tab, click Status in the Hive section.

Turn on enableLDAP.

Clusters of EMR V5.11.1 or a later minor version and clusters of EMR V3.45.1 or a later minor version

In the Service Overview section, turn on enableLDAP.

In the Confirm message, click OK.

Clusters of EMR V5.11.0 or an earlier minor version and clusters of EMR V3.45.0 or an earlier minor version

In the Components section, find HiveServer and choose enableLDAP in the Actions column.

In the dialog box that appears, enter an execution reason in the Execution Reason field and click OK.

In the Confirm dialog box, click OK.

Restart HiveServer.

In the Components section of the Status tab, find HiveServer and click Restart in the Actions column.

In the dialog box that appears, enter an execution reason in the Execution Reason field and click OK.

In the Confirm dialog box, click OK.
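Which of the two enableLDAP procedures applies depends only on the cluster version. The following minimal sketch encodes the thresholds stated in this step (V5.11.1 and V3.45.1 or later use the Service Overview switch; earlier versions use the HiveServer entry in the Components section). The function and label names are hypothetical, and versions outside V3.X and V5.X are rejected because this topic does not cover them. In both cases, HiveServer must be restarted afterward.

```python
Version = tuple[int, int, int]

def parse_version(text: str) -> Version:
    """Parse 'EMR V5.11.1', 'V3.45.0', or '5.12.0' into (major, minor, patch)."""
    cleaned = text.upper().replace("EMR", "").strip().lstrip("V")
    major, minor, patch = (int(part) for part in cleaned.split("."))
    return (major, minor, patch)

# First version of each major line that has the enableLDAP switch in Service Overview.
SERVICE_OVERVIEW_MIN = {3: (3, 45, 1), 5: (5, 11, 1)}

def ldap_procedure(version: str) -> str:
    """Return 'service_overview' or 'components' for the given EMR version."""
    v = parse_version(version)
    threshold = SERVICE_OVERVIEW_MIN.get(v[0])
    if threshold is None:
        raise ValueError(f"{version}: this topic covers only EMR V3.X and V5.X")
    # Tuple comparison: any later minor or patch version meets the threshold.
    return "service_overview" if v >= threshold else "components"
```

For example, a V5.12.0 cluster uses the Service Overview switch, while a V3.45.0 cluster uses the Components section.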
