OSS-HDFS (JindoFS) is a storage service that supports cache-based acceleration and Ranger authentication. OSS-HDFS is available for clusters of E-MapReduce (EMR) V3.42 or a later minor version and EMR V5.8.0 or a later minor version. Clusters that use OSS-HDFS as the backend storage deliver better performance in big data extract, transform, and load (ETL) scenarios and let you smoothly migrate data from HDFS to OSS-HDFS. This topic describes how to use OSS-HDFS in EMR Hive or Spark.
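
The version requirement above can be checked in a script. The following Python sketch compares an EMR release string with the two minimum versions stated in this topic. The function name supports_oss_hdfs and the accepted string formats (such as EMR-3.42.0 or V5.8.0) are assumptions made for illustration. This topic does not cover major versions other than 3 and 5, so the sketch treats them as unsupported.

```python
import re

# Minimum releases stated in this topic, keyed by major version.
# Assumption: release strings look like "3.42.0", "V5.8.0", or "EMR-5.8.0".
MINIMUM_RELEASE = {3: (42, 0), 5: (8, 0)}

_VERSION_RE = re.compile(r"(?:EMR-)?[Vv]?(\d+)\.(\d+)(?:\.(\d+))?")


def supports_oss_hdfs(version: str) -> bool:
    """Return True if the EMR release meets the minimum version for OSS-HDFS."""
    match = _VERSION_RE.fullmatch(version.strip())
    if match is None:
        raise ValueError(f"unrecognized EMR version: {version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    patch = int(match.group(3) or 0)  # "V3.42" is treated as "V3.42.0"
    minimum = MINIMUM_RELEASE.get(major)
    if minimum is None:
        # Major versions other than 3 and 5 are not covered by this topic.
        return False
    # Compare (minor, patch) tuples within the same major version line.
    return (minor, patch) >= minimum


for release in ["EMR-3.41.0", "EMR-3.42.0", "V5.7.0", "V5.8.0"]:
    print(release, supports_oss_hdfs(release))
```

Comparing (minor, patch) tuples keeps the check correct for two-digit minor versions such as 5.10.1, which a plain string comparison would get wrong.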

Background information

OSS-HDFS is a cloud-native data lake storage service. OSS-HDFS provides unified metadata management capabilities and is fully compatible with the HDFS API. OSS-HDFS also supports Portable Operating System Interface (POSIX). OSS-HDFS allows you to manage data in various data lake-based computing scenarios in the big data and AI fields. For more information, see Overview.

Prerequisites

An EMR cluster is created. For more information, see Create a cluster.

Procedure

Step 1: Enable OSS-HDFS

Enable OSS-HDFS and obtain the permissions to access OSS-HDFS. For more information, see Enable OSS-HDFS and grant access permissions.

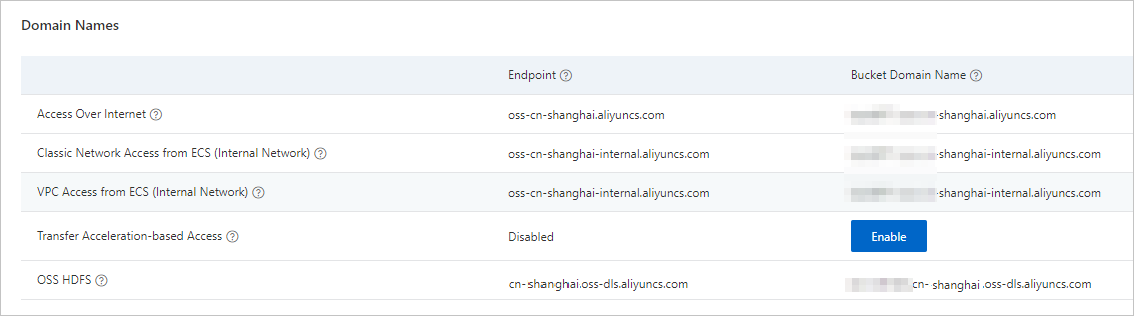

Step 2: Obtain the bucket domain name of OSS-HDFS
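
In the example in Step 3, the bucket domain name is used as the prefix of the OSS-HDFS path, in the form oss://<yourBucketName>.<yourBucketEndpoint>/<path>. The following Python sketch assembles such a URI and the matching CREATE DATABASE statement. The helper names and the bucket name examplebucket are hypothetical, and the endpoint cn-hangzhou.oss-dls.aliyuncs.com is taken from the sample output in Step 3; replace them with the values for your own bucket.

```python
def oss_hdfs_uri(bucket: str, endpoint: str, path: str = "") -> str:
    """Build oss://<bucket>.<endpoint>/<path>, using the bucket domain name as the prefix."""
    if not bucket or not endpoint:
        raise ValueError("bucket and endpoint must not be empty")
    # Strip surrounding slashes so the host and path are joined by exactly one "/".
    return f"oss://{bucket}.{endpoint}/{path.strip('/')}"


def create_database_sql(database: str, location: str) -> str:
    """Return a Hive CREATE DATABASE statement that places the database at `location`."""
    # Note: quotes inside `location` are not escaped; this is for illustration only.
    return f"CREATE DATABASE IF NOT EXISTS {database} LOCATION '{location}';"


# Hypothetical bucket name; the endpoint follows the sample output in Step 3.
uri = oss_hdfs_uri("examplebucket", "cn-hangzhou.oss-dls.aliyuncs.com", "dw")
print(create_database_sql("dw", uri))
```

Running the sketch prints CREATE DATABASE IF NOT EXISTS dw LOCATION 'oss://examplebucket.cn-hangzhou.oss-dls.aliyuncs.com/dw';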

Step 3: Use OSS-HDFS in the EMR cluster

- Log on to the EMR cluster. For more information, see Log on to a cluster.

- Create a Hive table in a directory of OSS-HDFS.

  - Run the following command to open the Hive CLI:

        hive

  - Run the following command to create a database in a directory of OSS-HDFS:

        CREATE DATABASE IF NOT EXISTS dw LOCATION 'oss://<yourBucketName>.<yourBucketEndpoint>/<path>';

    Note

    - In the command, dw is the database name, <path> is a path of your choice, and <yourBucketName>.<yourBucketEndpoint> is the bucket domain name of OSS-HDFS that you obtained in Step 2: Obtain the bucket domain name of OSS-HDFS.
    - In this example, the bucket domain name of OSS-HDFS is used as the prefix of the path. If you want to point to the OSS-HDFS directory by using only the bucket name, you can specify a bucket-level endpoint or a global endpoint. For more information, see Appendix 1: Other methods used to configure the endpoint of OSS-HDFS.


  - Run the following command to use the new database:

        USE dw;

  - Run the following command to create a Hive table in the new database:

        CREATE TABLE IF NOT EXISTS employee (eid INT, name STRING, salary STRING, destination STRING) COMMENT 'Employee details';

  - Run the following command to query the information about the table:

        DESC FORMATTED employee;

    The following information is returned. The value of the Location parameter indicates that the Hive table is created in the directory of OSS-HDFS.

        # col_name              data_type               comment
        eid                     int
        name                    string
        salary                  string
        destination             string

        # Detailed Table Information
        Database:               dw
        Owner:                  root
        CreateTime:             Fri May 06 16:40:06 CST 2022
        LastAccessTime:         UNKNOWN
        Retention:              0
        Location:               oss://****.cn-hangzhou.oss-dls.aliyuncs.com/dw/employee
        Table Type:             MANAGED_TABLE


- Insert data into the Hive table. Execute the following SQL statement to write data to the Hive table. An EMR job is generated.

      INSERT INTO employee (eid, name, salary, destination) VALUES (1, 'liu hua', '100.0', '');

- Verify the data in the Hive table.

      SELECT * FROM employee WHERE eid = 1;

  The returned information contains the inserted data.

      OK
      1    liu hua    100.0
      Time taken: 12.379 seconds, Fetched: 1 row(s)
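
To check the results of the steps above from a script instead of by eye, you can parse the CLI output. The following Python sketch extracts the Location field from DESC FORMATTED output, checks that it points to an OSS-HDFS bucket domain name, and splits a SELECT result row. It assumes that OSS-HDFS bucket domain names end with .oss-dls.aliyuncs.com, as in the sample output above, and that the Hive CLI separates result columns with tabs. Locations that use a bucket-level or global endpoint are not recognized by this sketch.

```python
from typing import List, Optional
from urllib.parse import urlsplit

# Assumption: OSS-HDFS bucket domain names end with this suffix, as in the
# cn-hangzhou sample output. Other endpoint forms are not recognized here.
OSS_HDFS_SUFFIX = ".oss-dls.aliyuncs.com"


def table_location(desc_formatted_output: str) -> Optional[str]:
    """Return the value of the Location field from DESC FORMATTED output, or None."""
    for line in desc_formatted_output.splitlines():
        # Split at the first colon only, so "oss://..." stays intact.
        key, sep, value = line.strip().partition(":")
        if sep and key.strip() == "Location":
            return value.strip()
    return None


def is_oss_hdfs_location(location: str) -> bool:
    """True if the location is an oss:// URI on an OSS-HDFS bucket domain name."""
    parts = urlsplit(location)
    host = parts.hostname or ""
    return parts.scheme == "oss" and host.endswith(OSS_HDFS_SUFFIX)


def parse_cli_row(line: str) -> List[str]:
    """Split one Hive CLI result row (assumed tab-separated) into column values."""
    return line.rstrip("\n").split("\t")


sample = (
    "Database:           \tdw\n"
    "Location:           \toss://examplebucket.cn-hangzhou.oss-dls.aliyuncs.com/dw/employee\n"
    "Table Type:         \tMANAGED_TABLE\n"
)
location = table_location(sample)
print(location, is_oss_hdfs_location(location))
print(parse_cli_row("1\tliu hua\t100.0\t"))
```

The empty string inserted into destination shows up as a trailing empty column, which is why the row is split on tabs rather than on arbitrary whitespace.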

Grant access permissions to an EMR cluster

If the default role AliyunECSInstanceForEMRRole is not used by your EMR cluster, you must grant the EMR cluster the permissions to access OSS-HDFS.

If the default role AliyunECSInstanceForEMRRole is used by your EMR cluster, you do not need to grant the EMR cluster the permissions to access OSS-HDFS. By default, the policy AliyunECSInstanceForEMRRolePolicy is attached to the role, and the policy contains the oss:PostDataLakeStorageFileOperation permission.
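
The permission that this topic names for OSS-HDFS access is oss:PostDataLakeStorageFileOperation. The following Python sketch mirrors the decision described above and builds a RAM policy document in JSON that allows that action. The Resource value "*", the function names, and the role name MyCustomEmrRole are assumptions for illustration; follow Enable OSS-HDFS and grant access permissions for the authoritative procedure.

```python
import json

DEFAULT_EMR_ROLE = "AliyunECSInstanceForEMRRole"


def needs_grant(role_name: str) -> bool:
    """Per this topic, only clusters that do not use the default role need an explicit grant."""
    return role_name != DEFAULT_EMR_ROLE


def data_lake_access_policy() -> dict:
    """Build a RAM policy document that allows the OSS-HDFS file operation permission."""
    return {
        "Version": "1",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "oss:PostDataLakeStorageFileOperation",
                # Assumption: "*" grants the action on all resources; narrow it if needed.
                "Resource": "*",
            }
        ],
    }


role = "MyCustomEmrRole"  # hypothetical custom role name
if needs_grant(role):
    print(json.dumps(data_lake_access_policy(), indent=2))
```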