This topic describes the cause of and solution to GPU initialization errors, such as XID 119 or XID 120, that occur on a GPU-accelerated Linux instance. The errors may be caused by exceptions in the GPU System Processor (GSP) component.

Problem description

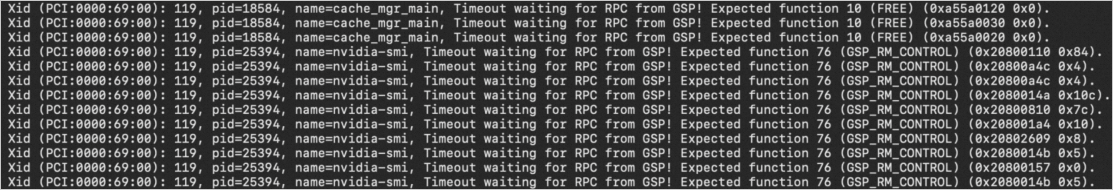

A GPU falls off the bus on a GPU-accelerated Linux instance. For example, an error message appears indicating that the GPU fails initialization on the instance. After you run the sh nvidia-bug-report.sh nvidia-bug-report.sh command, you can view XID 119 or XID 120 error messages in the command output. The following figure shows an example of XID 119 error messages.

For information about other XID errors, visit NVIDIA Common XID Errors.
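To check quickly whether XID 119 or XID 120 appears in a log, the XID codes can be pulled out of the kernel messages. The following is a minimal sketch, not an official tool. The function name extract_xids is hypothetical, and the pattern assumes the usual NVRM line shape, such as "NVRM: Xid (PCI:0000:00:08): 119, ...".

```shell
#!/bin/sh
# Hypothetical helper: print the distinct XID codes found in log text on stdin.
# Assumes lines shaped like "NVRM: Xid (PCI:0000:00:08): 119, ...".
extract_xids() {
    # Keep only the "Xid (...): <code>" fragment, then strip everything but the code.
    grep -oE 'Xid \([^)]*\): [0-9]+' \
        | sed -E 's/.*: ([0-9]+)$/\1/' \
        | sort -un
}
```

For example, dmesg | extract_xids lists each XID code once. Reading the kernel log may require root permissions on some systems.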

Cause

The preceding issue may be caused by an exception in the GSP component. First, update the NVIDIA driver to the latest version. If the issue persists after the update, we recommend that you disable the GSP component.

For more information about GSP, see Chapter 42. GSP Firmware in the official NVIDIA documentation.

Solution

Connect to the GPU-accelerated instance.

For more information, see Connect to a Linux instance by using a password or key.

Run the following commands to disable the GSP component:

    sudo su
    echo options nvidia NVreg_EnableGpuFirmware=0 > /etc/modprobe.d/nvidia-gsp.conf

Restart the GPU-accelerated instance.

For more information, see Restart instances.
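The configuration step that precedes the restart can also be scripted. The following is a minimal sketch under the assumption that it runs as root. The function name write_gsp_conf and its optional path argument are hypothetical and exist only so that the step can be tried on a scratch file first.

```shell
#!/bin/sh
# Hypothetical helper: write the GSP-disabling module option to a modprobe config file.
# The default path is the one used in this topic; pass another path for a dry run.
write_gsp_conf() {
    conf="${1:-/etc/modprobe.d/nvidia-gsp.conf}"
    printf 'options nvidia NVreg_EnableGpuFirmware=0\n' > "$conf" || return 1
    # Confirm that the option was actually written before restarting the instance.
    grep -q '^options nvidia NVreg_EnableGpuFirmware=0$' "$conf"
}
```

Calling write_gsp_conf without arguments writes the same file as the commands in this topic. The setting takes effect only after the instance is restarted.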

Reconnect to the GPU-accelerated instance.

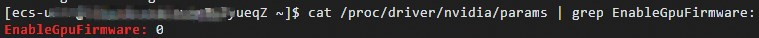

Run the following command to obtain the value of the EnableGpuFirmware parameter:

    cat /proc/driver/nvidia/params | grep EnableGpuFirmware:

If 0 is returned for the EnableGpuFirmware parameter, the GSP component is disabled. In this case, the preceding issue is resolved.

Note: If the value of the EnableGpuFirmware parameter is 0, the output of the nvidia-smi command indicates that the NVIDIA GPU runs as expected when you run the command to check the status of the GPU.

If 0 is not returned for the EnableGpuFirmware parameter, the GSP component is not disabled. In this case, proceed to the next step to check whether the NVIDIA GPU runs as expected.
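The check on the EnableGpuFirmware value can be sketched as a small function. This is an illustration only. The name gsp_state is hypothetical, and the parsing assumes that the params file contains lines of the form "Name: value", such as "EnableGpuFirmware: 0".

```shell
#!/bin/sh
# Hypothetical helper: report the GSP state based on the driver params file.
# Assumes "Name: value" lines; matches the exact name so that similarly named
# parameters (for example, EnableGpuFirmwareLogs) are ignored.
gsp_state() {
    params="${1:-/proc/driver/nvidia/params}"
    value=$(awk -F': *' '$1 == "EnableGpuFirmware" {print $2}' "$params" 2>/dev/null)
    case "$value" in
        0)  echo "disabled" ;;
        "") echo "unknown" ;;   # file missing or parameter not listed
        *)  echo "enabled" ;;
    esac
}
```

Running gsp_state without arguments reads /proc/driver/nvidia/params. A result of disabled corresponds to the case in which 0 is returned.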

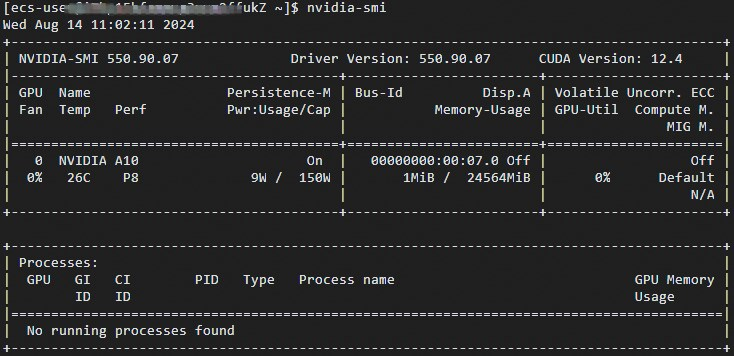

Run the nvidia-smi command to check whether the NVIDIA GPU runs as expected.

If the command output indicates that the GPU runs as expected, for example, by displaying normal values for the fan speed, temperature, and performance mode of the GPU, as shown in the following figure, the preceding issue is resolved.

If an error is returned, the issue persists on the GPU. Contact Alibaba Cloud technical support to shut down the instance and migrate data.
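The final check can be wrapped so that a script can act on the result. The following is a minimal sketch that relies on nvidia-smi exiting with a non-zero status when it fails. The function name check_gpu and the NVIDIA_SMI override variable are hypothetical. The override exists only so that the wrapper can be exercised on a machine without a GPU.

```shell
#!/bin/sh
# Hypothetical wrapper: run nvidia-smi and report based on its exit status.
# NVIDIA_SMI is an illustrative override for the command to run.
check_gpu() {
    if "${NVIDIA_SMI:-nvidia-smi}" > /dev/null 2>&1; then
        echo "GPU responds to nvidia-smi"
    else
        echo "nvidia-smi failed; the issue persists"
        return 1
    fi
}
```

A successful exit status shows only that the driver can communicate with the GPU. Review the full nvidia-smi output for the fan speed, temperature, and performance mode values as described in this step.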