AIACC-Training provides commands that start distributed training. You can combine these commands with environment variables to tune the performance of AIACC-Training and improve training efficiency. This topic describes the startup commands and the environment variables of AIACC-Training.

Startup commands

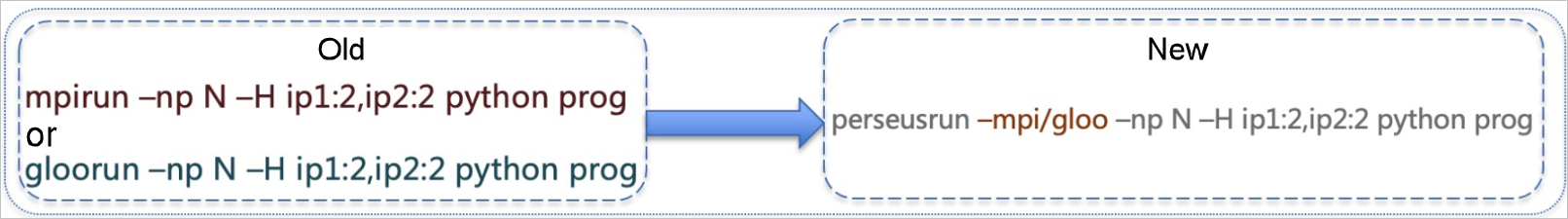

Alibaba Cloud provides a set of perseusrun commands that are used to start distributed training based on AIACC-Training. This improves the performance of AIACC-Training. You can use the perseusrun commands for underlying communication infrastructures, various training modes, distributed training, and elastic training. The following figure compares traditional commands and perseusrun commands.

The following section describes the syntax of perseusrun commands.

Single machine

By default, the Gloo backend is used. Command syntax:

perseusrun -np NP [-H localhost:N] -- COMMAND [ARG [ARG...]]

Multiple machines

With the Message Passing Interface (MPI) backend and two machines, the command syntax is:

perseusrun --mpi -np NP -H host1:N,host2:N -- COMMAND [ARG [ARG...]]

With the Gloo backend and two machines, the command syntax is:

perseusrun --gloo -np NP -H host1:N,host2:N -- COMMAND [ARG [ARG...]]

Parameters in the preceding command syntax:

N: the number of startup processes on each machine. In most cases, the value of N is the same as the number of GPUs on the machine.

NP: the total number of startup processes. The value of NP is calculated based on the following formula: NP = N × Total number of machines.

host1 or host2: the private IP address of each machine.

COMMAND: the Python command for the training program.

ARG: the arguments that are passed to the training program.
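The relationship between N, NP, and the -H host list can be sketched as a small Python helper. build_perseusrun_argv is a hypothetical helper, not part of AIACC-Training. It uses only the options that appear in the syntax above (--mpi, --gloo, -np, -H, and --) and omits the backend option when none is given, so that the default Gloo backend applies.

```python
from typing import List, Optional, Sequence


def build_perseusrun_argv(
    hosts: Sequence[str],
    procs_per_host: int,
    train_cmd: Sequence[str],
    backend: Optional[str] = None,
) -> List[str]:
    """Build a perseusrun argument list (hypothetical helper).

    hosts: private IP addresses (or host names) of the machines.
    procs_per_host: N, usually the number of GPUs on each machine.
    backend: "mpi", "gloo", or None to keep the default backend (Gloo).
    """
    if not hosts or procs_per_host < 1:
        raise ValueError("need at least one host and one process per host")
    if backend not in (None, "mpi", "gloo"):
        raise ValueError("backend must be 'mpi', 'gloo', or None")
    np_total = procs_per_host * len(hosts)  # NP = N x number of machines
    host_spec = ",".join(f"{h}:{procs_per_host}" for h in hosts)  # host1:N,host2:N
    argv = ["perseusrun"]
    if backend is not None:
        argv.append(f"--{backend}")
    argv += ["-np", str(np_total), "-H", host_spec, "--", *train_cmd]
    return argv


if __name__ == "__main__":
    cmd = build_perseusrun_argv(
        ["host1", "host2"], 8, ["python", "train.py", "--model", "resnet50"], backend="mpi"
    )
    print(" ".join(cmd))
    # perseusrun --mpi -np 16 -H host1:8,host2:8 -- python train.py --model resnet50
```

To launch training on a machine where perseusrun is installed, you could pass the list to subprocess.run(cmd, check=True). Building an argument list instead of a single shell string avoids quoting problems with training arguments.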

For more information about perseusrun commands, run the following command:

perseusrun -h

The following sample code provides examples on how to run perseusrun commands:

# In this example, a single machine and the default Gloo backend are used. Eight processes run on the machine.

perseusrun -np 8 -H localhost:8 -- python train.py --model resnet50

# On a single machine, -H localhost:N is optional, so the following command is equivalent.
perseusrun -np 8 -- python train.py --model resnet50

# In this example, two machines and the MPI communication backend are used. Eight processes run on each machine.

perseusrun --mpi -np 16 -H host1:8,host2:8 -- python train.py --model resnet50

# In this example, four machines and the Gloo communication backend are used. Eight processes run on each machine.

perseusrun --gloo -np 32 -H host1:8,host2:8,host3:8,host4:8 -- python train.py --model resnet50

Environment variables

AIACC-Training works with its default settings, so you do not need to configure any environment variables. To change the default settings, configure the environment variables that are described in this section.

| Perseus environment variable | Description | Suggestion |
| --- | --- | --- |
| PERSEUS_ALLREDUCE_NANCHECK | Specifies whether to check gradients for Not a Number (NaN) values. Default value: 0. | None. |
| PERSEUS_ALLREDUCE_DTYPE | The gradient compression type for communication among GPUs. Default value: 0. | In most cases, we recommend that you leave the environment variable empty. By default, FP16 precision compression is enabled. If precision decreases when you perform FP32 training, we recommend that you set the environment variable to 2. If automatic mixed precision (AMP) is enabled, we recommend that you set the environment variable to 1. |
| PERSEUS_ALLREDUCE_MODE | The AllReduce communication mode among machines. By default, Perseus automatically selects the AllReduce communication mode. | Perseus automatically selects the optimal value. In most cases, we recommend that you leave the environment variable empty. |
| PERSEUS_ALLREDUCE_STREAMS | The upper limit of streams for multi-stream communication. Default value: 4. Valid values: 1 to 12. | In most cases, we recommend that you leave the environment variable empty. If the following conditions are met, you can specify a larger value: |
| PERSEUS_ALLREDUCE_FUSION | The granularity of gradient fusion. If you set the environment variable to 16, the total number of gradients is 196. Valid values: 0 to 128. The environment variable does not have a default value. If you leave the environment variable empty, AIACC-Training automatically selects an optimal value. | We recommend that you leave the environment variable empty. |
| PERSEUS_ACCUMULATE_N_STEPS (Perseus 1.3.0 or later) | The number of steps for local gradient accumulation based on the multistep method. Default value: 1. Sample values: 2, 4, and 8. | If your GPU memory is insufficient, you can use local gradient accumulation to increase the batch size. To reduce the amount of transmitted data, set the environment variable to N: the batch size increases N times, the number of epochs stays the same, and the amount of transmitted data is reduced to 1/N. Note: Local gradient accumulation increases the batch size of your training, so adjust batch-size-dependent hyperparameters, such as the learning rate, accordingly. |
| PERSEUS_DOWNSAMPLE_N_ELEMENTS (Perseus 1.3.0 or later) | The granularity at which gradients are downsampled for compression based on the gossip method. Default value: 1. Sample values: 2, 4, and 8. | When the step size is large, you can use the gossip method to downsample the amount of gradient data that is transmitted. For example, when you train ResNet50 on ImageNet with a batch size of 8 × 64, you can set the environment variable to 2, 4, or 8 to reduce the amount of transmitted data by 50%, 75%, or 87.5%. |
| PERSEUS_GRADIENT_MOMENTUM (Perseus 1.3.0 or later) | The momentum value of the gradient. Default value: 1. You can use the environment variable together with PERSEUS_DOWNSAMPLE_N_ELEMENTS. | If you use the MomentumSGD optimizer for ImageNet training, we recommend that you set the environment variable to 0.9. |
| PERSEUS_NCCL_ENABLE (special version) | Default value: 0. | When you use an SCC instance, you can set the environment variable to 1 to enable both Remote Direct Memory Access (RDMA) links and virtual private cloud (VPC) links and use their combined bandwidth. For details about the environment variable, submit a ticket. |
| PERSEUS_ALLREDUCE_GRADIENT_SCALE (Perseus 1.3.0 or later) | The coefficient of the gradient scale. Default value: 10. | The environment variable takes effect only when PERSEUS_ALLREDUCE_DTYPE is set to 0 or 2, in which case gradients are compressed from FP32 to FP16 for communication. When gradients are converted from FP32 to FP16, they are multiplied by the coefficient. When they are converted back from FP16 to FP32, they are divided by the coefficient. If a NaN error occurs because of a large loss value, set the environment variable to a smaller value. |
| PERSEUS_OFFLINE_NEG (Perseus 1.3.2 or later) | The offline negotiation mode for gradients. Default value: 0. If you set the environment variable to 1, the offline negotiation mode for gradients is enabled. | |
| PERSEUS_PERF_CHECK_N_STEPS (Perseus 1.3.2 or later) | The interval, in steps, at which the system checks GPU performance for anomalies. Default value: 0. A value of 0 disables anomaly detection. | If you set the environment variable to 100, the system performs anomaly detection every 100 steps. If an anomaly is detected on a GPU, the details are displayed on the machine on which the GPU is installed. Important: The environment variable is incompatible with TensorFlow Accelerated Linear Algebra (XLA). We recommend that you disable anomaly detection in a TensorFlow XLA environment. |
| PERSEUS_MASTER_PORT (Perseus 1.5.0 or later) | The port number that the primary machine uses. Default value: 6666. The environment variable takes effect only when the PyTorch launcher starts training by using DistributedDataParallel (DDP). | By default, PyTorch starts a rendezvous service during training, and AIACC-Training starts a similar service of its own. Because both services use the same master_addr value, you only need to make sure that they use different port numbers. |
| PERSEUS_NCCL_NETWORK_INTERFACE (Perseus 1.5.0 or later) | The network interface controller (NIC) for NCCL communication. Default value: eth0. | You can change the NIC based on your business requirements. |
| PERSEUS_GLOO_NETWORK_INTERFACE (Perseus 1.5.0 or later) | The NIC for Gloo communication. Default value: eth0. | You can change the NIC based on your business requirements. |
| GLOO_TIMEOUT_SECONDS (Perseus 1.4.0 or later) | The timeout period for Gloo communication. Default value: 60. Unit: seconds. | If communication hangs because of complex training logic or network issues, we recommend that you set the environment variable to a larger value. |
| PERSEUS_CHANGE_HVD_ALLGATHER (Perseus 1.5.0 or later) | The computation mode of Allgather. Default value: 0. A value of 0 makes Allgather compatible with DDP and mpi4py. A value of 1 makes Allgather behave as in Horovod. For example, with tensor1=[0,0] and tensor2=[1,1], a value of 0 returns tensor([[0,0], [1,1]]) and a value of 1 returns tensor([0,0,1,1]). | If you use native PyTorch DDP for training, you can set the environment variable to 0. If you use Horovod for training, you can set the environment variable to 1. The performance of PyTorch SyncBN varies based on the value of the environment variable. |
| PERSEUS_USE_DDP_LAUNCHER (Perseus 1.5.0 or later) | The training launcher. Default value: 1. A value of 1 indicates that the native PyTorch DDP launcher is used. A value of 0 indicates that the mpirun launcher of Horovod is used. | If you use native PyTorch DDP for training, you can set the environment variable to 1. If you use Horovod for training, you must set the environment variable to 0. |
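Two results stated in the table can be reproduced with plain Python. The following sketch computes the 1/N transmitted-data fraction that the table gives for PERSEUS_ACCUMULATE_N_STEPS and PERSEUS_DOWNSAMPLE_N_ELEMENTS, and mimics the two Allgather result shapes that PERSEUS_CHANGE_HVD_ALLGATHER selects. The function names are hypothetical, Python lists stand in for tensors, and this illustrates the documented results only, not the AIACC-Training implementation.

```python
from typing import List


def transmitted_fraction(n: int) -> float:
    """Fraction of gradient data still transmitted when traffic is cut to 1/n."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    return 1 / n


def reduction_percent(n: int) -> float:
    """Percentage of transmitted gradient data saved, for example 75.0 for n=4."""
    return (1 - transmitted_fraction(n)) * 100


def allgather(per_rank: List[List[int]], horovod_style: bool) -> list:
    """Mimic the result shapes described for PERSEUS_CHANGE_HVD_ALLGATHER.

    horovod_style=False (value 0): one entry per rank, as with DDP and mpi4py.
    horovod_style=True (value 1): entries concatenated along the first dimension, as with Horovod.
    """
    if horovod_style:
        return [x for t in per_rank for x in t]
    return [list(t) for t in per_rank]


if __name__ == "__main__":
    for n in (2, 4, 8):
        print(n, reduction_percent(n))  # prints 2 50.0, then 4 75.0, then 8 87.5
    tensors = [[0, 0], [1, 1]]  # tensor1 and tensor2 from the table
    print(allgather(tensors, horovod_style=False))  # [[0, 0], [1, 1]]
    print(allgather(tensors, horovod_style=True))   # [0, 0, 1, 1]
```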

You can add environment variables before perseusrun commands. In the following sample code, mixed precision is enabled and the coefficient of the gradient scale is set to 5:

PERSEUS_ALLREDUCE_DTYPE=2 PERSEUS_ALLREDUCE_GRADIENT_SCALE=5 perseusrun xxx
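The effect of the gradient scale coefficient on FP16 compression can be sketched with the Python struct module, whose "e" format packs IEEE 754 half-precision values. The sketch assumes, based on the PERSEUS_ALLREDUCE_GRADIENT_SCALE description, that a gradient is multiplied by the coefficient before the FP32-to-FP16 conversion and divided by it after the conversion back. compress_roundtrip is a hypothetical helper operating on a single value, whereas real compression operates on GPU tensors. With the default coefficient of 10, a gradient of 8192 exceeds the FP16 maximum of 65504 and overflows to infinity, the kind of overflow that can surface as a NaN error; a coefficient of 5 keeps the value in range.

```python
import math
import struct


def to_fp16(x: float) -> float:
    """Round-trip a float through IEEE 754 half precision (struct format 'e').

    Values too large for FP16 are mapped to infinity, as an FP16 overflow would be.
    """
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, x)


def compress_roundtrip(grad: float, scale: float) -> float:
    """Multiply by `scale`, convert to FP16, convert back, then divide by `scale`."""
    return to_fp16(grad * scale) / scale


if __name__ == "__main__":
    grad = 8192.0
    print(compress_roundtrip(grad, 10.0))  # inf: 81920 exceeds the FP16 maximum (65504)
    print(compress_roundtrip(grad, 5.0))   # 8192.0: 40960 is exactly representable in FP16
```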