This topic describes how to run Burrows-Wheeler Alignment (BWA), Genome Analysis Toolkit (GATK), and SAMtools software in an Elastic High Performance Computing (E-HPC) cluster to perform gene sequencing.

Background information

Fast-developing gene sequencing technology is helping people discover more gene sequences. However, operations that involve a large number of genes, such as sequence similarity search, alignment, and variant discovery, require heavy-load data processing and parallel computing. High-performance computing provides powerful computing capabilities. This process uses various types of schedulers to improve concurrency and graphics processing units (GPUs) to accelerate computing.

This topic describes how to perform gene sequencing by using the widely used whole genome sequencing (WGS) process in combination with GATK. You can prepare a test based on your business requirements. For example, you can add quality control and filtering processes for variant discoveries.

When you perform gene sequencing, you can use the BWA tool to build indexes and generate alignments, use SAMtools to sort the alignments, and then use GATK to remove duplicates, recalibrate base quality scores, and discover variants.

BWA is a software package that maps low-divergent sequences against a large reference genome, such as the human genome.

GATK is a next-generation software package that is used to analyze DNA sequencing data. It is used to remove duplicates, recalibrate base quality scores, and discover variants.

SAMtools is a set of utilities that interact with and post-process short DNA sequence read alignments in the SAM, BAM, and CRAM formats. SAMtools supports complex tasks such as the viewing, sorting, indexing, data extraction, and format conversion of alignments. SAMtools also sorts alignments based on SAM flags and tags.

Preparations

Create an E-HPC cluster. For more information, see Create a standard cluster.

In this example, the following configurations are used for the cluster:

Item

Configuration

Series

Standard Edition

Deployment Mode

Public cloud cluster

Cluster Type

SLURM

Node configurations

One management node, one logon node, and one compute node with the following specifications:

Management node: Elastic Compute Service (ECS) instance of the ecs.c7.large type and with 2 vCPUs and 4 GiB memory

Logon node: ECS instance of the ecs.c7.large type and with 2 vCPUs and 4 GiB memory

Compute node: ECS instance of the ecs.c7.16xlarge type and with 64 vCPUs and 256 GiB memory

Image

CentOS 7.6 public image

Create a cluster user. For more information, see Manage users.

The user is used to log on to the cluster, compile software, and submit jobs. The following settings are used in this example:

Username: testuser

User group: sudo permissions group

Install software.

The following software versions are used in this example:

Software

Version

Download URL

BWA

4.2.0.0

GATK

0.7.17

Samtools

1.14

Step 1: Connect to the E-HPC cluster

Connect to the cluster. For more information, see Connect to a cluster.

Run the following command to create a directory for job execution. In this example,

/home/data/humanis used.NoteTo ensure sufficient storage space for data downloading and processing, we recommend that use a directory under the mounted shared directory

/home.sudo mkdir -p /home/data/human

Step 2: Download and pre-process data

Run the following command to create a job script named

data_pre.slurmand open the script:sudo vim data_pre.slurmSample script:

#!/bin/bash -l #SBATCH -J data_pre #SBATCH --partition comp #SBATCH -N 1 #SBATCH -n 1 #SBATCH --output=data_pre.log cd /home/data/human/ # Download and decompress the files of the reference genome, gene sequencing sample 1, and gene sequencing sample 2. wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/b37_human_g1k_v37.fasta wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/b37_1000G_phase1.indels.b37.vcf wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/b37_Mills_and_1000G_gold_standard.indels.b37.vcf wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/b37_dbsnp_138.b37.vcf.zip unzip b37_dbsnp_138.b37.vcf.zip wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/gatk-examples_example1_NA20318_ERR250971_1.filt.fastq.gz gunzip gatk-examples_example1_NA20318_ERR250971_1.filt.fastq.gz wget https://public-ehpc-package.oss-cn-hangzhou.aliyuncs.com/lifescience/gatk-examples_example1_NA20318_ERR250971_2.filt.fastq.gz gunzip gatk-examples_example1_NA20318_ERR250971_2.filt.fastq.gz # Compress the files with BGZip. bgzip -f b37_1000G_phase1.indels.b37.vcf bgzip -f b37_Mills_and_1000G_gold_standard.indels.b37.vcf bgzip -f b37_dbsnp_138.b37.vcf # Build an index with Tabix. tabix -p vcf b37_1000G_phase1.indels.b37.vcf.gz tabix -p vcf b37_Mills_and_1000G_gold_standard.indels.b37.vcf.gz tabix -p vcf b37_dbsnp_138.b37.vcf.gzRun the following command to submit the job:

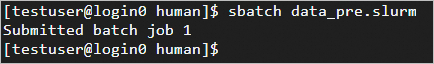

sbatch data_pre.slurmSample output for successful submission:

Run the following command to view the job status:

squeueAfter the job is executed, run the following command to view the

data_pre.logfile:cat data_pre.log

Step 3: Build a reference genome index

Run the following command to create a job script named

bwa_index.slurmand open the script:sudo vim bwa_index.slurmSample script:

#!/bin/bash -l #SBATCH -J bwa_index #SBATCH --partition comp #SBATCH -N 1 #SBATCH -n 1 #SBATCH --output=bwa_index.log cd /home/data/human/ # Build an index that is required during sequence alignment. time bwa index b37_human_g1k_v37.fasta # Create a FASTA index file. samtools faidx b37_human_g1k_v37.fastaRun the following command to submit the job:

sbatch --dependency=afterok:jobid1 bwa_index.slurmRun the following command to view the job status:

squeueAfter the job is executed, run the following command to view the

bwa_index.logfile:cat bwa_index.log

Step 4: Perform gene sequencing

Run the following command to create a job script named

wgs_main_process.slurmand open the script:sudo vim wgs_main_process.slurmSample script:

ImportantReplace

compute000with the actual running node of the cluster for the#SBATCH -w compute000field in the sixth line.#!/bin/bash -l #SBATCH -J wgs_main_process #SBATCH --partition comp #SBATCH -N 1 #SBATCH -n 64 #SBATCH -w compute000 #SBATCH --output=wgs_main_process.log cd /home/data/human/ # Align the reads to the reference genome. Then, generate alignments in a BAM file to minimize disk usage. time bwa mem -t 64 \ -R '@RG\tID:ehpc\tPL:illumina\tLB:library\tSM:b37' \ b37_human_g1k_v37.fasta \ gatk-examples_example1_NA20318_ERR250971_1.filt.fastq \ gatk-examples_example1_NA20318_ERR250971_2.filt.fastq | samtools view -S -b - > ERR250971.bam # Sort the alignments in ascending order. time samtools sort -@ 64 -O bam -o ERR250971.sorted.bam ERR250971.bam # Use MarkDuplicates to remove the reads that are likely artifacts from the PCR amplification. time gatk MarkDuplicates \ -I ERR250971.sorted.bam -O ERR250971.markdup.bam \ -M ERR250971.sorted.markdup_metrics.txt # Create an index for the sorted BAM file. samtools index ERR250971.markdup.bam # Generate a DICT file for the reference genome. time gatk CreateSequenceDictionary \ -R b37_human_g1k_v37.fasta \ -O b37_human_g1k_v37.dict # Build the recalibration model and create a recalibration table based on existing SNP and Indels databases. time gatk BaseRecalibrator \ -R b37_human_g1k_v37.fasta \ -I ERR250971.markdup.bam \ --known-sites b37_1000G_phase1.indels.b37.vcf.gz \ --known-sites b37_Mills_and_1000G_gold_standard.indels.b37.vcf.gz \ --known-sites b37_dbsnp_138.b37.vcf.gz -O ERR250971.BQSR.table # Adjust base quality scores. time gatk ApplyBQSR \ --bqsr-recal-file ERR250971.BQSR.table \ -R b37_human_g1k_v37.fasta \ -I ERR250971.markdup.bam \ -O ERR250971.BQSR.bam # Create an index for the sorted BAM file. time samtools index ERR250971.BQSR.bam # Generate a VCF file for the variant discovery output. time gatk HaplotypeCaller \ -R b37_human_g1k_v37.fasta \ -I ERR250971.BQSR.bam \ -O ERR250971.HC.vcf.gzRun the following command to submit the job:

sbatch --dependency=afterok:jobid2 wgs_main_process.slurmRun the following command to view the job status:

squeueAfter the job is executed, run the following command to view the

wgs_main_process.logfile:cat wgs_main_process.log

Duration of gene sequencing

In this topic, ecs.g7.16xlarge is selected as the instance type of the compute node to test the process of WGS. The entire process may take six to seven hours. The following table describes the estimated time that is required for each step:

Process | Description | Duration (minutes) |

bwa index | Indexing | 54 |

bwa mem | Alignment | 25 |

samtools sort | Sorting | 2 |

gatk MarkDuplicates | Duplicate removal | 13 |

gatk CreateSequenceDictionary | Dictionary creation | 0.15 |

gatk BaseRecalibrator | Recalibration table creation | 40 |

gatk ApplyBQSR | Recalibration | 21 |

gatk HaplotypeCaller | Variant discovery | 211 |