DataHub is an Alibaba Cloud platform for processing streaming data. It enables you to publish, subscribe to, and distribute data streams to build data analytics and applications.

Product overview

DataHub is a streaming data processing platform provided by Alibaba Cloud. You can use its core features of publishing, subscribing to, and distributing data streams to build analytics and applications for streaming data.

Key capabilities

Data collection: DataHub continuously collects, stores, and processes large volumes of streaming data from various sources, such as mobile devices, applications, web services, and sensors.

Real-time processing: Streaming data written to DataHub, such as web access logs and application events, can be processed by compute engines such as Flink or by custom applications. This processing generates real-time results, including real-time charts, alert messages, and statistics.

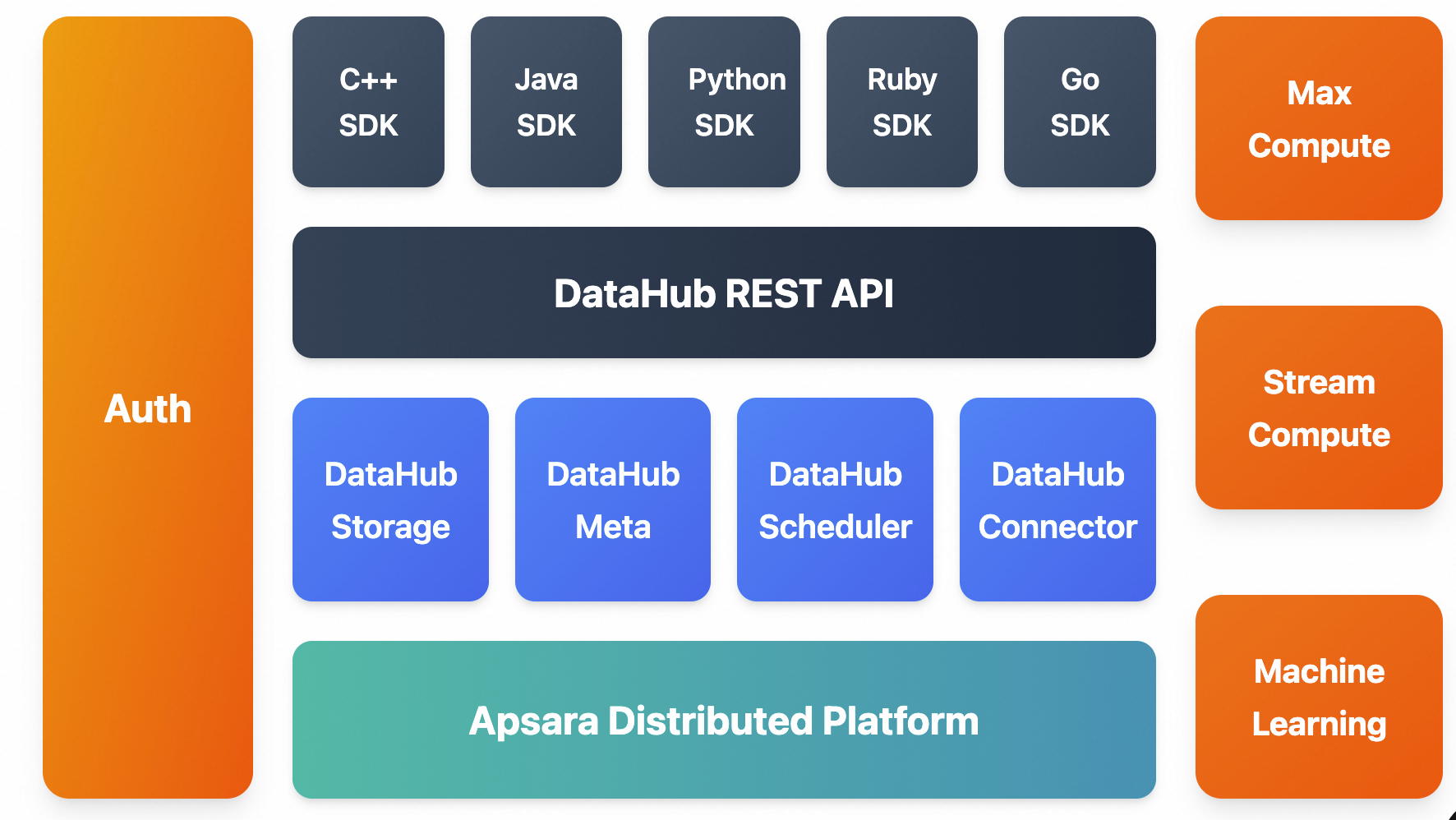
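As a rough illustration of the real-time processing capability described above, the following sketch shows how a custom application might turn a batch of web access log events into statistics and alert messages. It is a minimal in-memory example that does not call DataHub or Flink; the event keys (`path`, `status`), the `summarize` function, and the 20% threshold are assumptions made for illustration.

```python
from collections import Counter


def summarize(events, error_rate_threshold=0.2):
    """Compute simple statistics and alerts from access-log events.

    Each event is a dict with the hypothetical keys "path" and "status".
    """
    status_counts = Counter(event["status"] for event in events)
    total = sum(status_counts.values())
    # Treat HTTP 5xx responses as errors.
    errors = sum(n for status, n in status_counts.items() if status >= 500)
    error_rate = errors / total if total else 0.0

    alerts = []
    if error_rate > error_rate_threshold:
        alerts.append(
            f"error rate {error_rate:.0%} exceeds {error_rate_threshold:.0%}"
        )
    return {
        "total": total,
        "errors": errors,
        "error_rate": error_rate,
        "alerts": alerts,
    }


# Sample events standing in for records read from a stream.
events = [
    {"path": "/", "status": 200},
    {"path": "/login", "status": 500},
    {"path": "/cart", "status": 200},
    {"path": "/pay", "status": 503},
]
result = summarize(events)
print(result)
```

In a real deployment the events would arrive continuously, and the statistics would typically be computed per time window rather than over a fixed list.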

Overall architecture

DataHub is built on Apsara, Alibaba Cloud's proprietary distributed platform. It features high availability, low latency, high scalability, and high throughput.

It provides a unified REST API. Applications can interact with the API using software development kits (SDKs) for multiple languages.

DataHub also connects seamlessly with other cloud products and compute engines, such as MaxCompute and Flink. This lets you use SQL to analyze streaming data.

DataHub can also distribute streaming data to various cloud products. It currently supports distribution to MaxCompute (formerly ODPS) and Object Storage Service (OSS).

Benefits

High throughput: Supports up to 160 million writes per day on a single Shard.
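To put the daily figure in perspective, the arithmetic below converts it to an average per-second rate and gives a rough shard estimate for a target write rate. The `shards_needed` helper and the 10,000 writes/s target are illustrative assumptions. The estimate assumes load is spread evenly across shards and over the day, which real workloads rarely are.

```python
import math

WRITES_PER_DAY_PER_SHARD = 160_000_000  # figure quoted above
SECONDS_PER_DAY = 24 * 60 * 60          # 86,400

# Average sustained write rate that the daily figure implies for one shard.
writes_per_second = WRITES_PER_DAY_PER_SHARD / SECONDS_PER_DAY


def shards_needed(target_writes_per_second):
    """Rough shard count for a target rate (hypothetical helper)."""
    return math.ceil(target_writes_per_second / writes_per_second)


print(round(writes_per_second))  # about 1,852 writes per second
print(shards_needed(10_000))     # 6 shards under the even-load assumption
```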

Timeliness: DataHub collects and processes data from various sources in real time, which lets you quickly respond to your business needs.

Ease of use

It provides SDKs for languages such as C++, Java, Python, and Go.

It publishes RESTful API specifications, so you can build custom access interfaces.

It provides common client plug-ins, such as Fluentd, Logstash, and Flume.

It supports both structured data with a strong schema (by creating a topic of the TUPLE type) and unstructured data (by creating a topic of the BLOB type).
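The difference between the two topic types can be sketched as follows. This is a conceptual, in-memory illustration rather than the DataHub SDK: the `SCHEMA` layout, the field names, and both validation functions are hypothetical. The point is only that a TUPLE-style record is checked against a declared schema, while a BLOB-style record is an opaque sequence of bytes.

```python
# Hypothetical schema: ordered (field name, Python type) pairs.
SCHEMA = [("user_id", int), ("page", str), ("latency_ms", float)]


def validate_tuple_record(values, schema=SCHEMA):
    """Check a structured record against the schema and return it as a dict."""
    if len(values) != len(schema):
        raise ValueError(f"expected {len(schema)} fields, got {len(values)}")
    for value, (name, expected_type) in zip(values, schema):
        if not isinstance(value, expected_type):
            raise TypeError(f"field {name!r} must be {expected_type.__name__}")
    return {name: value for value, (name, _) in zip(values, schema)}


def validate_blob_record(payload):
    """Accept any byte sequence; no schema is applied to its contents."""
    if not isinstance(payload, (bytes, bytearray)):
        raise TypeError("blob payload must be bytes")
    return bytes(payload)


tuple_record = validate_tuple_record((42, "/home", 12.5))
blob_record = validate_blob_record(b"\x89PNG raw image bytes")
print(tuple_record)
print(blob_record)
```

A strong schema lets downstream consumers rely on field names and types, whereas a blob leaves interpretation of the payload entirely to the consumer.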

High availability

Service availability is at least 99.9%.

Data durability is at least 99.999%.

It scales automatically without affecting external services.

DataHub supports automatic backup for redundancy.

Dynamic scaling

The data stream throughput capacity of each Topic can be dynamically scaled up or down, up to a maximum throughput of 256,000 Records/s per Topic.

High security

It provides enterprise-grade, multilayer security protection and multi-user resource isolation.

DataHub supports multiple authentication and authorization methods. For example, you can configure a whitelist or grant permissions using Resource Access Management (RAM).

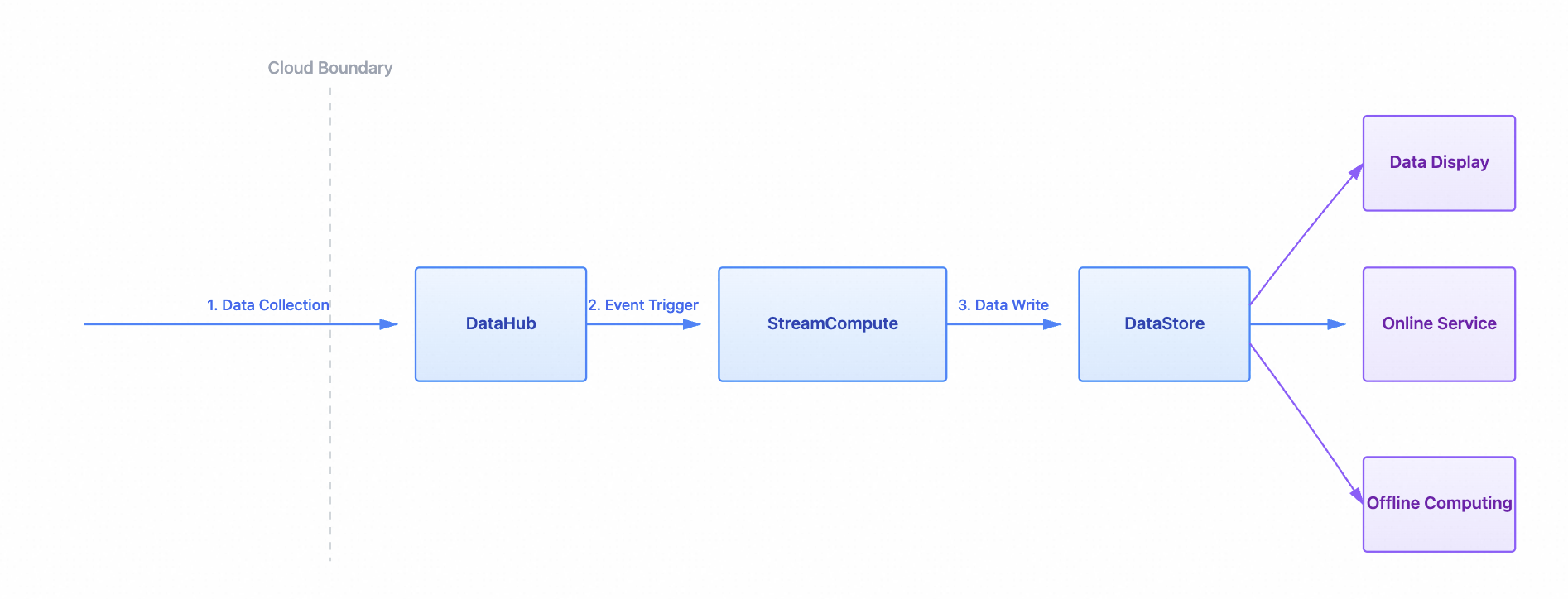

Scenarios

As a streaming data processing service, DataHub can be combined with other Alibaba Cloud products to build a complete data processing solution.

Stream computing (StreamCompute)

Real-time Compute for Apache Flink is an Alibaba Cloud stream computing engine that provides an SQL-like language for stream processing. DataHub seamlessly integrates with Flink and can serve as both a data source and an output destination. For more information, see Real-time Computing (Stream Computing).

Stream processing applications

Custom applications can subscribe to data in DataHub, process it in real time, and output the results. The results from one application can be sent back to DataHub for another application to process. This lets you build a directed acyclic graph (DAG) for your data processing workflow.
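The chaining described above can be sketched with in-memory queues standing in for topics. Nothing here uses the DataHub API: the topic names, the log line format, and both stage functions are assumptions for illustration. Unlike this sketch, a real subscription does not remove data from the topic when it is read.

```python
from collections import deque

# In-memory stand-ins for topics; each stage reads one and writes another.
topics = {"raw_logs": deque(), "parsed": deque(), "counts": deque()}


def parse_stage():
    """First application: parse raw log lines into structured records."""
    while topics["raw_logs"]:
        line = topics["raw_logs"].popleft()
        _method, path, status = line.split()
        topics["parsed"].append({"path": path, "status": int(status)})


def count_stage():
    """Second application: count requests per path from the parsed records."""
    counts = {}
    while topics["parsed"]:
        record = topics["parsed"].popleft()
        counts[record["path"]] = counts.get(record["path"], 0) + 1
    topics["counts"].append(counts)


# raw_logs -> parse_stage -> parsed -> count_stage -> counts
topics["raw_logs"].extend(["GET / 200", "GET /cart 200", "GET / 500"])
parse_stage()
count_stage()
result = topics["counts"][-1]
print(result)
```

Each stage depends only on its input topic, so further stages can be attached to `parsed` or `counts` without changing the existing ones, which is what gives the workflow its DAG shape.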

Streaming data archiving

Streaming data can be archived to MaxCompute (formerly ODPS). You can create a DataHub connector and specify the required configurations to set up a sync task that periodically archives streaming data from DataHub.
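Periodic archiving can be pictured as grouping records into fixed time windows and writing each window out as one batch. The sketch below shows only that grouping step in memory; the `group_for_archive` function, the 300-second window, and the `(timestamp, payload)` record shape are assumptions. The actual connector's scheduling and partitioning are controlled by its configuration, which this overview does not describe.

```python
from collections import defaultdict


def group_for_archive(records, window_seconds=300):
    """Group (timestamp, payload) pairs into fixed windows keyed by start time."""
    batches = defaultdict(list)
    for timestamp, payload in records:
        # Round the timestamp down to the start of its window.
        window_start = timestamp - timestamp % window_seconds
        batches[window_start].append(payload)
    return dict(sorted(batches.items()))


# Timestamps are seconds since an arbitrary origin.
records = [(0, "a"), (299, "b"), (300, "c"), (905, "d")]
batches = group_for_archive(records)
print(batches)
```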