Product overview

Global Traffic Manager (GTM) routes users to the nearest application service endpoints, distributes high-concurrency loads, and performs health checks on application services. Based on the health check results, GTM can isolate faulty endpoints or switch traffic to backup endpoints. This lets you quickly and flexibly build active-active redundancy and remote disaster recovery services.

Features

| Feature | Description |
| --- | --- |
| Address pool configuration | An address pool is used to manage the endpoints (IP addresses or domain names) of application services. An address pool is a group of IP addresses or domain names that provide the same application service and share the same carrier or region attributes. You can configure multiple address pools for a GTM instance so that users in different regions access different address pools, which implements nearest access. If an address pool becomes unavailable, GTM can switch to a backup address pool. |
| Access policy | An access policy helps enterprises manage global traffic. Based on the policy that you configure, GTM returns different address pools to users from different networks or regions, which implements nearest access and failover. GTM provides two types of access policies: geography-based and latency-based. You can enable only one type of access policy for an instance. |
| Health check | Health checks monitor the endpoints in an address pool and report the availability of application services in real time. The following probe methods are supported: ping, TCP, and HTTP(S). |
| Failover | Failover is the core capability of GTM. If a health check finds that the primary address pool collection is unavailable, GTM automatically switches user traffic to the backup address pool collection, which then responds to DNS queries from users. This reduces the risk of service interruptions and keeps services stable. |

Scenarios

Application service primary-backup disaster recovery

For example, an application service has two IP addresses: 1.1.XX.XX and 2.2.XX.XX. Under normal circumstances, users access the IP address 1.1.XX.XX. If the IP address 1.1.XX.XX fails, user traffic is switched to the IP address 2.2.XX.XX.

You can use GTM to create two address pools, Pool A and Pool B, add 1.1.XX.XX to Pool A and 2.2.XX.XX to Pool B, and configure health checks. In the access policy, select Pool A as the primary address pool collection and Pool B as the backup address pool collection. This implements a primary/backup disaster recovery switchover for the application service.
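The switchover logic in this scenario can be sketched in Python. This is a simplified model under stated assumptions, not GTM's implementation: `AddressPool`, its `health` map, and `resolve` are hypothetical names, and health check results are assumed to be already available as booleans.

```python
from dataclasses import dataclass, field


@dataclass
class AddressPool:
    """Hypothetical model of an address pool: a name and per-address health."""

    name: str
    # Latest health check result for each address (True means healthy).
    health: dict[str, bool] = field(default_factory=dict)

    def healthy_addresses(self) -> list[str]:
        return [addr for addr, ok in self.health.items() if ok]


def resolve(primary: AddressPool, backup: AddressPool) -> list[str]:
    """Answer from the primary pool while it has healthy addresses, else from the backup."""
    answer = primary.healthy_addresses()
    if answer:
        return answer
    # Primary collection is unavailable: fail over to the backup collection.
    return backup.healthy_addresses()


pool_a = AddressPool("Pool A", {"1.1.XX.XX": True})
pool_b = AddressPool("Pool B", {"2.2.XX.XX": True})
print(resolve(pool_a, pool_b))  # ['1.1.XX.XX']

pool_a.health["1.1.XX.XX"] = False  # health check reports that 1.1.XX.XX failed
print(resolve(pool_a, pool_b))  # ['2.2.XX.XX']
```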

Multiple active IPs for an application service

For example, an application service has three IP addresses: 1.1.XX.XX, 2.2.XX.XX, and 3.3.XX.XX. The three IP addresses serve users at the same time. You want DNS to return all three IP addresses when they are working as expected. If one of the IP addresses fails, the failed address is temporarily removed from the DNS record list and is not returned to end users. After the IP address recovers, it is added back to the DNS record list.

You can use GTM to create an address pool named Pool A that contains the addresses 1.1.XX.XX, 2.2.XX.XX, and 3.3.XX.XX. You can then select Pool A as the primary address pool collection, and enable and configure health checks. This implements multiple active IP addresses for the application service.

Load balancing for high-concurrency application services

During online sales promotions such as Double 11, services are temporarily scaled out to handle sudden surges in access requests. In most cases, multiple SLB instances are purchased in the same region, and the access traffic is spread across their different IP addresses.

With GTM, you can set the load balancing policy of the address pool in the primary address pool collection to Return All Addresses. In this case, every DNS response contains all addresses, so user traffic is spread roughly evenly across the SLB instances. You can also select Return Addresses by Weight and assign a different weight to each address pool and each address, so that each address receives a share of the access traffic in proportion to its weight.
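The difference between the two load balancing policies can be illustrated with a small Python sketch. The function names and the 3:1 weights are hypothetical, and the sketch assumes that weighting works as one weighted random pick per DNS query; GTM may apply weights differently.

```python
import random
from collections import Counter


def return_all(addresses: list[str]) -> list[str]:
    """Return All Addresses: every DNS response carries the full address list."""
    return list(addresses)


def return_by_weight(weights: dict[str, int], rng: random.Random) -> list[str]:
    """Return Addresses by Weight (assumed model): one weighted random pick per query."""
    addresses = list(weights)
    return rng.choices(addresses, weights=[weights[a] for a in addresses], k=1)


# Hypothetical weights for two SLB instance addresses, in a 3:1 ratio.
weights = {"1.1.XX.XX": 3, "2.2.XX.XX": 1}
rng = random.Random(42)  # seeded so the run is reproducible
counts = Counter(return_by_weight(weights, rng)[0] for _ in range(10_000))
print(counts)  # counts close to a 3:1 ratio
```

A weighted random pick keeps no per-query state, which is why it is a common way to approximate proportional distribution across DNS answers.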

Access acceleration for different regions

Large or multinational enterprises usually provide network services to users across a country or around the globe. Network conditions vary by region, and access quality is affected by factors such as distance. Therefore, service endpoints are deployed at core locations in several major regions so that users in each region can access their nearest endpoint for the best access experience.

GTM provides two types of access policies to meet this requirement:

If you use a geography-based access policy, GTM can return addresses from specified address pool collections to users in different regions. This implements nearest-node access and accelerates access for global users.

If you use a latency-based access policy, GTM can route end users to the application service cluster that has the lowest latency. This accelerates access for end users.
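Both policy types reduce to a lookup on information about the querying client. The following Python sketch is illustrative only: the region names, the `DEFAULT_COLLECTION` fallback for unmatched regions, and the latency figures are hypothetical, and how GTM determines a client's region or measures latency is not modeled.

```python
# Hypothetical geography-based policy: client region -> address pool collection.
GEO_POLICY = {
    "Chinese mainland": ["1.1.XX.XX"],
    "Europe": ["2.2.XX.XX"],
}
# Hypothetical fallback collection for regions with no explicit mapping.
DEFAULT_COLLECTION = ["3.3.XX.XX"]


def geo_answer(client_region: str) -> list[str]:
    """Geography-based: return the collection configured for the client's region."""
    return GEO_POLICY.get(client_region, DEFAULT_COLLECTION)


def latency_answer(latency_ms: dict[str, float]) -> str:
    """Latency-based: return the cluster with the lowest measured latency to the client."""
    return min(latency_ms, key=lambda cluster: latency_ms[cluster])


print(geo_answer("Europe"))  # ['2.2.XX.XX']
print(geo_answer("South America"))  # ['3.3.XX.XX']
print(latency_answer({"cluster-hangzhou": 38.0, "cluster-singapore": 12.5}))  # cluster-singapore
```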

How it works

For example, assume that the website domain name is www.example.com.

After you activate a GTM instance, the system automatically assigns it a CNAME domain name: gtm12345678.gtm-000.com.

You add the three server IP addresses 1.1.XX.XX, 2.2.XX.XX, and 3.3.XX.XX to the GTM instance and enable health checks.

You then add a CNAME record that points www.example.com to gtm12345678.gtm-000.com.

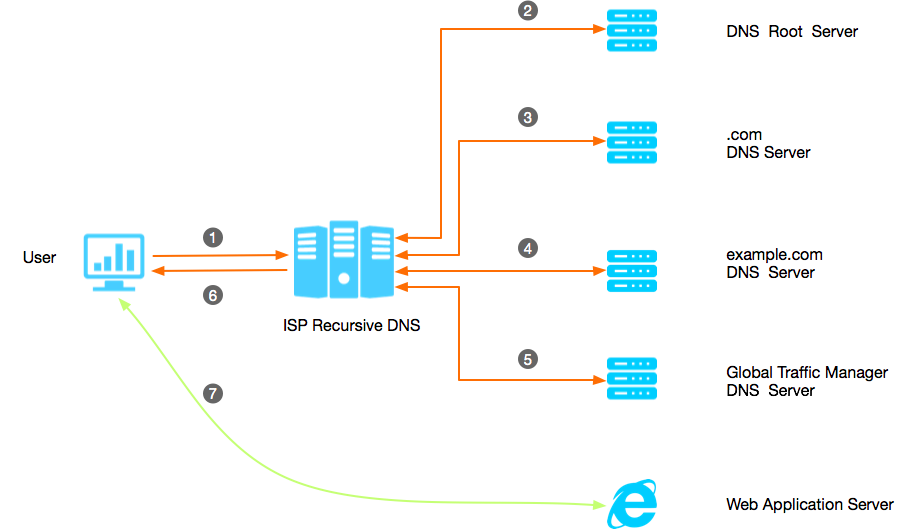

Process

1. An end user sends a DNS query for www.example.com to the local recursive DNS server.

2. If the local recursive DNS server has no cached record for www.example.com, it sends a DNS query for this domain name to a root DNS server. Based on the domain name suffix, the root DNS server returns the address of the .com DNS server.

3. The local recursive DNS server sends a DNS query for www.example.com to the .com DNS server, which returns the address of the DNS server for example.com. If the domain name uses Alibaba Cloud DNS, this is an Alibaba Cloud DNS server.

4. The local recursive DNS server sends a DNS query for www.example.com to the Alibaba Cloud DNS server. The Alibaba Cloud DNS server finds that www.example.com has a CNAME record that points to gtm12345678.gtm-000.com, and returns gtm12345678.gtm-000.com to the local recursive DNS server.

5. The local recursive DNS server sends a DNS query for gtm12345678.gtm-000.com to the GTM DNS server. GTM returns the final endpoint of the application service based on the health check results and the configured policies.

6. The local recursive DNS server returns the IP address obtained from the last query to the end user as the address of www.example.com, and caches it locally so that subsequent queries can be answered directly from the cache until the record's TTL expires.

7. The end user connects directly to the application service at that IP address and starts to communicate.
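The resolution chain above can be modeled with a toy recursive resolver in Python. This is a sketch under simplifying assumptions: the root and .com referrals are collapsed into a single hypothetical `AUTHORITATIVE` table, `GTM_ANSWERS` stands in for the GTM DNS server, and the cache uses a fixed, hypothetical TTL, whereas real resolvers honor the TTL carried on each record.

```python
import time

# Hypothetical stand-in for the authoritative servers in the walkthrough.
# The root -> .com -> example.com referrals are collapsed into one table.
AUTHORITATIVE = {"www.example.com": ("CNAME", "gtm12345678.gtm-000.com")}
# Hypothetical stand-in for the GTM DNS server, which picks the final endpoint.
GTM_ANSWERS = {"gtm12345678.gtm-000.com": ("A", "1.1.XX.XX")}


class RecursiveResolver:
    def __init__(self, ttl_seconds: float = 60.0):
        self.ttl = ttl_seconds  # hypothetical fixed TTL for cached answers
        self.cache: dict[str, tuple[str, float]] = {}
        self.upstream_queries = 0

    def lookup(self, name: str) -> str:
        cached = self.cache.get(name)
        if cached is not None and cached[1] > time.monotonic():
            return cached[0]  # answer from the local cache, no upstream queries
        target = name
        for _ in range(8):  # guard against CNAME loops
            self.upstream_queries += 1
            if target in AUTHORITATIVE:
                rtype, value = AUTHORITATIVE[target]
            else:
                rtype, value = GTM_ANSWERS[target]
            if rtype == "A":
                # Cache the final address under the name the user asked for.
                self.cache[name] = (value, time.monotonic() + self.ttl)
                return value
            target = value  # follow the CNAME to the GTM domain name
        raise RuntimeError("CNAME chain too long")


resolver = RecursiveResolver()
print(resolver.lookup("www.example.com"))  # 1.1.XX.XX
print(resolver.lookup("www.example.com"))  # 1.1.XX.XX (served from cache)
print(resolver.upstream_queries)  # 2
```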

Service architecture

For more information, see the following architecture diagram.

The DNS module of GTM directs DNS queries from end users to either the primary or the backup address pool collection of an application service. For example, you can configure GTM so that users in the Chinese mainland access the application service in the primary address pool collection and users outside the Chinese mainland access the application service in the backup address pool collection. The two address pool collections are configured as mutual backups.

The health check module in the GTM system initiates health probes from multiple regions to multiple application service endpoints in an address pool. The health probes can use the ping, TCP, or HTTP(S) method.
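As an illustration of the TCP method, a probe can treat a completed TCP handshake within a timeout as a healthy result. The following Python sketch uses only the standard library; the function name and the 3-second default timeout are assumptions, and GTM's actual probe parameters and probe locations are not modeled.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port completes within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, unreachable network, and timeouts (socket.timeout
        # is a subclass of OSError) all count as a failed probe.
        return False


print(tcp_probe("127.0.0.1", 80))
```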

If an application service endpoint in the primary address pool collection fails, the health check module detects the exception and notifies the DNS module, which temporarily removes the abnormal address from the list of application service endpoints returned to end users. When the health check module detects that the endpoint has recovered, the DNS module adds the address back to the list and returns it to end users again.
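The interaction between the two modules can be sketched as a simple notification pattern in Python. The class and method names are hypothetical; the sketch assumes that the health check module notifies the DNS module only when an address changes state.

```python
class DnsModule:
    """Hypothetical DNS module: answers with the pool minus addresses marked unhealthy."""

    def __init__(self, pool: list[str]):
        self.pool = list(pool)
        self.removed: set[str] = set()

    def on_health_change(self, address: str, healthy: bool) -> None:
        if healthy:
            self.removed.discard(address)  # recovered: return it to users again
        else:
            self.removed.add(address)  # failed: temporarily stop returning it

    def answer(self) -> list[str]:
        return [a for a in self.pool if a not in self.removed]


class HealthCheckModule:
    """Hypothetical health check module: notifies the DNS module on state changes only."""

    def __init__(self, dns: DnsModule):
        self.dns = dns
        self.state: dict[str, bool] = {}

    def report(self, address: str, probe_ok: bool) -> None:
        # Addresses are assumed healthy until a probe says otherwise.
        if self.state.get(address, True) != probe_ok:
            self.state[address] = probe_ok
            self.dns.on_health_change(address, probe_ok)


dns = DnsModule(["1.1.XX.XX", "2.2.XX.XX", "3.3.XX.XX"])
hc = HealthCheckModule(dns)
hc.report("2.2.XX.XX", False)
print(dns.answer())  # ['1.1.XX.XX', '3.3.XX.XX']
hc.report("2.2.XX.XX", True)
print(dns.answer())  # ['1.1.XX.XX', '2.2.XX.XX', '3.3.XX.XX']
```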

If the primary address pool collection fails, GTM switches the traffic of users in the Chinese mainland to the backup address pool collection named "secondaryAddresspoolSet", based on the pre-configured backup address pool collection and failover policy. Conversely, if the backup address pool collection fails, GTM switches the traffic of users outside the Chinese mainland to the primary address pool collection named "primaryAddressPoolSet".
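The mutual-backup routing can be sketched in Python. The collection names are taken from the description above; the region labels and addresses are placeholders, and returning an empty answer when both collections are down is an assumption rather than documented GTM behavior.

```python
# Placeholder addresses for the two collections named in the description.
COLLECTIONS = {
    "primaryAddressPoolSet": ["1.1.XX.XX"],
    "secondaryAddresspoolSet": ["2.2.XX.XX"],
}
# Each region lists its preferred collection first and its backup second.
ROUTING = {
    "Chinese mainland": ("primaryAddressPoolSet", "secondaryAddresspoolSet"),
    "outside Chinese mainland": ("secondaryAddresspoolSet", "primaryAddressPoolSet"),
}


def route(region: str, available: set[str]) -> list[str]:
    """Return the first available collection in the region's preference order."""
    for name in ROUTING[region]:
        if name in available:
            return COLLECTIONS[name]
    return []  # assumption: no answer when both collections are down


both = {"primaryAddressPoolSet", "secondaryAddresspoolSet"}
print(route("Chinese mainland", both))  # ['1.1.XX.XX']
print(route("Chinese mainland", {"secondaryAddresspoolSet"}))  # ['2.2.XX.XX']
```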

As a result, end users are automatically directed to an available application service endpoint, which keeps user access continuous and uninterrupted.

System architecture

GTM consists of a control layer and a resolution layer:

Control layer: The control layer provides services through the console and OpenAPI. It is used to create, retrieve, update, and delete domain name resolution data, configuration data, monitoring data, and log data. The control layer is deployed in the China (Zhangjiakou) region.

Resolution layer: The resolution layer provides services through a cluster of DNS servers with nodes deployed on major continents and in major regions around the world. It receives DNS records distributed from the control layer and responds to DNS queries.

Join us

DingTalk group: 79530043379