A Notebook is an interactive page where you can combine code, text, and charts to share information with other users. This topic describes how to use Notebooks in a workspace to query and analyze data.

Prerequisites

Your AnalyticDB for MySQL instance is imported into the target workspace. For more information, see Resources required for Notebooks.

Notes

The new Notebook version is available only in the following regions: China (Hangzhou), China (Beijing), China (Shanghai), China (Shenzhen), Singapore, and Indonesia (Jakarta).

Billing information

Notebook session fees are based on the session's Specifications setting, that is, the number of Compute Units (CUs) used. For the unit price, see the price displayed on the page when you configure resources.

Procedure

Create a Notebook file and execute the code.

You can create a Notebook file in one of the following ways.

Create a file manually

Click the resource manager icon on the right side of the workspace.

In the WORKSPACE area, right-click a blank area and select New Notebook File.

Enter a file name and click OK.

Import a file

Click the resource manager icon on the right side of the workspace.

In the WORKSPACE area, right-click a blank area and select Upload File.

Select the file that you want to upload and click OK.

Note: After you create a Notebook file, if the file does not appear in the workspace area, click the refresh icon to refresh the resource manager.

Double-click the file name to open the code execution page, where you can develop the job.

Enter your code in a cell and click the Execute button.

If the message "Execution failed. You need to create and mount a Notebook session resource first." appears, click Create Session.

Create a Notebook session.

Click Create Session and configure the following parameters.

| Parameter | Description |
| --- | --- |
| Cluster | Select the target cluster type. For CPU-only execution, select the default DMS CPU cluster, which is created automatically when you create the workspace. To develop jobs with Spark, select a Spark cluster, which you must create manually: in the cluster drop-down list, click Create Cluster and select Create Spark cluster. |
| Session Name | Enter a custom name for the session. |
| Image | The available images depend on the cluster type that you select: Python3.9_U22.04:1.0.9, Python3.11_U22.04:1.0.9, Spark3.6_Scala2.12_Python3.9:1.0.9, Spark3.3_Scala2.12_Python3.9:1.0.9. |
| Specifications | The resource specifications for the driver: 1 Core 4 GB, 2 Core 8 GB, 4 Core 16 GB, 8 Core 32 GB, 16 Core 64 GB. |
| Configuration | The profile resource. You can edit the profile name, resource release duration, data storage location, PyPI package management, and environment variables. Note: If a resource is idle for longer than the resource release duration, it is automatically released. If you set the duration to 0, the resource is never automatically released. |
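The release rule described in the note can be read as a small predicate. The following is a minimal sketch of that rule only, not DMS code: the function name `should_release` and its parameter names are hypothetical, and the time unit is left abstract because this topic does not state it.

```python
def should_release(idle_duration: float, release_duration: float) -> bool:
    """Return True if an idle resource would be released automatically.

    Both arguments are assumed to use the same time unit (the unit is an
    assumption; it is not specified in this topic).
    """
    if idle_duration < 0 or release_duration < 0:
        raise ValueError("durations must be non-negative")
    if release_duration == 0:
        # A duration of 0 means the resource is never automatically released.
        return False
    # The resource is released only when it is idle for longer than the limit.
    return idle_duration > release_duration


print(should_release(45, 30))   # idle longer than the limit
print(should_release(10, 30))   # still within the limit
print(should_release(999, 0))   # automatic release disabled
```

Setting the duration to 0 avoids waiting for a new session after an idle period, but the session then keeps consuming its specifications until you release it manually.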

Click Complete and Create.

The session is created when its status changes to Running.

Creating a session for the first time takes about 5 minutes. Subsequent creations or restarts take about 1 minute.

Execute the code again.

You can view the results of the executed Spark SQL code in the data catalog area.

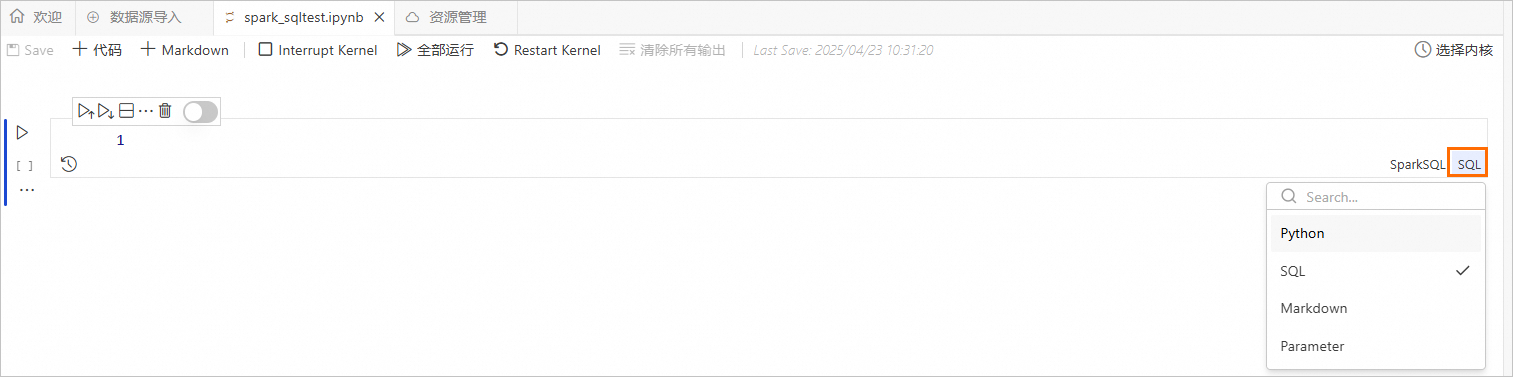

Notebook interface

Supported cell properties: Python, SQL, Markdown, and Parameter.

The toolbar icons provide the following functions:

Saves the code in the Notebook.

Note: By default, DMS automatically saves only executed code. You must manually save any unexecuted code. Otherwise, your changes are lost if you close the feature page or the browser.

Adds a cell with the Python property.

Adds a cell with the Markdown property.

Interrupts the kernel. This stops the execution of code that is using the currently selected Spark resource.

Runs the SQL in the current Notebook file.

Restarts the kernel. This restarts the Spark resource.

Executes the selected cell.

Executes all cells above the current one.

Executes the current cell and all cells below it.

Splits the cell.

Deletes the selected cell.

Shows the execution history of the cell.

Disables the cell to prevent it from being executed.

Create a Spark cluster

On the Create Cluster page, configure the Spark Cluster information.

The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| Cluster Name | Enter a cluster name that helps you identify the scenario. |
| Environment | The following images are supported: adb-spark:v3.3-python3.9-scala2.12 and adb-spark:v3.5-python3.9-scala2.12. |
| AnalyticDB Instance | From the drop-down list, select the prepared AnalyticDB for MySQL instance. |
| AnalyticDB For MySQL Resource Group | From the drop-down list, select the prepared Job resource group. |
| Spark App Executor Specifications | Select the resource specifications for the AnalyticDB for MySQL Spark executor. Different model values correspond to different specifications. For more information, see the Model column in Spark application configuration parameters. |
| VSwitch | Select a vSwitch in the current VPC. |
| Dependent Jars | The OSS storage path of the JAR packages. Specify this parameter only if you submit a job using Python and the job uses a JAR package. |
| SparkConf | The configuration items are largely the same as those in open source Spark. The parameter format is key: value. For configuration parameters that are used differently from open source Spark and parameters that are specific to AnalyticDB for MySQL, see Spark application configuration parameters. |

Click Create Cluster.
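To make the key: value format of the SparkConf parameter concrete, the following sketch parses a few entries into a dictionary so that they can be checked before being pasted into the form. It is an illustration under stated assumptions: the helper `parse_spark_conf` is hypothetical, the one-entry-per-line layout is assumed rather than documented here, and the two keys shown are standard open source Spark properties chosen only as examples.

```python
def parse_spark_conf(text: str) -> dict[str, str]:
    """Parse lines of the form `key: value` into a dictionary.

    Assumes one entry per line (an assumption, not a documented rule).
    """
    conf = {}
    for line_no, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first colon only, so values such as oss://... stay intact.
        key, sep, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if not sep or not key or not value:
            raise ValueError(f"line {line_no}: expected 'key: value', got {raw!r}")
        conf[key] = value
    return conf


example = """
spark.executor.instances: 2
spark.sql.shuffle.partitions: 64
"""
print(parse_spark_conf(example))
```

Splitting on the first colon keeps the check simple while still accepting values that contain colons, such as storage paths.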

In the Notebook session, select the created Spark cluster.

After the Notebook session is associated with the Spark cluster, the cluster status changes to Running.

Other operations

Add a cell

On the Notebook toolbar, you can select SQL, Python, Markdown, or Parameter to create a new cell of the corresponding type. You can also add a cell above or below an existing cell in the code editing area.

Add a cell above the current cell: Hover your mouse over the top of a cell to display the button for adding code.

Add a cell below the current cell: Hover your mouse over the bottom of a cell to display the button for adding code.

Switch cell properties

Click the property type on the right side of a cell to change it.

Develop code in a cell

You can edit SQL, Python, and Markdown code in their corresponding cells. When you write code in an SQL cell, make sure that the SQL syntax is valid for the selected cell type.
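As an illustration of what a Python cell might contain, the following sketch aggregates a small in-memory dataset using only the standard library. The data, the `orders` variable, and the `total_by_region` helper are all hypothetical; in practice the rows would typically come from a query against your own instance.

```python
from collections import defaultdict

# Hypothetical sample data, used here only so that the cell is self-contained.
orders = [
    {"region": "Hangzhou", "amount": 120.0},
    {"region": "Beijing", "amount": 80.5},
    {"region": "Hangzhou", "amount": 42.0},
]


def total_by_region(rows):
    """Sum the `amount` field of each row, grouped by `region`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


totals = total_by_region(orders)
for region, amount in sorted(totals.items()):
    print(f"{region}: {amount:.2f}")
```

Because the cell depends only on the Python standard library, it should run on the default DMS CPU cluster without installing extra packages.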