Processing unstructured text data, such as user reviews, product descriptions, and customer service logs, is a common challenge in traditional data pipelines. With the large language model node, you can call large language models (LLMs) directly within your DataWorks pipelines and use natural language instructions to perform AI tasks such as text summarization, sentiment analysis, content classification, and information extraction. This simplifies data processing and lets data engineers and analysts add AI capabilities to existing extract, transform, and load (ETL) pipelines without writing complex algorithms.

Preparations

Deploy a large language model service in DataWorks. For more information, see Deploy a model.

The choice of model and resource specifications directly affects the performance and response speed of the model service. Additionally, the model service incurs resource group fees.

Configure the large language model node

Configure the following settings to run the large language model node.

| Configuration item | Description |
| --- | --- |
| Model Service | The large language model service that you deployed during the preparation stage. |
| Model Name | The model within the selected model service. It is selected by default. |
| System Prompt | Defines the behavior of the large language model, including its role, capabilities, and code of conduct. You can reference parameters in the `${param}` format. |
| User Prompt | The specific question or instruction. DataWorks provides four templates that you can select. You can reference parameters in the `${param}` format. For example, you can write the prompt as: `Please select items that match |
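The effect of a `${param}` placeholder can be previewed locally before a run. The following is a minimal sketch that assumes DataWorks replaces each `${name}` placeholder with the parameter's value as plain text before the prompt is sent to the model. `render_prompt` is a hypothetical helper, not part of DataWorks, and it is written in POSIX shell so that it runs in `sh` or `bash`.

```shell
#!/bin/sh
# Hypothetical helper, not a DataWorks API: previews a prompt template after
# each ${name} placeholder is replaced with its value as plain text.
# Usage: render_prompt TEMPLATE name=value [name=value ...]
render_prompt() {
  text=$1
  shift
  for pair in "$@"; do
    name=${pair%%=*}          # text before the first '='
    value=${pair#*=}          # text after the first '='
    placeholder="\${$name}"   # the literal string ${name}
    rendered=
    # Replace every occurrence of the placeholder, scanning left to right.
    while :; do
      case $text in
        *"$placeholder"*)
          rendered=$rendered${text%%"$placeholder"*}$value
          text=${text#*"$placeholder"}
          ;;
        *)
          text=$rendered$text
          break
          ;;
      esac
    done
  done
  printf '%s\n' "$text"
}

# Prints: Write an introduction about DataWorks with a word limit of 300.
render_prompt 'Write an introduction about ${title} with a word limit of ${length}.' \
  title=DataWorks length=300
```

Any `${...}` that is still present in the output has no value assigned, which makes this a quick check that every placeholder in a prompt has a matching parameter.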

Simple example

This example shows how to use a large language model in a pipeline and pass parameters between upstream and downstream nodes.

Log on to the DataWorks large language model service. Create a model service based on Qwen3-1.7B. For Resource Group, select the resource group that is attached to the current workspace.

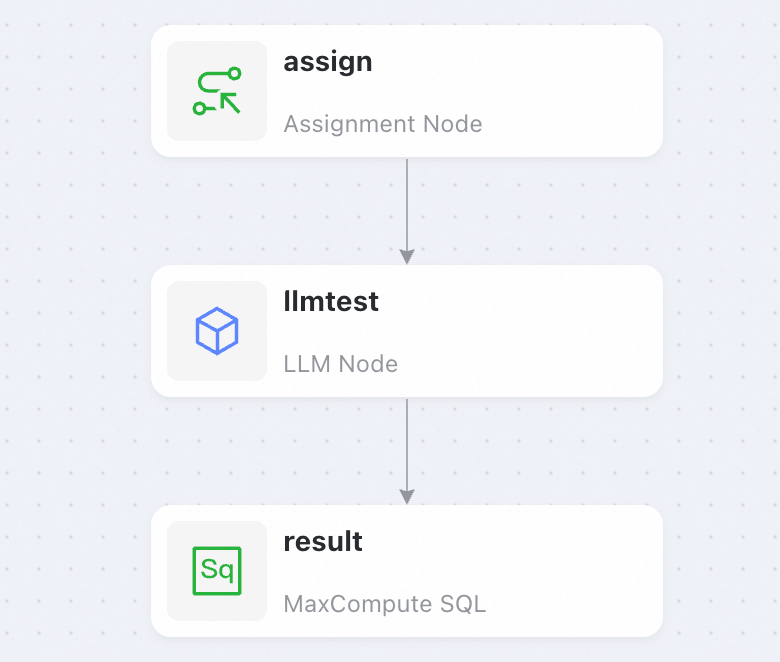

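Go to Data Studio and create a pipeline that contains the following nodes, connected in this order: an assignment node, a large language model node (named llmtest in this example), and a MaxCompute SQL node.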

Set the language mode for the assignment node to Shell in the toolbar in the lower-right corner. Then, write the following code.

For more information, see Assignment node.

```shell
echo 'DataWorks'
```

Configure the LLM node.

Select the model service and model name that you configured.

Configure the user prompt as follows:

```text
Write an introduction about ${title} with a word limit of ${length}.
```

In the configuration pane on the right, change the resource group to the one that you selected when you created the model service.

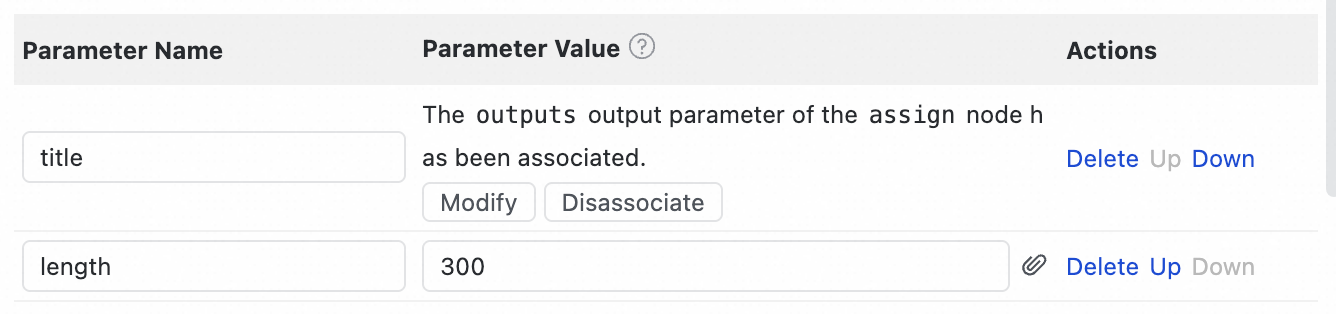

In the configuration pane on the right, add the title parameter and set its value to the output of the assignment node. Add the length parameter and set its value to the static value 300.

To the right of the value input box, click the icon to attach the output from the upstream node.

Configure the MaxCompute SQL node to output the result from the large language model.

Important: Configuring a MaxCompute SQL node requires attaching a MaxCompute computing resource. If you do not have one, you can use a Shell node to demonstrate the output.

Configure the code as follows:

```sql
select '${content}';
```

In the configuration pane on the right, change the resource group to the one that you selected when you created the model service.

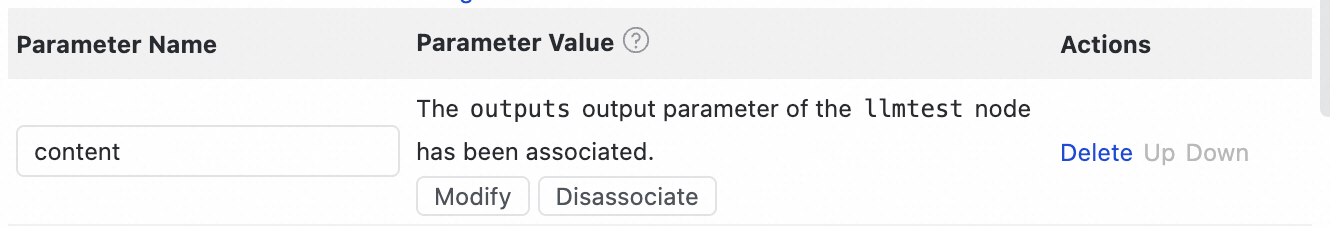

In the configuration pane on the right, add the content parameter and set its value to the output of the llmtest node (the large language model node).

To the right of the value input box, click the icon to attach the output from the upstream node.
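The MaxCompute SQL node code in this example places the model's reply inside a single-quoted string literal. Assuming DataWorks substitutes `${content}` into the node code as plain text, a reply that contains a single quote (for example, "Alibaba Cloud's") ends the literal early, so the statement is no longer valid SQL. The following minimal sketch previews the substituted statement locally. `preview_sql` is a hypothetical helper, not part of DataWorks.

```shell
#!/bin/sh
# Hypothetical helper, not a DataWorks API: prints the statement that the
# MaxCompute SQL node would run once ${content} is replaced with the model's
# reply as plain text, and returns 1 if the reply contains a single quote.
preview_sql() {
  reply=$1
  template="select '\${content}';"   # the node code from this example
  placeholder='${content}'
  # Splice the reply between the text before and after the placeholder.
  sql=${template%%"$placeholder"*}$reply${template#*"$placeholder"}
  printf '%s\n' "$sql"
  case $reply in
    *"'"*)
      echo "warning: the reply contains a single quote, which ends the string literal early" >&2
      return 1
      ;;
  esac
  return 0
}

# Prints: select 'DataWorks is a data platform.';
preview_sql 'DataWorks is a data platform.'
```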

Return to the pipeline, click the Run button at the top, and enter the parameters for this run in the pop-up window.

After the pipeline runs successfully, the MaxCompute SQL node outputs a result from the large language model similar to the following.

DataWorks is an enterprise data development and management platform from Alibaba Cloud. It supports data collection, cleansing, integration, scheduling, and visualization for large-scale data processing. It provides a visual interface, connects to various data sources, and features powerful task scheduling and data quality monitoring. DataWorks handles both real-time and batch processing, helping enterprises manage data as assets and improve efficiency. Its unified process helps build reliable data pipelines for data governance and intelligent analysis.