A container's available CPU resources are restricted by its CPU Limit. When the actual usage reaches this limit, the kernel throttles the container, which can degrade service performance. The CPU Burst feature dynamically detects CPU throttling and adaptively adjusts container parameters. During sudden load spikes, CPU Burst can temporarily provide extra CPU resources to the container. This alleviates performance bottlenecks caused by the CPU limit and improves the service quality of applications, especially latency-sensitive ones.

Before you read this topic and use this feature, we recommend that you familiarize yourself with concepts such as the CFS Scheduler and CPU Management Policies.

Why you need to enable CPU Burst

Kubernetes clusters use the CPU Limit to constrain the maximum CPU resources that a container can use. This ensures fair resource allocation among multiple containers and prevents a single container from consuming too many CPU resources and affecting the performance of other containers.

CPU is a time-sharing resource. This means multiple processes or containers can share CPU time slices. When you configure a container's CPU Limit, the operating system kernel uses the Completely Fair Scheduler (CFS) to control the container's CPU usage (cpu.cfs_quota_us) within each period (cpu.cfs_period_us). For example, if a container's CPU Limit is 4, the operating system kernel limits the container to a maximum of 400 ms of CPU time within each period. A scheduling period is typically 100 ms.
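The arithmetic in the example above can be sketched in a few lines of Python. The function name `cfs_quota_us` and its parameters are illustrative assumptions, not part of any Kubernetes or ack-koordinator API.

```python
def cfs_quota_us(cpu_limit: float, period_us: int = 100_000) -> int:
    """Return the CPU time (in microseconds) a container may use per CFS period."""
    return int(round(cpu_limit * period_us))

# A CPU Limit of 4 with a 100 ms period allows 400 ms of CPU time per period.
print(cfs_quota_us(4))    # 400000
print(cfs_quota_us(0.5))  # 50000
```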

Benefits

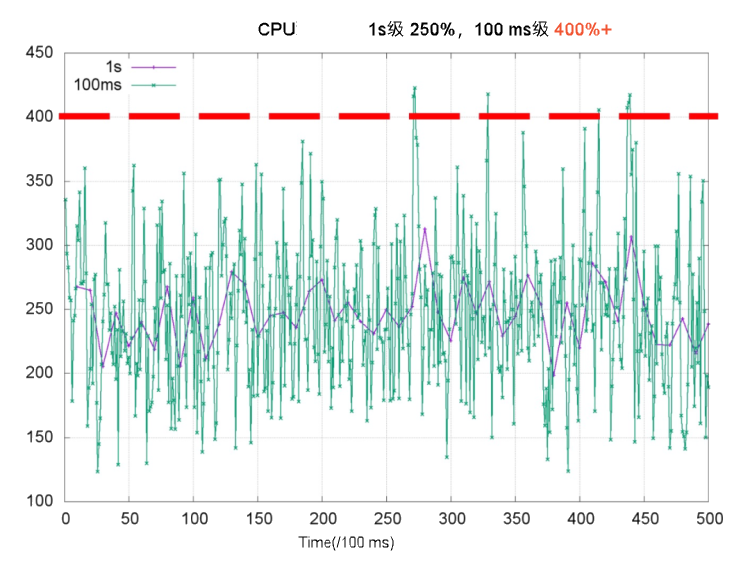

CPU utilization is a key metric for measuring the health of a container. Cluster administrators typically use this metric to configure a container's CPU Limit. Compared to the commonly used per-second metrics, a container's CPU utilization at the hundred-millisecond level often shows more pronounced glitches and rapid fluctuations. In the following figure, the CPU usage is significantly less than 4 cores on a per-second basis (purple line). However, on a per-millisecond basis (green line), the container's CPU usage exceeds 4 cores during some periods. If you set the CPU Limit to 4 cores, the operating system suspends threads because of CPU throttling.
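As a rough illustration of why coarse averages hide throttling, the following sketch compares a per-second average with the individual 100 ms samples it is built from. The sample values are hypothetical and are not taken from the figure.

```python
# Hypothetical CPU demand (in cores) of one container, sampled every 100 ms for one second.
samples_100ms = [1.0, 0.5, 6.0, 5.0, 0.5, 1.0, 0.5, 0.5, 1.0, 1.0]
cpu_limit = 4.0

# The per-second view averages all ten samples together.
per_second_average = sum(samples_100ms) / len(samples_100ms)

# The fine-grained view keeps every sample and reveals the short spikes.
periods_over_limit = [i for i, cores in enumerate(samples_100ms) if cores > cpu_limit]

print(per_second_average)   # well below the limit of 4 cores
print(periods_over_limit)   # indexes of the 100 ms windows that exceed the limit
```

In this made-up trace the average is far below 4 cores, yet two of the ten windows exceed the limit and would be throttled.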

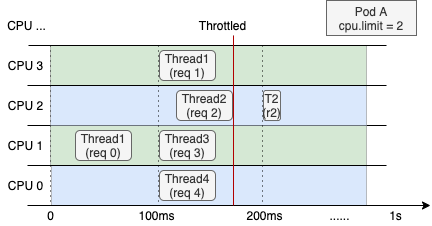

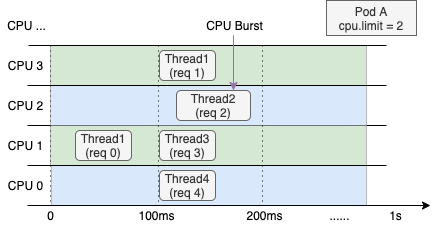

The following figures show how CPU resources are allocated to the threads of a web service container on a 4-core node after the container receives a request (req). The container's CPU Limit is 2. The figure on the left shows the normal case. The figure on the right shows the case after CPU Burst is enabled.

CPU Burst disabled (left figure): Even if the container's overall CPU utilization in the last second is low, Thread 2 must wait for the next period to finish processing req 2 because of CPU throttling. This increases the request's response time (RT). This is a major cause of long-tail latency problems in containers.

CPU Burst enabled (right figure): After you enable CPU Burst, a container can accumulate CPU time slices when it is idle and use them to meet resource demands during bursts. This improves container performance and reduces latency.
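The idea of saving unused CPU time and spending it during a burst can be sketched with a simplified model. This is not the kernel's actual algorithm: the `simulate` function, the carry-over rule, and the demand values are all assumptions made for illustration.

```python
def simulate(demand_ms, quota_ms, burst_ms=0.0):
    """Return the number of periods in which the container is throttled.

    demand_ms: CPU time the container wants in each period.
    quota_ms:  CPU time granted per period (derived from the CPU Limit).
    burst_ms:  maximum unused CPU time that may be carried over (0 disables bursting).
    """
    saved = 0.0     # unused CPU time accumulated from idle periods
    backlog = 0.0   # work that could not run yet because of throttling
    throttled_periods = 0
    for want in demand_ms:
        want += backlog
        available = quota_ms + saved
        used = min(want, available)
        backlog = want - used
        if backlog > 0:
            throttled_periods += 1
        saved = min(burst_ms, available - used)
    return throttled_periods

# Hypothetical demand per 100 ms period for a container with a CPU Limit of 2 (200 ms quota).
demand = [50, 50, 350, 50, 50]
print(simulate(demand, quota_ms=200))                # 1
print(simulate(demand, quota_ms=200, burst_ms=300))  # 0
```

Without carry-over, the spike in the third period is throttled and spills into the next period. With carry-over, the time saved in the first two periods covers the spike.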

In addition to the preceding scenarios, CPU Burst is also suitable for handling CPU usage spikes. For example, when service traffic spikes, ack-koordinator can eliminate CPU bottlenecks within a few seconds while ensuring that the overall load on the node remains at a safe level.

ack-koordinator only modifies the cfs quota in the node cgroup parameters and does not modify the CPU Limit field in the Pod Spec.

Scenarios

The CPU Burst feature is useful in the following scenarios.

A container frequently experiences CPU throttling, which affects application performance, even though its CPU usage is usually below the configured CPU Limit. You can enable CPU Burst to let the container use accumulated time slices during load bursts. This effectively resolves CPU throttling issues and improves application service quality.

A container application consumes more CPU resources during its startup phase. After the application starts and enters a stable running state, its CPU usage drops to a lower, stable level. If you enable CPU Burst, the application can use more time slices during startup to ensure a quick launch without needing to configure an excessively high CPU Limit.

Billing

No fee is charged when you install or use the ack-koordinator component. However, fees may be charged in the following scenarios:

ack-koordinator is a non-managed component that occupies worker node resources after it is installed. You can specify the amount of resources requested by each module when you install the component.

By default, ack-koordinator exposes the monitoring metrics of features such as resource profiling and fine-grained scheduling as Prometheus metrics. If you enable Prometheus metrics for ack-koordinator and use Managed Service for Prometheus, these metrics are considered custom metrics and fees are charged for these metrics. The fee depends on factors such as the size of your cluster and the number of applications. Before you enable Prometheus metrics, we recommend that you read the Billing topic of Managed Service for Prometheus to learn about the free quota and billing rules of custom metrics. For more information about how to monitor and manage resource usage, see Query the amount of observable data and bills.

Prerequisites

An ACK Pro cluster is created and the Kubernetes version of the cluster is 1.18 or later. For more information, see Create an ACK managed cluster and Manually upgrade a cluster.

Note: We recommend that you use Alibaba Cloud Linux as the operating system. For more information, see Is it necessary to use the Alibaba Cloud Linux operating system to enable the CPU Burst policy?.

The ack-koordinator component is installed, and its version is 0.8.0 or later. For more information, see ack-koordinator.

Configuration description

You can enable the CPU Burst feature for a specific pod using a pod annotation. You can also enable it at the cluster or namespace level using a ConfigMap.

Use an annotation to enable CPU Burst for a pod

To enable the CPU Burst policy for a specific pod, you can add an annotation to the metadata field of the pod's YAML file.

To apply configurations to a workload, such as a deployment, set the appropriate annotations for the pod in the template.metadata field.

    annotations:
      # Set to auto to enable the CPU Burst feature for this pod.
      koordinator.sh/cpuBurst: '{"policy": "auto"}'
      # Set to none to disable the CPU Burst feature for this pod. Use only one of the two values.
      #koordinator.sh/cpuBurst: '{"policy": "none"}'

Use a ConfigMap to enable CPU Burst at the cluster level

A CPU Burst policy configured by a ConfigMap takes effect for the entire cluster by default.

Create a file named configmap.yaml using the following ConfigMap example.

    apiVersion: v1
    data:
      cpu-burst-config: '{"clusterStrategy": {"policy": "auto"}}'
      #cpu-burst-config: '{"clusterStrategy": {"policy": "cpuBurstOnly"}}'
      #cpu-burst-config: '{"clusterStrategy": {"policy": "none"}}'
    kind: ConfigMap
    metadata:
      name: ack-slo-config
      namespace: kube-system

Check whether the ack-slo-config ConfigMap exists in the kube-system namespace.

If it exists, use the PATCH method to update it. This avoids interfering with other configuration items in the ConfigMap.

    kubectl patch cm -n kube-system ack-slo-config --patch "$(cat configmap.yaml)"

If it does not exist, run the following command to create the ConfigMap.

    kubectl apply -f configmap.yaml
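Because the `cpu-burst-config` value is a JSON string, a typo in it is easy to miss. The following sketch generates the string and rejects unknown policy names. The helper name `cluster_strategy_json` is hypothetical, and the set of policies is limited to the values that appear in this topic.

```python
import json

# Policy values that appear in this topic.
KNOWN_POLICIES = {"none", "cpuBurstOnly", "cfsQuotaBurstOnly", "auto"}

def cluster_strategy_json(policy: str) -> str:
    """Return the JSON string for the cpu-burst-config key of the ack-slo-config ConfigMap."""
    if policy not in KNOWN_POLICIES:
        raise ValueError(f"unknown CPU Burst policy: {policy!r}")
    return json.dumps({"clusterStrategy": {"policy": policy}})

print(cluster_strategy_json("auto"))  # {"clusterStrategy": {"policy": "auto"}}
```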

Use a ConfigMap to enable CPU Burst at the namespace level

A CPU Burst policy that you configure for a namespace takes effect for all pods in that namespace.

Create a file named configmap.yaml using the following ConfigMap example.

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: ack-slo-pod-config
      namespace: koordinator-system # Create this namespace manually if you are using it for the first time.
    data:
      # Individually enable or disable pods in some namespaces.
      cpu-burst: |
        {
          "enabledNamespaces": ["allowed-ns"],
          "disabledNamespaces": ["blocked-ns"]
        }
      # The CPU Burst policy is enabled for all pods in the allowed-ns namespace, corresponding to policy: auto.
      # The CPU Burst policy is disabled for all pods in the blocked-ns namespace, corresponding to policy: none.

Check whether the ack-slo-pod-config ConfigMap exists in the koordinator-system namespace.

If it exists, use the PATCH method to update it. This avoids interfering with other configuration items in the ConfigMap.

    kubectl patch cm -n koordinator-system ack-slo-pod-config --patch "$(cat configmap.yaml)"

If it does not exist, run the following command to create the ConfigMap.

    kubectl apply -f configmap.yaml
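The mapping from the namespace lists to a policy can be sketched as follows. The function `namespace_policy` is hypothetical. Checking `disabledNamespaces` first is an arbitrary choice of this sketch, because the topic does not say which list wins when a namespace appears in both.

```python
import json

def namespace_policy(cpu_burst_json: str, namespace: str):
    """Return "auto", "none", or None for a namespace, based on the cpu-burst value."""
    cfg = json.loads(cpu_burst_json)
    if namespace in cfg.get("disabledNamespaces", []):
        return "none"   # disabled namespaces correspond to policy: none
    if namespace in cfg.get("enabledNamespaces", []):
        return "auto"   # enabled namespaces correspond to policy: auto
    return None         # the namespace-level configuration says nothing about this namespace

cpu_burst = '{"enabledNamespaces": ["allowed-ns"], "disabledNamespaces": ["blocked-ns"]}'
print(namespace_policy(cpu_burst, "allowed-ns"))  # auto
print(namespace_policy(cpu_burst, "blocked-ns"))  # none
print(namespace_policy(cpu_burst, "default"))     # None
```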

Procedure

This example uses a web service application to demonstrate the access latency before and after the CPU Burst policy is enabled. This helps verify the optimization effects of the policy.

Verification steps

Create a file named apache-demo.yaml that contains the following content for the example application.

Add an annotation to the metadata field of the pod object to enable the CPU Burst policy for the pod.

    apiVersion: v1
    kind: Pod
    metadata:
      name: apache-demo
      annotations:
        koordinator.sh/cpuBurst: '{"policy": "auto"}' # Enable the CPU Burst policy.
    spec:
      containers:
      - command:
        - httpd
        - -D
        - FOREGROUND
        image: registry.cn-zhangjiakou.aliyuncs.com/acs/apache-2-4-51-for-slo-test:v0.1
        imagePullPolicy: Always
        name: apache
        resources:
          limits:
            cpu: "4"
            memory: 10Gi
          requests:
            cpu: "4"
            memory: 10Gi
      nodeName: $nodeName # Change this to the actual node name.
      hostNetwork: False
      restartPolicy: Never
      schedulerName: default-scheduler

Run the following command to deploy Apache HTTP Server as the target application for evaluation.

    kubectl apply -f apache-demo.yaml

Use the wrk2 stress testing tool to send requests.

    # Download, decompress, and install the open-source wrk2 testing tool. For details, see https://github.com/giltene/wrk2.
    # The Gzip compression module is enabled in the current Apache image configuration to simulate the server-side request processing logic.
    # Run the stress testing command. Make sure to change the IP address to that of the target application.
    ./wrk -H "Accept-Encoding: deflate, gzip" -t 2 -c 12 -d 120 --latency --timeout 2s -R 24 http://$target_ip_address:8010/static/file.1m.test

Note: Replace the target address in the command with the IP address of the Apache pod.

You can modify the -R parameter to adjust the QPS rate from the sender.

Result analysis

The following data shows the performance on Alibaba Cloud Linux and the community version of CentOS before and after the CPU Burst policy is enabled.

Completely disabled means that the CPU Burst policy is set to none.

Enabled means that the CPU Burst policy is set to auto.

The following data is for reference only. Actual results may vary based on your operating environment.

| Alibaba Cloud Linux | Completely disabled | Enabled |
| --- | --- | --- |
| apache RT-p99 | 107.37 ms | 67.18 ms (-37.4%) |
| CPU Throttled Ratio | 33.3% | 0% |
| Pod CPU average utilization | 31.8% | 32.6% |

| CentOS | Completely disabled | Enabled |
| --- | --- | --- |
| apache RT-p99 | 111.69 ms | 71.30 ms (-36.2%) |
| CPU Throttled Ratio | 33% | 0% |
| Pod CPU average utilization | 32.5% | 33.8% |

The data comparison shows the following:

After the CPU Burst feature is enabled, the p99 response time (RT) of the application is significantly improved.

After the CPU Burst feature is enabled, CPU throttling is significantly reduced, while the overall pod CPU utilization remains almost unchanged.
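The percentages shown next to the p99 values can be reproduced from the two measurements. The helper name `relative_change_percent` is an assumption for this sketch.

```python
def relative_change_percent(before: float, after: float) -> float:
    """Return the change from before to after as a percentage, rounded to one decimal place."""
    return round((after - before) / before * 100, 1)

print(relative_change_percent(107.37, 67.18))  # -37.4 (Alibaba Cloud Linux)
print(relative_change_percent(111.69, 71.30))  # -36.2 (CentOS)
```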

Advanced configuration

You can specify advanced configurations using a ConfigMap or by adding annotations to pod configurations. Annotations take precedence over the ConfigMap. If a parameter is configurable by both methods and an annotation is not added, ack-koordinator uses the value from the namespace-level ConfigMap. If the parameter is not specified in the namespace-level ConfigMap, ack-koordinator uses the value from the cluster-level ConfigMap.
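The precedence described above can be sketched as a lookup that tries each source in order. The function `resolve` and the dictionary inputs are hypothetical and only illustrate the rule. They do not reflect how ack-koordinator is implemented.

```python
def resolve(param, annotation=None, namespace_cfg=None, cluster_cfg=None):
    """Return the value of param from the highest-priority source that defines it."""
    # Order matters: pod annotation, then namespace-level ConfigMap, then cluster-level ConfigMap.
    for source in (annotation, namespace_cfg, cluster_cfg):
        if source and param in source:
            return source[param]
    return None

cluster = {"policy": "auto", "cfsQuotaBurstPercent": 300}
namespace = {"policy": "none"}
annotation = {"cfsQuotaBurstPercent": 200}

print(resolve("policy", annotation, namespace, cluster))                # none
print(resolve("cfsQuotaBurstPercent", annotation, namespace, cluster))  # 200
print(resolve("cfsQuotaBurstPercent", None, namespace, cluster))        # 300
```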

The following is a configuration example.

    # Example of the ack-slo-config ConfigMap.
    data:
      cpu-burst-config: |
        {
          "clusterStrategy": {
            "policy": "auto",
            "cpuBurstPercent": 1000,
            "cfsQuotaBurstPercent": 300,
            "sharePoolThresholdPercent": 50,
            "cfsQuotaBurstPeriodSeconds": -1
          }
        }

    # Example of a pod annotation.
    koordinator.sh/cpuBurst: '{"policy": "auto", "cpuBurstPercent": 1000, "cfsQuotaBurstPercent": 300, "cfsQuotaBurstPeriodSeconds": -1}'

The following are the advanced parameters for the CPU Burst policy:

The Annotation and ConfigMap columns indicate whether you can configure the parameter using a pod annotation or a ConfigMap. ![]() indicates supported, and ![]() indicates not supported.

| Parameter | Type | Description | Annotation | ConfigMap |
| --- | --- | --- | --- | --- |
| … | string | … | … | … |
| … | int | The default value is …. This parameter is used to configure the kernel-level CPU Burst feature provided by Alibaba Cloud Linux. This parameter specifies the percentage to which the CPU limit can be increased by CPU Burst. This parameter corresponds to the …. For example, with the default configurations, for … | … | … |
| … | int | The default value is …. Specifies the maximum percentage to which the value of a pod's … | … | … |
| … | int | The default value is …. When the CFS quota burst feature is enabled, this parameter specifies the time limit for a pod to continuously consume CPU at its upper limit (… | … | … |
| … | int | The default value is …. This parameter specifies the CPU utilization threshold of the node when the automatic adjustment of CFS quotas is enabled. If this threshold is exceeded, the … | … | … |

When you enable automatic adjustment of the CFS quota by setting policy to cfsQuotaBurstOnly or auto, the pod CPU limit parameter cpu.cfs_quota_us on the node is dynamically adjusted based on CPU throttling.

When you perform stress tests on a pod, we recommend that you monitor the pod's CPU usage or temporarily disable the automatic adjustment of CFS quotas (set policy to cpuBurstOnly or none) to maintain better resource elasticity in a production environment.
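To get a feel for the scale of the percentage parameters, the following sketch computes the values they would imply for a given CPU Limit. It rests on assumptions that this topic does not state outright: that both percentages are relative to the quota derived from the CPU Limit, that `cpuBurstPercent` maps to `cpu.cfs_burst_us`, and that `cfsQuotaBurstPercent` caps `cpu.cfs_quota_us`. The function `burst_bounds` is hypothetical, and the numbers 1000 and 300 are taken from the configuration example.

```python
def burst_bounds(cpu_limit, cpu_burst_percent=1000, cfs_quota_burst_percent=300,
                 period_us=100_000):
    """Estimate cgroup values implied by the percentage parameters (assumed semantics)."""
    base_quota_us = int(cpu_limit * period_us)
    return {
        # Quota derived directly from the CPU Limit.
        "base_cfs_quota_us": base_quota_us,
        # Assumed: burst budget as a percentage of the base quota.
        "cfs_burst_us": base_quota_us * cpu_burst_percent // 100,
        # Assumed: upper bound to which the quota may be raised.
        "max_cfs_quota_us": base_quota_us * cfs_quota_burst_percent // 100,
    }

print(burst_bounds(4))
```

Under these assumptions, a CPU Limit of 4 gives a base quota of 400000 µs, a burst budget of 4000000 µs, and a quota ceiling of 1200000 µs per period.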

FAQ

Does the CPU Burst feature that I enabled based on an earlier version of the ack-slo-manager protocol still work after I upgrade ack-slo-manager to ack-koordinator?

Yes. Earlier versions of the Pod protocol require you to add the alibabacloud.com/cpuBurst annotation. ack-koordinator is fully compatible with these earlier protocol versions. You can seamlessly upgrade the component to the new version.

ack-koordinator is compatible with protocol versions no later than July 30, 2023. We recommend that you replace the resource parameters used in an earlier protocol version with those used in the latest version.

The following describes the compatibility of ack-koordinator with different protocol versions.

| ack-koordinator version | alibabacloud.com protocol | koordinator.sh protocol |
| --- | --- | --- |
| ≥0.2.0 | Supported | Not supported |
| ≥0.8.0 | Supported | Supported |

After I enable the CPU Burst configuration, why do my pods still experience CPU throttling?

This may happen for several reasons. Review the following items to troubleshoot the issue.

The configuration format may be incorrect, which prevents the CPU Burst policy from taking effect. For more information, see Advanced configuration to verify and modify the configuration.

CPU throttling events may still be generated if CPU utilization reaches the upper limit specified by cfsQuotaBurstPercent due to insufficient CPU resources.

You can adjust the Request and Limit values based on your application's actual needs.

The CPU Burst policy adjusts two cgroup parameters for a pod: cpu.cfs_quota_us and cpu.cfs_burst_us. For more information, see Advanced parameter configuration. The cpu.cfs_quota_us parameter is set only after ack-koordinator detects a CPU Throttled event, which causes a slight delay. In contrast, the cpu.cfs_burst_us parameter is set directly based on the configuration, which makes it more responsive.

For optimal results, we recommend that you use the Alibaba Cloud Linux operating system.

The CPU Burst policy includes a protection mechanism for adjusting cpu.cfs_quota_us, which is a node-level safety threshold configured by the sharePoolThresholdPercent parameter. If the node's CPU utilization becomes too high, cpu.cfs_quota_us is reset to its initial value to prevent the pod from affecting other pods.

You can set the safety threshold based on your application's requirements to avoid performance degradation caused by high machine utilization.
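When you troubleshoot, it can help to quantify how often a container is throttled. The sketch below parses the text of a cgroup `cpu.stat` file and divides `nr_throttled` by `nr_periods`. The function name is hypothetical and the sample numbers are invented. The location of `cpu.stat` depends on the node's cgroup layout, and treating this ratio as the "CPU Throttled Ratio" reported earlier is an assumption.

```python
def throttled_ratio(cpu_stat_text: str) -> float:
    """Return nr_throttled / nr_periods from the contents of a cgroup cpu.stat file."""
    stats = {}
    for line in cpu_stat_text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    periods = stats.get("nr_periods", 0)
    if periods == 0:
        return 0.0  # no enforcement periods recorded yet
    return stats.get("nr_throttled", 0) / periods

# Invented sample in the cgroup v1 format.
sample = "nr_periods 300\nnr_throttled 100\nthrottled_time 4200000000\n"
print(round(throttled_ratio(sample), 3))  # 0.333
```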

Is it necessary to use the Alibaba Cloud Linux operating system to enable the CPU Burst policy?

No. The ack-koordinator CPU Burst policy supports all Alibaba Cloud Linux and CentOS open source kernels. However, we recommend that you use the Alibaba Cloud Linux operating system. The kernel features of Alibaba Cloud Linux allow ack-koordinator to provide a more fine-grained and elastic CPU management mechanism. For more information, see Enable the CPU Burst Feature on the cgroup v1 Interface.

After I enable CPU Burst, why does the number of threads reported by my application's code change?

This occurs because the CPU Burst mechanism can conflict with the way some applications retrieve system resource information. ack-koordinator implements CPU Burst by dynamically adjusting the container's underlying cgroup parameter cpu.cfs_quota_us. This value represents the CPU time quota available to the container within the current scheduling period. ack-koordinator dynamically scales this quota based on the application's load.

Many applications infer the number of available CPU cores from cpu.cfs_quota_us. For example, the value returned by Java's Runtime.getRuntime().availableProcessors() depends on this quota. Therefore, when the CPU quota is dynamically adjusted, the number of cores that the application retrieves also changes, and parameters that depend on this value, such as the thread pool size, become unstable.

We recommend that you modify your application to rely on the fixed limits.cpu value defined in the pod.

Inject environment variables: You can use resourceFieldRef to inject the limits.cpu value of the pod into the container as an environment variable.

    env:
    - name: CPU_LIMIT
      valueFrom:
        resourceFieldRef:
          resource: limits.cpu

Modify the application code: You can modify the application startup logic to prioritize reading the CPU_LIMIT environment variable to calculate and set the thread pool size. After this change, the application runs based on a stable and reliable value, regardless of how CPU Burst adjusts the quota.
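The second step might look like the following minimal sketch. It is written in Python for brevity, and the same idea applies to a JVM application. The helper `worker_count` and its fallback behavior are assumptions. Only the `CPU_LIMIT` variable name comes from the example above.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def worker_count(default: int = 1) -> int:
    """Size the thread pool from CPU_LIMIT instead of probing the cgroup quota."""
    raw = os.environ.get("CPU_LIMIT", "")
    try:
        cores = int(raw)
    except ValueError:
        return default  # variable missing or not an integer: use the fallback
    return max(cores, 1)

# The pool size stays fixed even if CPU Burst changes cpu.cfs_quota_us at run time.
with ThreadPoolExecutor(max_workers=worker_count()) as pool:
    results = list(pool.map(len, ["a", "bb", "ccc"]))
print(results)  # [1, 2, 3]
```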