Service Mesh (ASM) can work with Kubernetes Armada (Karmada for short), allowing you to deploy and run your cloud-native applications across multiple Kubernetes clusters, with no changes to your applications. Karmada provides ready-to-use automation for multi-cluster application management in cloud-native scenarios, with key features such as centralized multi-cloud management, high availability, fault recovery, and traffic scheduling. This topic describes how to use ASM and Karmada for multi-cluster application management.

Background information

Karmada uses Kubernetes-native APIs to define federated resource templates for easy integration with existing tools that have been adopted by Kubernetes. In addition, Karmada provides a separate Propagation (placement) Policy API to define multi-cluster scheduling requirements.

Karmada supports one-to-many (1:n) mapping from a policy to workloads. You do not need to indicate scheduling constraints each time you create federated applications.
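The one-to-many mapping can be pictured with a short Python sketch. It is only a model, not Karmada code: the `Resource` and `Selector` classes and the `select` helper are hypothetical names introduced here, and the rule that a selector without a name matches every resource of that kind is an assumption about how resource selectors behave.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resource:
    api_version: str
    kind: str
    name: str


@dataclass(frozen=True)
class Selector:
    api_version: str
    kind: str
    name: Optional[str] = None  # None means "any name" in this sketch

    def matches(self, resource: Resource) -> bool:
        # A resource matches when apiVersion and kind agree and the name,
        # if one is given, is identical.
        return (
            self.api_version == resource.api_version
            and self.kind == resource.kind
            and (self.name is None or self.name == resource.name)
        )


def select(selectors, resources):
    """Return the names of the resources matched by at least one selector."""
    return [r.name for r in resources if any(s.matches(r) for s in selectors)]


workloads = [
    Resource("apps/v1", "Deployment", "reviews-v1"),
    Resource("apps/v1", "Deployment", "reviews-v2"),
    Resource("v1", "Service", "reviews"),
]
# One hypothetical policy with a single selector covers both Deployments.
policy_selectors = [Selector("apps/v1", "Deployment")]
print(select(policy_selectors, workloads))  # ['reviews-v1', 'reviews-v2']
```

Because the policy is matched against workloads rather than embedded in them, a workload created later is covered by the same policy without restating the scheduling constraints.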

You can use the default policies to interact directly with Kubernetes-native APIs.

Cluster modes

Karmada supports two modes to manage member clusters: Push and Pull. The main difference between Push and Pull modes is the method of accessing member clusters when manifests are deployed.

Push mode

The Karmada control plane directly accesses the kube-apiserver of a member cluster to obtain the cluster status and deploy manifests.

Pull mode

The Karmada control plane does not access a member cluster directly. Instead, it delegates access to an extra component named karmada-agent.

Each karmada-agent serves one cluster and has the following responsibilities:

Registers the cluster to Karmada (creates a Cluster object).

Maintains cluster status and reports it to Karmada (updates the status of the Cluster object).

Watches manifests in the Karmada execution space (a namespace named in the format karmada-es-<cluster name>) and deploys the watched resources to the cluster that the agent serves.
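As a small illustration of the naming format above, the following Python sketch derives the execution space namespace for each member cluster used in this topic. The `execution_space` helper is a hypothetical name introduced here, not part of Karmada.

```python
def execution_space(cluster_name: str) -> str:
    """Return the execution space namespace for a member cluster.

    Follows the karmada-es-<cluster name> format described above.
    """
    if not cluster_name:
        raise ValueError("cluster name must not be empty")
    return f"karmada-es-{cluster_name}"


for name in ("member1", "member2"):
    print(execution_space(name))
```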

Key components

The Karmada control plane consists of the following components:

Karmada API Server

Karmada Controller Manager

Karmada Scheduler

etcd, an open source distributed key-value store, stores Karmada API objects. The Karmada API Server is the REST endpoint through which all other components communicate. The Karmada Controller Manager performs operations based on the API objects that you create through the Karmada API Server.

Prerequisites

Two Container Service for Kubernetes (ACK) clusters (member1 and member2 in this example) are created in the same virtual private cloud (VPC). For more information, see Create an ACK managed cluster.

Note: In this example, two clusters in the same VPC are used. If two clusters in different VPCs are used, additional configurations are required to enable cross-VPC communication between the two clusters.

When you create a cluster, we recommend that you configure advanced security groups for the cluster.

An ASM instance (mesh1 in this example) is created, and a sidecar proxy injection policy is configured in the default namespace. For more information about how to create an ASM instance, see Create an ASM instance. For more information about how to configure a sidecar proxy injection policy, see Configure sidecar proxy injection policies.

A Karmada primary cluster (karmada-master in this example) is deployed and the two ACK clusters (member1 and member2 in this example) are added to the Karmada primary cluster as member clusters. For more information, see Installation Overview.

The two ACK clusters are added to the ASM instance (mesh1 in this example) and a serverless ingress gateway is created. For more information, see the "Step 2: Add the clusters to the ASM instance and create a serverless ingress gateway" section in Use an ASM serverless gateway to act as a single entry point for access to multiple clusters.

Step 1: Use Karmada to deploy applications in multiple clusters

The sample application Bookinfo, as described in Use an ASM serverless gateway to act as a single entry point for access to multiple clusters, is used in this example. You do not need to manually deploy reviews-v3 and reviews-v1 in one cluster and deploy reviews-v2 in the other cluster. This section demonstrates how to create Karmada-based propagation policies in the karmada-master cluster to achieve the desired version deployment results.

Create a bookinfo-karmada.yaml file with the following content:

Run the following command to deploy the Bookinfo application in the Karmada primary cluster:

    kubectl --kubeconfig /etc/karmada/karmada-apiserver.config apply -f bookinfo-karmada.yaml

Note: By default, the kubeconfig file of the Karmada primary cluster is saved in /etc/karmada/karmada-apiserver.config. If you select another installation location when you deploy the primary cluster, replace the path following --kubeconfig with the actual path.

Create a propagation.yaml file with the following content:

    # Services: propagated to both member clusters.
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: service-propagation
    spec:
      resourceSelectors:
        - apiVersion: v1
          kind: Service
          name: productpage
        - apiVersion: v1
          kind: Service
          name: details
        - apiVersion: v1
          kind: Service
          name: reviews
        - apiVersion: v1
          kind: Service
          name: ratings
      placement:
        clusterAffinity:
          clusterNames:
            - member1
            - member2
    ---
    # productpage: member1 only.
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: produtpage-propagation
    spec:
      resourceSelectors:
        - apiVersion: apps/v1
          kind: Deployment
          name: productpage-v1
        - apiVersion: v1
          kind: ServiceAccount
          name: bookinfo-productpage
      placement:
        clusterAffinity:
          clusterNames:
            - member1
    ---
    # details: member2 only.
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: details-propagation
    spec:
      resourceSelectors:
        - apiVersion: apps/v1
          kind: Deployment
          name: details-v1
        - apiVersion: v1
          kind: ServiceAccount
          name: bookinfo-details
      placement:
        clusterAffinity:
          clusterNames:
            - member2
    ---
    # reviews (v1, v2, and v3): both member clusters.
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: reviews-propagation
    spec:
      resourceSelectors:
        - apiVersion: apps/v1
          kind: Deployment
          name: reviews-v1
        - apiVersion: apps/v1
          kind: Deployment
          name: reviews-v2
        - apiVersion: apps/v1
          kind: Deployment
          name: reviews-v3
        - apiVersion: v1
          kind: ServiceAccount
          name: bookinfo-reviews
      placement:
        clusterAffinity:
          clusterNames:
            - member1
            - member2
    ---
    # ratings: every cluster except member1.
    apiVersion: policy.karmada.io/v1alpha1
    kind: PropagationPolicy
    metadata:
      name: ratings-propagation
    spec:
      resourceSelectors:
        - apiVersion: apps/v1
          kind: Deployment
          name: ratings-v1
        - apiVersion: v1
          kind: ServiceAccount
          name: bookinfo-ratings
      placement:
        clusterAffinity:
          exclude:
            - member1

The .spec.placement.clusterAffinity field of a PropagationPolicy restricts scheduling to particular clusters. Without this restriction, any cluster can be a scheduling candidate. The clusterAffinity field has four fields that can be configured.

| Field | Description |
| --- | --- |
| LabelSelector | A filter that is used to select member clusters by labels. If it is not empty and is not nil, only the clusters that match this filter are selected. |
| FieldSelector | A filter that is used to select member clusters by fields. If it is not empty and is not nil, only the clusters that match this filter are selected. |
| ClusterNames | You can set the ClusterNames field to specify the selected clusters. |
| ExcludeClusters | You can set the ExcludeClusters field to specify the excluded clusters. In YAML, this field is written as exclude, as in the ratings-propagation policy. |

In this example, only the ClusterNames and ExcludeClusters fields are used. For more information about the .spec.placement.clusterAffinity fields, see Resource Propagating.

Run the following command to deploy the propagation policy:

    kubectl --kubeconfig /etc/karmada/karmada-apiserver.config apply -f propagation.yaml

Use kubectl to connect to member1 and member2 with their respective kubeconfig files and view the Deployments in each cluster.

member1

    kubectl --kubeconfig member1 get deployment

Expected output:

    NAME             READY   UP-TO-DATE   AVAILABLE   AGE
    productpage-v1   1/1     1            1           12m
    reviews-v1       1/1     1            1           12m
    reviews-v2       1/1     1            1           12m
    reviews-v3       1/1     1            1           12m

member2

    kubectl --kubeconfig member2 get deployment

Expected output:

    NAME         READY   UP-TO-DATE   AVAILABLE   AGE
    details-v1   1/1     1            1           16m
    ratings-v1   1/1     1            1           16m
    reviews-v1   1/1     1            1           16m
    reviews-v2   1/1     1            1           16m
    reviews-v3   1/1     1            1           16m
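The placement seen in both clusters follows from the clusterNames and exclude settings of the propagation policies in this step. The Python sketch below is a simplified model of that filtering, not the Karmada scheduler: it ignores label and field selectors and every other scheduling factor, and it assumes that each selected cluster receives a full copy of the Deployment. The `candidates` and `placement` helpers are hypothetical names introduced here.

```python
MEMBERS = ["member1", "member2"]


def candidates(affinity, members=MEMBERS):
    """Filter member clusters by clusterNames (allow list) and exclude (deny list)."""
    names = affinity.get("clusterNames")
    # Without clusterNames, every member cluster is a candidate.
    selected = [m for m in members if not names or m in names]
    excluded = set(affinity.get("exclude", []))
    return [m for m in selected if m not in excluded]


# Deployments and clusterAffinity settings copied from propagation.yaml.
POLICIES = {
    "produtpage-propagation": (["productpage-v1"], {"clusterNames": ["member1"]}),
    "details-propagation": (["details-v1"], {"clusterNames": ["member2"]}),
    "reviews-propagation": (
        ["reviews-v1", "reviews-v2", "reviews-v3"],
        {"clusterNames": ["member1", "member2"]},
    ),
    "ratings-propagation": (["ratings-v1"], {"exclude": ["member1"]}),
}


def placement(policies):
    """Map each member cluster to the sorted Deployments it should receive."""
    result = {m: [] for m in MEMBERS}
    for deployments, affinity in policies.values():
        for cluster in candidates(affinity):
            result[cluster].extend(deployments)
    return {m: sorted(names) for m, names in result.items()}


for cluster, deployments in placement(POLICIES).items():
    print(cluster, deployments)
```

Under these assumptions, the model yields the same Deployment names per cluster as the kubectl output above, which makes it a quick way to reason about a policy change before you apply it.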

Step 2: Create a virtual service and an Istio gateway

In the default namespace, create a virtual service named bookinfo with the following content. For more information, see Manage virtual services.

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: bookinfo
    spec:
      hosts:
        - "*"
      gateways:
        - bookinfo-gateway
      http:
        - match:
            - uri:
                exact: /productpage
            - uri:
                prefix: /static
            - uri:
                exact: /login
            - uri:
                exact: /logout
            - uri:
                prefix: /api/v1/products
          route:
            - destination:
                host: productpage
                port:
                  number: 9080

In the default namespace, create an Istio gateway named bookinfo-gateway with the following content. For more information, see Manage Istio gateways.

    apiVersion: networking.istio.io/v1alpha3
    kind: Gateway
    metadata:
      name: bookinfo-gateway
    spec:
      selector:
        istio: ingressgateway # use istio default controller
      servers:
        - port:
            number: 80
            name: http
            protocol: HTTP
          hosts:
            - "*"
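To see which request paths the bookinfo virtual service forwards to productpage, the match rules can be modeled in a few lines of Python. This is a simplified reading of the exact and prefix rules only; it ignores query strings, hosts, and any other matching detail of the mesh. `RULES` and `is_routed` are hypothetical names introduced here.

```python
# (match type, value) pairs copied from the bookinfo virtual service.
RULES = [
    ("exact", "/productpage"),
    ("prefix", "/static"),
    ("exact", "/login"),
    ("exact", "/logout"),
    ("prefix", "/api/v1/products"),
]


def is_routed(path: str, rules=RULES) -> bool:
    """Return True if the path satisfies at least one match rule."""
    for kind, value in rules:
        if kind == "exact" and path == value:
            return True
        if kind == "prefix" and path.startswith(value):
            return True
    return False


for path in ("/productpage", "/static/bootstrap.css", "/reviews/1"):
    print(path, is_routed(path))
```

The rules are alternatives: a request needs to satisfy only one of them to be forwarded to port 9080 of productpage.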

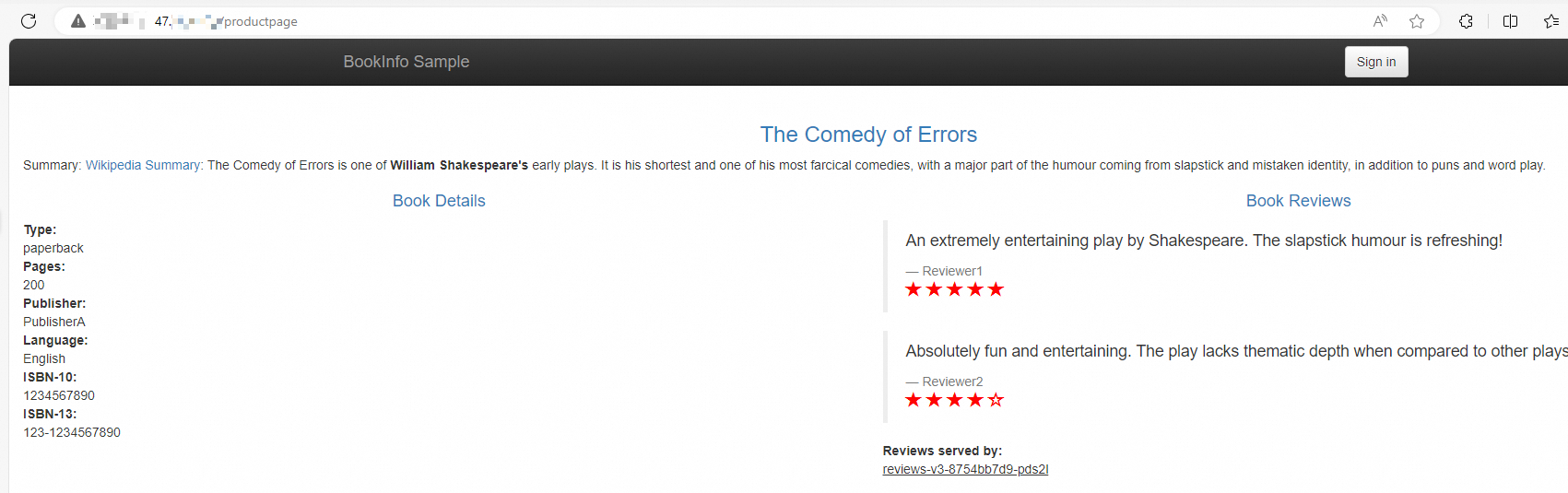

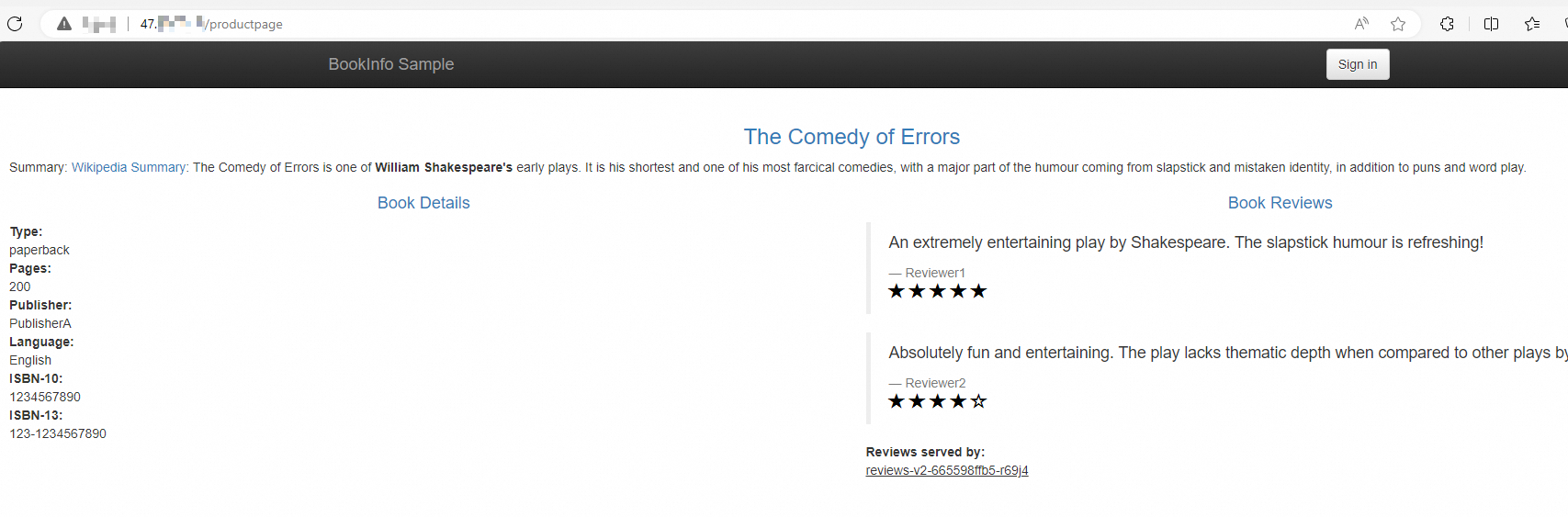

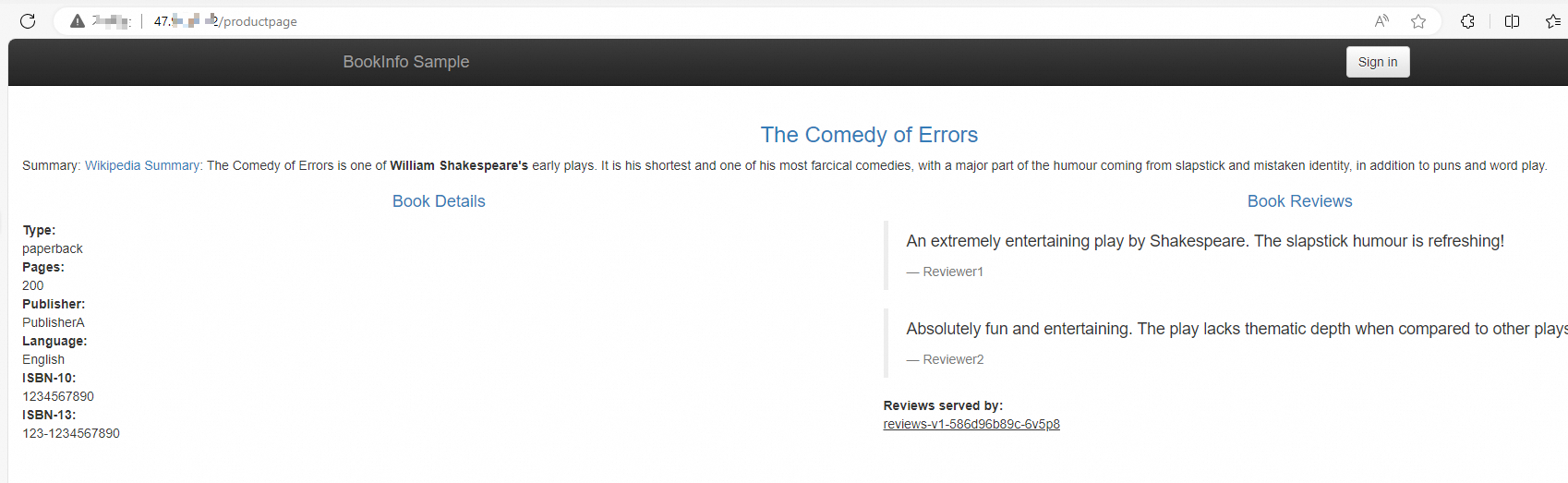

Step 3: Perform access tests

Obtain the IP address of the ingress gateway. For more information, see substep 1 of Step 3 in the Use Istio resources to route traffic to different versions of a service topic.

In the address bar of your browser, enter http://{IP address of the serverless ingress gateway}/productpage and refresh the page multiple times. You can see that the ratio of the requests routed to the three versions of the reviews microservice is close to 1:1:1. The page is served normally even though the microservices are spread across the two clusters: productpage runs only in member1, whereas details and ratings run only in member2.
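Refreshing the page by hand can be replaced with a short script that counts which reviews version answered each request. The sketch below uses only the Python standard library. It assumes that the versions can be told apart by the star markup in the returned HTML (red stars for v3, black stars for v2, no stars for v1); the exact `color="..."` markers are an assumption about the Bookinfo page and may need adjusting for your Bookinfo build. The `classify`, `fetch`, and `sample` helpers are hypothetical names introduced here.

```python
from collections import Counter
from urllib.request import urlopen


def classify(html: str) -> str:
    """Guess the reviews version from the productpage HTML.

    Assumption: v3 renders red stars, v2 black stars, and v1 no stars.
    """
    if 'color="red"' in html:
        return "v3"
    if 'color="black"' in html:
        return "v2"
    return "v1"


def fetch(url: str) -> str:
    """Download a page and return its body as text."""
    with urlopen(url, timeout=5) as response:
        return response.read().decode("utf-8", errors="replace")


def sample(url: str, n: int = 30, fetch_page=fetch) -> Counter:
    """Request the page n times and count the reviews version seen each time."""
    return Counter(classify(fetch_page(url)) for _ in range(n))
```

For example, calling `sample("http://<IP address of the serverless ingress gateway>/productpage")` returns a counter of versions. With enough requests, the three counts should be roughly equal if traffic is spread as described above.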