This topic describes how to use Managed Service for Prometheus to monitor Alibaba Cloud E-MapReduce (EMR).

Prerequisites

An EMR cluster is created. For more information, see Create a cluster.

Limits

You can install the component only in Prometheus instances for ECS.

Step 1: Enable the Prometheus port for taihao-exporter

After you create an EMR cluster, the system automatically installs taihao-exporter in the corresponding Elastic Compute Service (ECS) instance. You must manually enable the Prometheus port.

- Log on to the EMR console. In the left-side navigation pane, click EMR on ECS. On the EMR on ECS page, obtain the ID of the EMR cluster, and then click the name of the cluster.

- Click the Nodes tab. Find the master node and core node, and click Details. In the Basic Information section of the Instance Details tab, click Connect to remotely log on to the ECS instance.

- Run the following command to query the exporter process.

ps -ef | grep taihao_exporter

- Run the following commands to set prom_sink_enable to true in the taihao_exporter.yaml file and restart the service:

sed -i 's/prom_sink_enable:\s*false/prom_sink_enable: true/g' /usr/local/taihao_exporter/taihao_exporter.yaml

service taihao_exporter restart

Note: You need to modify the configurations of all nodes.
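
The sed expression in this step only rewrites an existing prom_sink_enable entry whose value is false. If the key is missing from the file, nothing changes. The following Python sketch mirrors that substitution on a string, which may help when checking a copy of the file contents before you change a node. The function name `enable_prom_sink` and the sample `log_level` key are illustrative assumptions and are not part of taihao_exporter.

```python
import re
from typing import Tuple

# Same idea as the sed pattern, limited to spaces and tabs so a match never spans lines.
_PATTERN = re.compile(r"prom_sink_enable:[ \t]*false")


def enable_prom_sink(yaml_text: str) -> Tuple[str, int]:
    """Return the rewritten text and the number of replacements that were made."""
    return _PATTERN.subn("prom_sink_enable: true", yaml_text)


# Hypothetical file contents used only for illustration.
sample = "log_level: info\nprom_sink_enable:   false\n"
updated, count = enable_prom_sink(sample)
print(updated, count)
```

A replacement count of 0 means that the file either already has the setting enabled or does not contain the key at all, so it is worth inspecting the file in that case.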

Step 2: Integrate EMR into Managed Service for Prometheus

Entry points

Entry point 1

- Log on to the ARMS console.

- In the left-side navigation pane, choose .

- Click the name of the Prometheus instance that you want to manage to go to the Integration Center page.

Entry point 2

- Log on to the Application Real-time Monitoring Service (ARMS) console.

- In the left-side navigation pane, click Integration Center. In the Application Components section, find the E-MapReduce component and click Add. In the panel that appears, follow the instructions to add the component.

Configure the EMR component

This section describes how to configure the EMR component in the integration center of the Prometheus instance. Perform the following steps:

- Add the EMR component.

- If you install the EMR component for the first time, perform the following operation:

In the uninstalled section of the Integration Center page, find the E-MapReduce component and click Install.

Note: You can click the component to view the common EMR metrics and dashboard thumbnails in the panel that appears. The metrics listed are for reference only. After you install the EMR component, you can view the actual metrics. For more information, see E-MapReduce monitoring metrics.

- If you have already installed the EMR component, you must add the component again.

In the Installed section of the Integration Center page, find the E-MapReduce component and click Add.

- On the Configurations tab in the STEP2 section, configure the parameters and click OK. The following table describes the parameters.

| Parameter | Description |
|---|---|
| EMR Cluster ID | The EMR cluster ID obtained in Step 1: Enable the Prometheus port for taihao-exporter. |
| EMR Cluster Name | The name of the EMR cluster. |
| Exporter Name | The name of the current exporter. The name can contain only lowercase letters, digits, and hyphens (-), and cannot start or end with a hyphen (-). The name must be unique. |
| Exporter Port Number | The listening port of the metrics. Managed Service for Prometheus accesses the port to obtain metric data. Default value: 9712. |
| Metrics Path | The HTTP path used by Managed Service for Prometheus to collect metric data from the exporter. Default value: /metrics_preget. |
| Metrics Collection Interval (Seconds) | The interval at which EMR metrics are collected. Default value: 30. |
| ECS Tag (Service Discovery) | The ECS tag that is used to deploy the exporter. Managed Service for Prometheus uses this tag for service discovery. Valid values: acs:emr:nodeGroupType or acs:emr:hostGroupType. |
| ECS Tag Value | The ECS tag values. Default values: CORE,MASTER. Separate multiple values with commas (,). |

Note: You can view the monitoring metrics on the Metrics tab in the STEP2 section.

The installed components are displayed in the Installed section of the Integration Center page. Click the component. In the panel that appears, you can view information such as targets, metrics, dashboards, alerts, service discovery configurations, and exporters. For more information, see Integration center.
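
As a rough illustration of the naming rule and the default port and path described in the parameter table, the following Python sketch validates an exporter name and assembles the URL that a scrape of one node would target. The helper names `is_valid_exporter_name` and `scrape_url`, the `http` scheme, and the sample IP address are assumptions made for this example. The console performs its own validation and service discovery.

```python
import re

# Lowercase letters, digits, and hyphens only; must not start or end with a hyphen.
_NAME_RE = re.compile(r"[a-z0-9](?:[a-z0-9-]*[a-z0-9])?")


def is_valid_exporter_name(name: str) -> bool:
    """Check a candidate exporter name against the documented character rules."""
    return _NAME_RE.fullmatch(name) is not None


def scrape_url(host: str, port: int = 9712, path: str = "/metrics_preget") -> str:
    """Build the metrics URL for one node from the documented default port and path."""
    return f"http://{host}:{port}{path}"  # the http scheme is an assumption


print(is_valid_exporter_name("emr-exporter-1"))
print(scrape_url("192.168.0.10"))  # hypothetical private IP address of a node
```

Uniqueness of the name is not checked here because it depends on the exporters that already exist in the Prometheus instance.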

Step 3: View monitoring data

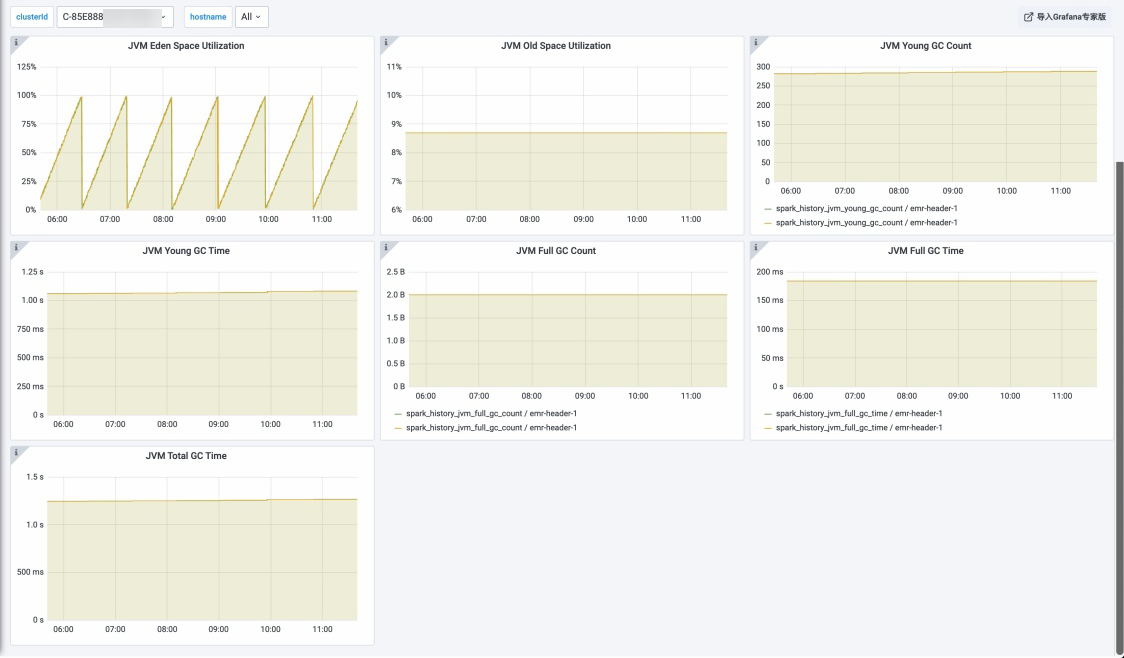

Managed Service for Prometheus provides more than 20 Grafana dashboards for E-MapReduce, such as HOST, HDFS, Hive, YARN, Impala, ZooKeeper, Spark, Flink, and ClickHouse.

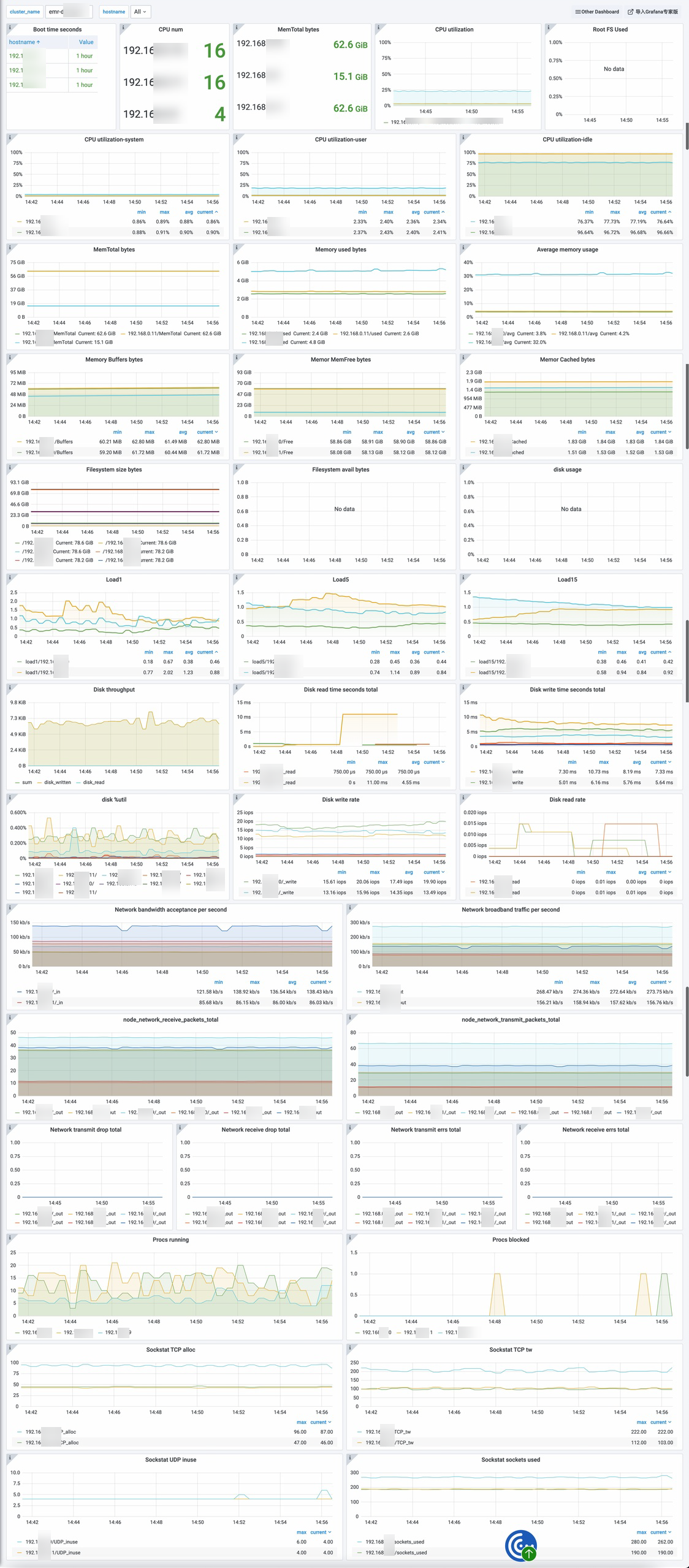

- HOST dashboard: displays the CPU utilization, memory usage, disk space, load, network, and socket of the ECS instance.

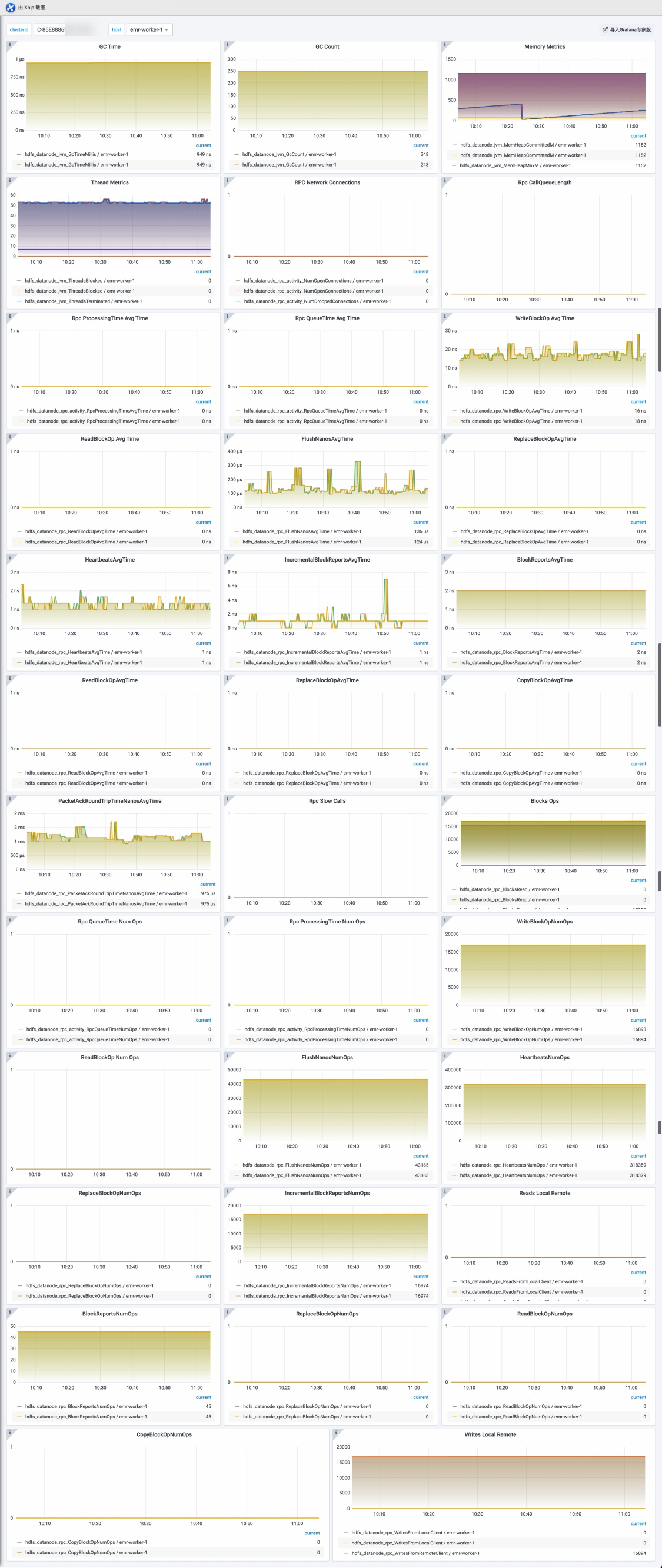

- HDFS dashboard

- HDFS-HOME

- HDFS-NameNodes

- HDFS-DataNodes

- HDFS-JournalNodes

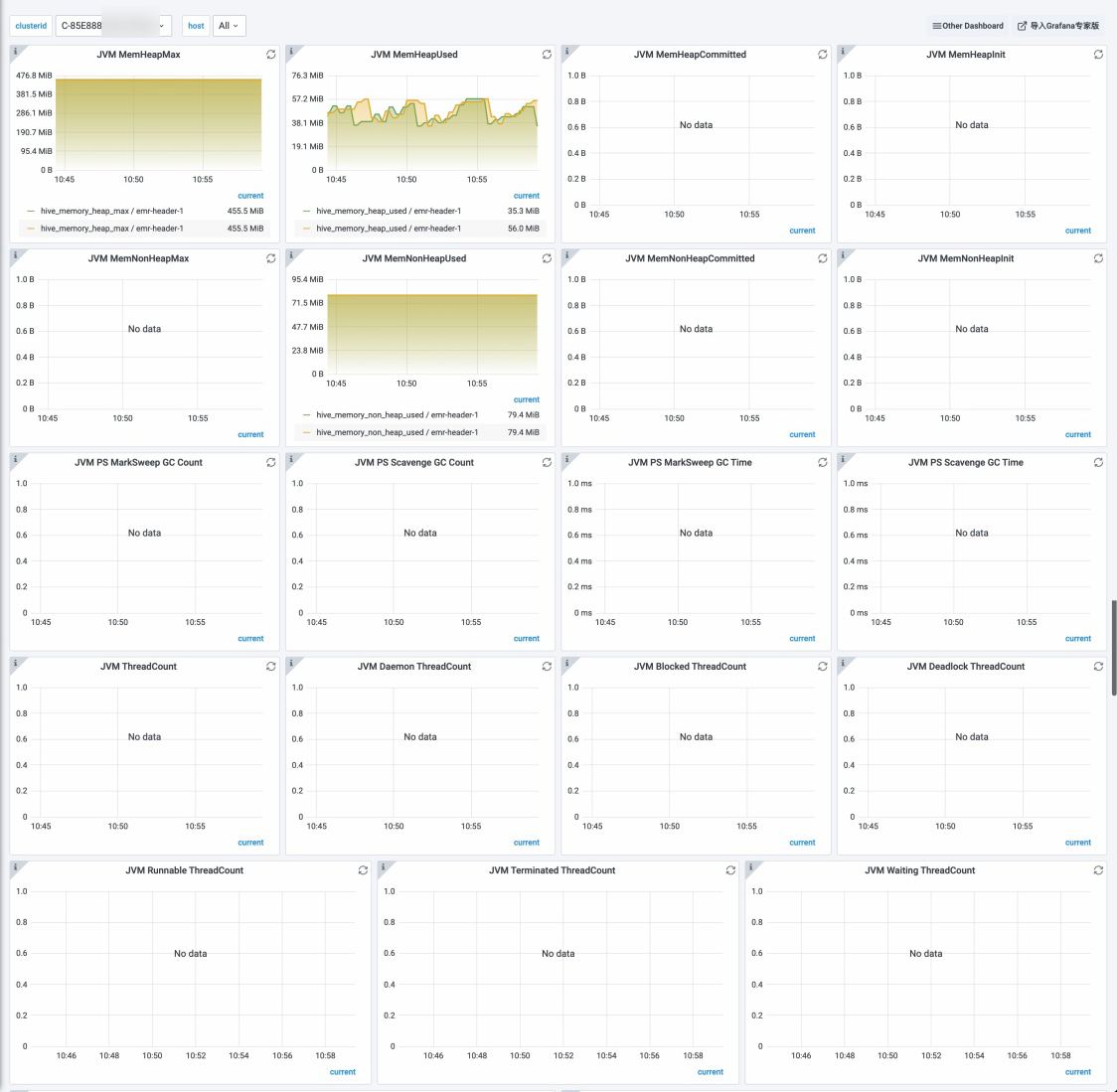

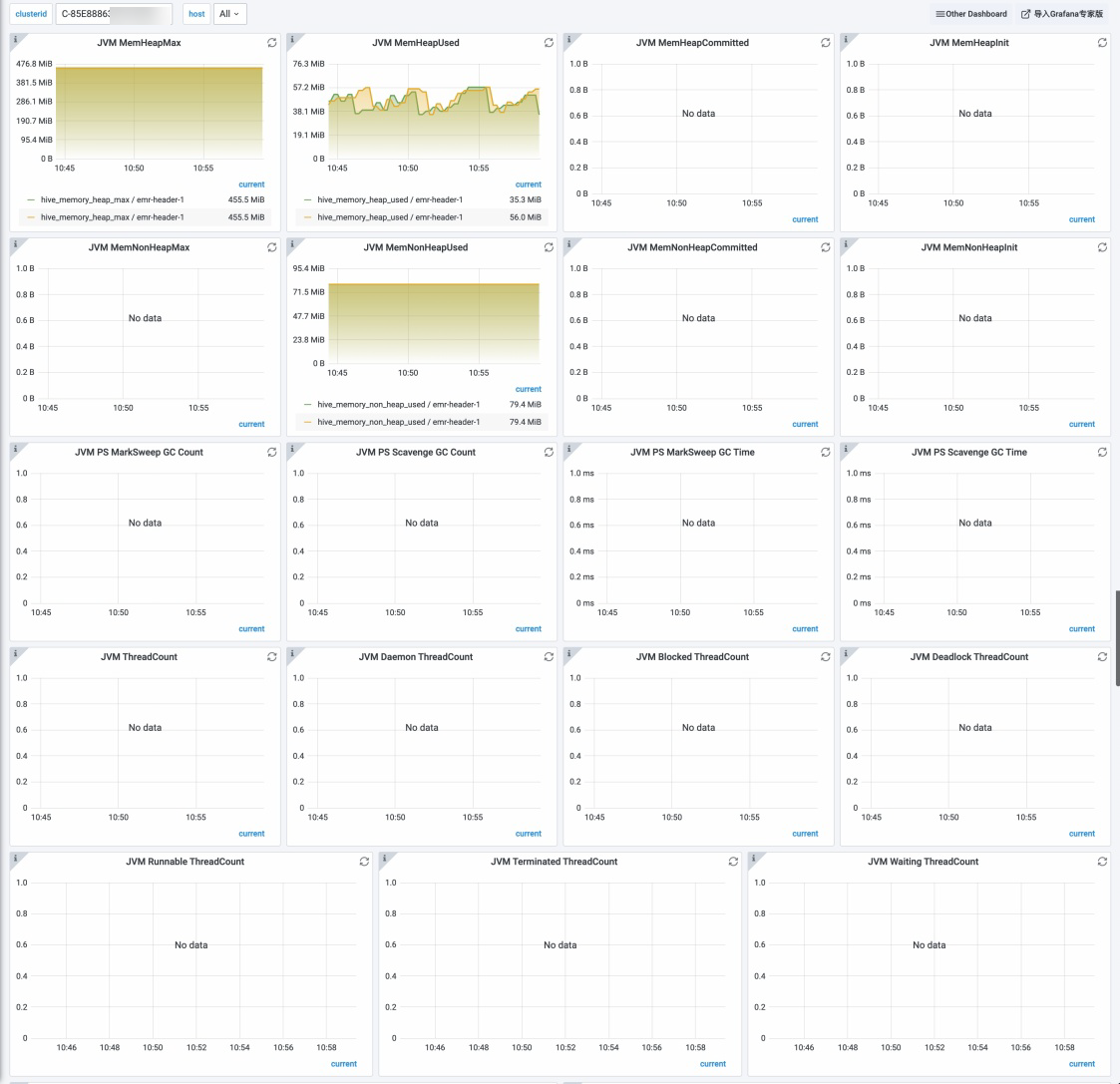

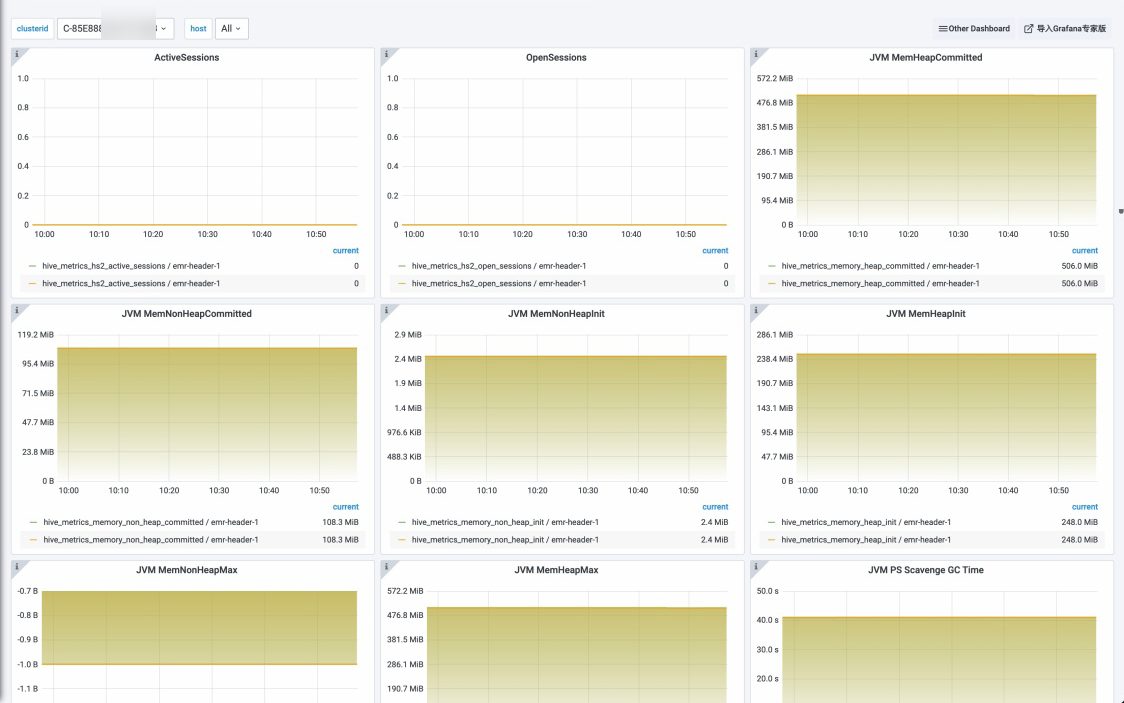

- Hive dashboard

- HiveServer2: the HiveQL query server that receives SQL requests from JDBC clients.

- HiveMetaStore: the metadata management module that stores metadata such as database and table information.

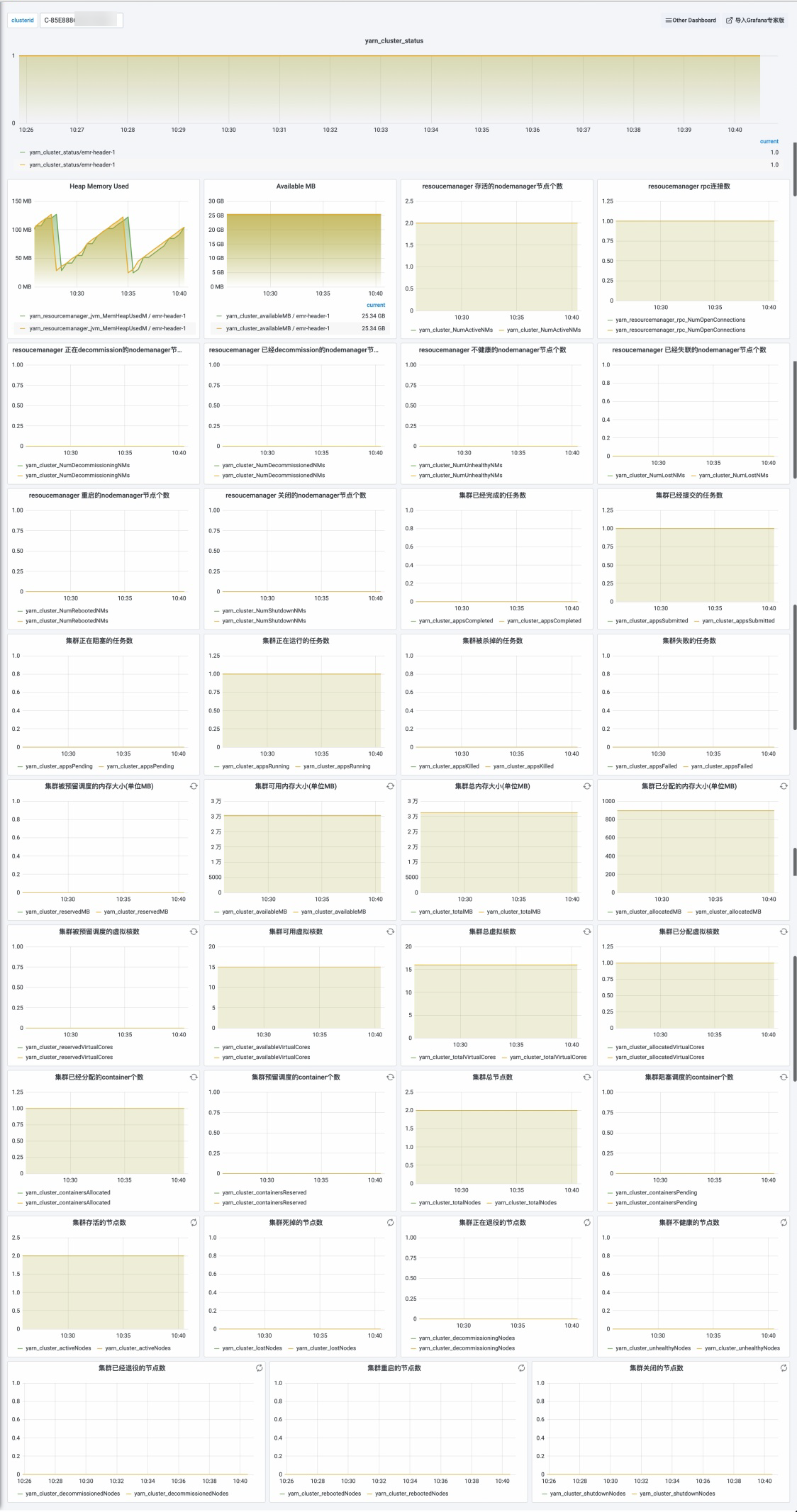

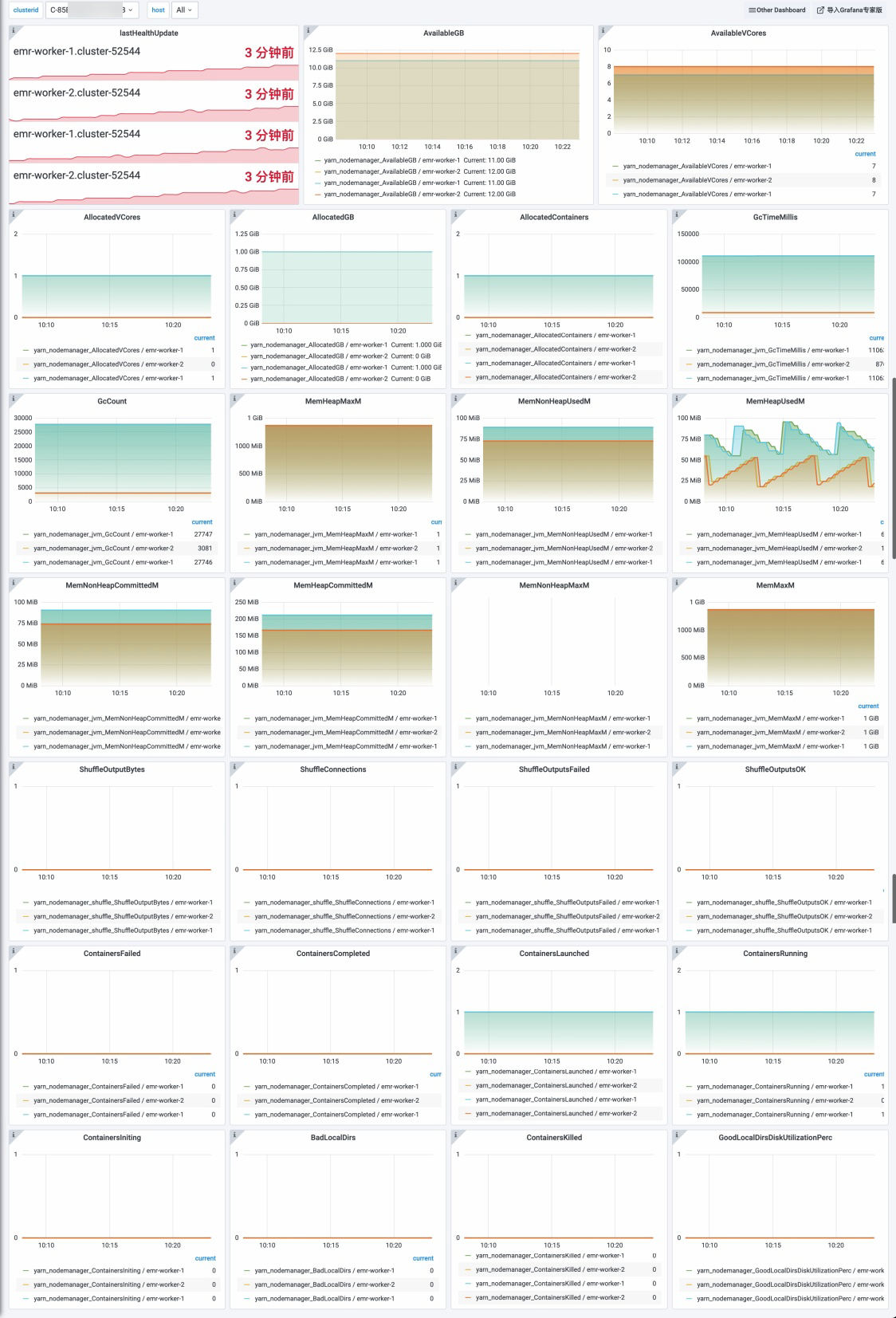

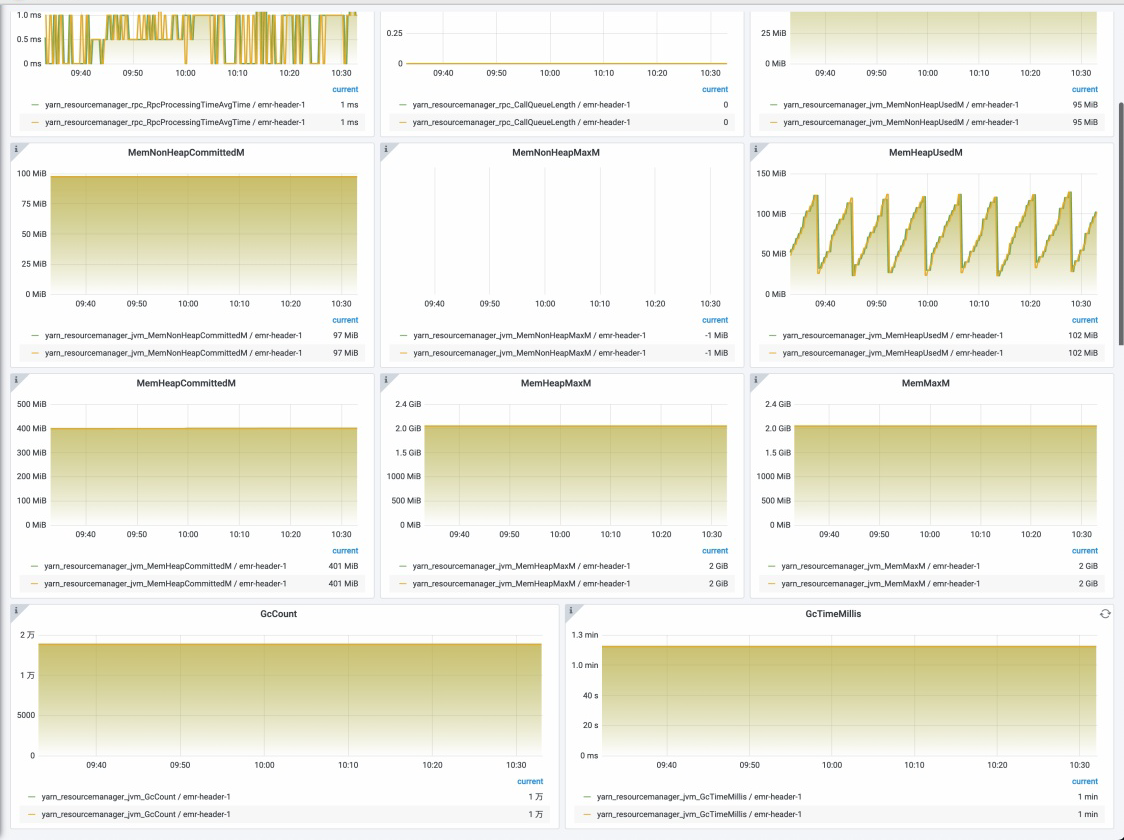

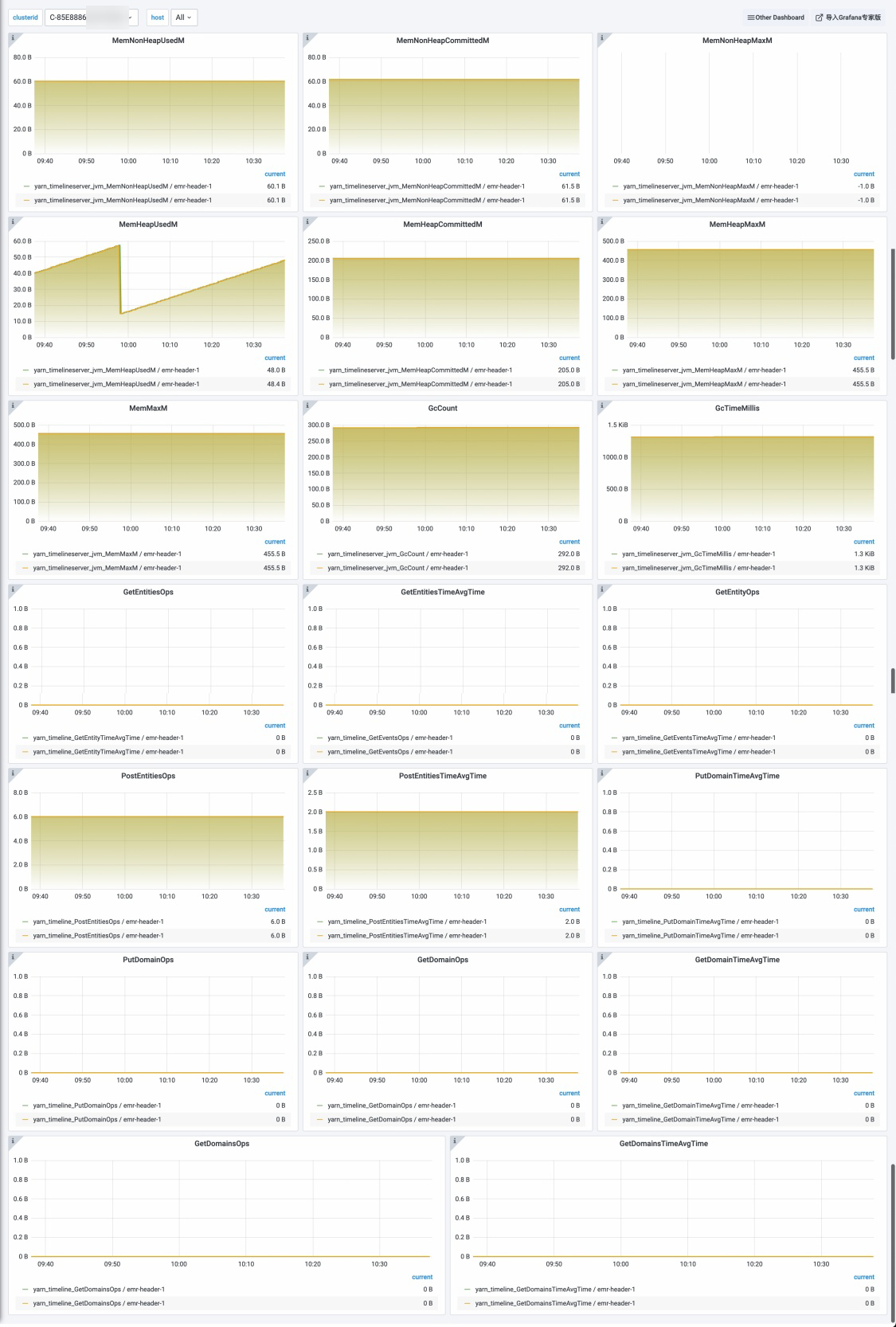

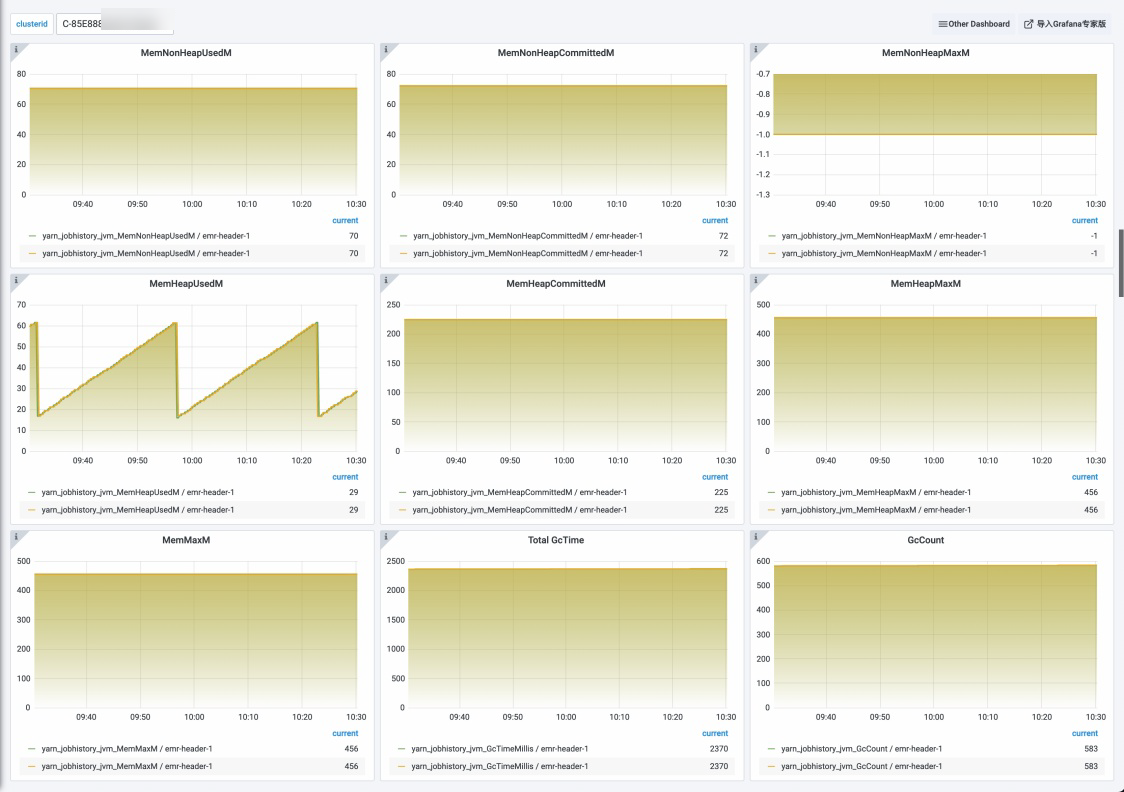

- YARN dashboard

- HOME: displays the cluster status, memory, tasks, nodes, and containers.

- NodeManager: manages and monitors node resources and executes jobs on nodes.

- ResourceManager: manages and schedules cluster resources and allocates resources for various types of jobs that are running on YARN.

- TimeLineServer: collects the metrics of a job and displays the job execution status.

- JobHistory

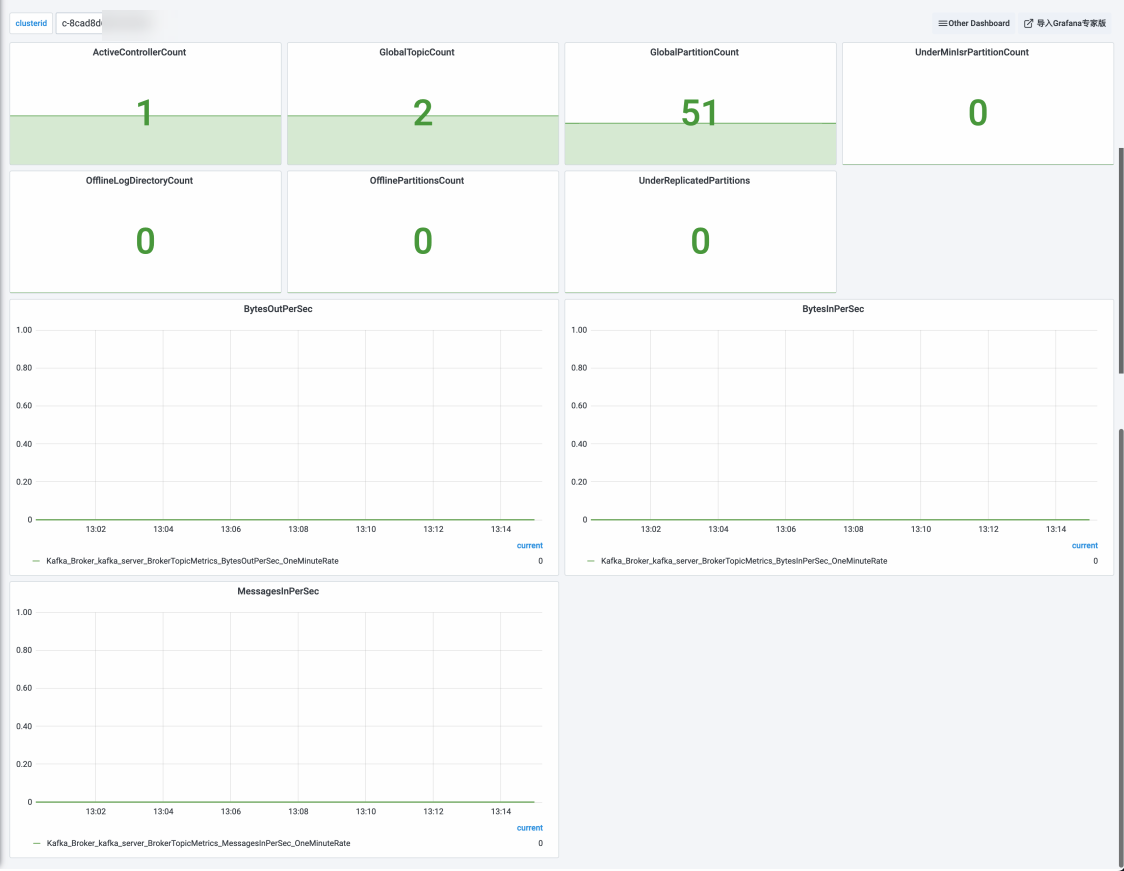

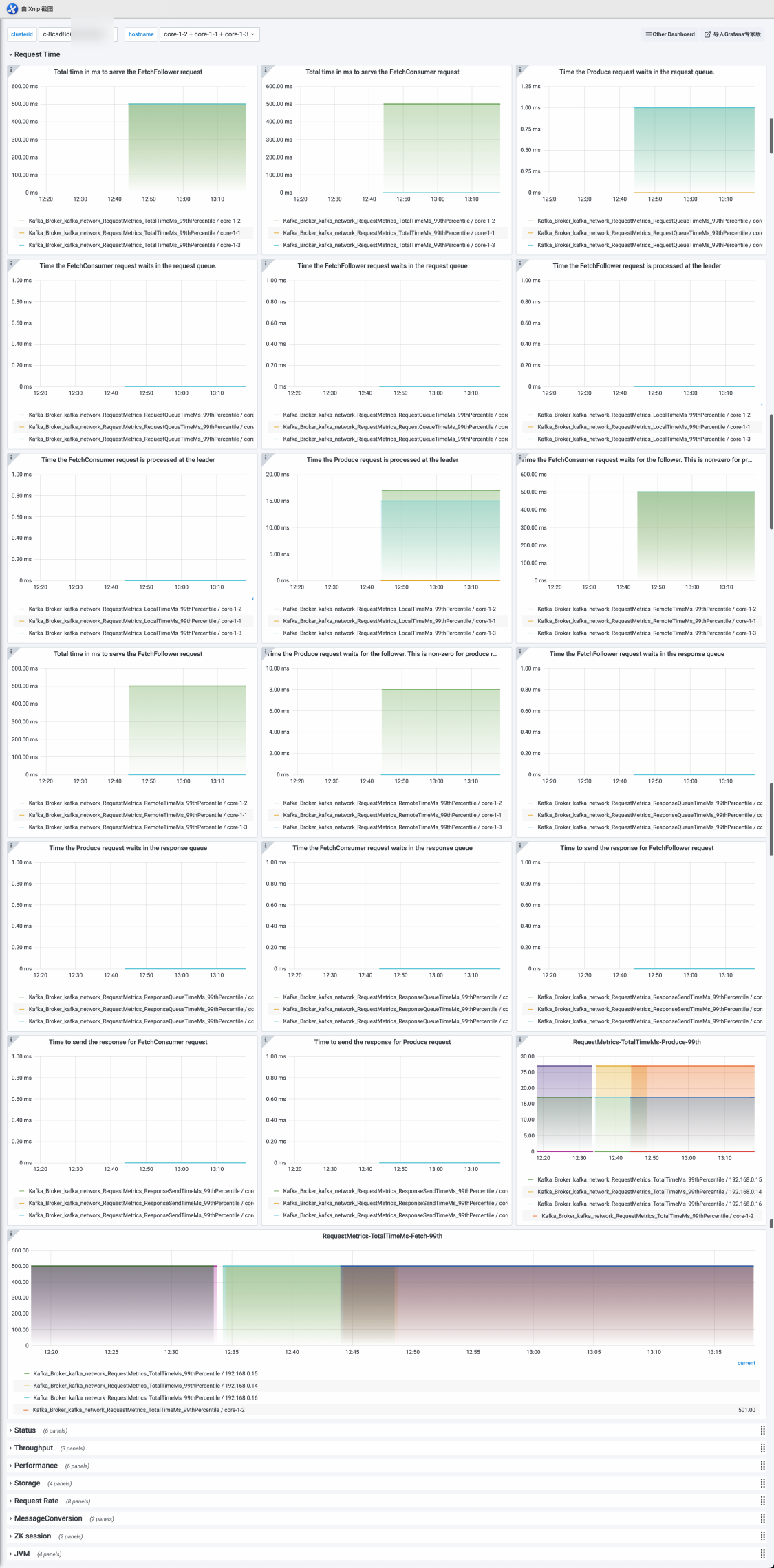

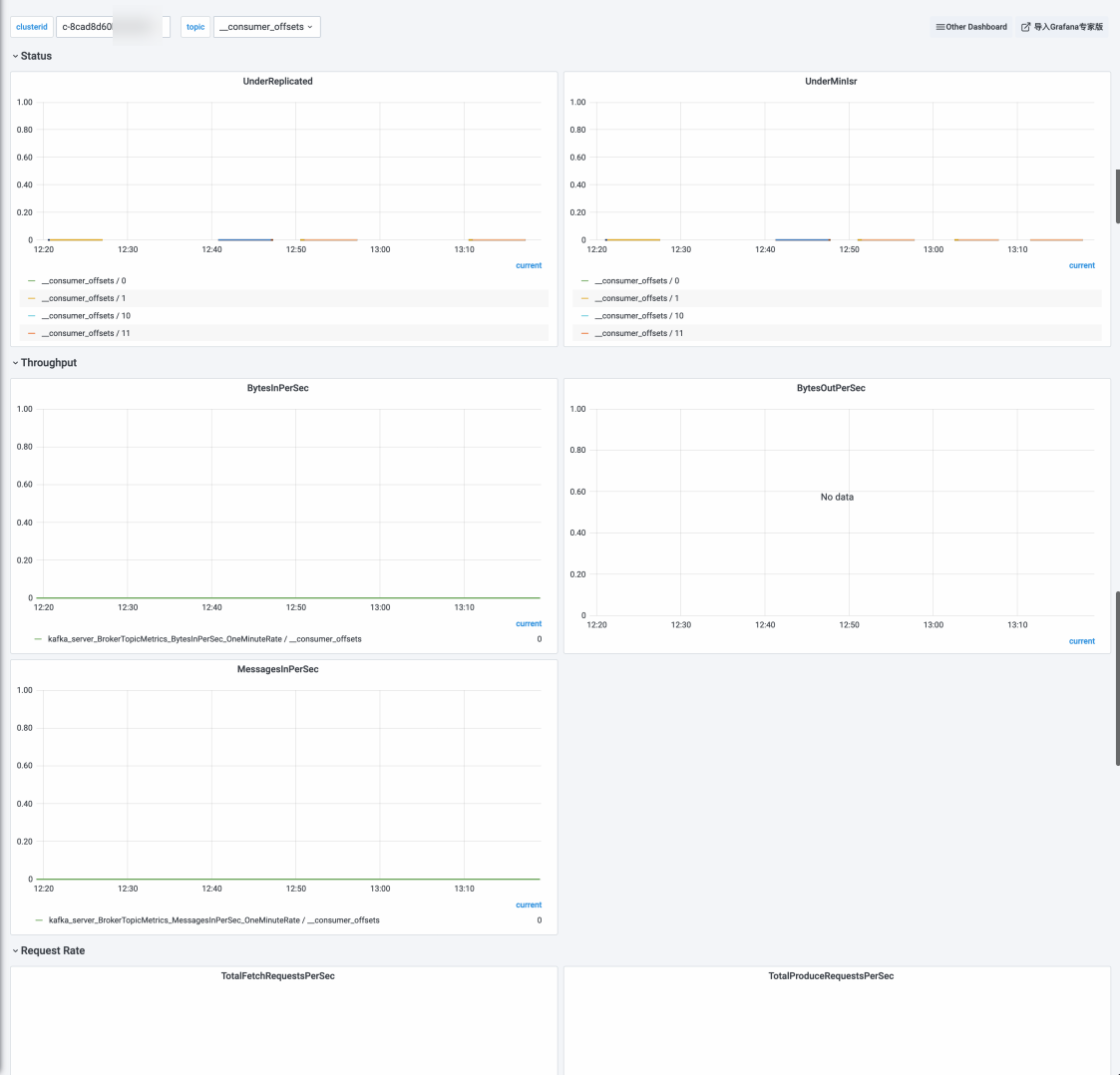

- Kafka dashboard

- KAFKA-HOME

- KAFKA-Broker

- KAFKA-Topic

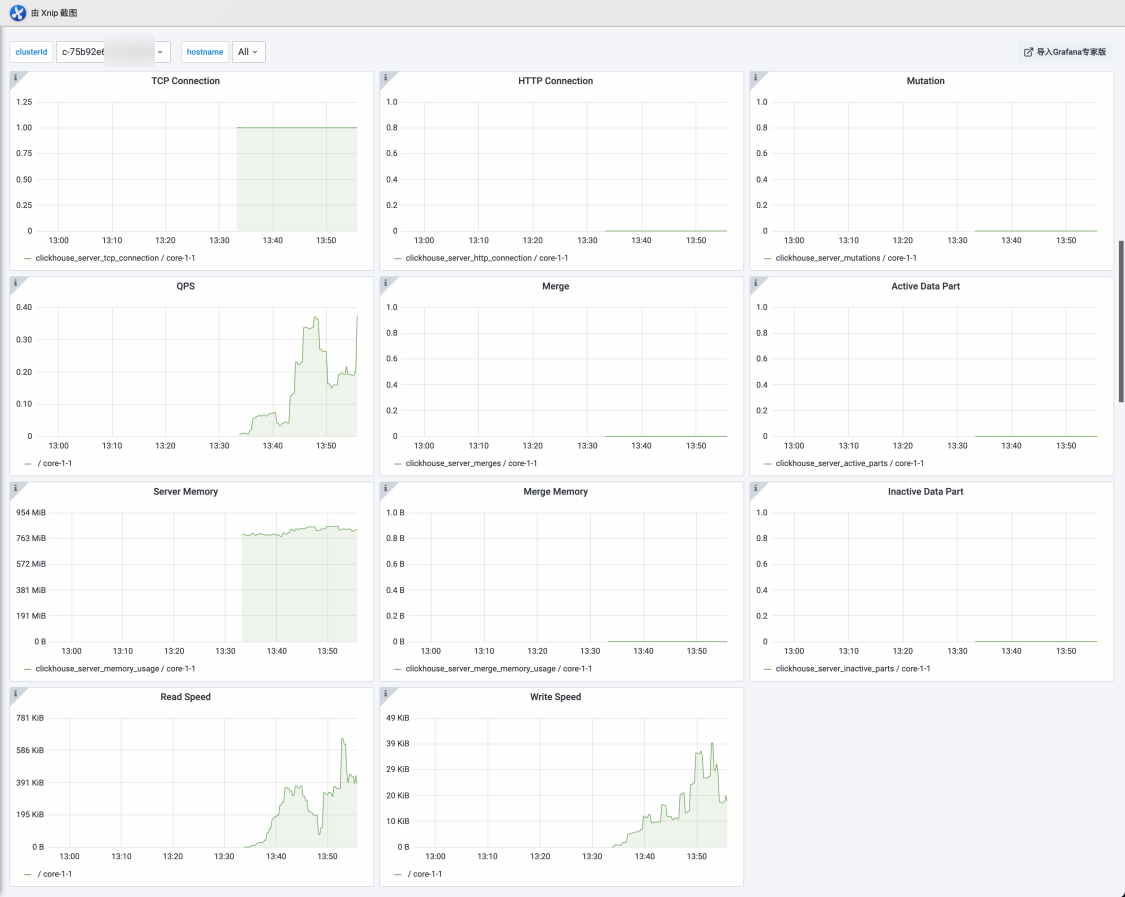

- ClickHouse dashboard

- Flink dashboard

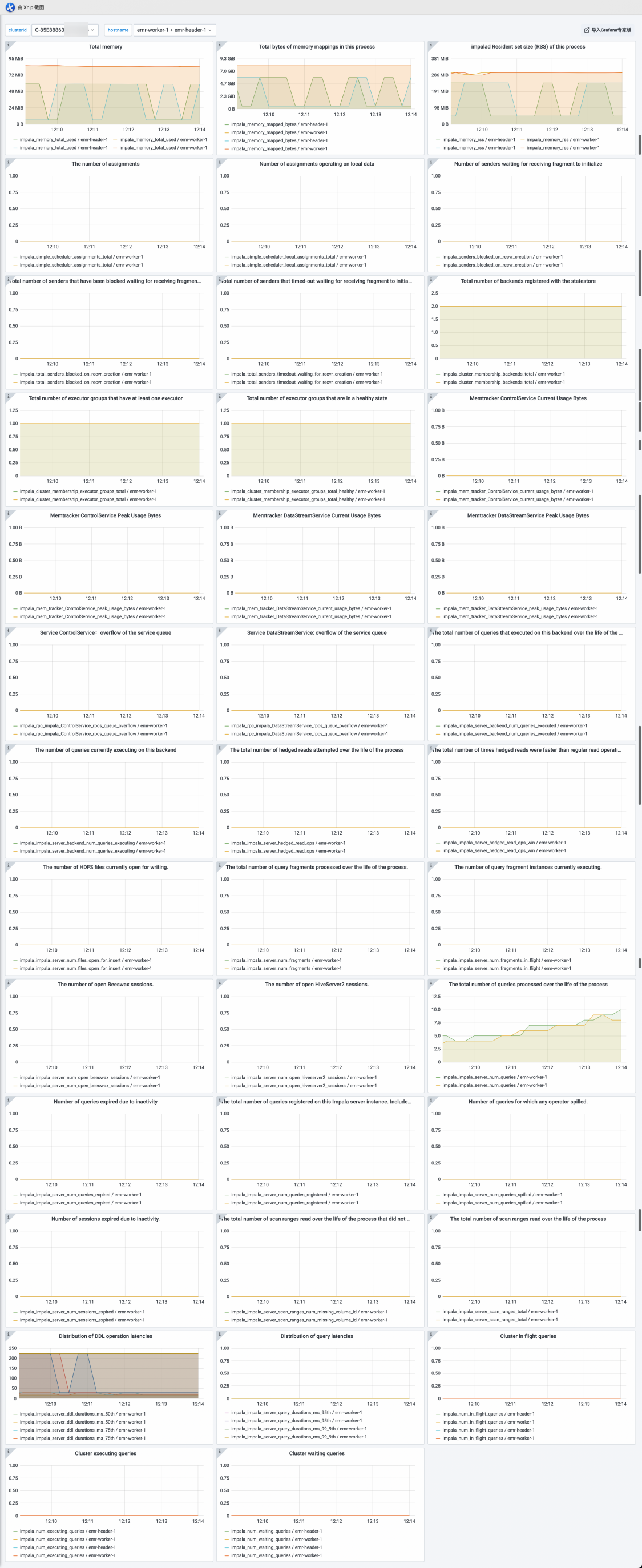

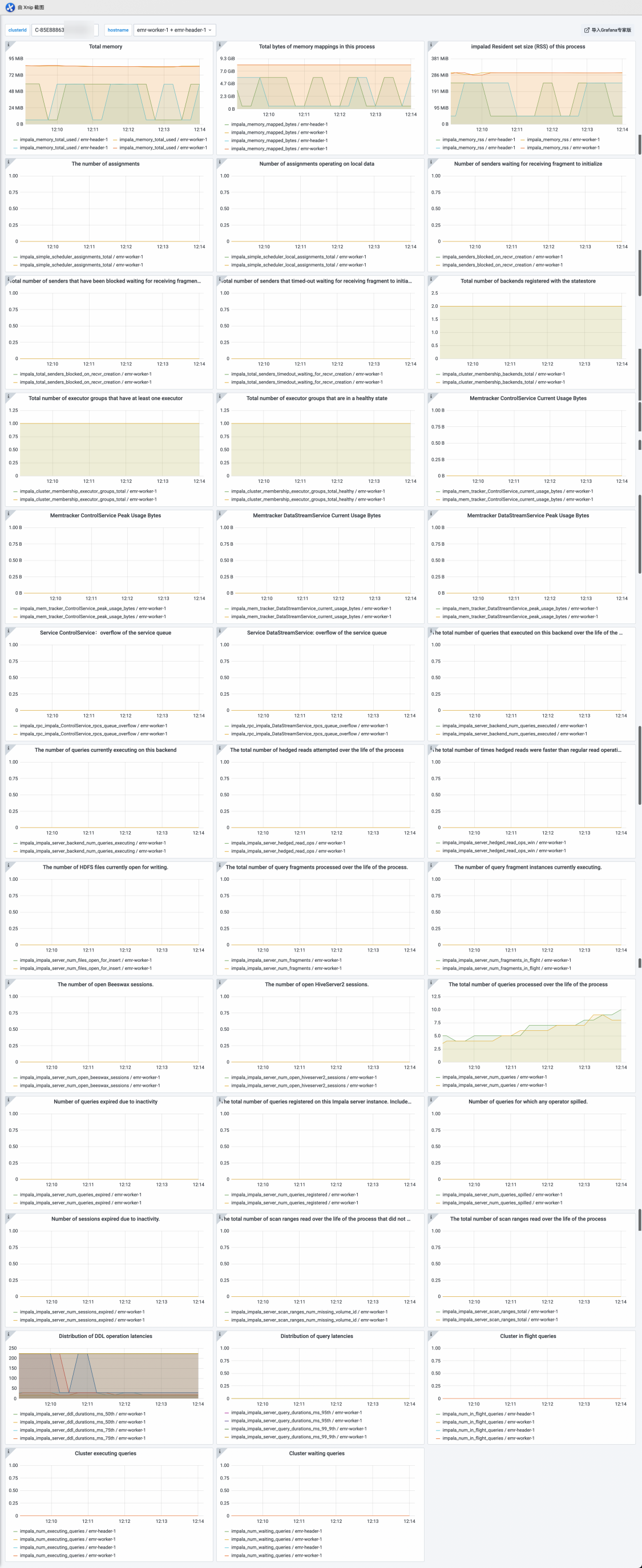

- Impala dashboard

- ZooKeeper dashboard

- Spark dashboard

E-MapReduce monitoring metrics

Metrics

Managed Service for Prometheus provides various Grafana dashboards for E-MapReduce, such as HOST, HDFS, Hive, YARN, Kafka, ZooKeeper, Flink, and ClickHouse.

HOST metrics

HOST metrics include the CPU utilization, memory usage, disk space, load, network, and sockets of the ECS instance.

HDFS metrics

Hadoop Distributed File System (HDFS) is suitable for distributed reading and writing of large-scale data, especially in read-heavy scenarios with few writes. HDFS metrics include HOME, NameNodes, DataNodes, and JournalNodes.

YARN metrics

YARN is the core component of the Hadoop system. YARN manages resources in Hadoop clusters, and schedules and monitors jobs in the clusters. YARN metrics include HOME, Queue, ResourceManager, NodeManager, TimeLineServer, and JobHistory.

Hive metrics

- HiveMetaStore

| Metric | Description |
|---|---|
| hive_memory_heap_max | The maximum available heap memory of the JVM. Unit: bytes. |
| hive_memory_heap_used | The heap memory used by the JVM. Unit: bytes. |
| hive_memory_non_heap_used | The amount of off-heap memory used by the JVM. Unit: bytes. |
| hive_active_calls_api_alter_table | The number of active alter table requests. |
| hive_active_calls_api_create_table | The number of active create table requests. |
| hive_active_calls_api_drop_table | The number of active drop table requests. |
| hive_api_alter_table | The average duration of alter table requests. Unit: milliseconds. |
| hive_api_alter_table_with_environment_context | The average duration of alter table with env context requests. Unit: milliseconds. |
| hive_api_create_table | The average duration of create table requests. Unit: milliseconds. |
| hive_api_create_table_with_environment_context | The average duration of create table with env context requests. Unit: milliseconds. |
| api_drop_table | The average duration of drop table requests. Unit: milliseconds. |
| hive_api_drop_table_with_environment_context | The average duration of drop table with env context requests. Unit: milliseconds. |
| hive_api_get_all_databases | The average duration of get all databases requests. Unit: milliseconds. |
| hive_api_get_all_functions | The average duration of get all functions requests. Unit: milliseconds. |
| hive_api_get_database | The average duration of get database requests. Unit: milliseconds. |
| hive_api_get_multi_table | The average duration of get multi table requests. Unit: milliseconds. |
| hive_api_get_tables_by_type | The average duration of get table requests. Unit: milliseconds. |
| hive_api_get_table_objects_by_name_req | The average duration of get table objects by name requests. Unit: milliseconds. |
| hive_api_get_table_req | The average duration of get table req requests. Unit: milliseconds. |
| hive_api_get_table_statistics_req | The average duration of get table statistics requests. Unit: milliseconds. |
| hive_api_get_tables | The average duration of get tables requests. Unit: milliseconds. |
| hive_api_get_tables_by_type | The average duration of get tables by type requests. Unit: milliseconds. |

- HiveServer2

| Metric | Description |
|---|---|
| hive_metrics_hs2_active_sessions | The number of active sessions. |
| hive_metrics_memory_total_init | The total initialized memory of the JVM. Unit: bytes. |
| hive_metrics_memory_total_committed | The total memory reserved by the JVM. Unit: bytes. |
| hive_metrics_memory_total_max | The maximum available memory of the JVM. Unit: bytes. |
| hive_metrics_memory_heap_committed | The heap memory reserved by the JVM. Unit: bytes. |
| hive_metrics_memory_heap_init | The heap memory initialized by the JVM. Unit: bytes. |
| hive_metrics_memory_non_heap_committed | The off-heap memory reserved by the JVM. Unit: bytes. |
| hive_metrics_memory_non_heap_init | The off-heap memory initialized by the JVM. Unit: bytes. |
| hive_metrics_memory_non_heap_max | The maximum available off-heap memory of the JVM. Unit: bytes. |
| hive_metrics_gc_PS_MarkSweep_count | The number of PS MarkSweep GCs in the JVM. |
| hive_metrics_gc_PS_MarkSweep_time | The time consumed for the PS MarkSweep GCs in the JVM. Unit: milliseconds. |
| hive_metrics_gc_PS_Scavenge_time | The time consumed for the PS Scavenge GCs in the JVM. Unit: milliseconds. |
| hive_metrics_threads_daemon_count | The number of JVM daemon threads. |
| hive_metrics_threads_count | The total number of JVM threads. |
| hive_metrics_threads_blocked_count | The number of blocked JVM threads. |
| hive_metrics_threads_deadlock_count | The number of deadlocked JVM threads. |
| hive_metrics_threads_new_count | The number of new JVM threads. |
| hive_metrics_threads_runnable_count | The number of runnable JVM threads. |
| hive_metrics_threads_terminated_count | The number of terminated JVM threads. |
| hive_metrics_threads_waiting_count | The number of waiting JVM threads. |
| hive_metrics_threads_timed_waiting_count | The number of timed_waiting JVM threads. |
| hive_metrics_memory_heap_max | The maximum available heap memory of the JVM. Unit: bytes. |
| hive_metrics_memory_heap_used | The heap memory used by the JVM. Unit: bytes. |
| hive_metrics_memory_non_heap_used | The amount of off-heap memory used by the JVM. Unit: bytes. |
| hive_metrics_hs2_open_sessions | The number of opened sessions. |
| hive_metrics_hive_mapred_tasks | The total number of submitted Hive on MR jobs. |
| hive_metrics_hive_tez_tasks | The total number of submitted Hive on Tez jobs. |
| hive_metrics_cumulative_connection_count | The cumulative number of connections. |
| hive_metrics_active_calls_api_runTasks | The number of current runtask requests. |
| hive_metrics_hs2_completed_sql_operation_FINISHED | The total number of completed SQL statements. |
| hive_metrics_hs2_sql_operation_active_user | The number of active users. |
| hive_metrics_open_connections | The number of opened connections. |
| hive_metrics_api_PostHook_com_aliyun_emr_meta_hive_hook_LineageLoggerHook | The average time to execute the LineageLoggerHook. Unit: milliseconds. |
| hive_metrics_api_hs2_sql_operation_PENDING | The average time that the SQL tasks are in the PENDING state. Unit: milliseconds. |
| hive_metrics_api_hs2_sql_operation_RUNNING | The average time that the SQL tasks are in the RUNNING state. Unit: milliseconds. |
| hive_metrics_hs2_submitted_queries | The average time taken to submit a query. Unit: milliseconds. |
| hive_metrics_hs2_executing_queries | The average time taken to execute a query. Unit: milliseconds. |
| hive_metrics_hs2_succeeded_queries | The number of successful queries after the service is started. |
| hive_metrics_hs2_failed_queries | The number of queries that fail after the service is started. |
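
Because hive_metrics_memory_heap_used and hive_metrics_memory_heap_max are both reported in bytes, dividing one by the other gives the fraction of the HiveServer2 heap in use. The following Python sketch builds a request URL for the standard Prometheus HTTP API instant-query path (/api/v1/query) with that expression. The base URL is a hypothetical placeholder, and `instant_query_url` is an illustrative helper. Use the HTTP API URL and any authentication that your own Prometheus instance requires.

```python
from urllib.parse import urlencode

# PromQL: fraction of the HiveServer2 JVM heap in use (both metrics are in bytes).
HEAP_USAGE_QUERY = "hive_metrics_memory_heap_used / hive_metrics_memory_heap_max"


def instant_query_url(base_url: str, promql: str) -> str:
    """Build a URL for the Prometheus HTTP API instant-query endpoint."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


# Hypothetical endpoint; replace it with the HTTP API URL of your Prometheus instance.
url = instant_query_url("http://prometheus.example.com:9090", HEAP_USAGE_QUERY)
print(url)
```

The same expression can be pasted into a Grafana panel or an alert rule. The URL form is only one way to run it.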

ZooKeeper metrics

| Metric | Description |

|---|---|

| zk_packets_received | The number of packets received by ZooKeeper. |

| zk_packets_sent | The number of packets sent by ZooKeeper. |

| zk_avg_latency | The average latency of ZooKeeper requests. Unit: milliseconds. |

| zk_min_latency | The minimum latency of ZooKeeper requests. Unit: milliseconds. |

| zk_max_latency | The maximum latency of ZooKeeper requests. Unit: milliseconds. |

| zk_watch_count | The number of ZooKeeper watches. |

| zk_znode_count | The number of ZooKeeper znodes. |

| zk_num_alive_connections | The number of ZooKeeper connections alive. |

| zk_outstanding_requests | The number of queued ZooKeeper requests. A larger value indicates that ZooKeeper is falling behind in processing requests. |

| zk_approximate_data_size | The approximate size of the ZooKeeper data. Unit: bytes. |

| zk_open_file_descriptor_count | The number of files opened by ZooKeeper. |

| zk_max_file_descriptor_count | The maximum number of files that can be opened in ZooKeeper. |

| zk_node_status | The status of a ZooKeeper node. |

| zk_synced_followers | The number of ZooKeeper followers that are synchronized with the leader. |
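
Two of the ZooKeeper metrics are most useful when compared against a limit: zk_outstanding_requests against a queue-length threshold, and zk_open_file_descriptor_count against zk_max_file_descriptor_count. The following Python sketch shows one way such checks could be expressed over a dictionary of sample values for a single node. The function name and both default thresholds (10 queued requests, 80% descriptor usage) are arbitrary assumptions for illustration, not recommended values.

```python
from typing import Dict, List


def zookeeper_warnings(samples: Dict[str, float],
                       max_outstanding: float = 10.0,
                       max_fd_ratio: float = 0.8) -> List[str]:
    """Return human-readable warnings for the samples of one ZooKeeper node."""
    warnings = []
    if samples.get("zk_outstanding_requests", 0.0) > max_outstanding:
        warnings.append("request queue is backing up")
    max_fds = samples.get("zk_max_file_descriptor_count", 0.0)
    if max_fds > 0:  # skip the ratio when the limit is missing or zero
        ratio = samples.get("zk_open_file_descriptor_count", 0.0) / max_fds
        if ratio > max_fd_ratio:
            warnings.append(f"file descriptor usage at {ratio:.0%}")
    return warnings


# Hypothetical sample values for one node.
node = {
    "zk_outstanding_requests": 25,
    "zk_open_file_descriptor_count": 900,
    "zk_max_file_descriptor_count": 1000,
}
print(zookeeper_warnings(node))
```

In practice the same comparisons would normally live in Prometheus alert rules. The function form only makes the logic explicit.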

Kafka metrics

ApsaraMQ for Kafka is a distributed, high-throughput, and scalable message queue service provided by Alibaba Cloud. ApsaraMQ for Kafka is used in big data scenarios such as log collection, monitoring data aggregation, streaming data processing, and online and offline analysis. It is important for the big data ecosystem.

Impala metrics

| Metric | Description |

|---|---|

| impala_impala_server_resultset_cache_total_bytes | The size of the result set cache. Unit: bytes. |

| impala_num_executing_queries | The number of queries that are being executed. |

| impala_num_waiting_queries | The number of waiting queries. |

| impala_impala_server_query_durations_ms_95th | The query duration at the 95th percentile. Unit: milliseconds. |

| impala_num_in_flight_queries | The number of in-flight queries in the cluster. |

| impala_impala_server_query_durations_ms_75th | The query duration at the 75th percentile. Unit: milliseconds. |

| impala_impala_thrift_server_CatalogService_svc_thread_wait_time_99_9th | The amount of time that the Catalog Service client waits for the service thread at the 99.9th percentile. Unit: milliseconds. |

| impala_impala_thrift_server_CatalogService_connection_setup_time_99_9th | The amount of time that the Catalog Service client spends waiting to establish a connection at the 99.9th percentile. Unit: milliseconds. |

| impala_impala_server_query_durations_ms_99_9th | The query duration at the 99.9th percentile. Unit: milliseconds. |

| impala_impala_server_ddl_durations_ms_99_9th | The duration of the Data Definition Language (DDL) operation at the 99.9th percentile. Unit: milliseconds. |

| impala_impala_server_query_durations_ms_90th | The query duration at the 90th percentile. Unit: milliseconds. |

| impala_impala_server_ddl_durations_ms_90th | The duration of the DDL operation at the 90th percentile. Unit: milliseconds. |

| impala_impala_server_query_durations_ms_50th | The query duration at the 50th percentile. Unit: milliseconds. |

| impala_impala_server_ddl_durations_ms_50th | The duration of the DDL operation at the 50th percentile. Unit: milliseconds. |

| impala_impala_server_ddl_durations_ms_95th | The duration of the DDL operation at the 95th percentile. Unit: milliseconds. |

| impala_impala_server_scan_ranges_num_missing_volume_id | The total number of scan ranges with missing volume IDs during the process lifecycle. |

| impala_impala_server_ddl_durations_ms_75th | The duration of the DDL operation at the 75th percentile. Unit: milliseconds. |

| impala_impala_server_num_queries_spilled | The number of queries in which an operator spilled data to disk. |

| impala_impala_server_scan_ranges_total | The total number of scan ranges that are read during the process lifecycle. |

| impala_impala_server_num_queries_expired | The number of queries that expire due to inactivity. |

| impala_impala_server_resultset_cache_total_num_rows | The number of cached records in the result set. |

| impala_impala_server_num_open_hiveserver2_sessions | The number of opened HiveServer2 sessions. |

| impala_impala_server_num_sessions_expired | The number of sessions that expire due to inactivity. |

| impala_impala_server_num_fragments_in_flight | The number of query fragment instances that are being executed. |

| impala_impala_server_num_queries_registered | The total number of queries registered on the Impala server instance, including queries that are in progress and waiting to be closed. |

| impala_impala_server_num_files_open_for_insert | The number of HDFS files opened for writing. |

| impala_impala_server_num_queries | The total number of queries processed during the process lifecycle. |

| impala_impala_server_hedged_read_ops | The total number of hedged reads attempted during the process lifecycle. |

| impala_impala_server_num_open_beeswax_sessions | The number of open Beeswax sessions. |

| impala_impala_server_backend_num_queries_executed | The total number of queries executed on the backend server during the process lifecycle. |

| impala_impala_server_num_fragments | The total number of query fragments processed during the process lifecycle. |

| impala_rpc_impala_ControlService_rpcs_queue_overflow | The total number of incoming RPCs rejected by the ControlService due to service queue overflow. |

| impala_impala_server_hedged_read_ops_win | The total number of times that a hedged read is faster than a regular read operation. |

| impala_mem_tracker_DataStreamService_current_usage_bytes | The number of bytes used by the Memtracker DataStreamService. |

| impala_impala_server_backend_num_queries_executing | The number of queries that are being executed on the backend server. |

| impala_cluster_membership_executor_groups_total_healthy | The total number of healthy executor groups. |

| impala_rpc_impala_DataStreamService_rpcs_queue_overflow | The total number of incoming remote procedure calls (RPCs) of the DataStreamService rejected due to service queue overflow. |

| impala_cluster_membership_backends_total | The total number of backends registered with the statestore. |

| impala_mem_tracker_DataStreamService_peak_usage_bytes | The peak number of bytes used by the Memtracker DataStreamService. |

| impala_total_senders_blocked_on_recvr_creation | The total number of senders that have been blocked while waiting for the receiving fragment to initialize. |

| impala_mem_tracker_ControlService_peak_usage_bytes | The peak number of bytes used by the Memtracker ControlService. |

| impala_simple_scheduler_local_assignments_total | The number of local jobs. |

| impala_mem_tracker_ControlService_current_usage_bytes | The number of bytes used by the Memtracker ControlService. |

| impala_memory_total_used | The memory used. Unit: bytes. |

| impala_cluster_membership_executor_groups_total | The total number of executor groups with at least one executor. |

| impala_memory_rss | The Resident Set Size (RSS), including TCMalloc, buffer pool, and JVM. Unit: bytes. |

| impala_total_senders_timedout_waiting_for_recvr_creation | The total number of senders that timed out while waiting for the receiving fragment to initialize. |

| impala_senders_blocked_on_recvr_creation | The number of senders that are waiting for the receiving fragment to initialize. |

| impala_simple_scheduler_assignments_total | The number of jobs. |

| impala_memory_mapped_bytes | The virtual memory size of the process. Unit: bytes. |

HUE metrics

| Metric | Description |

|---|---|

| hue_requests_response_time_avg | The average response duration of requests. |

| hue_requests_response_time_95_percentile | The response duration of requests at the 95th percentile. |

| hue_requests_response_time_std_dev | The standard deviation of the request response duration. |

| hue_requests_response_time_median | The response duration of requests at the 50th percentile. |

| hue_requests_response_time_75_percentile | The response duration of requests at the 75th percentile. |

| hue_requests_response_time_count | The number of request response durations. |

| hue_requests_response_time_5m_rate | The request response rate in the last 5 minutes. |

| hue_requests_response_time_min | The minimum request response duration. |

| hue_requests_response_time_sum | The total response durations of the requests. |

| hue_requests_response_time_max | The maximum request response duration. |

| hue_requests_response_time_mean_rate | The average request response rate. |

| hue_requests_response_time_99_percentile | The request response duration in the last hour at the 99th percentile. |

| hue_requests_response_time_15m_rate | The request response rate in the last 15 minutes. |

| hue_requests_response_time_999_percentile | The response duration of requests at the 99.9th percentile. |

| hue_requests_response_time_1m_rate | The request response rate in the last 1 minute. |

| hue_users_active_total | The total number of active users. |

| hue_users_active | The number of active users in the last hour. |

| hue_users | The total number of users. |

| hue_threads_total | The total number of threads. |

| hue_threads_daemon | The number of daemon threads. |

| hue_queries_number | The total number of queries. |

| hue_requests_exceptions | The number of abnormal requests. |

| hue_requests_active | The number of active requests. |

Kudu metrics

| Parameter | Metric | Description |

|---|---|---|

| op_apply_queue_length (99) | kudu_op_apply_queue_length_percentile_99 | The length of the operation queue at the 99th percentile. |

| op_apply_queue_length (75) | kudu_op_apply_queue_length_percentile_75 | The length of the operation queue at the 75th percentile. |

| op_apply_queue_length (mean) | kudu_op_apply_queue_length_mean | The average length of the operation queue. |

| rpc_incoming_queue_time (99) | kudu_rpc_incoming_queue_time_percentile_99 | The waiting duration of the RPC queue at the 99th percentile. Unit: μs. |

| rpc_incoming_queue_time (75) | kudu_rpc_incoming_queue_time_percentile_75 | The waiting duration of the RPC queue at the 75th percentile. Unit: μs. |

| rpc_incoming_queue_time (mean) | kudu_rpc_incoming_queue_time_mean | The average waiting duration of the RPC queue. Unit: μs. |

| reactor_load_percent (99) | kudu_reactor_load_percent_percentile_99 | The load of the Reactor thread at the 99th percentile. |

| reactor_load_percent (75) | kudu_reactor_load_percent_percentile_75 | The load of the Reactor thread at the 75th percentile. |

| reactor_load_percent (mean) | kudu_reactor_load_percent_mean | The average load of the Reactor thread. |

| op_apply_run_time (99) | kudu_op_apply_run_time_percentile_99 | The execution duration at the 99th percentile. Unit: μs. |

| op_apply_run_time (75) | kudu_op_apply_run_time_percentile_75 | The execution duration at the 75th percentile. Unit: μs. |

| op_apply_run_time (mean) | kudu_op_apply_run_time_mean | The average execution duration. Unit: μs. |

| op_prepare_run_time (99) | kudu_op_prepare_run_time_percentile_99 | The preparation duration at the 99th percentile. Unit: μs. |

| op_prepare_run_time (75) | kudu_op_prepare_run_time_percentile_75 | The preparation duration at the 75th percentile. Unit: μs. |

| op_prepare_run_time (mean) | kudu_op_prepare_run_time_mean | The average preparation duration. Unit: μs. |

| flush_mrs_duration (99) | kudu_flush_mrs_duration_percentile_99 | The MemRowSet flush time at the 99th percentile. Unit: milliseconds. |

| flush_mrs_duration (75) | kudu_flush_mrs_duration_percentile_75 | The MemRowSet flush time at the 75th percentile. Unit: milliseconds. |

| flush_mrs_duration (mean) | kudu_flush_mrs_duration_mean | The average MemRowSet flush time. Unit: milliseconds. |

| log_append_latency (99) | kudu_log_append_latency_percentile_99 | The append time of the logs at the 99th percentile. Unit: μs. |

| log_append_latency (75) | kudu_log_append_latency_percentile_75 | The append time of the logs at the 75th percentile. Unit: μs. |

| log_append_latency (mean) | kudu_log_append_latency_mean | The average append time of the logs. Unit: μs. |

| flush_dms_duration (99) | kudu_flush_dms_duration_percentile_99 | The DeltaMemStore flush time at the 99th percentile. Unit: milliseconds. |

| flush_dms_duration (75) | kudu_flush_dms_duration_percentile_75 | The DeltaMemStore flush time at the 75th percentile. Unit: milliseconds. |

| flush_dms_duration (mean) | kudu_flush_dms_duration_mean | The average DeltaMemStore flush time. Unit: milliseconds. |

| op_prepare_queue_length (99) | kudu_op_prepare_queue_length_percentile_99 | The length of the preparation queue at the 99th percentile. |

| op_prepare_queue_length (75) | kudu_op_prepare_queue_length_percentile_75 | The length of the preparation queue at the 75th percentile. |

| op_prepare_queue_length (mean) | kudu_op_prepare_queue_length_mean | The average length of the preparation queue. |

| log_gc_duration (99) | kudu_log_gc_duration_percentile_99 | The GC duration of the logs at the 99th percentile. Unit: milliseconds. |

| log_gc_duration (75) | kudu_log_gc_duration_percentile_75 | The GC duration of the logs at the 75th percentile. Unit: milliseconds. |

| log_gc_duration (mean) | kudu_log_gc_duration_mean | The average GC duration of the logs. Unit: milliseconds. |

| log_sync_latency (99) | kudu_log_sync_latency_percentile_99 | The Sync duration of the logs at the 99th percentile. Unit: μs. |

| log_sync_latency (75) | kudu_log_sync_latency_percentile_75 | The Sync duration of the logs at the 75th percentile. Unit: μs. |

| log_sync_latency (mean) | kudu_log_sync_latency_mean | The average Sync duration of the logs. Unit: μs. |

| prepare_queue_time (99) | kudu_op_prepare_queue_time_percentile_99 | The waiting duration of the preparation queue at the 99th percentile. Unit: μs. |

| prepare_queue_time (75) | kudu_op_prepare_queue_time_percentile_75 | The waiting duration of the preparation queue at the 75th percentile. Unit: μs. |

| prepare_queue_time (mean) | kudu_op_prepare_queue_time_mean | The average waiting duration of the preparation queue. Unit: μs. |

| rpc_connections_accepted | kudu_rpc_connections_accepted | The number of incoming RPC connections that are accepted. |

| block_cache_usage | kudu_block_cache_usage | The cache usage of the TServer Blocks. Unit: bytes. |

| active_scanners | kudu_active_scanners | The number of active Scanners. |

| data_dirs_full | kudu_data_dirs_full | The number of data directories in the Full state. |

| rpcs_queue_overflow | kudu_rpcs_queue_overflow | The number of times that the RPC queue overflows. |

| cluster_replica_skew | kudu_cluster_replica_skew | The difference between the largest and the smallest number of tablet replicas hosted on any single tablet server. |

| log_gc_running | kudu_log_gc_running | The number of log GC operations that are running. |

| data_dirs_failed | kudu_data_dirs_failed | The number of invalid data directories. |

| leader_memory_pressure_rejections | kudu_leader_memory_pressure_rejections | The number of requests rejected due to memory pressure. |

| transaction_memory_pressure_rejections | kudu_transaction_memory_pressure_rejections | The number of transactions rejected due to memory pressure. |
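
The Kudu duration metrics in the table use two different units: queue, run-time, and log latency metrics are in microseconds, whereas flush and log GC durations are in milliseconds. When these values are plotted or compared together, it can help to normalize them first. The following Python sketch does this based on the metric name prefix. The prefix list is derived from the table, and the function name `to_milliseconds` is an illustrative assumption.

```python
# Metric name prefixes that the table lists in microseconds.
MICROSECOND_METRICS = (
    "kudu_rpc_incoming_queue_time",
    "kudu_op_apply_run_time",
    "kudu_op_prepare_run_time",
    "kudu_op_prepare_queue_time",
    "kudu_log_append_latency",
    "kudu_log_sync_latency",
)


def to_milliseconds(metric: str, value: float) -> float:
    """Convert a Kudu duration sample to milliseconds.

    Intended only for the duration metrics in the table; any metric that is
    not in MICROSECOND_METRICS is returned unchanged.
    """
    if metric.startswith(MICROSECOND_METRICS):
        return value / 1000.0
    return value  # flush and log GC durations are already in milliseconds


print(to_milliseconds("kudu_log_sync_latency_percentile_99", 1500))
print(to_milliseconds("kudu_flush_mrs_duration_mean", 20.0))
```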

ClickHouse metrics

| Metric | Description |

|---|---|

| clickhouse_server_events_ReplicatedPartFailedFetches | The number of times that data cannot be obtained from replicas in the Replicated*MergeTree table. |

| clickhouse_server_events_ReplicatedPartChecksFailed | The number of times that data in the Replicated*MergeTree table fails to be checked. |

| clickhouse_server_events_ReplicatedDataLoss | The number of times that data in the Replicated*MergeTree table is not in a replica. |

| clickhouse_server_events_ReplicatedMetaDataChecksFailed | The number of times that the metadata of the Replicated*MergeTree table fails to be checked. |

| clickhouse_server_events_ReplicatedMetaDataLoss | The number of times that metadata is lost in the Replicated*MergeTree table. |

| clickhouse_server_events_DuplicatedInsertedBlocks | The number of duplicate blocks written to the Replicated*MergeTree table. |

| clickhouse_server_events_ZooKeeperUserExceptions | The number of times that errors related to the ClickHouse status occur in ZooKeeper. |

| clickhouse_server_events_ZooKeeperHardwareExceptions | The number of ZooKeeper network errors and other errors. |

| clickhouse_server_events_ZooKeeperOtherExceptions | The number of ZooKeeper non-hardware or status errors. |

| clickhouse_server_events_DistributedConnectionFailTry | The number of retry errors of the distributed connection. |

| clickhouse_server_events_DistributedConnectionMissingTable | The number of times that the distributed connection fails to find the table. |

| clickhouse_server_events_DistributedConnectionStaleReplica | The number of times that the replicas obtained by the distributed connection are not fresh. |

| clickhouse_server_events_DistributedConnectionFailAtAll | The number of times that the distributed connection fails after all retries. |

| clickhouse_server_events_SlowRead | The number of slow reads. |

| clickhouse_server_events_ReadBackoff | The number of threads reduced due to slow reads. |

| clickhouse_server_metrics_BackgroundPoolTask | The number of tasks in the background_pool. |

| clickhouse_server_metrics_BackgroundMovePoolTask | The number of tasks in the background_move_pool. |

| clickhouse_server_metrics_BackgroundSchedulePoolTask | The number of tasks in the schedule_pool. |

| clickhouse_server_metrics_BackgroundBufferFlushSchedulePoolTask | The number of tasks in the buffer_flush_schedule_pool. |

| clickhouse_server_metrics_BackgroundDistributedSchedulePoolTask | The number of tasks in the distributed_schedule_pool. |

| clickhouse_server_metrics_BackgroundTrivialSchedulePoolTask | The number of tasks in the trivial_schedule_pool. |

| clickhouse_server_metrics_TCPConnection | The number of TCP connections. |

| clickhouse_server_metrics_HTTPConnection | The number of HTTP connections. |

| clickhouse_server_metrics_InterserverConnection | The number of connections used to obtain data from other replicas. |

| clickhouse_server_metrics_MemoryTracking | The total memory used by the server. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundProcessingPool | The memory used for task execution in the background_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundMoveProcessingPool | The memory used for task execution in the background_move_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundBufferFlushSchedulePool | The memory used for task execution in the buffer_flush_schedule_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundSchedulePool | The memory used for task execution in the schedule_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundDistributedSchedulePool | The memory used for task execution in the distributed_schedule_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingInBackgroundTrivialSchedulePool | The memory used for task execution in the trivial_schedule_pool. Unit: bytes. |

| clickhouse_server_metrics_MemoryTrackingForMerges | The memory used by the background merge operation. Unit: bytes. |
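
The per-pool memory metrics above are all reported in bytes, so they can be compared directly. The following Python snippet is a minimal sketch of ranking the background pools by memory use. The `samples` mapping and the `rank_pools_by_memory` helper are hypothetical names used only for illustration. In practice the values would come from a query against your Prometheus instance, and the exposed metric names may carry additional labels.

```python
# Prefix shared by the per-pool memory metrics listed in the table above.
POOL_PREFIX = "clickhouse_server_metrics_MemoryTrackingIn"

def rank_pools_by_memory(samples):
    """Return (pool_name, MiB) pairs sorted from largest to smallest.

    `samples` is a hypothetical mapping of metric name to its latest value in bytes.
    """
    pools = [
        (name[len(POOL_PREFIX):], value / (1024 * 1024))
        for name, value in samples.items()
        if name.startswith(POOL_PREFIX)
    ]
    return sorted(pools, key=lambda item: item[1], reverse=True)

# Illustrative values only, not real measurements.
samples = {
    "clickhouse_server_metrics_MemoryTracking": 8 * 1024**3,
    "clickhouse_server_metrics_MemoryTrackingInBackgroundProcessingPool": 512 * 1024**2,
    "clickhouse_server_metrics_MemoryTrackingInBackgroundSchedulePool": 64 * 1024**2,
    "clickhouse_server_metrics_MemoryTrackingForMerges": 256 * 1024**2,
}

for pool, mib in rank_pools_by_memory(samples):
    print(f"{pool}: {mib:.1f} MiB")
```

Metrics that do not match the per-pool prefix, such as the server total and the merge figure, are skipped by the filter.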

Flink metrics

- Overview

| Parameter | Metric | Description |
| --- | --- | --- |
| Num Of RunningJobs | numRunningJobs | The number of jobs running in the JobManager (JM). |
| Job Uptime | job_uptime | The uptime of the job. Unit: milliseconds. Only single series or tables can be returned. |
| TaskSlots Available | taskSlotsAvailable | The number of available TaskSlots. |
| TaskSlots Total | taskSlotsTotal | The total number of TaskSlots. |
| Num of TM | numRegisteredTaskManagers | The number of registered TaskManagers (TMs). |
| sourceIdleTime | sourceIdleTime | The duration for which the source has not processed records. Unit: milliseconds. |
| currentFetchEventTimeLag | currentFetchEventTimeLag | The difference between the time when the data is generated and the time when the Flink source fetches the data. |
| currentEmitEventTimeLag | currentEmitEventTimeLag | The difference between the time when the data is generated and the time when the Flink source emits the data. |

- Checkpoint

| Parameter | Metric | Description |
| --- | --- | --- |
| Num of Checkpoints | totalNumberOfCheckpoints | The total number of checkpoints. |
| | numberOfFailedCheckpoints | The number of failed checkpoints. |
| | numberOfCompletedCheckpoints | The number of completed checkpoints. |
| | numberOfInProgressCheckpoints | The number of checkpoints in progress. |
| lastCheckpointDuration | lastCheckpointDuration | The time taken to complete the last checkpoint. Unit: milliseconds. |
| lastCheckpointSize | lastCheckpointSize | The size of the last checkpoint. Unit: bytes. |
| lastCheckpointRestoreTimestamp | lastCheckpointRestoreTimestamp | The timestamp at which the last checkpoint was restored on the coordinator. Unit: milliseconds. |

- Network

| Parameter | Metric | Description |
| --- | --- | --- |
| InPool Usage | inPoolUsage | The estimated usage of the input buffers. |
| OutPool Usage | outPoolUsage | The estimated usage of the output buffers. |
| OutputQueue Length | outputQueueLength | The number of queued output buffers. |
| InputQueue Length | inputQueueLength | The number of queued input buffers. |

- IO

| Parameter | Metric | Description |
| --- | --- | --- |
| numBytesIn PerSecond | numBytesInLocalPerSecond | The number of bytes read from the local server per second. |
| | numBytesInRemotePerSecond | The number of bytes read from the remote server per second. |
| | numBuffersInLocalPerSecond | The number of buffers read from the local server per second. |
| | numBuffersInRemotePerSecond | The number of buffers read from the remote server per second. |
| numBytesOut PerSecond | numBytesOutPerSecond | The number of bytes sent per second. |
| | numBuffersOutPerSecond | The number of outgoing buffers per second. |
| Task numRecords I/O PerSecond | numRecordsInPerSecond | The number of records received per second. |
| | numRecordsOutPerSecond | The number of records sent per second. |
| Task numRecords I/O | numRecordsIn | The number of records received. |
| | numRecordsOut | The number of records sent. |
| Operator CurrentSendTime | currentSendTime | The time consumed to send the last record. Unit: milliseconds. |

- Watermark

| Parameter | Metric | Description |
| --- | --- | --- |
| Task InputWatermark | currentInputWatermark | The time when the task receives the last watermark. Unit: milliseconds. |
| Operator In/Out Watermark | currentInputWatermark | The time when the operator receives the last watermark. Unit: milliseconds. |
| | currentOutputWatermark | The time when the operator sends the last watermark. Unit: milliseconds. |
| watermarkLag | watermarkLag | The latency of the watermark. Unit: milliseconds. |

- CPU

| Parameter | Metric | Description |
| --- | --- | --- |
| JM CPU Load | CPU_Load | The JM CPU utilization. |
| TM CPU Load | CPU_Load | The TM CPU utilization. |
| CPU Usage | CPU_Usage | The TM CPU utilization that is calculated based on the ProcessTree. |

- Memory

| Parameter | Metric | Description |
| --- | --- | --- |
| JM Heap Memory | Memory_Heap_Used | The used JM Heap Memory. Unit: bytes. |
| | Memory_Heap_Committed | The requested JM Heap Memory. Unit: bytes. |
| | Memory_Heap_Max | The maximum JM Heap Memory that can be used. Unit: bytes. |
| JM NonHeap Memory | Memory_NonHeap_Used | The used JM NonHeap Memory. Unit: bytes. |
| | Memory_NonHeap_Committed | The requested JM NonHeap Memory. Unit: bytes. |
| | Memory_NonHeap_Max | The maximum JM NonHeap Memory that can be used. Unit: bytes. |
| TM Heap Memory | Memory_Heap_Used | The used TM Heap Memory. Unit: bytes. |
| | Memory_Heap_Committed | The requested TM Heap Memory. Unit: bytes. |
| | Memory_Heap_Max | The maximum TM Heap Memory that can be used. Unit: bytes. |
| TM NonHeap Memory | Memory_NonHeap_Used | The used TM NonHeap Memory. Unit: bytes. |
| | Memory_NonHeap_Committed | The requested TM NonHeap Memory. Unit: bytes. |
| | Memory_NonHeap_Max | The maximum TM NonHeap Memory that can be used. Unit: bytes. |
| Memory RSS | Memory_RSS | The heap memory used by the TM. Unit: bytes. |

- JVM

| Parameter | Metric | Description |
| --- | --- | --- |
| JM Threads | Threads_Count | The total number of active JM threads. |
| TM Threads | Threads_Count | The total number of active TM threads. |
| JM GC Time | GarbageCollector_PS_Scavenge_Time | The duration of young-generation GCs in the JM. |
| | GarbageCollector_PS_MarkSweep_Time | The duration of old-generation mark-and-sweep GCs in the JM. |
| JM GC Count | GarbageCollector_PS_Scavenge_Count | The number of young-generation GCs in the JM. |
| | GarbageCollector_PS_MarkSweep_Count | The number of old-generation mark-and-sweep GCs in the JM. |
| TM GC Count | GarbageCollector_PS_Scavenge_Count | The number of young-generation GCs in the TM. |
| | GarbageCollector_PS_MarkSweep_Count | The number of old-generation mark-and-sweep GCs in the TM. |
| TM GC Time | GarbageCollector_PS_Scavenge_Time | The duration of young-generation GCs in the TM. |
| | GarbageCollector_PS_MarkSweep_Time | The duration of old-generation mark-and-sweep GCs in the TM. |
| TM ClassLoader | ClassLoader_ClassesLoaded | The total number of classes that the TM has loaded since the JVM was started. |
| | ClassLoader_ClassesUnloaded | The total number of classes that the TM has unloaded since the JVM was started. |
| JM ClassLoader | ClassLoader_ClassesLoaded | The total number of classes that the JM has loaded since the JVM was started. |
| | ClassLoader_ClassesUnloaded | The total number of classes that the JM has unloaded since the JVM was started. |
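
Several of the Flink metrics above are easier to act on as ratios than as raw values. The following Python snippet is a rough sketch of three such derived figures: TaskSlot utilization, the share of failed checkpoints, and heap usage for a single JM or TM process. The `flink_health` and `ratio` helpers and the plain dictionary input are assumptions made for illustration. The exact metric names and labels exposed in your Prometheus instance may differ.

```python
def ratio(numerator, denominator):
    """Return numerator / denominator, or None when the denominator is not positive."""
    if denominator <= 0:
        return None
    return numerator / denominator

def flink_health(m):
    """Derive ratios from a hypothetical mapping of metric name to latest value."""
    total_slots = m["taskSlotsTotal"]
    return {
        # Share of TaskSlots currently in use.
        "slot_utilization": ratio(total_slots - m["taskSlotsAvailable"], total_slots),
        # Failed checkpoints relative to all checkpoints.
        "checkpoint_failure_ratio": ratio(
            m["numberOfFailedCheckpoints"], m["totalNumberOfCheckpoints"]
        ),
        # Used heap relative to the maximum heap of one JM or TM process.
        "heap_usage": ratio(m["Memory_Heap_Used"], m["Memory_Heap_Max"]),
    }

# Illustrative values only, not real measurements.
sample = {
    "taskSlotsTotal": 8,
    "taskSlotsAvailable": 2,
    "numberOfFailedCheckpoints": 1,
    "totalNumberOfCheckpoints": 4,
    "Memory_Heap_Used": 512 * 1024**2,
    "Memory_Heap_Max": 2048 * 1024**2,
}

print(flink_health(sample))
```

Returning `None` for a non-positive denominator avoids a division error when no checkpoints have been triggered yet or when a maximum value is not reported.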