By default, the probe records conversation history during calls involving Large Language Models (LLMs), such as LLM and agent calls. The conversation history data format follows the OpenTelemetry specification. This topic describes how to configure the conversation history collection behavior for your LLM application.

To cover different recording scenarios, the ARMS probe provides the following three modes for collecting and recording conversation history:

Record conversation history in Span Attributes (default).

Stop collecting conversation history.

Record conversation history in local logs.

You can switch between these modes by setting environment variables or, for Java applications, System Properties, as described in the following sections.

Prerequisites

You have installed the Python probe or the Java probe.

The framework and probe versions must meet the following requirements:

Python applications

| Component/Framework | Supported component versions | Supported scenarios | Required probe version |
| --- | --- | --- | --- |
| OpenAI Python SDK | 1.X | ChatCompletion, Completion, Embedding | 2.0.0 or later |

Java applications

| Component/Framework | Supported component versions | Supported scenarios | Required probe version |
| --- | --- | --- | --- |
| OpenAI Java SDK | 1.1.0 or later | ChatCompletion, Completion, Embedding | 4.6.0 or later |
| Spring AI | 1.0.0 or later | OpenAI ChatModel, ChatClient (Default), ToolManager (Default) | 4.6.0 or later |
| Spring AI Alibaba | 1.0.0.3 or later | DashScope ChatModel | 4.6.0 or later |

Example

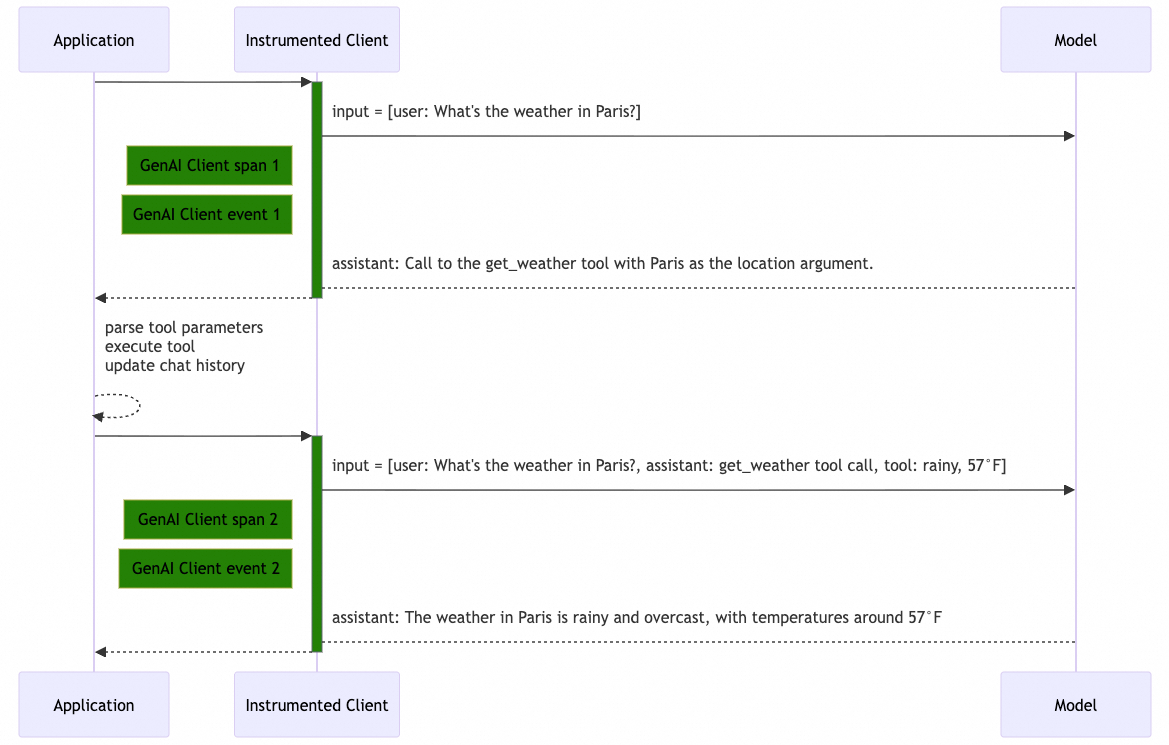

This topic provides an example of a React Agent call that includes a function. The LLM application invokes the large language model with a tool definition. The model then returns a tool_call request. After the application completes the tool call, it invokes the large language model again with the tool call result to generate the final output. The following time series chart shows the call procedure.
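The two LLM calls in this example can be sketched as the request messages an application would send through the OpenAI Chat Completions API. This is a minimal sketch, not the probe's code: the `get_weather` tool schema, the helper names `first_call_messages` and `second_call_messages`, and the tool result string are illustrative assumptions modeled on the example data in this topic.

```python
import json

# Illustrative (assumed) tool definition for the get_weather example.
GET_WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current temperature for a specific location.",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    },
}


def first_call_messages(question: str) -> list[dict]:
    """LLM call 1: only the user question; the tool list is passed separately."""
    return [{"role": "user", "content": question}]


def second_call_messages(question: str, tool_call_id: str, tool_name: str,
                         arguments: dict, tool_result: str) -> list[dict]:
    """LLM call 2: the question, the model's tool_call request, and the tool result."""
    return [
        {"role": "user", "content": question},
        {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": tool_call_id,
                "type": "function",
                # The Chat Completions API expects tool arguments as a JSON string.
                "function": {"name": tool_name, "arguments": json.dumps(arguments)},
            }],
        },
        {"role": "tool", "tool_call_id": tool_call_id, "content": tool_result},
    ]


if __name__ == "__main__":
    msgs = second_call_messages("Weather in Paris?", "call_VSPygqKTWdrhaFErNvMV18Yl",
                                "get_weather", {"location": "Paris"}, "rainy, 57°F")
    print([m["role"] for m in msgs])  # ['user', 'assistant', 'tool']
```

With the OpenAI Python SDK, each list would be passed as the `messages` argument of `client.chat.completions.create(...)`, together with `tools=[GET_WEATHER_TOOL]`. In the example in this topic, the probe records one GenAI client span for each of the two calls.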

Record conversation history in Span Attributes

Collection behavior and data format

This is the default behavior. The probe records input messages, output messages, system instructions, and tool definitions in JSON format within the Span Attributes.

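| Attribute name | Description | Schema | Content integrity |
| --- | --- | --- | --- |
| gen_ai.input.messages | Input messages | | Fully recorded |
| gen_ai.output.messages | Output messages | | Fully recorded |
| gen_ai.system_instructions | System instructions | | Fully recorded |
| gen_ai.tool.definitions | Tool definitions | | - |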
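Because the message attributes are stored as JSON strings, a consumer has to decode them before inspecting individual message parts. The following minimal sketch assumes the message structure shown in the examples in this topic (a list of messages, each with a `role` and a list of typed `parts`); the function name `summarize_messages` is hypothetical.

```python
import json


def summarize_messages(attribute_value: str) -> list[tuple[str, list[str]]]:
    """Decode a message attribute string into (role, [part types]) pairs."""
    messages = json.loads(attribute_value)
    return [(m["role"], [p["type"] for p in m.get("parts", [])]) for m in messages]


if __name__ == "__main__":
    # Value modeled on the gen_ai.output.messages example of the first LLM call.
    raw = ('[{"role":"assistant","parts":[{"type":"tool_call",'
           '"id":"call_VSPygqKTWdrhaFErNvMV18Yl","name":"get_weather",'
           '"arguments":{"location":"Paris"}}],"finish_reason":"tool_call"}]')
    print(summarize_messages(raw))  # [('assistant', ['tool_call'])]
```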

Configuration

You can configure this behavior using the following environment variables:

| Environment variable | Value |
| --- | --- |
| OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT | True |
| OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_CAPTURE_STRATEGY | span-attributes |

For Java applications, you can also add System Properties to the startup command. For example:

-Dotel.instrumentation.genai.capture-message-content=true \
-Dotel.instrumentation.genai.message-content.capture-strategy=span-attributes
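If the application is started from a script, the environment variables can be set on the child process only, instead of globally. A minimal sketch in Python; the helper name `genai_capture_env` is hypothetical, and how the probe itself is attached to the process is not covered here.

```python
def genai_capture_env(base: dict[str, str], strategy: str = "span-attributes") -> dict[str, str]:
    """Return a copy of `base` with conversation history capture set to `strategy`."""
    env = dict(base)  # copy so the caller's mapping is not modified
    env["OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT"] = "True"
    env["OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_CAPTURE_STRATEGY"] = strategy
    return env
```

For example, `subprocess.run([sys.executable, "app.py"], env=genai_capture_env(dict(os.environ)))` would start a hypothetical `app.py` with span-attribute recording enabled while leaving the parent environment unchanged.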

Example

GenAI Client Span 1

| Property | Value |
| --- | --- |
| Span name | |

GenAI Client Span 2

| Property | Value |
| --- | --- |
| Span name | |

Stop collecting conversation history

Collection behavior and data format

In this mode, the probe does not record details such as input messages, output messages, or system instructions. For tool definitions, only basic information is recorded in JSON format.

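| Attribute name | Description | Schema | Content integrity |
| --- | --- | --- | --- |
| gen_ai.input.messages | Input messages | | Not recorded |
| gen_ai.output.messages | Output messages | | Not recorded |
| gen_ai.system_instructions | System instructions | | Not recorded |
| gen_ai.tool.definitions | Tool definitions | | - |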

Configuration

You can configure this behavior using the following environment variable:

| Environment variable | Value |
| --- | --- |
| OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT | False |

For Java applications, you can also add a System Property to the startup command. For example:

-Dotel.instrumentation.genai.capture-message-content=false
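The Java System Property names in this topic map to the environment variable names by a simple rule: uppercase the property name and replace `.` and `-` with `_`. This is the same naming convention that OpenTelemetry Java uses for its configuration. The sketch below only illustrates the naming pattern for the properties listed in this topic; the function name is hypothetical and it is not a lookup that the probe performs.

```python
def property_to_env_var(prop: str) -> str:
    """Convert a Java System Property name to its environment variable form."""
    return prop.upper().replace(".", "_").replace("-", "_")


if __name__ == "__main__":
    for prop in (
        "otel.instrumentation.genai.capture-message-content",
        "otel.instrumentation.genai.message-content.capture-strategy",
        "otel.instrumentation.genai.message-content.max-length",
    ):
        print(f"-D{prop}  <->  {property_to_env_var(prop)}")
```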

Example

GenAI Client Span 1

| Property | Value |
| --- | --- |
| Span name | |

GenAI Client Span 2

| Property | Value |
| --- | --- |
| Span name | |

Record conversation history in local logs

Collection behavior and data format

In this mode, only basic information is kept in the Span Attributes. The probe writes input messages, output messages, system instructions, and tool definitions to a local log file, with each record written as a single line of JSON.

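| Attribute name | Description | Schema | Content integrity |
| --- | --- | --- | --- |
| gen_ai.input.messages | Input messages | | Fully recorded |
| gen_ai.output.messages | Output messages | | Fully recorded |
| gen_ai.system_instructions | System instructions | | Fully recorded |
| gen_ai.tool.definitions | Tool definitions | | - |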

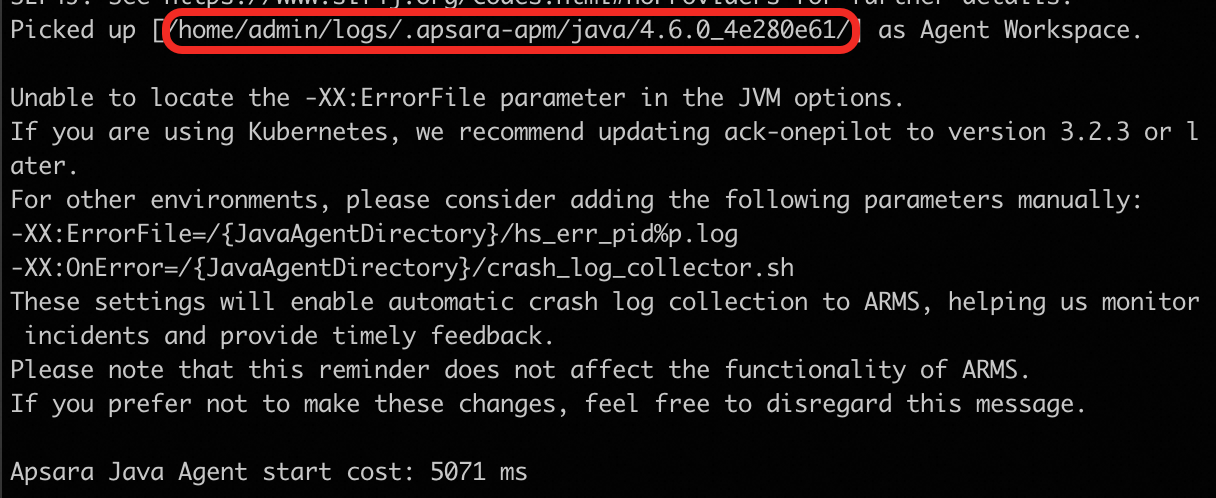

By default, when the probe starts, it searches for the first available folder in the following order of priority to use as the log path.

1. If a folder is specified by the APSARA_APM_AGENT_WORKSPACE_DIR environment variable, logs are recorded in the .apsara-apm/{language}/logs subfolder of that folder.

2. Probe log folder: /home/admin/.opt/.apsara-apm/{language}/logs

3. Home folder: ~/.apsara-apm/{language}/{agent_version}_{agent_commit_id}/logs

When the application starts, the probe prints a log message to stdout that indicates the log storage folder. To simplify folder management, you can specify a folder using the APSARA_APM_AGENT_WORKSPACE_DIR environment variable.
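The lookup order can be expressed as a short function, for example to locate the logs after the application has started. This is a sketch under stated assumptions: the function names are hypothetical, `language`, `agent_version`, and `commit_id` are placeholders for values the probe fills in, and "available" is interpreted here as "the folder already exists", which may differ from the probe's actual check. The folder printed to stdout at startup remains the authoritative answer.

```python
import os
from pathlib import Path
from typing import List, Mapping, Optional


def candidate_log_dirs(language: str, agent_version: str, commit_id: str,
                       env: Optional[Mapping[str, str]] = None,
                       home: Optional[Path] = None) -> List[Path]:
    """Return the log folders in the documented priority order (highest first)."""
    env = os.environ if env is None else env
    home = Path.home() if home is None else home
    candidates: List[Path] = []
    workspace = env.get("APSARA_APM_AGENT_WORKSPACE_DIR")
    if workspace:
        # 1. Subfolder of the folder named by APSARA_APM_AGENT_WORKSPACE_DIR.
        candidates.append(Path(workspace) / ".apsara-apm" / language / "logs")
    # 2. Probe log folder.
    candidates.append(Path("/home/admin/.opt/.apsara-apm") / language / "logs")
    # 3. Home folder; agent_version and commit_id are placeholders.
    candidates.append(home / ".apsara-apm" / language / f"{agent_version}_{commit_id}" / "logs")
    return candidates


def first_existing(candidates: List[Path]) -> Optional[Path]:
    """Assumption: treat 'available' as 'already exists as a directory'."""
    return next((p for p in candidates if p.is_dir()), None)
```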


The conversation history log file is named genai_messages_{ip}_{pid}.log. Each log file can grow to a maximum of 256 MB, after which it is rotated. Only the two most recent log files are kept in the file system, and older files are deleted.
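Because each record is a single line of JSON, the local log files can be read with a few lines of code, for example to find the record that belongs to a given span. A minimal sketch, assuming the record layout shown in the example events in this topic (`traceId`, `spanId`, and `attributes` fields); `iter_genai_records` and `find_by_span_id` are hypothetical names.

```python
import json
from pathlib import Path
from typing import Iterator, Optional


def iter_genai_records(log_dir: Path) -> Iterator[dict]:
    """Yield every JSON record from the genai_messages_*.log files in log_dir."""
    for path in sorted(log_dir.glob("genai_messages_*.log")):
        with path.open(encoding="utf-8") as f:
            for line in f:
                line = line.strip()
                if line:  # skip blank lines
                    yield json.loads(line)


def find_by_span_id(log_dir: Path, span_id: str) -> Optional[dict]:
    """Return the first record whose spanId matches, or None if there is none."""
    return next((r for r in iter_genai_records(log_dir) if r.get("spanId") == span_id), None)
```

The names of rotated files are not documented here, so the glob pattern may need adjusting to include them.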

Configuration

You can configure this behavior using the following environment variables:

| Environment variable | Value |
| --- | --- |
| OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT | True |
| OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_CAPTURE_STRATEGY | event |

For Java applications, you can also add System Properties to the startup command. For example:

-Dotel.instrumentation.genai.capture-message-content=true \
-Dotel.instrumentation.genai.message-content.capture-strategy=event
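The three modes in this topic are selected by the combination of the two environment variables. The sketch below summarizes that mapping. Treating an unset capture flag as enabled and an unset strategy as span-attributes follows from this topic's statement that recording in Span Attributes is the default; the case-insensitive comparison is an assumption, and the function name is hypothetical.

```python
from typing import Mapping

CAPTURE = "OTEL_INSTRUMENTATION_GENAI_CAPTURE_MESSAGE_CONTENT"
STRATEGY = "OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_CAPTURE_STRATEGY"


def conversation_history_mode(env: Mapping[str, str]) -> str:
    """Return 'disabled', or the capture strategy ('span-attributes' or 'event')."""
    # Assumption: an unset flag means the documented default (capture enabled).
    if env.get(CAPTURE, "true").strip().lower() != "true":
        return "disabled"
    # Assumption: an unset strategy means the default, span-attributes.
    return env.get(STRATEGY, "span-attributes").strip().lower()
```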

Example

GenAI Client Span 1

| Property | Value |
| --- | --- |
| Span name | |

GenAI Client Event 1

The spanId corresponds to GenAI Client Span 1.

    {
        "scope": {
            "name": "aliyun.instrumentation.openai",
            "version": "1.0.1"
        },
        "timeUnixNano": 1760080084146812928,
        "severity": "UNSPECIFIED",
        "attributes": {
            "event.name": "gen_ai.client.inference.operation.details",
            "gen_ai.provider.name": "openai",
            "gen_ai.operation.name": "chat",
            "gen_ai.request.model": "gpt-4",
            "gen_ai.request.max_tokens": 200,
            "gen_ai.request.top_p": 1.0,
            "gen_ai.response.id": "chatcmpl-9J3uIL87gldCFtiIbyaOvTeYBRA3l",
            "gen_ai.response.model": "gpt-4-0613",
            "gen_ai.usage.output_tokens": 17,
            "gen_ai.usage.input_tokens": 47,
            "gen_ai.response.finish_reasons": ["tool_calls"],
            "gen_ai.input.messages": "[{\"role\":\"user\",\"parts\":[{\"type\":\"text\",\"content\":\"Weather in Paris?\"}]}]",
            "gen_ai.output.messages": "[{\"role\":\"assistant\",\"parts\":[{\"type\":\"tool_call\",\"id\":\"call_VSPygqKTWdrhaFErNvMV18Yl\",\"name\":\"get_weather\",\"arguments\":{\"location\":\"Paris\"}}],\"finish_reason\":\"tool_call\"}]",
            "gen_ai.tool.definitions": "[{\"type\":\"function\",\"name\":\"get_weather\",\"description\":\"Get the current temperature for a specific location.\"}]"
        },
        "traceId": "0b46a347592ac487ed092ebe802c6818",
        "spanId": "b3c40af8cd1a522c"
    }

GenAI Client Span 2

| Property | Value |
| --- | --- |
| Span name | |

GenAI Client Event 2

The spanId corresponds to GenAI Client Span 2.

    {
        "scope": {
            "name": "aliyun.instrumentation.openai",
            "version": "1.0.1"
        },
        "timeUnixNano": 1760080084176812928,
        "severity": "UNSPECIFIED",
        "attributes": {
            "event.name": "gen_ai.client.inference.operation.details",
            "gen_ai.provider.name": "openai",
            "gen_ai.operation.name": "chat",
            "gen_ai.request.model": "gpt-4",
            "gen_ai.request.max_tokens": 200,
            "gen_ai.request.top_p": 1.0,
            "gen_ai.response.id": "chatcmpl-VSPygqKTWdrhaFErNvMV18Yl",
            "gen_ai.response.model": "gpt-4-0613",
            "gen_ai.usage.output_tokens": 52,
            "gen_ai.usage.input_tokens": 97,
            "gen_ai.response.finish_reasons": ["stop"],
            "gen_ai.input.messages": "[{\"role\":\"user\",\"parts\":[{\"type\":\"text\",\"content\":\"Weather in Paris?\"}]},{\"role\":\"assistant\",\"parts\":[{\"type\":\"tool_call\",\"id\":\"call_VSPygqKTWdrhaFErNvMV18Yl\",\"name\":\"get_weather\",\"arguments\":{\"location\": \"Paris\"}}]},{\"role\":\"tool\",\"parts\":[{\"type\":\"tool_call_response\",\"id\":\"call_VSPygqKTWdrhaFErNvMV18Yl\",\"response\":\"rainy, 57°F\"}]}]",
            "gen_ai.output.messages": "[{\"role\":\"assistant\",\"parts\":[{\"type\":\"text\",\"content\":\"The weather in Paris is currently rainy with a temperature of 57°F.\"}],\"finish_reason\":\"stop\"}]"
        },
        "traceId": "0b46a347592ac487ed092ebe802c6818",
        "spanId": "0a706a178bd746c5"
    }

Record conversation history in SLS

In the Record conversation history in local logs mode, you can use LoongCollector to send the local logs to Simple Log Service (SLS) for further processing and consumption.

Step 1: Install LoongCollector

If LoongCollector is already installed in your environment, skip this step.

| Environment type | Reference |
| --- | --- |
| Linux | |
| Windows | |
| Kubernetes | |

Step 2: Create a collection configuration

Log on to the Simple Log Service console, click the project, and expand the desired Logstore. Then, click the

icon next to Data Collection and click Integrate Now in the JSON - Text Log area.

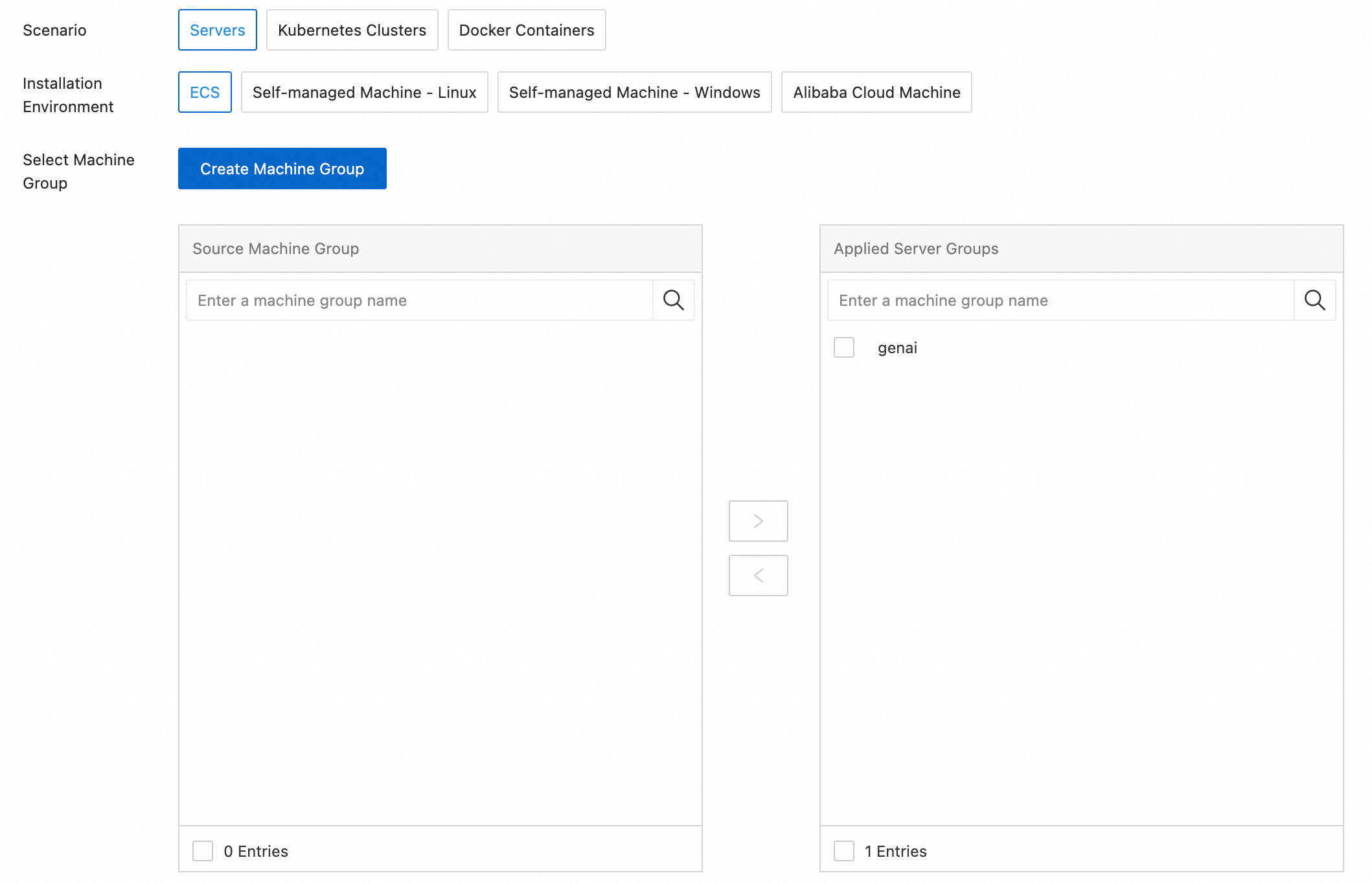

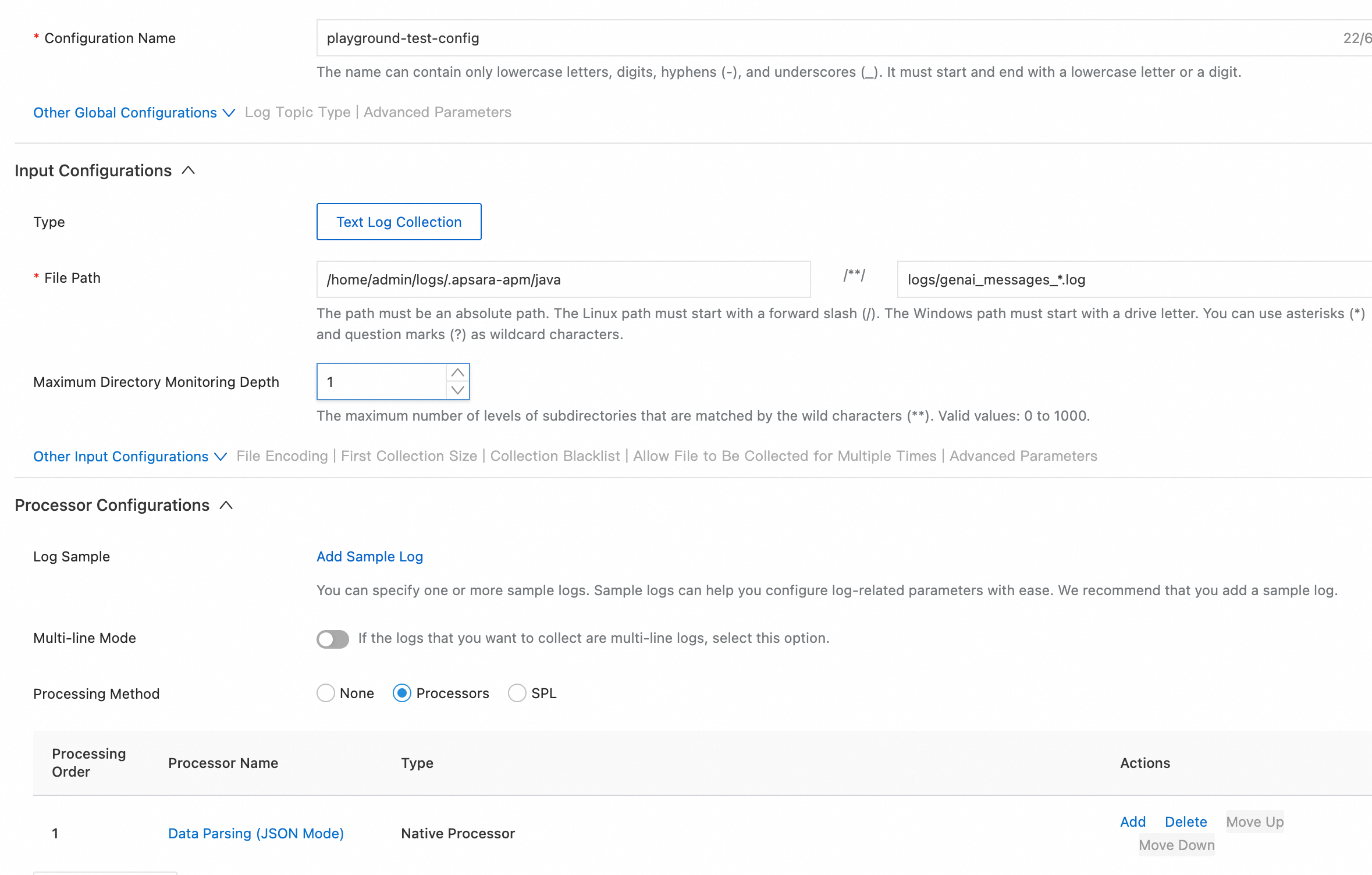

icon next to Data Collection and click Integrate Now in the JSON - Text Log area.Select an existing machine group or create a machine group for the host where the logs are located.

Create a collection configuration. Under Input Configurations, set File Path to the log folder path. To confirm the folder path, check the message that the probe prints to stdout when the application starts. Under Processing Configurations, select JSON parsing.

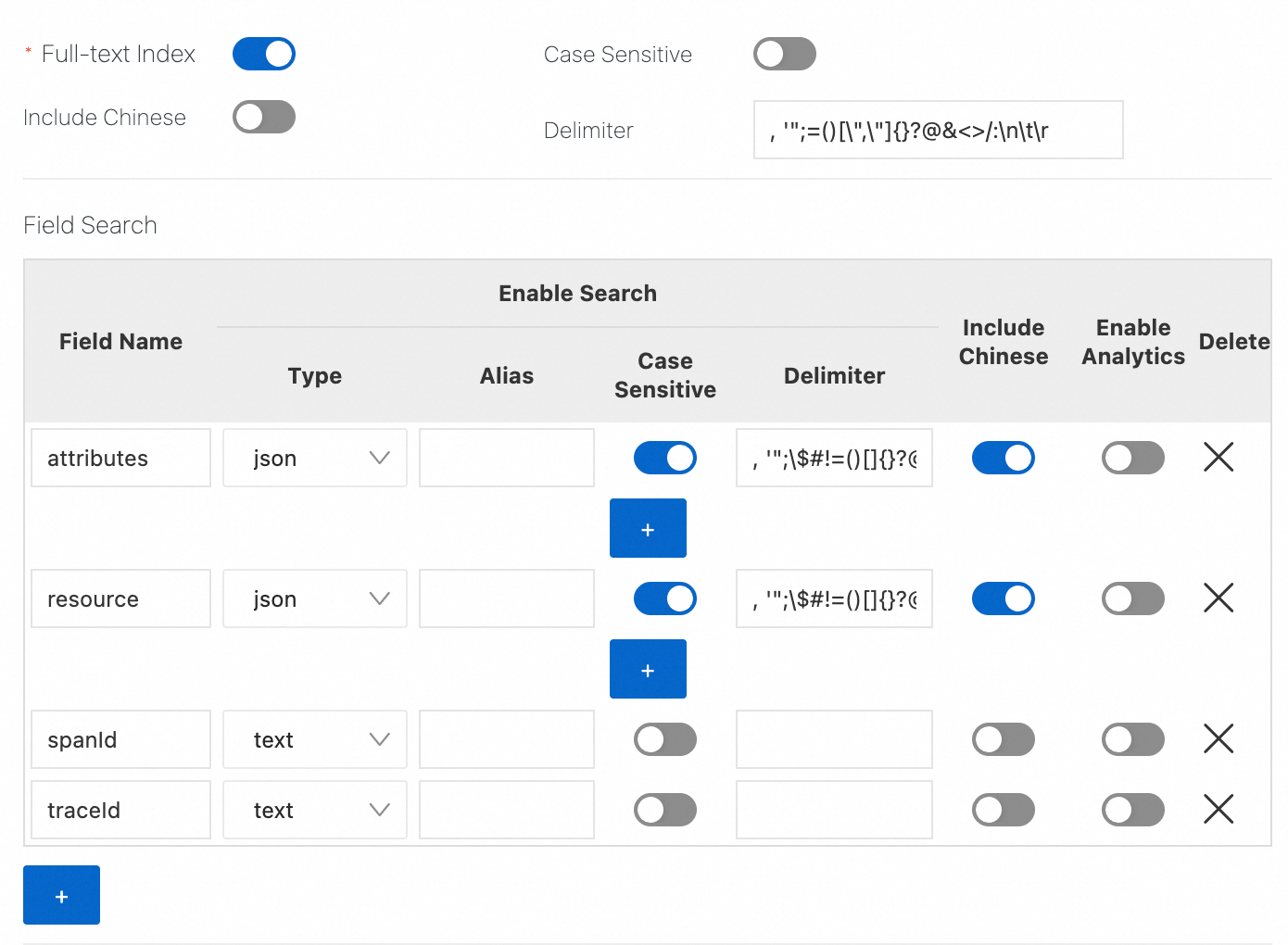

Add at least the following indexes to the logs to facilitate data retrieval and analysis.

For more information about log collection, see Collect text logs from servers.

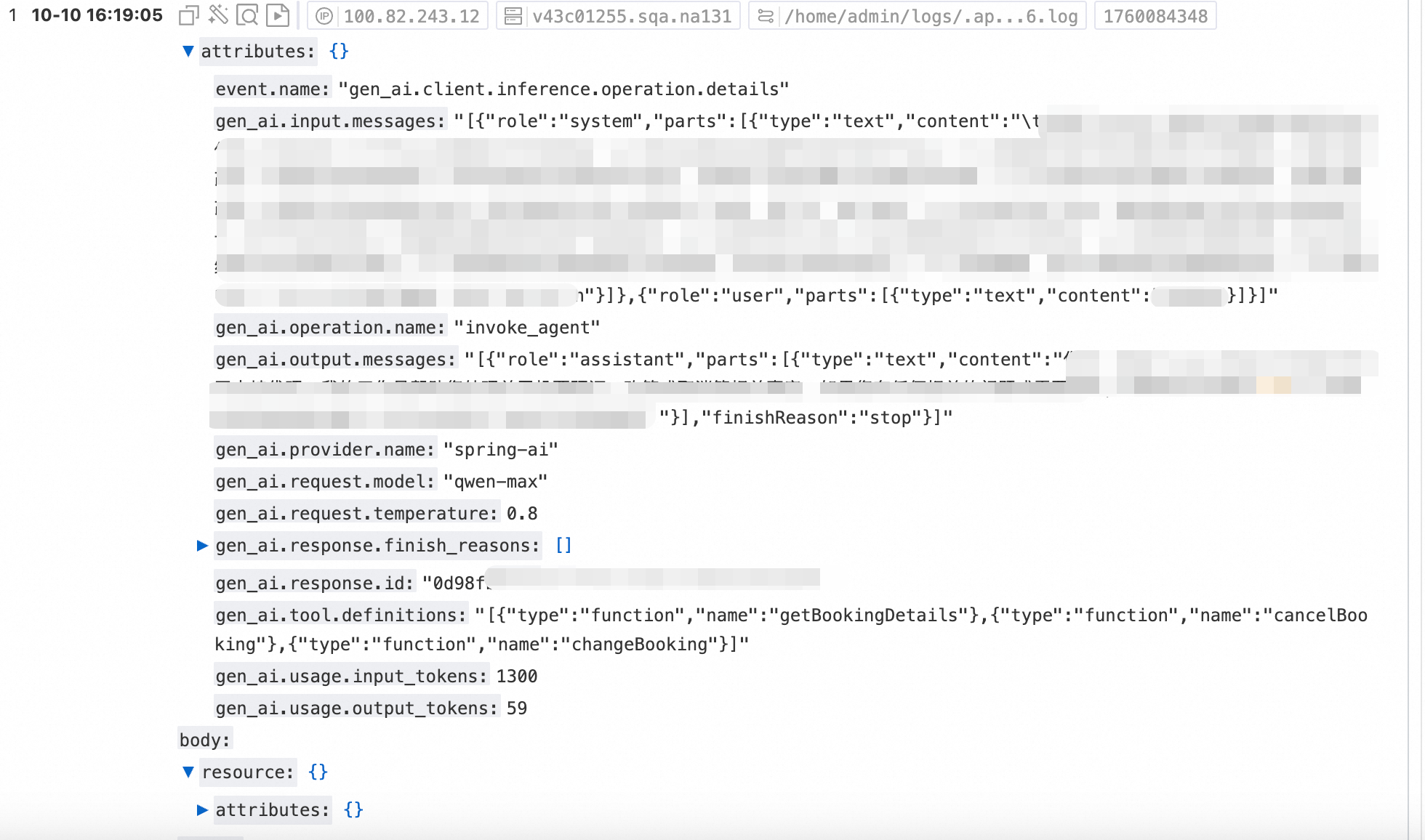

Step 3: View collected logs in SLS

After you complete the initial configuration, logs are collected in SLS within a few minutes.

Adjust the message length limit

To prevent overly long messages from consuming excessive resources, the probe truncates long message content by default. The default maximum length is 8,192 characters per message. A truncated message ends with the ...[truncated] marker. For example:

    [
        {
            "role": "assistant",
            "parts": [
                {
                    "type": "text",
                    "content": "The weather in Paris...[truncated]"
                }
            ],
            "finish_reason": "stop"
        }
    ]

Configuration

You can configure this behavior using the following environment variable:

| Environment variable | Value |
| --- | --- |
| OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_MAX_LENGTH | 8192 |

For Java applications, you can also add a System Property to the startup command. For example:

-Dotel.instrumentation.genai.message-content.max-length=8192
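The truncation rule can be approximated as follows, for example to estimate whether a given prompt will be cut. This is a sketch, not the probe's implementation: it assumes the limit counts the characters of a single content string and that the ...[truncated] marker is appended after the first max_length characters without counting toward the limit. The function names are hypothetical.

```python
import os
from typing import Mapping, Optional

TRUNCATION_MARKER = "...[truncated]"


def max_length_from_env(env: Optional[Mapping[str, str]] = None) -> int:
    """Read the configured limit, falling back to the documented default of 8192."""
    env = os.environ if env is None else env
    return int(env.get("OTEL_INSTRUMENTATION_GENAI_MESSAGE_CONTENT_MAX_LENGTH", "8192"))


def truncate_content(content: str, max_length: int = 8192) -> str:
    """Keep at most max_length characters and mark the content as truncated."""
    if len(content) <= max_length:
        return content
    return content[:max_length] + TRUNCATION_MARKER
```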

Message bodies that can be truncated

| Conversation history type | Truncatable field |
| --- | --- |
| Input messages | TextPart.content |
| Output messages | TextPart.content |
| System instructions | TextPart.content |
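When analyzing collected data, truncated text parts can be detected by their marker. A minimal sketch, assuming the message structure shown in this topic (messages with `parts`, where text parts have type `text` and carry `content`); `find_truncated_parts` is a hypothetical name.

```python
import json

TRUNCATION_MARKER = "...[truncated]"


def find_truncated_parts(messages_json: str) -> list[tuple[int, int]]:
    """Return (message index, part index) pairs for text parts ending with the marker."""
    hits = []
    for i, message in enumerate(json.loads(messages_json)):
        for j, part in enumerate(message.get("parts", [])):
            content = str(part.get("content", ""))
            if part.get("type") == "text" and content.endswith(TRUNCATION_MARKER):
                hits.append((i, j))
    return hits
```

A text that legitimately ends with the same marker string would be reported as well, so this check is a heuristic.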