This topic describes the typical use cases of ApsaraMQ for Kafka: website activity tracking, log aggregation, extract, transform, and load (ETL), and data transfer hub.

Website activity tracking

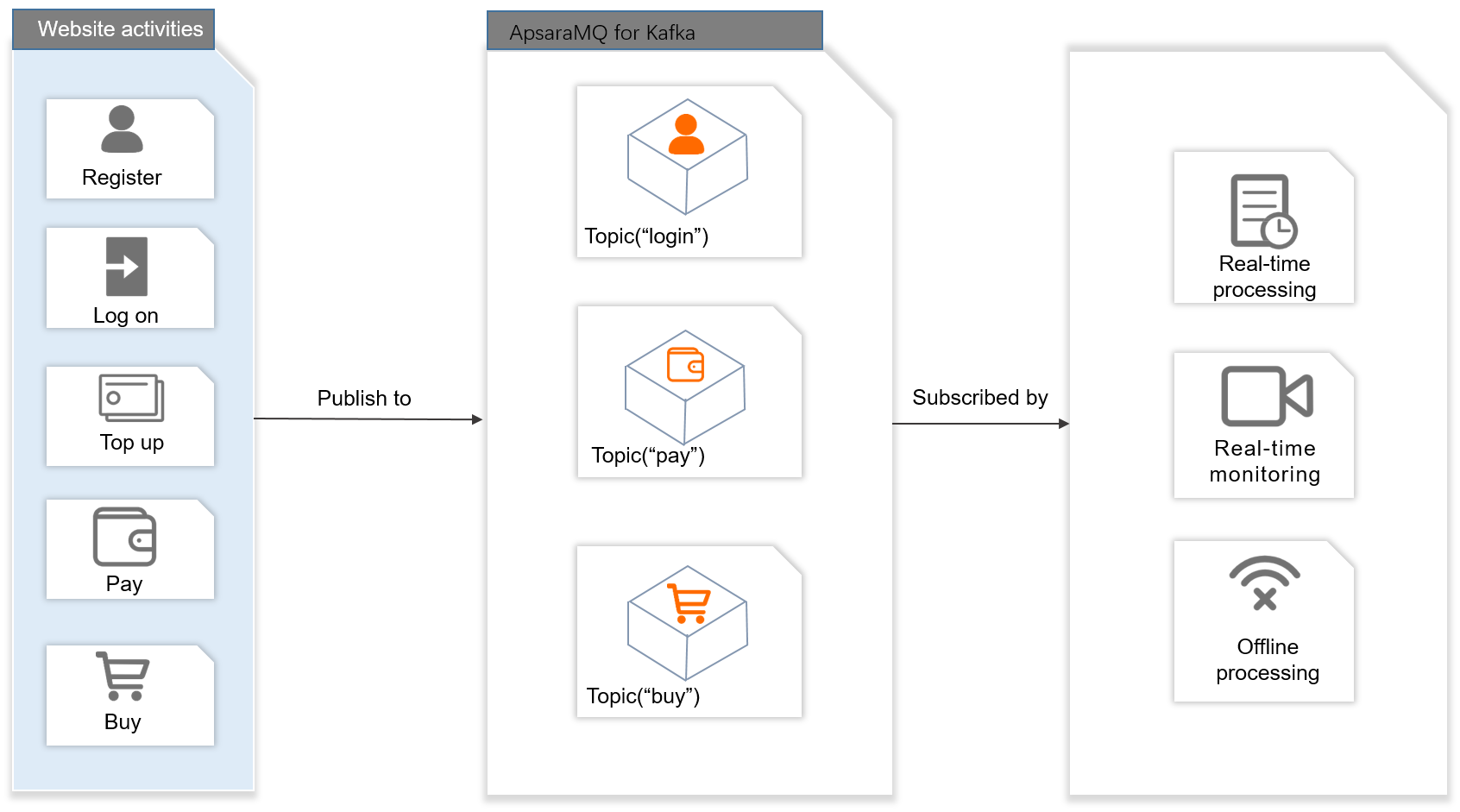

Successful website operations require analysis of user actions on the website, such as sign-up, logon, top-up, payment, and purchase. You can use the publish-subscribe model of ApsaraMQ for Kafka to collect user action data in real time and publish messages to different topics based on business data types. The message streams produced by delivering subscribed messages in real time can then be used for real-time processing or real-time monitoring, or loaded into offline data warehouse systems such as Hadoop and MaxCompute for offline processing.
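The following Python sketch illustrates the routing step described above: each user action is published to a topic chosen by its business data type, and subscribers receive each message as soon as it is published. It is an in-memory simulation, not the ApsaraMQ for Kafka client API. The `InMemoryBroker` class, the `track` helper, and all topic names are hypothetical.

```python
# In-memory sketch of routing user actions to topics by business data type.
# NOT the ApsaraMQ for Kafka client API: InMemoryBroker, track() and all
# topic names are hypothetical and exist only to illustrate the model.
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class UserAction:
    user_id: str
    action: str  # for example "signup", "logon", "topup", "payment", "purchase"
    payload: Dict[str, object] = field(default_factory=dict)


# Hypothetical mapping from business data type to topic name.
TOPIC_BY_ACTION = {
    "signup": "user-account-events",
    "logon": "user-account-events",
    "topup": "user-payment-events",
    "payment": "user-payment-events",
    "purchase": "user-order-events",
}
DEFAULT_TOPIC = "user-other-events"  # hypothetical catch-all topic


class InMemoryBroker:
    """Keeps published messages per topic and pushes them to subscribers."""

    def __init__(self) -> None:
        self.topics: Dict[str, List[UserAction]] = defaultdict(list)
        self.subscribers: Dict[str, List[Callable[[UserAction], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[UserAction], None]) -> None:
        self.subscribers[topic].append(callback)

    def publish(self, topic: str, message: UserAction) -> None:
        # Retain the message (e.g. for a later offline load) ...
        self.topics[topic].append(message)
        # ... and deliver it immediately to every subscriber (real-time path).
        for callback in self.subscribers[topic]:
            callback(message)


def track(broker: InMemoryBroker, action: UserAction) -> str:
    """Publish a user action to the topic for its type and return that topic."""
    topic = TOPIC_BY_ACTION.get(action.action, DEFAULT_TOPIC)
    broker.publish(topic, action)
    return topic


if __name__ == "__main__":
    broker = InMemoryBroker()
    # A hypothetical real-time monitor that only cares about payments.
    broker.subscribe("user-payment-events", lambda a: print("payment by", a.user_id))
    track(broker, UserAction("u1", "logon"))
    track(broker, UserAction("u1", "payment", {"amount": 30}))
```

In a real deployment, `publish` would be replaced by a producer send call and each subscriber by a consumer, but choosing the topic from the business data type works the same way.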

ApsaraMQ for Kafka provides the following benefits when it is used for website activity tracking:

High throughput: ApsaraMQ for Kafka provides the high throughput needed to handle the large volume of user action data that a website generates.

Auto scaling: Promotions on a website can cause a sharp increase in user action data. During promotions, ApsaraMQ for Kafka brokers can be scaled out based on your requirements.

Big data analysis: ApsaraMQ for Kafka can connect both to real-time ETL engines such as Storm and Spark and to offline data warehouse systems such as Hadoop.

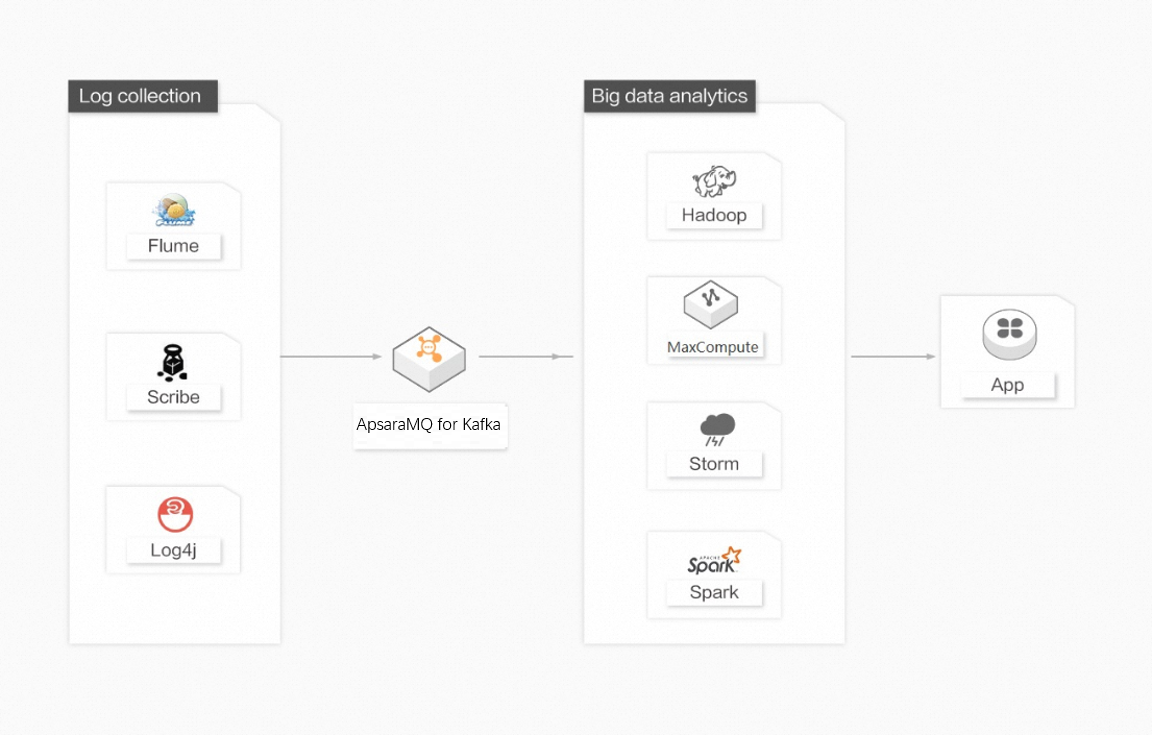

Log aggregation

Many platforms, such as Taobao and Tmall, generate a large number of logs every day. In most cases, these logs are streaming data, such as page views and queries. Compared with log-centric systems such as Scribe and Flume, ApsaraMQ for Kafka offers higher performance, stronger data persistence, and lower end-to-end latency, which makes it an ideal log collection center. ApsaraMQ for Kafka abstracts away file details: logs from multiple hosts or applications are treated as streams of log or event messages and sent asynchronously to ApsaraMQ for Kafka clusters, which greatly reduces response time. ApsaraMQ for Kafka clients can send and compress messages in batches, which keeps the performance overhead on producers low. On the consumer side, offline data warehouse systems such as Hadoop and MaxCompute and real-time online analysis systems such as Storm and Spark can perform statistical analysis on the logs.
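Batch compression pays off because log lines from the same source are highly repetitive. The sketch below, which uses only Python's standard `zlib` module, compares compressing log lines one at a time with compressing them as a single batch. The log format and the `make_log_lines` helper are hypothetical. In the open source Kafka producer client, batching and compression are governed by settings such as `batch.size`, `linger.ms`, and `compression.type`; this sketch does not use a Kafka client and only illustrates the size effect.

```python
# Illustrative sketch: compressing messages as one batch vs. one at a time.
# Uses only zlib; no Kafka client is involved. The log format is hypothetical.
import zlib
from typing import List


def compress_individually(messages: List[bytes]) -> int:
    """Total bytes if every message were compressed and sent on its own."""
    return sum(len(zlib.compress(m)) for m in messages)


def compress_as_batch(messages: List[bytes]) -> int:
    """Total bytes if the messages were joined into one batch and compressed once."""
    return len(zlib.compress(b"\n".join(messages)))


def make_log_lines(n: int) -> List[bytes]:
    # Hypothetical page-view log lines. Repeated structure across lines is
    # exactly what batch compression can exploit and per-message compression cannot.
    return [
        f"2024-01-01T00:00:{i % 60:02d}Z INFO page_view user={i % 50} path=/item/{i % 20}".encode()
        for i in range(n)
    ]


if __name__ == "__main__":
    lines = make_log_lines(500)
    print("raw bytes:            ", sum(len(line) for line in lines))
    print("compressed one by one:", compress_individually(lines))
    print("compressed as a batch:", compress_as_batch(lines))
```

The batched size comes out smaller than the sum of the individually compressed sizes; the exact numbers depend on the data and the zlib version.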

ApsaraMQ for Kafka provides the following benefits when it is used for log aggregation:

System decoupling: ApsaraMQ for Kafka serves as a bridge between application systems and analysis systems and decouples the two types of systems.

High scalability: When the data volume increases, you can add nodes to quickly scale out ApsaraMQ for Kafka.

Online and offline analysis systems: ApsaraMQ for Kafka supports real-time online analysis systems and offline analysis systems such as Hadoop.

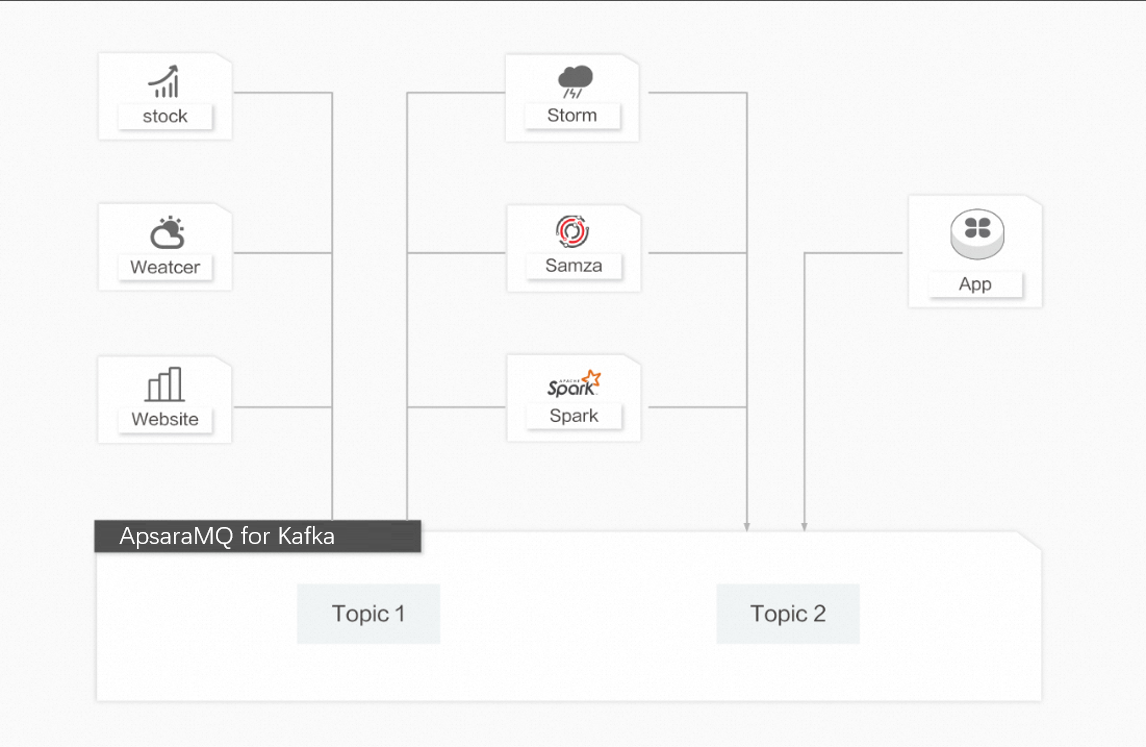

ETL

In fields such as stock market trend analysis, meteorological data monitoring and management, and website user action analysis, so much data is generated in real time that collecting and storing all of it in databases before processing is difficult. As a result, traditional ETL architectures cannot meet user requirements. Unlike traditional architectures, ApsaraMQ for Kafka combined with ETL engines such as Storm, Samza, and Spark solves this problem efficiently. In this model, data is captured and processed in real time as it flows, computed and analyzed based on business requirements, and the results are then saved or distributed to the relevant components.
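As a minimal sketch of this model, the Python pipeline below processes each record as it arrives: it extracts fields from raw text, computes a per-symbol moving average, and passes each result to a sink. The `SYMBOL,PRICE` record format, the window size, and the function names are assumptions for illustration. In practice, the input would come from an ApsaraMQ for Kafka consumer and the computation would typically run in an engine such as Storm, Samza, or Spark.

```python
# Minimal sketch of streaming ETL: each record is handled as it flows through,
# without first being stored in a database. The record format, window size and
# function names are hypothetical.
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Iterable, Iterator, Tuple


def extract(raw_records: Iterable[str]) -> Iterator[Tuple[str, float]]:
    """Parse 'SYMBOL,PRICE' lines, skipping malformed records."""
    for line in raw_records:
        parts = line.strip().split(",")
        if len(parts) != 2:
            continue
        symbol, price = parts
        try:
            yield symbol.upper(), float(price)
        except ValueError:
            continue


def transform(ticks: Iterable[Tuple[str, float]], window: int = 3) -> Iterator[Tuple[str, float]]:
    """Emit the moving average of the last `window` prices for each symbol."""
    history: Dict[str, Deque[float]] = defaultdict(lambda: deque(maxlen=window))
    for symbol, price in ticks:
        prices = history[symbol]
        prices.append(price)  # deque drops the oldest price once full
        yield symbol, sum(prices) / len(prices)


def load(results: Iterable[Tuple[str, float]], sink: Callable[[Tuple[str, float]], None]) -> None:
    """Save or distribute each result as soon as it is computed."""
    for result in results:
        sink(result)


def run_pipeline(raw_records: Iterable[str], sink: Callable[[Tuple[str, float]], None], window: int = 3) -> None:
    # Generators chain lazily, so each record moves through all stages before the next is read.
    load(transform(extract(raw_records), window), sink)


if __name__ == "__main__":
    run_pipeline(["abc,10", "abc,20", "bad record", "xyz,5", "abc,30"], print)
```

Because the stages are generators, no stage waits for the full dataset, which mirrors the capture-and-process-in-flight approach described above.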

ApsaraMQ for Kafka provides the following benefits when it is used for ETL:

Data flow: ApsaraMQ for Kafka captures and processes data in real time as it flows and supports analysis based on business requirements.

High scalability: ApsaraMQ for Kafka is highly scalable and can handle the huge volume of data generated in real time.

Connection to ETL engines: ApsaraMQ for Kafka can connect to open source data processing engines such as Storm, Samza, and Spark, and Alibaba Cloud services such as E-MapReduce, Blink, and Realtime Compute.

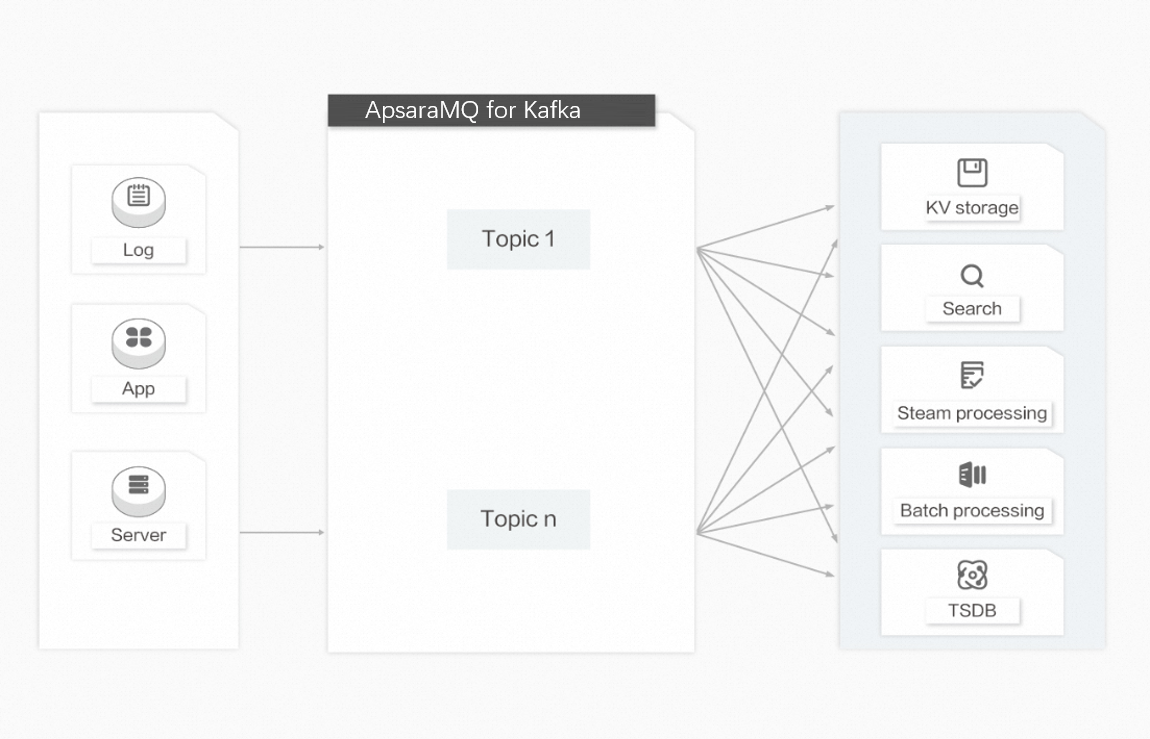

Data transfer hub

Over the past 10 years, dedicated systems have emerged to provide specific services such as key-value storage (HBase), search (Elasticsearch), stream processing (Storm, Spark, and Samza), and time series databases (OpenTSDB). Each dedicated system is designed for a single goal, and this simplicity makes it easier and more cost-efficient to build distributed systems on commodity hardware. In most cases, the same dataset needs to be fed into multiple dedicated systems. For example, application logs may be used for offline analysis while individual log entries also need to be searchable. However, building a separate pipeline to collect each type of data and import it into each corresponding dedicated system is impractical. Instead, you can use ApsaraMQ for Kafka as a data transfer hub that imports the same data records into different dedicated systems.

ApsaraMQ for Kafka provides the following benefits when it serves as a data transfer hub:

High-capacity storage: ApsaraMQ for Kafka can store a large amount of data on commodity hardware and helps build horizontally scalable distributed systems.

One-to-many consumption model: The publish-subscribe model allows the same dataset to be consumed multiple times.

Real-time and batch processing: ApsaraMQ for Kafka persists data locally and uses the page cache, so it can deliver messages to consumers for real-time processing and batch processing at the same time without performance loss.
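The one-to-many consumption model can be pictured as an append-only log in which each consumer group keeps its own read position (offset). The in-memory Python model below is a simplification that commits a group's offset as soon as records are returned; it is not the Kafka consumer API. The `TopicLog` class and the group names are hypothetical.

```python
# In-memory model of one-to-many consumption: one append-only log and an
# independent offset per consumer group. Simplified: offsets are committed as
# soon as records are returned. NOT the Kafka consumer API; TopicLog and the
# group names used below are hypothetical.
from typing import Dict, List


class TopicLog:
    def __init__(self) -> None:
        self.records: List[str] = []         # reads never remove records
        self.committed: Dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, record: str) -> int:
        """Append a record and return its offset."""
        self.records.append(record)
        return len(self.records) - 1

    def poll(self, group: str, max_records: int = 10) -> List[str]:
        """Return up to max_records unread records for this group only."""
        start = self.committed.get(group, 0)
        batch = self.records[start:start + max_records]
        # Advance only this group's offset; other groups keep their own positions.
        self.committed[group] = start + len(batch)
        return batch


if __name__ == "__main__":
    log = TopicLog()
    for i in range(5):
        log.append(f"app-log-{i}")
    # A search indexer reads every record as soon as it arrives.
    print(log.poll("search-indexer"))
    # An offline analytics job reads the same records later, in small batches.
    print(log.poll("offline-analytics", max_records=2))
    print(log.poll("offline-analytics", max_records=2))
```

Because reads do not remove records, connecting another dedicated system only requires a new consumer group rather than a new collection pipeline.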