Model APIs are designed for teams developing artificial intelligence (AI) applications, providing flexible and intelligent AI gateway configuration and debugging. You can preset various plugins, such as AI proxy, AI observability, consumer authorization, and Content Moderation. This topic describes how to create, edit, debug, and delete Model APIs.

Create a Model API

Log on to the AI Gateway console and choose Instance. In the top menu bar, select a region, then click the target instance ID.

In the navigation pane on the left, choose Model API, then click Create Model API.

Select a scenario and click the corresponding Create button.

The scenario you select determines the available protocol options and the default routes that the system automatically creates. The following scenarios are supported:

Text Generation: Supports OpenAI-compatible and Anthropic protocols.

Image Generation

Video Generation

Speech Synthesis

Embedding

Rerank

Others

Configure the basic information.

In the Create Model API form that opens, configure the settings as follows:

Protocol: Each protocol provides a set of built-in default routes for the selected scenario. This helps you quickly generate compatible APIs for common services such as OpenAI, DashScope, and vLLM.

API Name: A custom name for the API. The name must be globally unique within your account and can be up to 64 characters long. The name can contain letters, numbers, underscores (_), and hyphens (-).
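The naming rule above can be checked locally before you submit the form. The following is a minimal sketch, assuming the rule means 1 to 64 ASCII letters, digits, underscores, or hyphens. The function name is hypothetical, and the uniqueness requirement can only be verified by the console itself.

```python
import re

# Assumed interpretation of the documented rule: 1-64 characters drawn from
# ASCII letters, digits, underscores (_), and hyphens (-).
_API_NAME_RE = re.compile(r"[A-Za-z0-9_-]{1,64}")


def is_valid_api_name(name: str) -> bool:
    """Return True if the name satisfies the length and character rules."""
    return _API_NAME_RE.fullmatch(name) is not None
```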

Domain Name: The domain name used to access the API. You can select more than one. The combination of a domain name and a base path must be unique.

If you do not have a domain name, click the Add Domain Name button on the right to create one.

Base Path: The base request path of the API. The default value is /. You can also enable Remove during backend forwarding.

Note: If you enable Remove during backend forwarding, the system automatically removes the base path from the request URI before forwarding the request to the backend service. For example:

The base path is set to /api.

The original request path is /api/users.

The path forwarded to the backend service is /users.
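The rewrite in this example can be sketched as a small function. This is an illustration only: the function name is hypothetical, and matching the base path on whole path segments (so that /api does not match /apiv2) is an assumption, not documented gateway behavior.

```python
def strip_base_path(request_path: str, base_path: str) -> str:
    """Remove base_path from the front of request_path, if it is present."""
    base = base_path.rstrip("/")
    if not base:
        # The base path is "/", so there is nothing to remove.
        return request_path
    if request_path == base:
        return "/"
    if request_path.startswith(base + "/"):
        return request_path[len(base):]
    # The request path does not start with the base path; leave it unchanged.
    return request_path
```

With a base path of /api, strip_base_path("/api/users", "/api") returns "/users", which matches the example above.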

AI Request Monitoring: If you enable this feature, you can view metrics, logs, and traces. Logs and traces depend on the SLS log delivery service. You can select Record request content and Log response to record the requests sent to the large model and the content that it returns.

Important: If you enable this feature, the system records all AI request content, including the request body, to the access log. Ensure that you properly configure SLS and implement data security protection measures.

Large Model Service: Supports Single Service, Multiple services (by model name), and Multiple services (by proportion).

Single Service: Select one AI service and set the Model Name. The model name can be passed through or rewritten.

Multiple services (by model name): Routes requests to different services by matching the model name in the request body against a rule. The rule supports the wildcard characters ? and *. For example, qwen-* can match qwen-max and qwen-long.

Multiple services (by proportion): Select multiple AI services and set their weights. This mode supports passing through or rewriting the model name.
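The two multi-service modes can be illustrated with a short sketch. It assumes that rules are evaluated in order and the first match wins, and that weights are relative proportions; neither detail is stated above. The service names and both function names are hypothetical. Python's fnmatch also accepts [seq] patterns, which the gateway rule may not support.

```python
import random
from fnmatch import fnmatchcase
from typing import Dict, List, Optional, Tuple

# Hypothetical rule table: (model-name pattern, service name).
RULES: List[Tuple[str, str]] = [
    ("qwen-*", "service-qwen"),
    ("gpt-4?", "service-openai"),
]


def pick_service(model: str, rules: List[Tuple[str, str]] = RULES) -> Optional[str]:
    """Return the service of the first rule whose pattern matches the model name."""
    for pattern, service in rules:
        # "?" matches exactly one character, "*" matches any run of characters.
        if fnmatchcase(model, pattern):
            return service
    return None


def pick_weighted(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick a service at random, in proportion to its weight."""
    services = list(weights)
    return rng.choices(services, weights=[weights[s] for s in services], k=1)[0]
```

Here pick_service("qwen-max") and pick_service("qwen-long") both resolve to the hypothetical service-qwen entry, mirroring the qwen-* example.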

Fallback: You can enable this feature and configure multiple fallback policies in sequence. You can reuse the same service.
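Conceptually, sequential fallback tries each configured service in order until one succeeds. The sketch below assumes that any raised exception triggers the next fallback. The conditions that the gateway actually uses are not described here, and all names are hypothetical.

```python
from typing import Callable, Optional, Sequence


def call_with_fallback(
    primary: Callable[[str], str],
    fallbacks: Sequence[Callable[[str], str]],
    request: str,
) -> str:
    """Call the primary service, then each fallback in order, until one succeeds."""
    last_error: Optional[Exception] = None
    for service in [primary, *fallbacks]:
        try:
            return service(request)
        except Exception as exc:  # Assumption: any failure triggers the next fallback.
            last_error = exc
    raise RuntimeError("all services failed") from last_error
```

Because the fallbacks are a plain sequence, the same service can appear more than once, which corresponds to reusing a service across policies.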

First Packet Timeout: The maximum time, in milliseconds, to wait for the first response packet in a streaming response. This setting is suitable for streaming interaction scenarios that are sensitive to response latency. A value of 0 disables this feature.
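The idea behind a first packet timeout can be sketched with asyncio: only the wait for the first chunk is bounded, and later chunks are passed through without a deadline. This is an illustration under assumptions, not the gateway's implementation. The function name is hypothetical, and what the gateway does after a timeout (for example, returning an error or switching to a fallback) is not specified here, so the sketch simply raises.

```python
import asyncio
from typing import AsyncIterator


async def stream_with_first_packet_timeout(
    stream: AsyncIterator[str], timeout_ms: int
) -> AsyncIterator[str]:
    """Yield chunks from stream, bounding only the wait for the first chunk."""
    iterator = stream.__aiter__()
    try:
        if timeout_ms > 0:
            # Raises asyncio.TimeoutError if the first chunk arrives too late.
            first = await asyncio.wait_for(iterator.__anext__(), timeout_ms / 1000)
        else:
            # A value of 0 disables the timeout.
            first = await iterator.__anext__()
    except StopAsyncIteration:
        return  # The stream ended without producing any chunk.
    yield first
    async for chunk in iterator:
        yield chunk
```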

Resource Group: You can select the default resource group, an existing resource group, or create a new one. Resource groups are used to group, authorize, and monitor resources in your account.

To create a new resource group, you can click Create Resource Group.

Confirm the parameters and click OK to create the Model API.

Default route details

This section describes the default routes that the system automatically creates when you select different Protocols for different Scenarios.

Text generation (Text)

Protocol: OpenAI compatible (OpenAI/v1)

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Creates a model response for the given chat conversation. |
| | | POST | Creates a completion for the provided prompt and parameters. |

Protocol: Anthropic (Anthropic)

The Anthropic protocol is designed for the Anthropic series of models, such as Claude. It provides native message formats and interaction methods. This protocol is suitable for application scenarios that require the native Anthropic API format.

Large model providers that support this protocol include Alibaba Cloud Model Studio (Qwen), Claude, Moonshot AI (Moonshot), and Zhipu AI. The AI services from these providers automatically support the Anthropic protocol without requiring extra configuration.

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Creates a message for the given chat conversation using Anthropic's native message format. |

Image generation (Image)

Protocol: Alibaba Cloud Model Studio image generation

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Generate an image from text (text-to-image synthesis). |
| | | POST | Generate an image from an image (image-to-image synthesis). |
| | | POST | Generate an outpainted image (image outpainting). |
| | | POST | Generate a virtual model image. |
| | | POST | Generate an image with a generated background. |
| | | GET/POST/PUT/PATCH/DELETE | Manage asynchronous tasks. |

Protocol: OpenAI compatible

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Generate an image. |
| | | POST | Edit an image. |
| | | POST | Creates a variation of a given image. |

Protocol: ComfyUI

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | GET | WebSocket endpoint for real-time communication with the server. |
| | | GET | Retrieve a list of available embeddings. |
| | | GET | Retrieve a list of extensions that register a web directory. |
| | | GET | Retrieve server features and capabilities. |
| | | GET | Retrieve a list of available model types. |
| | | GET | Retrieve models in a specific folder. |
| | | GET | Retrieve a map of custom node modules and associated template workflows. |
| | | POST | Upload an image. |
| | | POST | Upload a mask. |
| | | GET | View an image. Lots of options. |
| | | GET | Retrieve metadata for a model. |
| | | GET | Retrieve system information, such as Python version, devices, and VRAM. |
| | | GET/POST | Retrieve current queue status and execution information or submit a prompt to the queue. |
| | | GET | Retrieve details of all node types. |
| | | GET | Retrieve details of one node type. |
| | | GET/POST | Retrieve the queue history. |
| | | GET | Retrieve the queue history for a specific prompt. |
| | | GET/POST | Retrieve the current state of the execution queue or manage queue operations. |
| | | POST | Stop the current workflow execution. |
| | | POST | Free memory by unloading specified models. |
| | | GET | List user data files in a specified directory. |
| | | GET | Lists files and directories in a structured format. |
| | | GET/POST/DELETE | Retrieve, upload, update, or delete a specific user data file. |
| | | POST | Move or rename a user data file. |
| | | GET/POST | Get user information or create a new user. |

Video generation (Video)

Protocol: Alibaba Cloud Model Studio video generation

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Generate a video (video synthesis). |
| | | POST | Generate a video from an image (image-to-video synthesis). |
| | | GET/POST/PUT/PATCH/DELETE | Manage asynchronous tasks. |

Speech synthesis (Audio)

Protocol: Alibaba Cloud Model Studio speech synthesis

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | GET | Generate audio from text (text-to-audio synthesis). |

Protocol: OpenAI compatible (OpenAI/v1)

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Generate speech audio. |

Vectorization (Embedding)

Protocol: OpenAI compatible (OpenAI/v1)

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Creates an embedding vector representing the input text. |

Text reranking (Rerank)

Protocol: Alibaba Cloud Model Studio text reranking

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Reranks the given documents based on query relevance. |

Protocol: vLLM (vLLM)

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | POST | Reranks the given documents based on query relevance. |

Others

Protocol: OpenAI compatible (OpenAI/v1)

| Route Name | Path | Method | Description |
| --- | --- | --- | --- |
| | | GET/POST/PUT/PATCH/DELETE | Manage models. |
| | | GET/POST/PUT/PATCH/DELETE | Manage files. |
| | | GET/POST/PUT/PATCH/DELETE | Manage batches. |
| | | GET/POST/PUT/PATCH/DELETE | Manage fine-tuning jobs. |

Compatibility: When you create an AI service for large model providers that support the Anthropic protocol, such as Alibaba Cloud Model Studio, Claude, Moonshot AI, and Zhipu AI, multiple protocols are automatically supported. These protocols include the OpenAI compatible protocol and the Anthropic protocol. You can then select the appropriate protocol when you create a Model API.

Edit a Model API

Log on to the AI Gateway console and choose Instance. In the top menu bar, select a region, then click the target instance ID.

In the navigation pane on the left, click Model API, and then click Edit in the Actions column of the target API. In the Edit Model API panel, modify the parameters. For more information about the parameters, see Create a Model API.

Confirm your changes and click OK.

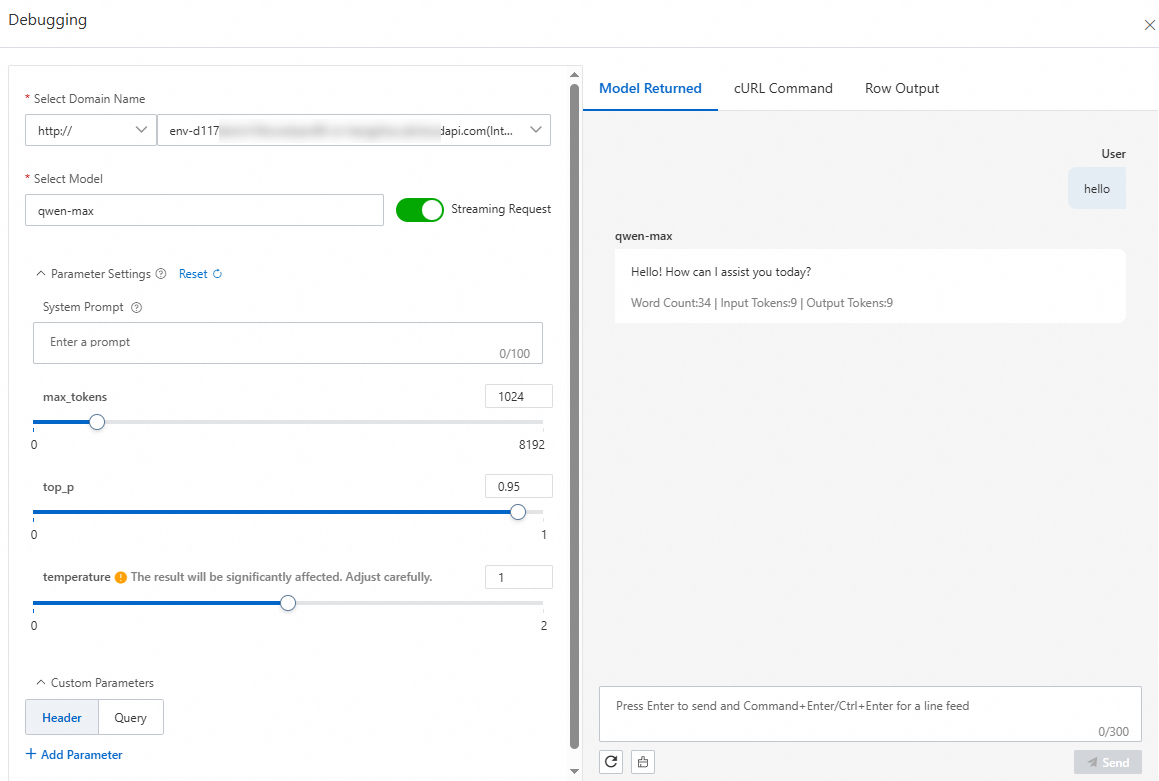

Debug a Model API

Currently, you can only debug text generation using the /v1/chat/completions endpoint.

Log on to the AI Gateway console and choose Instance. In the top menu bar, select a region, then click the target instance ID.

In the navigation pane on the left, select Model API, and click Debug in the Actions column of the target API.

In the Debugging panel, select a domain name and model, enable the Streaming Request switch if needed, and configure the parameters and custom parameters. On the Model Returned tab, enter your content and click Send to start debugging.
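Outside the console, an equivalent request to the /v1/chat/completions endpoint can be assembled by hand. The sketch below only builds the request in the OpenAI-compatible format and does not send it. The https scheme, the Bearer authorization header, the example domain, and the function name are assumptions; your consumer authorization settings may require different credentials.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(
    domain: str,
    model: str,
    prompt: str,
    stream: bool = False,
    api_key: Optional[str] = None,
    base_path: str = "/",
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completions request."""
    # Assumption: the API is reachable over https under the configured base path.
    url = f"https://{domain}{base_path.rstrip('/')}/v1/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,  # Corresponds to the Streaming Request switch.
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: a Bearer token; the required credential depends on your setup.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )
```

To send the request, pass the returned object to urllib.request.urlopen and read the response body.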

Delete a Model API

Log on to the AI Gateway console and choose Instance. In the top menu bar, select a region, then click the target instance ID.

In the navigation pane on the left, select Model API, and then click Delete in the Actions column of the target API. In the confirmation dialog box that appears, enter the API name and click Delete.