Notebook is an interactive data analysis and development platform that provides features such as job editing, data analysis, and data visualization. If you want to use Notebook to develop Spark SQL jobs, you can use the Notebook feature of Data Management (DMS) to complete job development.

Prerequisites

An AnalyticDB for MySQL Enterprise Edition, Basic Edition, or Data Lakehouse Edition cluster is created.

A job resource group is created for the AnalyticDB for MySQL cluster.

A database account is created for the AnalyticDB for MySQL cluster.

If you use an Alibaba Cloud account, you need to only create a privileged account.

If you use a Resource Access Management (RAM) user, you must create a privileged account and a standard account and associate the standard account with the RAM user.

AnalyticDB for MySQL is authorized to assume the AliyunADBSparkProcessingDataRole role to access other cloud resources.

The log storage path of Spark applications is configured for the AnalyticDB for MySQL cluster.

NoteLog on to the AnalyticDB for MySQL console. Find the cluster that you want to manage and click the cluster ID. In the left-side navigation pane, choose . Click Log Settings. In the dialog box that appears, select the default path or specify a custom storage path. You cannot set the custom storage path to the root directory of OSS. Make sure that the custom storage path contains at least one layer of folders.

An Object Storage Service (OSS) bucket is created in the same region as the AnalyticDB for MySQL cluster.

Limits

The Notebook feature is supported only in the China (Hangzhou) region.

Procedure

Create and go to a workspace.

- Log on to the DMS console V5.0.

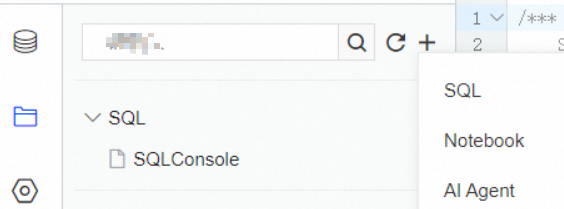

Move the pointer over the

icon in the upper-left corner and choose . Note

icon in the upper-left corner and choose . NoteIf you use the DMS console in normal mode, choose in the top navigation bar.

Click Create Workspace. In the Create Workspace dialog box, configure the Workspace Name and Region parameters and click OK.

Click Go to Workspace in the Actions column of the workspace to go to the workspace.

(Optional) Add workspace members. If a workspace has multiple users, you must perform this step to assign different roles to each user.

Configure the code storage.

On the

tab, click Storage Management.

tab, click Storage Management.Configure the OSS path in the Code Storage section.

Add a resource.

On the

tab, click Resource Configuration.

tab, click Resource Configuration.Click Add Resource. In the Add Resource panel, configure the parameters that are described in the following table.

Parameter

Required

Description

Resource Name

Yes

The name of the resource. You can enter a custom name.

Resource Introduction

Yes

The description of the resource. You can enter the purpose of the resource.

Image

Yes

Only Spark3.5+Python3.9 is supported.

AnalyticDB Instance

Yes

The ID of the AnalyticDB for MySQL cluster.

NoteIf you cannot find the desired cluster, check whether the cluster has been registered with DMS.

AnalyticDB Resource Group

Yes

The name of the job resource group.

Executor Spec

Yes

The resource specifications of Spark executors. In this example, the default medium specification is used.

Different resource types correspond to different specifications. For more information, see the Type column in the Spark application configuration parameters topic.

Max Executors

Min Executors

Yes

The number of Spark executors.

After you select the Spark3.5+Python3.9 image, the Min Executors parameter is automatically set to 2, and the Max Executors parameter is automatically set to 8.

Notebook Spec

Yes

The Notebook specifications. In this example, General_Tiny_v1 (1 core, 4 GB) is used.

VPC ID

Yes

The virtual private cloud (VPC) in which the AnalyticDB for MySQL cluster resides, which ensures that Notebook can communicate with the AnalyticDB for MySQL cluster.

ImportantIf you change the VPC and vSwitch of the AnalyticDB for MySQL cluster, you must change the VPC ID and VSwitch ID parameters of the resource to the new VPC and vSwitch. Otherwise, job submissions may fail.

Zone ID

Yes

The zone in which the AnalyticDB for MySQL cluster resides.

VSwitch ID

Yes

The vSwitch to which the AnalyticDB for MySQL cluster is connected.

Security Group ID

Yes

An available security group, which ensures that Notebook can communicate with the AnalyticDB for MySQL cluster.

Release Resource

Yes

The period of time for which the resource remains idle before it is automatically released.

Dependent Jars

No

The OSS storage path of the JAR package. Specify this parameter only if you submit a job in Python and use a JAR package.

SparkConf

No

The configuration parameters that are similar to those of Apache Spark. The parameters must be in the

key: valueformat. For information about the configuration parameters that are different from those of Apache Spark or the configuration parameters that are specific to AnalyticDB for MySQL, see Spark application configuration parameters.Click Save.

Click Start in the Actions column of the resource to start the resource.

Initialize data.

Move the pointer over the

icon in the upper-left corner and choose .

icon in the upper-left corner and choose .Click +New. In the Add Instance dialog box, configure the parameters that are described in the following table.

Parameter

Description

Data Source

On the Alibaba Cloud tab, select OSS.

Basic Information

File and Log Storage

This parameter is automatically set to OSS.

Instance Region

The region in which the AnalyticDB for MySQL cluster resides.

Connection Method

This parameter is automatically set to Connection String Address.

Connection String Address

Set the value to oss-cn-hangzhou.aliyuncs.com.

Bucket

The name of the bucket.

Access mode

The access method. In this example, Security Hosting - Manual is used.

AccessKey ID

The AccessKey ID of an Alibaba Cloud account or a RAM user that has permissions to access OSS.

For information about how to obtain an AccessKey ID and an AccessKey secret, see Accounts and permissions.

AccessKey Secret

The AccessKey secret of an Alibaba Cloud account or a RAM user that has permissions to access OSS.

For information about how to obtain an AccessKey ID and an AccessKey secret, see Accounts and permissions.

Advanced Information

The optional parameters. For more information, see the "Advanced Information" section of the Register an Alibaba Cloud database instance topic.

After you configure the preceding parameters, click Test Connection in the lower-left corner.

NoteIf the connection test fails, check the instance information based on the error message.

After the Successful connection message is displayed, click Submit.

Go to the workspace and click the

tab.

tab.On the Data Lake Data tab, click Add OSS and select the bucket that you specified in Step b.

Create a notebook.

On the

tab, click the

tab, click the  icon and select Notebook.

icon and select Notebook.

Develop a Spark SQL job on the Notebook page.

NoteFor information about the buttons on the Notebook page, see the "Notebook UI" section of the Use Notebook to query and analyze data topic.

Run the following command to download Python dependencies:

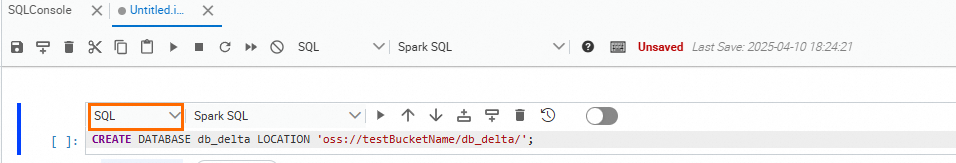

pip install deltaSwitch the cell type to SQL and execute the following statement to create a database.

NoteThe db_delta database created in Step b and the

sample_dataexternal table created in Step c are automatically displayed in the AnalyticDB for MySQL cluster. You can analyze thesample_datatable in the AnalyticDB for MySQL console.

CREATE DATABASE db_delta LOCATION 'oss://testBucketName/db_delta/'; -- Specify the storage path for data in the db_delta database.Switch the cell type to Code and run the following code to create an external table named

sample_dataand insert data into the table. The data of thesample_dataexternal table is stored in the OSS path specified in Step b.# -*- coding: utf-8 -*- import pyspark from delta import * from pyspark.sql.types import * from pyspark.sql.functions import * print("Starting Delta table creation") data = [ ("Robert", "Baratheon", "Baratheon", "Storms End", 48), ("Eddard", "Stark", "Stark", "Winterfell", 46), ("Jamie", "Lannister", "Lannister", "Casterly Rock", 29), ("Robert", "Baratheon", "Baratheon", "Storms End", 48), ("Eddard", "Stark", "Stark", "Winterfell", 46), ("Jamie", "Lannister", "Lannister", "Casterly Rock", 29), ("Robert", "Baratheon", "Baratheon", "Storms End", 48), ("Eddard", "Stark", "Stark", "Winterfell", 46), ("Jamie", "Lannister", "Lannister", "Casterly Rock", 29) ] schema = StructType([ StructField("firstname", StringType(), True), StructField("lastname", StringType(), True), StructField("house", StringType(), True), StructField("location", StringType(), True), StructField("age", IntegerType(), True) ]) sample_dataframe = spark.createDataFrame(data=data, schema=schema) sample_dataframe.delta').mode("overwrite").option('mergeSchema','true').saveAsTable("db_delta.sample_data")Switch the cell type to SQL and execute the following statement to query data from the

sample_datatable:SELECT * FROM db_delta.sample_data;

To use Spark SQL to analyze the

sample_datatable in the AnalyticDB for MySQL console, perform the following steps:Log on to the AnalyticDB for MySQL console. In the upper-left corner of the console, select a region. In the left-side navigation pane, click Clusters. On the Clusters page, click an edition tab. Find the cluster that you want to manage and click the cluster ID.

In the left-side navigation pane, choose . On the page that appears, select the Spark engine and an interactive resource group.

Query data from the

sample_datatable.SELECT * FROM db_delta.sample_data LIMIT 1000;

References

Notebook: describes the information about Notebook.