This topic describes common issues and solutions when using the node instant scaling feature.

Known limitations

Feature limitations

Node instant scaling does not support the swift mode.

A maximum of 180 nodes can be added to a node pool in a single scale-out batch.

Scale-in cannot be disabled for a specific cluster.

Note: To disable scale-in for a specific node, see How do I prevent node instant scaling from removing specific nodes?

Node instant scaling does not check the inventory of preemptible instances. If the Billing Method of the node pool is set to preemptible instances and Use Pay-as-you-go Instances When Spot Instances Are Insufficient is enabled for the node pool, pay-as-you-go instances can be provisioned even when the inventory of preemptible instances is sufficient.

Inability to perfectly predict available node resources

The available resources on a newly provisioned node may not precisely match the instance type's specifications. This is because the underlying OS and system daemons on the ECS instance consume resources. For details, see Why is the memory size of a purchased instance different from the memory size defined in its instance type?

Due to this overhead, the resource estimates used by the node instant scaling add-on may be slightly higher than the actual allocatable resources on a new node. While this discrepancy is usually small, it's important to be aware of the following when configuring your pod resource requests:

Request less than the full instance capacity. The total requested resources, including CPU, memory, and disk, must be less than the instance type specifications. As a general guideline, we recommend that a pod's total resource requests do not exceed 70% of the node's capacity.

Account for non-Kubernetes or static pods. When determining if a node has sufficient resources, the node instant scaling add-on only considers the resource requests of Kubernetes pods (including pending pods and DaemonSet pods). If you run static pods that are not managed as DaemonSets, you must manually account for and reserve resources for them.

Test large pods in advance. If a pod requests a large amount of resources, for example, more than 70% of a node's resources, you must test and confirm in advance that the pod can be scheduled to a node of the same instance type.
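The 70% guideline above can be checked before you deploy a workload. The following is a minimal sketch, not part of the add-on: the `Resources` type and the `exceeds_guideline` helper are hypothetical names introduced for illustration, and the check covers only CPU and memory.

```python
from __future__ import annotations

from dataclasses import dataclass

# Guideline from the text: keep a pod's total requests at or below 70% of node capacity.
RECOMMENDED_FRACTION = 0.70


@dataclass(frozen=True)
class Resources:
    """Hypothetical container for CPU and memory amounts."""
    cpu_millicores: int
    memory_mib: int


def exceeds_guideline(pod: Resources, node: Resources,
                      fraction: float = RECOMMENDED_FRACTION) -> list[str]:
    """Return the names of resources whose request is above fraction * capacity."""
    over = []
    if pod.cpu_millicores > node.cpu_millicores * fraction:
        over.append("cpu")
    if pod.memory_mib > node.memory_mib * fraction:
        over.append("memory")
    return over


# A 4 vCPU / 8 GiB instance type, expressed in millicores and MiB.
node = Resources(cpu_millicores=4000, memory_mib=8192)
print(exceeds_guideline(Resources(2000, 4096), node))  # within the guideline
print(exceeds_guideline(Resources(3000, 4096), node))  # CPU request is above 70%
```

A non-empty result does not mean the pod cannot be scheduled. It marks the pod as one that should be tested on a real node of that instance type first.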

Limited simulatable resource types

The node instant scaling add-on supports only a limited number of resource types for simulating and determining whether to perform scaling operations. For more information, see What resource types can node instant scaling simulate?

Storage constraints

The autoscaler is not aware of specific storage constraints that a pod may have. This includes requirements such as:

Needing to run in a specific Availability Zone to access a persistent volume (PV).

Requiring a node that supports a specific disk type (such as ESSD).

Solution: Configure a dedicated node pool

If your application has such storage dependencies, configure a dedicated node pool for it before enabling Auto Scaling on that pool.

By presetting the Availability Zone, instance type, and disk type in the node pool's configuration, you ensure that any newly provisioned nodes will meet the pod's storage mounting requirements. This prevents scheduling failures and pod startup errors caused by resource mismatches.

Scale-out behavior

What resource types can node instant scaling simulate?

The following resource types are supported for simulating and determining scaling behavior.

cpu

memory

ephemeral-storage

aliyun.com/gpu-mem # Only shared GPUs are supported.

nvidia.com/gpu

Does node instant scaling support provisioning an appropriately sized instance type from the node pool based on a pod's resource requests?

Yes, this is a supported feature. The autoscaler intelligently selects the most resource-efficient and cost-effective instance type that can satisfy the pod's requirements.

For example, consider a node pool configured with two instance types: a smaller 4 vCPU / 8 GiB type and a larger 12 vCPU / 48 GiB type.

If a pod requests 2 vCPUs, node instant scaling will prioritize provisioning a new 4 vCPU / 8 GiB node to schedule the pod.

If you later update the node pool's configuration, replacing the 4 vCPU / 8 GiB type with an 8 vCPU / 16 GiB type, node instant scaling will automatically adapt and begin provisioning 8 vCPU / 16 GiB nodes for similar future requests.

If a node pool has multiple instance types, how does node instant scaling choose which one to provision?

When multiple instance types are configured in a node pool, node instant scaling follows a default selection logic:

Filter by availability: It periodically identifies and filters out any instance types that are currently experiencing insufficient inventory in the region.

Sort by size: It then sorts the remaining available instance types in ascending order based on the number of vCPU cores.

Select the first match: Starting with the smallest instance type, it checks each one to see if it can satisfy the resource requests of the unschedulable pod. The first instance type in the sorted list that meets the pod's requirements is selected for provisioning. Node instant scaling does not evaluate any of the larger instance types after a suitable match is found.
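The three steps above amount to a filter, a sort, and a first-fit search. The following sketch illustrates that logic under simplifying assumptions: `InstanceType` and `select_instance_type` are hypothetical names, only CPU and memory are compared, and the raw instance specification is used where the real add-on would have to account for reserved resources.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str            # hypothetical label, not a real ECS instance type name
    vcpu: int
    memory_gib: float
    in_stock: bool = True


def select_instance_type(types: list[InstanceType], cpu_request: float,
                         memory_request_gib: float) -> Optional[InstanceType]:
    # Step 1: filter out instance types with insufficient inventory.
    available = [t for t in types if t.in_stock]
    # Step 2: sort the remaining types in ascending order of vCPU count.
    available.sort(key=lambda t: t.vcpu)
    # Step 3: return the first type that fits; larger types are never evaluated.
    for t in available:
        if t.vcpu >= cpu_request and t.memory_gib >= memory_request_gib:
            return t
    return None  # no configured type can satisfy the pod


pool = [InstanceType("large", 12, 48), InstanceType("small", 4, 8)]
print(select_instance_type(pool, cpu_request=2, memory_request_gib=4).name)  # small
```

If the smaller type is out of stock, the same call falls through to the larger one, which is why configuring several instance types improves the chance of a successful scale-out.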

When using node instant scaling, how can I track the current availability of instance types in my node pool?

Node instant scaling provides health metrics that periodically update the inventory of instance types in a node pool with Auto Scaling enabled. When the inventory status of an instance type changes, node instant scaling emits a Kubernetes event named InstanceInventoryStatusChanged. You can watch for these events to monitor the health of your node pool's inventory. This helps you evaluate if your chosen instance types are reliably in stock and allows you to make adjustments to your configuration ahead of time. For more information, see View the health status of node instant scaling.

How can I optimize the node pool configuration to prevent scale-out failures due to insufficient inventory?

Consider the following configuration suggestions to expand the range of available instance types:

Configure multiple optional instance types for the node pool, or use a generalized configuration.

Configure multiple zones for the node pool.

Why does node instant scaling fail to add nodes?

Check whether any of the following conditions apply:

The instance types configured for the node pool have insufficient inventory.

The instance types configured for the node pool cannot meet the pod's resource requests. The resources listed in an ECS instance type's specification are not fully available to pods. Account for the following resource reservations at runtime:

During instance creation, some resources are consumed by virtualization and the OS. For more information, see Why does a purchased instance have a memory size that is different from the memory size defined in the instance type?

Container Service for Kubernetes (ACK) requires a certain amount of node resources to run Kubernetes add-ons and system processes, such as kubelet, kube-proxy, Terway, and the container runtime. See Resource reservation policy for details.

By default, system add-ons are installed on nodes. The resources requested by a pod must be less than the instance specifications.

You have not completed the authorization as described in Enable instant elasticity for nodes.

The node pool with Auto Scaling enabled fails to scale out instances.

To ensure the accuracy of subsequent scaling and system stability, node instant scaling does not perform scaling operations until issues with abnormal nodes are resolved.

How do I configure custom resources for a node pool with node instant scaling enabled?

Configure ECS tags with the following fixed prefix for a node pool with node instant scaling enabled. This allows the scaling add-on to identify the available custom resources in the node pool or the exact values of specified resources.

The node instant scaling add-on (ACK GOATScaler) must be version 0.2.18 or later. To upgrade, see Manage add-ons.

goatscaler.io/node-template/resource/{resource-name}:{resource-size}

Example:

goatscaler.io/node-template/resource/hugepages-1Gi:2Gi

Scale-in behavior

Why does node instant scaling fail to remove nodes?

Check whether any of the following conditions apply:

The option to scale in only empty nodes is enabled, but the node being checked is not empty.

The total resource requests of the pods on the node exceed the configured scale-in threshold.

Pods from the kube-system namespace are running on the node.

The pods on the node have a mandatory scheduling policy that prevents other nodes from running them.

The pods on the node have a PodDisruptionBudget, and the minimum number of available pods has been reached.

The node was added recently. Node instant scaling does not scale in a node within 10 minutes after the node is added.

Offline nodes exist. An offline node is a running instance that does not have a corresponding node object. The node instant scaling add-on supports an automatic cleanup feature in version 0.5.3 and later. For earlier versions, you must manually delete these residual instances.

Version 0.5.3 is in canary release. To use it, submit a ticket and request access.

To upgrade the add-on, see Add-ons.

On the Node Pools page, click Sync Node Pool, then click Details. On the Nodes tab, check whether any nodes are in the offline state.
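The checklist above can be read as a series of independent conditions, any one of which keeps a node from being removed. The sketch below is only an illustration of that reading and is not the add-on's implementation: `Pod`, `NodeState`, and `scale_in_blockers` are hypothetical names, the threshold is modeled as requested CPU divided by allocatable CPU, and offline nodes are left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Pod:
    namespace: str
    cpu_request_millicores: int
    pinned_to_node: bool = False   # assumption: a mandatory scheduling policy ties the pod here
    pdb_exhausted: bool = False    # assumption: its PodDisruptionBudget allows no more evictions


@dataclass
class NodeState:
    age_minutes: float
    cpu_allocatable_millicores: int
    pods: list[Pod] = field(default_factory=list)


def scale_in_blockers(node: NodeState, only_empty: bool, threshold: float) -> list[str]:
    """Return human-readable reasons why the node would not be scaled in."""
    reasons = []
    if node.age_minutes < 10:
        reasons.append("node was added less than 10 minutes ago")
    if only_empty and node.pods:
        reasons.append("only empty nodes are scaled in, but this node runs pods")
    requested = sum(p.cpu_request_millicores for p in node.pods)
    if requested / node.cpu_allocatable_millicores > threshold:
        reasons.append("pod resource requests exceed the scale-in threshold")
    if any(p.namespace == "kube-system" for p in node.pods):
        reasons.append("kube-system pods are running on the node")
    if any(p.pinned_to_node for p in node.pods):
        reasons.append("a pod cannot be scheduled to another node")
    if any(p.pdb_exhausted for p in node.pods):
        reasons.append("a PodDisruptionBudget has reached its minimum available pods")
    return reasons


node = NodeState(age_minutes=30, cpu_allocatable_millicores=4000,
                 pods=[Pod("default", 500)])
print(scale_in_blockers(node, only_empty=True, threshold=0.5))
```

Returning every matching reason, instead of stopping at the first, mirrors how you would work through the checklist when troubleshooting.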

What types of pods can prevent node instant scaling from removing nodes?

If a pod is not created by a native Kubernetes controller, such as a Deployment, ReplicaSet, Job, or StatefulSet, or if pods on a node cannot be safely terminated or migrated, node instant scaling may be prevented from removing the node.

Pod-basis scaling behavior control

How do I control the scale-in behavior of node instant scaling on a per-pod basis?

Use the pod annotation goatscaler.io/safe-to-evict to specify whether a particular pod should prevent the node it is running on from being scaled in by the node instant scaling add-on.

To PREVENT the node from being scaled in, add the annotation "goatscaler.io/safe-to-evict": "false" to the pod.

To ALLOW the node to be scaled in, add the annotation "goatscaler.io/safe-to-evict": "true" to the pod.

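One way to apply the annotation to an existing pod is a JSON merge patch. The sketch below only builds the patch body; the helper name `safe_to_evict_patch` is hypothetical. Note that annotation values are strings, so the value is "true" or "false" and not a boolean.

```python
import json

SAFE_TO_EVICT = "goatscaler.io/safe-to-evict"


def safe_to_evict_patch(allow_scale_in: bool) -> str:
    """Build a JSON merge patch that sets the annotation on a pod."""
    value = "true" if allow_scale_in else "false"  # annotation values must be strings
    return json.dumps({"metadata": {"annotations": {SAFE_TO_EVICT: value}}})


# Patch body that keeps the pod's node from being scaled in.
print(safe_to_evict_patch(allow_scale_in=False))
```

The printed string can be passed to kubectl patch pod <pod-name> --type merge -p '<patch>'. For pods managed by a controller such as a Deployment, the annotation generally needs to be set in the pod template so that recreated pods keep it.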
Node-basis scaling behavior control

How do I specify which nodes to delete during a node instant scaling scale-in?

Add the goatscaler.io/force-to-delete:true:NoSchedule taint to the nodes you want to remove. After you add this taint, node instant scaling directly deletes the nodes without checking the pod status or draining the pods. Use this feature with caution because it may cause service interruptions or data loss.

How do I prevent node instant scaling from removing specific nodes?

Use the node annotation "goatscaler.io/scale-down-disabled": "true" for the target node to prevent it from being scaled in by the node instant scaling add-on. The following is a sample command to add the annotation:

kubectl annotate node <nodename> goatscaler.io/scale-down-disabled=true

Can node instant scaling be configured to only scale in empty nodes?

Yes, you can configure this behavior at either the node level or cluster level. If settings are configured at both levels, the node-level setting will take precedence.

Node level configuration

Add a label to a specific node to control its scale-in behavior.

To enable (only scale in if empty):

goatscaler.io/scale-down-only-empty:true

To disable (allow scale-in even with pods):

goatscaler.io/scale-down-only-empty:false

Cluster level configuration

In the ACK console, go to the Add-ons page.

Find the node instant scaling add-on (ACK GOATScaler) and follow the on-screen instructions to set ScaleDownOnlyEmptyNodes to true or false.
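The precedence rule between the two levels can be summarized in a few lines. This is a sketch of the rule as described here, not the add-on's code: `effective_only_empty` is a hypothetical helper, and treating any label value other than "true" as false is an assumption.

```python
LABEL = "goatscaler.io/scale-down-only-empty"


def effective_only_empty(node_labels: dict, cluster_setting: bool) -> bool:
    """Resolve the empty-node-only behavior for one node."""
    raw = node_labels.get(LABEL)
    if raw is None:
        # No node-level label: the cluster-level ScaleDownOnlyEmptyNodes value applies.
        return cluster_setting
    # A node-level label takes precedence over the cluster-level setting.
    return raw == "true"  # assumption: any other value is treated as false


print(effective_only_empty({LABEL: "false"}, cluster_setting=True))  # False
print(effective_only_empty({}, cluster_setting=True))                # True
```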

Node instant scaling add-on

Does the node instant scaling add-on update automatically?

No. With the exception of system-wide maintenance or major platform upgrades, ACK does not automatically update the node instant scaling add-on (ACK GOATScaler). You must manually upgrade it on the Add-ons page in the ACK console.

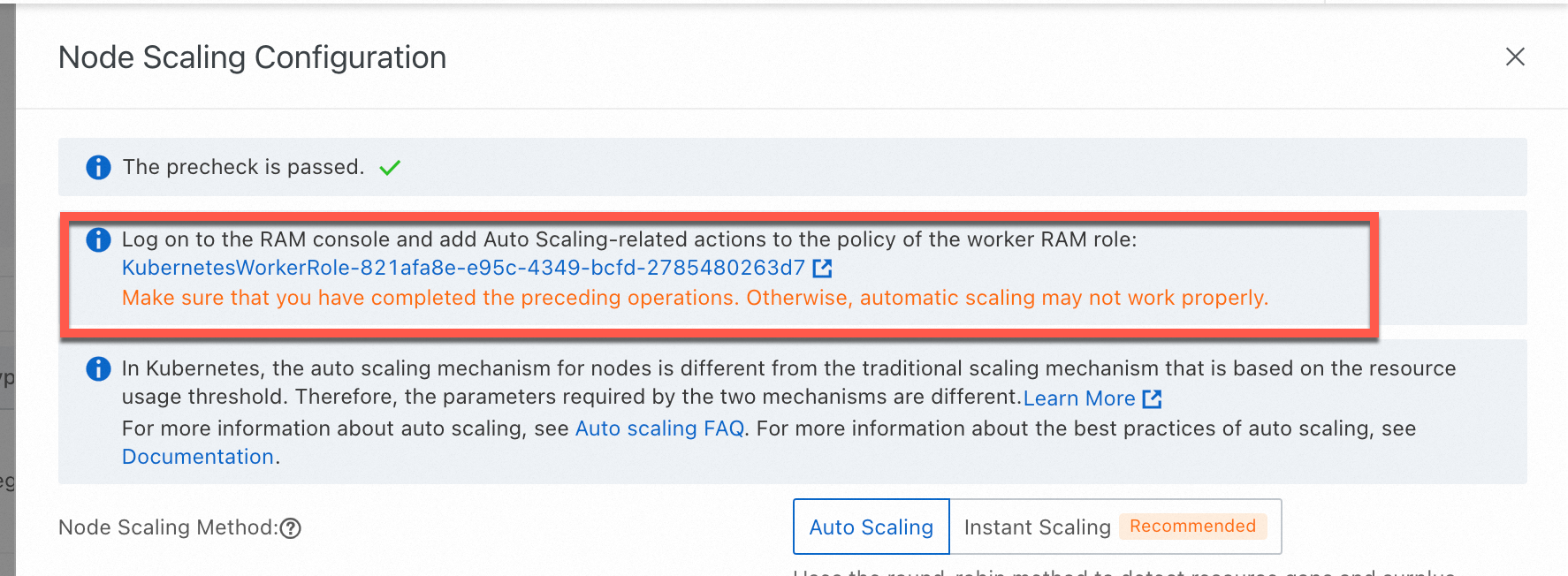

Why is node scaling not working on my ACK managed cluster even though the required role authorization is complete?

Possible cause

This issue can occur if the required token (addon.aliyuncsmanagedautoscalerrole.token) is missing from a Secret within the kube-system namespace. By default, ACK uses the cluster's Worker Role to enable auto scaling capabilities, and this token is essential for authenticating those operations.

Solution

The solution is to re-apply the required policy to the cluster's Worker Role by using the authorization wizard in the ACK console.

On the Clusters page, find the cluster to manage and click its name. In the left navigation pane, choose .

On the Node Pools page, click Enable to the right of Node Scaling.

Follow the on-screen instructions to authorize the KubernetesWorkerRole and attach the AliyunCSManagedAutoScalerRolePolicy system policy.

Manually restart the cluster-autoscaler Deployment (node auto scaling) or the ack-goatscaler Deployment (node instant scaling) in the kube-system namespace for the permissions to take effect immediately.