Argo Workflows is widely used in scenarios such as scheduled tasks, machine learning, and extract, transform, and load (ETL). Defining workflows in YAML can be challenging if you are not familiar with Kubernetes. The Hera Python SDK offers a user-friendly alternative, enabling the construction of workflows using Python code. It supports complex task scenarios, is easy to test, and integrates seamlessly with the Python ecosystem.

Introduction

Argo Workflows uses YAML to define workflows, providing clear and concise configurations. However, for users unfamiliar with YAML, the strict indentation and hierarchical structure can complicate workflow configuration.

Hera is a Python SDK that simplifies developing and submitting workflows. For complex workflows, defining the workflow in Python helps you avoid the indentation and syntax errors that hand-written YAML is prone to. The Hera Python SDK offers several benefits:

Code simplicity: Straightforward and efficient code development.

Seamless integration with the Python ecosystem: Each function serves as a template, so workflows can use existing Python frameworks, libraries, and tools.

Testability: You can use Python testing frameworks directly, which improves code quality and maintainability.
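The testability point can be illustrated with a minimal sketch. The function `count_words` and its test are hypothetical names used only for illustration. The `try`/`except` fallback is an assumption added so that the sketch also runs where Hera is not installed; it replaces `script` with a pass-through decorator.

```python
try:
    from hera.workflows import script
except ImportError:
    # Fallback for this sketch only: a pass-through decorator so the
    # example runs without Hera installed.
    def script(**_kwargs):
        def decorator(func):
            return func
        return decorator


@script()
def count_words(text: str) -> int:
    """Return the number of whitespace-separated words in text."""
    return len(text.split())


def test_count_words():
    # Outside a Workflow or DAG context the function is called like
    # any other Python function, so plain assertions are enough.
    assert count_words("argo runs workflows") == 3
    assert count_words("") == 0
```

A test runner such as pytest would collect `test_count_words` without needing an Argo Server, because nothing is submitted to the cluster.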

Prerequisites

The Argo console and components are installed, and the access credentials and the Argo Server IP address are retrieved. For instructions, see Enable batch task orchestration.

Hera is installed. Run the following command to install it:

pip install hera-workflows

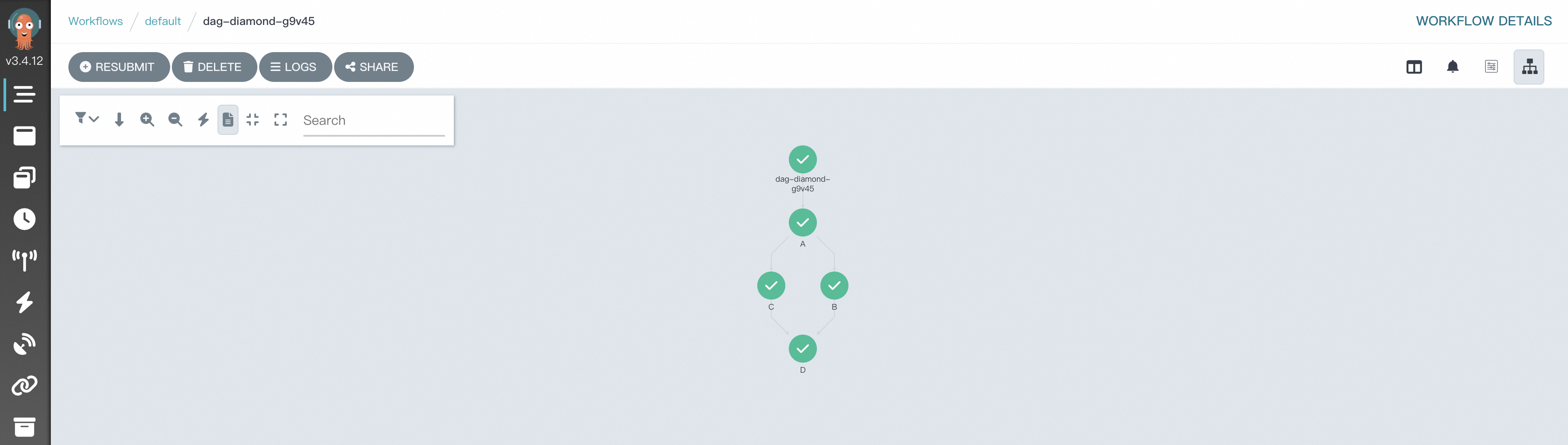

Scenario 1: Simple DAG diamond

In Argo Workflows, a directed acyclic graph (DAG) defines complex task dependencies. In a diamond structure, one task fans out to several tasks that run in parallel, and a subsequent task runs only after all of them finish. This structure is well suited to merging data streams or combining processing results. The following example uses Hera to define a diamond-shaped workflow in which task A runs first, tasks B and C run in parallel after A, and task D runs after both B and C complete.
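The ordering that a diamond imposes can be sketched with the Python standard library alone, without Hera or Argo. The sketch assumes the four-task diamond used in the sample code (A, then B and C, then D). The helper name `execution_waves` is hypothetical, and the grouping into waves only illustrates which tasks have their dependencies met at the same time; it does not describe how the Argo controller schedules pods.

```python
from graphlib import TopologicalSorter  # Python 3.9 or later


def execution_waves(dependencies):
    """Group tasks into waves; tasks within one wave have no mutual dependencies."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        # All tasks whose dependencies are already marked as done.
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Each key maps a task to the set of tasks it depends on.
diamond = {"A": set(), "B": {"A"}, "C": {"A"}, "D": {"B", "C"}}
waves = execution_waves(diamond)
print(waves)
```

Each inner list is a group of tasks that could run in parallel once the previous group has finished.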

Use the following sample code to create a file named simpleDAG.py:

    # Import the required libraries.
    from hera.workflows import DAG, Workflow, script
    from hera.shared import global_config
    import urllib3

    urllib3.disable_warnings()

    # Specify the endpoint and token.
    global_config.host = "https://${IP}:2746"  # Replace ${IP} with the Argo Server IP address.
    global_config.token = "abcdefgxxxxxx"  # Enter the token you retrieved.
    global_config.verify_ssl = False  # Skip TLS certificate verification.

    # The script decorator is a key Hera feature that lets you orchestrate Python functions as workflow tasks.
    # You can call the function within a Hera context manager, such as a Workflow or Steps context.
    # The function still runs as normal outside of any Hera context, which means that you can write unit tests for it.
    # This example prints the input message.
    @script()
    def echo(message: str):
        print(message)

    # Orchestrate the workflow. Workflow is the primary resource in Argo and a key class in Hera.
    # It is responsible for storing templates, specifying entry points, and running templates.
    with Workflow(
        generate_name="dag-diamond-",
        entrypoint="diamond",
        namespace="argo",
    ) as w:
        with DAG(name="diamond"):
            A = echo(name="A", arguments={"message": "A"})  # Create task A from the echo template.
            B = echo(name="B", arguments={"message": "B"})
            C = echo(name="C", arguments={"message": "C"})
            D = echo(name="D", arguments={"message": "D"})
            # Define dependencies: Tasks B and C depend on Task A, and Task D depends on Tasks B and C.
            A >> [B, C] >> D

    # Create the workflow.
    w.create()

Run the following command to submit the workflow:

    python simpleDAG.py

After the workflow starts running, view the DAG task progress and result in the Workflow Console (Argo).

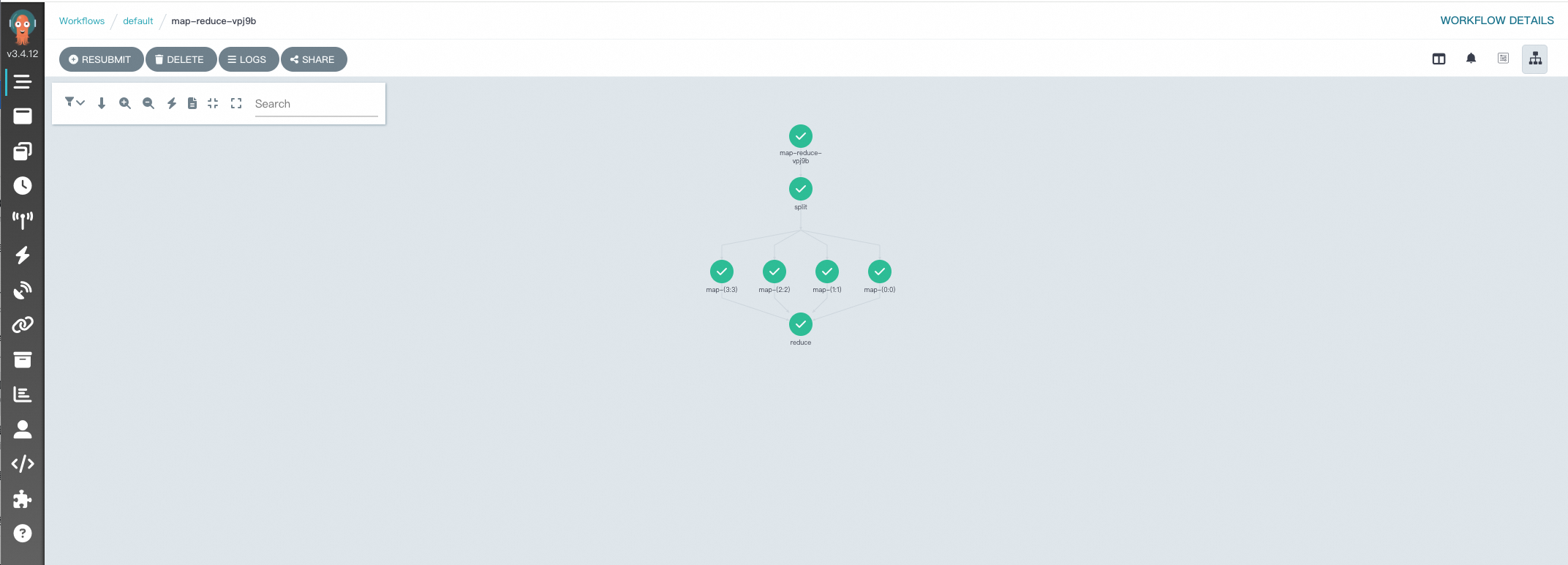

Scenario 2: MapReduce

To process MapReduce-style data in Argo Workflows, DAG templates are used to manage and coordinate tasks, simulating the Map and Reduce phases. The following example shows how to use Hera to create a simple MapReduce workflow for counting words in text files, with each step defined as a Python function for integration with the Python ecosystem.
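The Map and Reduce phases of a word count can be sketched in plain Python, without any Hera or Argo calls. The function names `split_text`, `map_count`, and `reduce_counts` are hypothetical and are not taken from map-reduce.py. In a Hera workflow, each function would be a candidate for a template created with the `script` decorator; how intermediate results move between tasks depends on your artifact configuration and is not shown here.

```python
from collections import Counter
from typing import Dict, List


def split_text(text: str, num_parts: int) -> List[str]:
    """Distribute the lines of text round-robin across num_parts chunks."""
    lines = text.splitlines()
    return ["\n".join(lines[i::num_parts]) for i in range(num_parts)]


def map_count(part: str) -> Dict[str, int]:
    """Map phase: count the words in a single chunk."""
    return dict(Counter(part.lower().split()))


def reduce_counts(partials: List[Dict[str, int]]) -> Dict[str, int]:
    """Reduce phase: merge the per-chunk counts into one total."""
    total: Counter = Counter()
    for partial in partials:
        total.update(partial)
    return dict(total)


text = "a b\nb c\nc c"
parts = split_text(text, num_parts=2)
partials = [map_count(part) for part in parts]  # These calls are independent of each other.
result = reduce_counts(partials)
print(result)
```

Because every `map_count` call is independent, the Map phase corresponds to the parallel middle of a DAG, and `reduce_counts` corresponds to the task that runs after all of them finish.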

Configure artifacts. For instructions, see Configure artifacts.

Use the following sample code to create a file named map-reduce.py:

Run the following command to submit the workflow:

    python map-reduce.py

After the workflow starts running, view the DAG task progress and result in the Workflow Console (Argo).

References

Hera documentation

For more information about Hera, see Hera overview.

For more information about how to use Hera to train large language models (LLMs), see Train an LLM with Hera.

Sample YAML deployment configurations

For more information about how to use YAML files to deploy a simple-diamond workflow, see dag-diamond.yaml.

For more information about how to use YAML files to deploy a MapReduce workflow, see map-reduce.yaml.

Contact us

If you have suggestions or questions about this product, join the DingTalk group 35688562 to contact us.