Container Service for Kubernetes (ACK) allows you to schedule and manage GPU resources by using GPU scheduling. The default GPU scheduling mode is the same as that used in open source Kubernetes. In this topic, a GPU-accelerated TensorFlow job is used as an example to describe how to quickly deploy a GPU-heavy application.

Usage notes

We recommend that you request GPU resources for GPU-accelerated nodes managed by ACK in the same way as Kubernetes extended resources. You need to pay attention to the following items when you request GPU resources for applications and use GPU resources.

Do not run GPU-heavy applications directly on nodes.

Do not use command-line tools, such as

docker,podman, andnerdctl, to create containers or use these tools to request GPU resources for containers. For example, do not run thedocker run --gpus allordocker run -e NVIDIA_VISIBLE_DEVICES=allcommand and run GPU-heavy applications.Do not add the

NVIDIA_VISIBLE_DEVICES=allorNVIDIA_VISIBLE_DEVICES=<GPU ID>environment variable to theenvsection in the pod YAML file. Do not use theNVIDIA_VISIBLE_DEVICESenvironment variable to request GPU resources for pods and run GPU-heavy applications.Do not set

NVIDIA_VISIBLE_DEVICES=alland run GPU-heavy applications when you build container images if theNVIDIA_VISIBLE_DEVICESenvironment variable is not specified in the pod YAML file.Do not add

privileged: trueto thesecurityContextsection in the pod YAML file and run GPU-heavy applications.

If you use the preceding methods to request GPU resources, the following security risks may exist.

If you use one of the preceding methods to request GPU resources on a node but do not specify the details in the device resource ledger of the scheduler, the actual GPU resource allocation information may be different from that in the device resource ledger of the scheduler. In this scenario, the scheduler can still schedule certain pods that request the GPU resources to the node. As a result, your applications may compete for resources provided by the same GPU, such as requesting resources from the same GPU, and some applications may fail to start up due to insufficient GPU resources.

Using the preceding methods may also cause other unknown issues, such as the issues reported by the NVIDIA community.

Procedure

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, find the cluster you want to manage and click its name. In the left-side pane, choose .

On the Deployments page, click Create from YAML. Paste the following code block into the Template editor and click Create.

apiVersion: v1 kind: Pod metadata: name: tensorflow-mnist namespace: default spec: containers: - image: registry.cn-beijing.aliyuncs.com/acs/tensorflow-mnist-sample:v1.5 name: tensorflow-mnist command: - python - tensorflow-sample-code/tfjob/docker/mnist/main.py - --max_steps=100000 - --data_dir=tensorflow-sample-code/data resources: limits: nvidia.com/gpu: 1 # Request one GPU for the pod. workingDir: /root restartPolicy: AlwaysIn the left-side navigation pane of the cluster management page, choose , find the pod that you created, and then click the name of the pod to view the pod information.

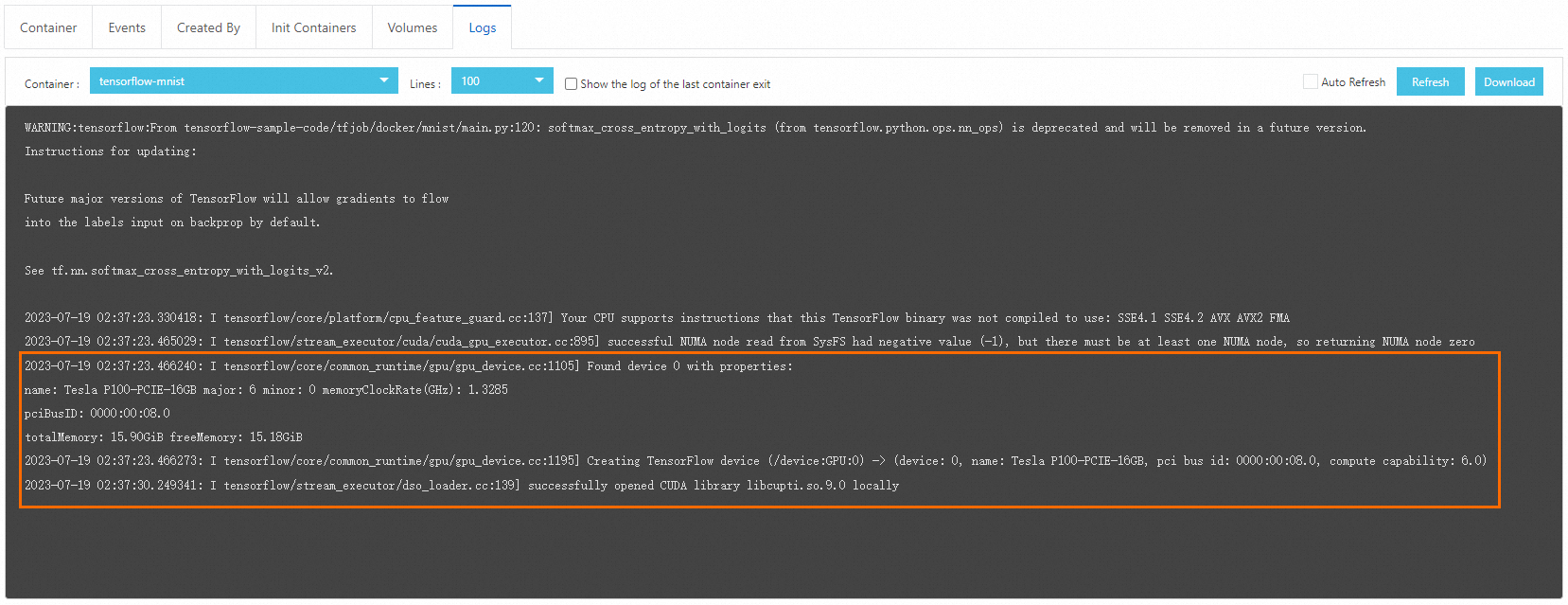

Click the Logs tab and view the log of the TensorFlow job. The following output indicates that the job is using the GPU.