Simple Log Service (SLS) offers log query and analysis features using SQL syntax. Create indexes to facilitate log queries and analysis. This topic explains the basic syntax, limitations of search and analytic statements, and the SQL functions available for these features.

Reading guide

SLS provides the log query and analysis features. For more information, see Guide to log query and analysis.

To query and analyze logs, you must first collect them to a Standard logstore. For more information, see Manage a logstore. After you create indexes, you can query and analyze only incremental logs, that is, logs written after the indexes are created. For more information, see Create indexes. If you want to query and analyze historical logs in files, you must reindex the logs. For more information, see Import historical logs from log files and Reindex logs for a logstore.

You can query tens of billions of logs. For more information, see What can I do if the "The results are inaccurate" error occurs?

SLS provides reserved fields. If you want to analyze reserved fields, see Reserved fields.

Query and analysis

Quickly query billions of logs and perform statistical analysis on the results using SQL syntax. Search statements can be executed independently, while analytic statements must be run together with a search statement. The log analysis feature allows you to analyze data from search results or all data in a logstore.

Basic syntax

Each query statement consists of a search statement and an analytic statement, separated by a vertical bar (|). The search statement can be executed on its own. The analytic statement must be executed together with a search statement, and it analyzes either the search results or all data in the logstore.

Search statement|Analytic statement

Statement | Description |

Search statement | Important: We recommend that you specify up to 30 search conditions in a search statement. |

Analytic statement | |

SLS provides ANTLR grammar files for query and analysis. Use these files with ANTLR tools for custom development based on SLS queries.

The ANTLR grammar file is as follows:

Example

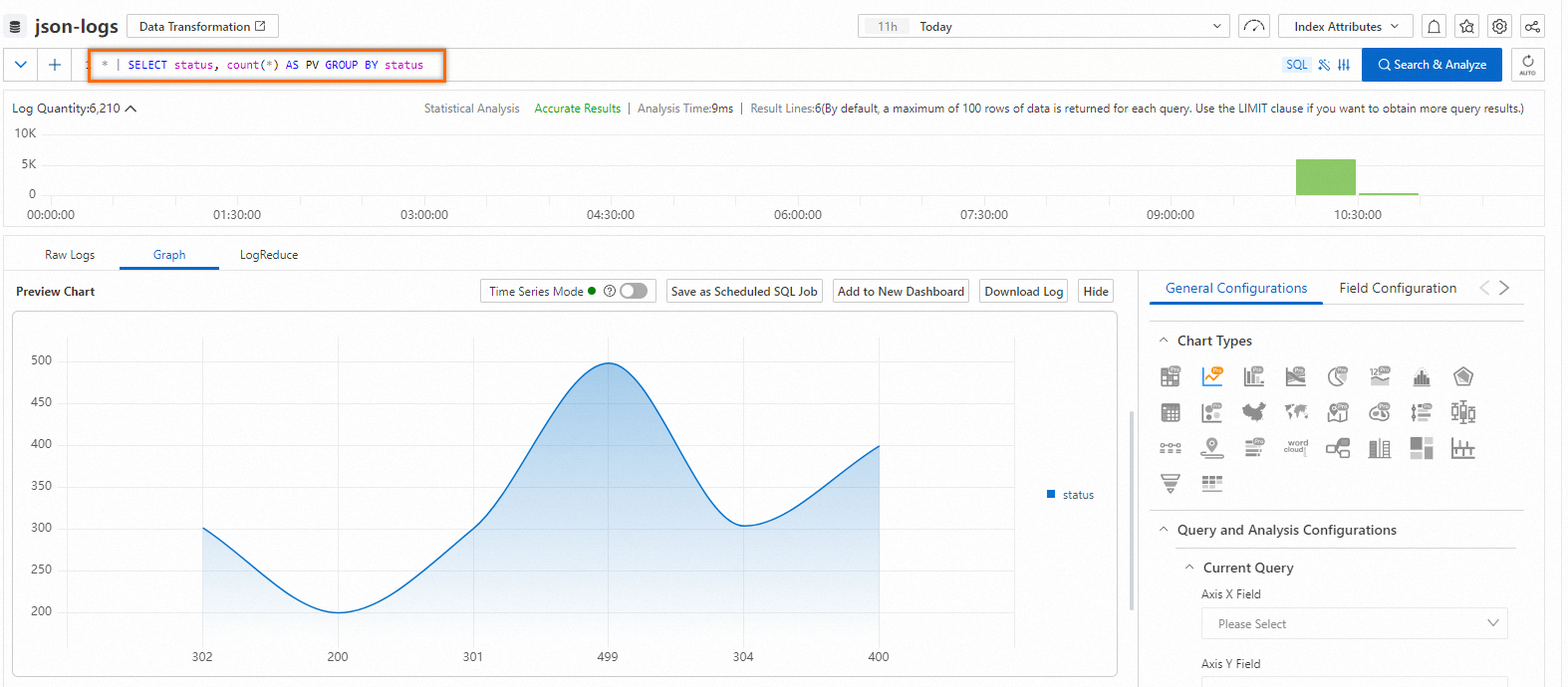

* | SELECT status, count(*) AS PV GROUP BY statusThe following figure shows the query and analysis results.
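The example statement joins a search statement (the asterisk, which matches all logs) and an analytic statement with a vertical bar. As a minimal sketch, the same string could be assembled in Python as follows. The helper name compose_query is hypothetical and is not part of any SLS SDK.

```python
def compose_query(search: str = "*", analytic: str = "") -> str:
    """Join a search statement and an optional analytic statement.

    Hypothetical helper for illustration only. An empty search statement
    falls back to "*" because an analytic statement cannot run alone.
    """
    search = search.strip() or "*"
    analytic = analytic.strip()
    if not analytic:
        # A search statement can be executed independently.
        return search
    return f"{search} | {analytic}"


query = compose_query("*", "SELECT status, count(*) AS PV GROUP BY status")
print(query)
```

Defaulting the search part to the asterisk keeps the rule that an analytic statement always runs together with a search statement.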

Advanced features

LiveTail: Monitors online logs in real time to reduce O&M workloads.

LogReduce: Extracts patterns from similar logs during log collection to efficiently understand the logs.

Contextual query: Views the context information of a specific log, facilitating troubleshooting and issue identification.

Field analysis: Provides field distribution, statistical metrics, and top 5 time series charts to help understand data.

Event settings: Easily obtains detailed information about raw logs based on the event settings.

Storeview: Performs cross-region and cross-store JOIN query operations by using Storeviews. For more information, see Overview of Storeview.

Limits on the query feature

Item | Description |

Number of keywords | The number of keywords that are used as search conditions. The number of logical operators is not included. You can specify up to 30 keywords in a search statement. |

Size of a field value | The maximum size of a field value is 512 KB. The part beyond 512 KB is not searchable. If a field value exceeds 512 KB, a keyword search may fail to return the log even though the log is stored in the logstore. Note: To set the maximum length of a log field value, see Why are field values truncated when I query and analyze logs? |

Maximum number of concurrent search statements | Each project supports up to 100 concurrent search statements. For example, 100 users can concurrently execute search statements in all logstores of a project. |

Returned result | The returned logs are displayed on multiple pages. Each page displays up to 100 logs. |

Fuzzy search | In a fuzzy search, SLS matches up to 100 words that meet the specified conditions and returns the logs that meet the search conditions and contain one or more of these words. For more information, see Fuzzy search. |

Data sorting in search results | By default, search results are displayed in descending order of the time, which is accurate to the second. If the search results are returned within nanoseconds, the search results are displayed in descending order of the time, which is accurate to the nanosecond. |
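Two of the limits above lend themselves to a client-side check before a request is sent: the 30-keyword cap (logical operators excluded) and the 100-logs-per-page cap. The following is a rough sketch only. The tokenization rule (whitespace and parentheses separate keywords, a quoted phrase stays part of one keyword) is an assumption and may not match how SLS parses a search statement. The function names are hypothetical.

```python
import re

# Logical operators are not counted as keywords (per the limits table).
LOGICAL_OPERATORS = {"and", "or", "not"}
MAX_KEYWORDS = 30  # documented limit per search statement
PAGE_SIZE = 100    # documented maximum number of logs per page

# Assumption: a keyword is a run of characters without spaces or
# parentheses, where a double-quoted phrase stays inside one keyword.
_TOKEN = re.compile(r'(?:[^\s()"]|"[^"]*")+')


def count_keywords(search_statement: str) -> int:
    """Roughly count keywords, skipping logical operators."""
    tokens = _TOKEN.findall(search_statement)
    return sum(1 for t in tokens if t.lower() not in LOGICAL_OPERATORS)


def within_keyword_limit(search_statement: str) -> bool:
    """Check the statement against the 30-keyword limit."""
    return count_keywords(search_statement) <= MAX_KEYWORDS


def page_ranges(total_logs: int, page_size: int = PAGE_SIZE):
    """Return (offset, size) pairs that cover total_logs page by page."""
    return [
        (offset, min(page_size, total_logs - offset))
        for offset in range(0, total_logs, page_size)
    ]


statement = 'status:200 and (method:GET or method:POST) not "time out"'
print(count_keywords(statement))
print(page_ranges(250))
```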

Limits of the analysis feature

Limit | Standard instance | Dedicated SQL instance (SQL enhancement) | Dedicated SQL instance (Complete accuracy) |

Concurrency | Up to 15 concurrent queries per project. | Up to 100 concurrent queries per project. | Up to 5 concurrent queries per project. |

Data volume | A single query can scan up to 400 MB of log data (excluding cached data). Data exceeding this limit is truncated and marked as incomplete query results. | A single query can scan up to 2 GB of log data (excluding cached data). Data exceeding this limit is truncated and marked as incomplete query results. | Unlimited. |

Method to enable | By default, the log analysis feature is enabled. | A switch is provided for you to manually enable Dedicated SQL. | A switch is provided for you to manually enable Dedicated SQL. |

Fee | Free of charge. | You are charged based on the actual CPU time. | You are charged based on the actual CPU time. |

Data effectiveness mechanism | You can analyze only the data that is written to Simple Log Service after the log analysis feature is enabled. If you need to analyze historical data, you must reindex the historical data. | You can analyze only the data that is written to Simple Log Service after the log analysis feature is enabled. If you need to analyze historical data, you must reindex the historical data. | You can analyze only the data that is written to Simple Log Service after the log analysis feature is enabled. If you need to analyze historical data, you must reindex the historical data. |

Return results | By default, analysis returns up to 100 rows and 100 MB of data. Exceeding 100 MB results in an error. If you need to return more data, use the LIMIT clause. | By default, analysis returns up to 100 rows and 100 MB of data. Exceeding 100 MB results in an error. If you need to return more data, use the LIMIT clause. | By default, analysis returns up to 100 rows and 100 MB of data. Exceeding 100 MB results in an error. If you need to return more data, use the LIMIT clause. |

Maximum field length | The default maximum length for a single field is 2,048 bytes (2 KB) and can be adjusted up to 16,384 bytes (16 KB). Data beyond this limit will not be included in log query and analysis. Note To change this limit, adjust Maximum Field Length. Changes apply only to new data. For more information, see Create indexes. | The default maximum length for a single field is 2,048 bytes (2 KB) and can be adjusted up to 16,384 bytes (16 KB). Data beyond this limit will not be included in log query and analysis. Note To change this limit, adjust Maximum Field Length. Changes apply only to new data. For more information, see Create indexes. | The default maximum length for a single field is 2,048 bytes (2 KB) and can be adjusted up to 16,384 bytes (16 KB). Data beyond this limit will not be included in log query and analysis. Note To change this limit, adjust Maximum Field Length. Changes apply only to new data. For more information, see Create indexes. |

Timeout period | The maximum timeout period for an analysis operation is 55 seconds. | The maximum timeout period for an analysis operation is 55 seconds. | The maximum timeout period for an analysis operation is 55 seconds. |

Number of bits for double-type field values | Double-type field values are limited to 52 bits. Exceeding this can lead to precision loss in floating-point numbers. | Double-type field values are limited to 52 bits. Exceeding this can lead to precision loss in floating-point numbers. | Double-type field values are limited to 52 bits. Exceeding this can lead to precision loss in floating-point numbers. |
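Two rows of the table above can be illustrated with a short sketch: appending a LIMIT clause to return more than the default 100 rows, and checking whether an integer would lose precision as a double. The sketch assumes that double-type values behave like IEEE 754 64-bit doubles, which is what Python's float uses. Both helper names are hypothetical.

```python
def with_limit(query: str, rows: int) -> str:
    """Append a LIMIT clause to a query statement (hypothetical helper)."""
    if rows <= 0:
        raise ValueError("rows must be positive")
    return f"{query.rstrip()} LIMIT {rows}"


def survives_double(value: int) -> bool:
    """Return True if an integer converts to a double and back unchanged.

    Assumption: the stored double is an IEEE 754 64-bit value, as in
    Python's float.
    """
    return int(float(value)) == value


print(with_limit("* | SELECT status, count(*) AS PV GROUP BY status", 1000))
print(survives_double(2**52))      # fits in the significand
print(survives_double(2**53 + 1))  # too many bits, so it is rounded
```

Values that fail such a check could be stored as text or long-type fields instead, depending on how they are analyzed.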