BladeLLM delivers efficient and user-friendly quantization capabilities for Large Language Models (LLMs), offering both weight-only quantization (weight_only_quant) and joint quantization of weights and activations (act_and_weight_quant). The platform seamlessly integrates leading quantization algorithms including GPTQ, AWQ, and SmoothQuant, while supporting diverse data types such as INT8, INT4, and FP8. This documentation provides comprehensive guidance on executing model quantization procedures.

Background information

Existing issues

The rapid advancement of LLM technology and applications presents significant challenges for inference deployment due to increasing parameter counts and context scales.

Excessive GPU memory consumption: Deploying large models necessitates substantial GPU memory for loading model weights, while the combination of sequence length and hidden layer dimensions further increases memory requirements through KV cache allocation.

Service throughput and latency issues: GPU memory limitations restrict batch sizes during LLM inference, consequently constraining overall service throughput. As model scale and context length expand, computational demands increase, impacting text generation performance. When combined with batch size limitations, this creates significant response latency challenges through request queuing under high-concurrency scenarios.

Solutions

Compressing model weights and the computation cache (for example, the KV cache) reduces GPU memory consumption during deployment and raises the maximum feasible inference batch size, which improves overall service throughput. INT8/INT4 quantization also reduces the amount of data read from GPU memory during computation, easing the memory bandwidth bottleneck in LLM inference. Further gains are possible on hardware that accelerates INT8/INT4 computation.

BladeLLM combines quantization algorithms with system-level optimization to provide a complete, high-performance quantization solution. The quantization toolkit accepts calibration data flexibly and supports multi-GPU model quantization. BladeLLM also supports CPU offload, so that models can be quantized in environments with limited GPU memory. In addition, automatic mixed precision adjusts quantization precision by falling back selected computations to floating point.

Create a quantization task

Deploy an elastic job service within Elastic Algorithm Service (EAS) of Platform for AI (PAI) to execute model quantization calibration and conversion tasks. Follow these steps:

Log on to the PAI console. Select a region on the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Custom Model Deployment section of the Deploy Service page, click Custom Deployment.

On the Custom Deployment page, configure the key parameters. For information about other parameters, see Custom deployment.

Parameter

Description

Basic Information

Service Name

Specify a name for the service, such as bladellm_quant.

Environment Information

Deployment Method

Select Image-based Deployment.

Image Configuration

In the Alibaba Cloud Image list, select .

Note: The image version is frequently updated. We recommend that you select the latest version.

Model Settings

Mount the model to be quantized. Use OSS mounting as an example. You can also choose other mounting methods. Click OSS and configure the following parameters:

Uri: Select the OSS storage directory where the model to be quantized is located. For information about how to create an Object Storage Service (OSS) directory and upload files, see Get started with the OSS console.

Mount Path: Configure the destination path to mount to the service instance, such as /mnt/model.

Enable Read-only Mode: Turn off this feature so that the quantized model can be written to the mounted path.

Command

Configure the model quantization command:

blade_llm_quantize --model /mnt/model/Qwen2-1.5B --output_dir /mnt/model/Qwen2-1.5B-qt/ --quant_algo gptq --calib_data ['hello world!']

Parameters:

--model: The input path of the model to be quantized.

--output_dir: The output path of the quantized model.

--quant_algo: The quantization algorithm. The default quantization algorithm is MinMax. If you use the default quantization algorithm, you do not need to specify --calib_data.

The input and output paths must be under the OSS path mounted in Model Settings. For information about other quantization parameters that can be configured, see Model quantization parameters.

Port Number

After you select an image, the system automatically configures port 8081. No manual modification is required.

Resource Information

Resource Type

In this example, select Public Resources. You can select other resource types based on your business requirements.

Deployment Resources

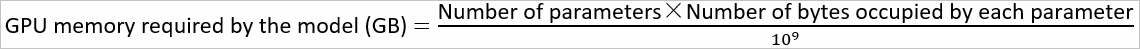

Select an instance type for running the BladeLLM quantization command. The GPU memory of the selected instance type only needs to be slightly higher than the GPU memory required by the model to be quantized. The GPU memory required by the model weights can be estimated by using the following formula:

$\text{GPU memory (bytes)} \approx \text{number of parameters} \times \text{bytes per parameter}$

For example, an FP16 model with 7 billion parameters stores 2 bytes per parameter, so it requires approximately $7 \times 10^{9} \times 2\ \text{bytes} = 14\ \text{GB}$.

Features

Task Mode

Turn on the switch to create an EAS scalable job service.

After configuring the parameters, click Deploy.

When the Service Status becomes Completed, model quantization is complete. You can go to the output path in OSS (your data source) to view the generated quantized model, and deploy the model on EAS by referring to Get started with BladeLLM.
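As a rough aid for choosing an instance type in the Deployment Resources step, the following sketch estimates the GPU memory needed to hold the model weights. It assumes the common rule of thumb that weight memory equals the parameter count multiplied by the bytes per parameter. The function name and the data type table are illustrative and are not part of BladeLLM. The estimate covers weights only and ignores the KV cache, activations, and working memory used during calibration.

```python
# Bytes needed to store one parameter for each data type (illustrative table).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "int8": 1.0,
    "fp8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # A 7-billion-parameter model before and after 4-bit weight-only quantization.
    print(weight_memory_gb(7e9, "fp16"))  # 14.0
    print(weight_memory_gb(7e9, "int4"))  # 3.5
```

In practice, leave some headroom above this figure, because the estimate does not include quantization scales or runtime buffers.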

Introduction to quantization techniques

This section presents the various quantization modes, algorithms, and their respective application scenarios within the BladeLLM quantization toolkit, along with detailed parameter usage instructions.

Quantization modes

BladeLLM supports two quantization modes: weight_only_quant and act_and_weight_quant, which can be specified by using the quant_mode parameter.

weight_only_quant

Definition: quantizes only model weights.

Characteristics: Compared to act_and_weight_quant, weight_only_quant typically preserves model accuracy more effectively. In scenarios where GPU memory bandwidth represents the primary deployment constraint, weight_only_quant offers an optimal balance between model performance and accuracy maintenance.

Supported data types: supports 8-bit and 4-bit. Both are signed symmetric quantization by default.

Quantization granularity: supports per-channel quantization and block-wise quantization.

Supported algorithms: minmax, gptq, awq, and smoothquant+.

act_and_weight_quant

Definition: quantizes both model weights and activation values simultaneously.

Characteristics: Unlike weight_only_quant, this approach enables genuine low-bit dense computation, substantially enhancing operator execution performance.

Supported data types: supports 8-bit. It is signed symmetric quantization by default.

Quantization granularity: uses per-token dynamic quantization for activation values and per-channel static quantization for weights. Block-wise quantization is not currently supported.

Supported algorithms: minmax, smoothquant, and smoothquant_gptq.
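To make the granularity used by act_and_weight_quant concrete, the following sketch runs a small linear layer with per-token dynamic INT8 quantization for activations and per-channel static INT8 quantization for weights. It is a plain-Python illustration under simplifying assumptions (max-abs scaling, lists instead of tensors), and the function names are hypothetical rather than BladeLLM APIs. The point is that the inner accumulation happens entirely on integers and the two scales are applied once per output element.

```python
def quantize_symmetric(values, qmax=127):
    """Signed symmetric quantization of a vector; returns (integers, scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) for v in values], scale


def w8a8_linear(x, w):
    """x: tokens x in_features, w: out_channels x in_features (lists of rows)."""
    # Static: one weight scale per output channel, computed once ahead of time.
    w_q = [quantize_symmetric(row) for row in w]
    out = []
    for token in x:
        # Dynamic: one activation scale per token, computed at run time.
        x_q, x_scale = quantize_symmetric(token)
        row = []
        for w_row, w_scale in w_q:
            acc = sum(a * b for a, b in zip(x_q, w_row))  # integer accumulation
            row.append(acc * x_scale * w_scale)           # rescale to float
        out.append(row)
    return out


if __name__ == "__main__":
    x = [[1.0, -2.0, 0.5], [0.1, 0.2, -0.3]]
    w = [[0.5, 0.25, -1.0], [1.0, 1.0, 1.0]]
    print(w8a8_linear(x, w))
```

The quantized output stays close to the floating-point product for this input; how close it stays on a real model depends on the activation distribution, which is what the calibration-based algorithms address.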

The quantization modes support the following hardware and quantization data types.

| Hardware type | GPU | weight_only_quant INT8 | weight_only_quant INT4 | weight_only_quant FP8 | act_and_weight_quant INT8 | act_and_weight_quant FP8 |
| --- | --- | --- | --- | --- | --- | --- |
| Ampere (SM80/SM86) | GU100/GU30 | Y | Y | N | Y | N |
| Ada Lovelace (SM89) | L20 | N | N | N | Y | Y |
| Hopper (SM90) | GU120/GU108 | N | N | N | Y | Y |

block_wise_quant

Traditionally, model parameters were quantized per channel, with all parameters in an output channel sharing one set of quantization parameters. For weight_only_quant, finer-grained quantization is now common because it reduces quantization loss in LLMs: each output channel is split into smaller blocks, and each block has its own quantization parameters. BladeLLM fixes the block size at 64, so every 64 parameters share one set of quantization parameters.

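Example: Consider a weight matrix $W \in \mathbb{R}^{N \times K}$ with $N$ output channels and $K$ parameters per channel. If per-channel quantization is used, the quantization parameter is $S \in \mathbb{R}^{N \times 1}$, one value per output channel. If block-wise quantization is used, the quantization parameter is $S \in \mathbb{R}^{N \times K/64}$, one value per block of 64 parameters.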

This example shows that block-wise quantization uses finer-grained quantization parameters and therefore, in theory, achieves better quantization accuracy, especially in 4-bit weight-only quantization. The trade-off is a slight reduction in the inference performance of the quantized model.
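The difference between the two granularities can be sketched in a few lines. The example below quantizes one output channel of 128 weights to 4 bits, once with a single scale and once with one scale per block of 64. It assumes max-abs symmetric scaling, and the helper names are illustrative rather than BladeLLM APIs. A single outlier is placed in the first block to show how a shared scale hurts the remaining weights.

```python
BLOCK_SIZE = 64  # block size stated in the text


def symmetric_scale(values, bits=4):
    """Max-abs scale for signed symmetric quantization."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def fake_quantize(values, scale, bits=4):
    """Quantize to integers, then map back to floats to expose the error."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]


def per_channel(row, bits=4):
    scale = symmetric_scale(row, bits)
    return fake_quantize(row, scale, bits), [scale]


def block_wise(row, bits=4, block_size=BLOCK_SIZE):
    out, scales = [], []
    for start in range(0, len(row), block_size):
        block = row[start:start + block_size]
        scale = symmetric_scale(block, bits)
        scales.append(scale)
        out.extend(fake_quantize(block, scale, bits))
    return out, scales


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    # One output channel with K = 128 small weights and one outlier in block 0.
    row = [0.01 * ((i % 7) - 3) for i in range(128)]
    row[5] = 1.0
    pc, pc_scales = per_channel(row)
    bw, bw_scales = block_wise(row)
    print("scales:", len(pc_scales), "vs", len(bw_scales))
    print("mean abs error:", mean_abs_error(row, pc), "vs", mean_abs_error(row, bw))
```

With per-channel quantization the outlier stretches the single scale, so every small weight rounds to zero. With block-wise quantization only the block containing the outlier is affected, at the cost of storing one extra scale per 64 weights.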

Quantization algorithms

BladeLLM provides several quantization algorithms that can be specified by using the quant_algo parameter.

MinMax

MinMax is a simple quantization algorithm that uses round-to-nearest (RTN).

This algorithm is suitable for both weight_only_quant and act_and_weight_quant. It does not require calibration data, and the quantization process is fast.
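A minimal sketch of what a MinMax-style quantizer does is shown below, assuming signed symmetric quantization with a max-abs scale (the default described for both quantization modes). The function names are illustrative and do not correspond to BladeLLM internals.

```python
def minmax_quantize(weights, bits=8):
    """Signed symmetric round-to-nearest quantization of one channel."""
    qmax = 2 ** (bits - 1) - 1                 # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # round() rounds to the nearest integer (ties go to the even integer).
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to floating point."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.5, -2.0, 0.25, 1.0]
    for bits in (8, 4):
        q, scale = minmax_quantize(weights, bits)
        print(bits, q, dequantize(q, scale))
```

No calibration data is involved: the scale depends only on the weights themselves, which is why this algorithm is fast. The rounding error of each weight is at most half a quantization step.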

GPTQ

GPTQ is a weight-only quantization algorithm that uses approximate second-order information to fine-tune quantization, preserving accuracy while remaining relatively efficient. It quantizes the parameters in each channel (or block) one by one. After each step, it uses the inverse Hessian matrix derived from activation values to adjust the parameters in that channel (or block) that are not yet quantized, which compensates for the accuracy loss caused by quantization.

This algorithm supports block-wise quantization, requires a certain amount of calibration data, and in most cases has better quantization accuracy than the MinMax algorithm.
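The quantize-then-compensate loop can be illustrated on a single weight row. The sketch below is a simplified, unoptimized rendering of the idea under stated assumptions: the Hessian is taken as proportional to the sum of outer products of the calibration inputs plus a small damping term, the quantization scale is passed in, and the inverse is computed by plain Gauss-Jordan elimination. Production implementations work on whole matrices with Cholesky factorization, and all names here are hypothetical.

```python
def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting for a small square matrix."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def gptq_quantize_row(weights, calib, scale, qmax=7, damp=0.01):
    """Quantize one weight row to integers with error compensation."""
    k = len(weights)
    # Hessian proxy: X^T X over the calibration inputs, plus damping.
    h = [[sum(x[i] * x[j] for x in calib) for j in range(k)] for i in range(k)]
    lam = damp * sum(h[i][i] for i in range(k)) / k
    for i in range(k):
        h[i][i] += lam
    h_inv = invert(h)
    w = list(weights)
    q = []
    for i in range(k):
        qi = max(-qmax, min(qmax, round(w[i] / scale)))
        q.append(qi)
        # Spread the rounding error of weight i over the weights not yet quantized.
        err = (w[i] - qi * scale) / h_inv[i][i]
        for j in range(i + 1, k):
            w[j] -= err * h_inv[i][j]
        # Remove index i from the inverse Hessian of the remaining weights.
        for j in range(i + 1, k):
            for m in range(i + 1, k):
                h_inv[j][m] -= h_inv[j][i] * h_inv[i][m] / h_inv[i][i]
    return q


if __name__ == "__main__":
    # Two weights that always see the same input: their errors can cancel.
    print(gptq_quantize_row([1.4, 2.4], calib=[[1.0, 1.0]], scale=1.0))
```

For the input `[1.0, 1.0]` the original output is 3.8. Plain rounding gives the weights `[1, 2]` and an output of 3, whereas the compensated result `[1, 3]` gives 4, which is closer. When the calibration inputs are uncorrelated, the off-diagonal terms vanish and the procedure reduces to round-to-nearest.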

AWQ

AWQ is an activation-aware weight-only quantization algorithm. It is based on the observation that parameters differ in importance: a small fraction (0.1% to 1%) of salient weights matter most, and leaving them unquantized greatly reduces quantization loss. Experiments show that the parameter channels with larger activation values are the more important ones, so salient channels are selected based on the distribution of activation values. In practice, rather than leaving these weights unquantized, AWQ multiplies them by a relatively large scaling factor before quantization to reduce their quantization loss.

This algorithm supports block-wise quantization, requires a certain amount of calibration data, and in some cases has better accuracy than GPTQ, but takes longer for quantization calibration. For example, when calibrating Qwen-72B on 4 V100-32G GPUs, gptq takes about 25 minutes, while awq takes about 100 minutes.
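The scaling idea can be sketched on one quantization group. In the example below, each input channel of the weight is multiplied by a factor derived from the activation magnitude raised to a power alpha, the activation is divided by the same factor so that the product is unchanged, and a small grid search picks the alpha with the lowest output error on the calibration samples. This is a simplified illustration with hypothetical names; it omits details of the published algorithm such as scale normalization and clipping search.

```python
def fake_quantize(weights, bits=4):
    """Max-abs symmetric quantization followed by dequantization."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]


def output_error(weights, samples, factors, bits=4):
    """Mean |x.w - (x/s).Q(w*s)| over the calibration samples."""
    deq = fake_quantize([w * s for w, s in zip(weights, factors)], bits)
    total = 0.0
    for x in samples:
        exact = sum(xi * wi for xi, wi in zip(x, weights))
        approx = sum((xi / si) * qi for xi, si, qi in zip(x, factors, deq))
        total += abs(exact - approx)
    return total / len(samples)


def search_scaling(weights, samples, bits=4, grid=11):
    """Grid-search alpha in [0, 1]; alpha = 0 means no scaling at all."""
    n = len(weights)
    act_mag = [max(sum(abs(x[j]) for x in samples) / len(samples), 1e-8)
               for j in range(n)]
    best = None
    for step in range(grid):
        alpha = step / (grid - 1)
        factors = [m ** alpha for m in act_mag]
        err = output_error(weights, samples, factors, bits)
        if best is None or err < best[0]:
            best = (err, alpha, factors)
    return best


if __name__ == "__main__":
    weights = [0.30, 0.012, -0.25, 0.08]   # channel 1 holds a small weight...
    samples = [[0.1, 10.0, 0.2, 0.1]]      # ...but sees a large activation
    baseline = output_error(weights, samples, [1.0] * 4)
    err, alpha, _ = search_scaling(weights, samples)
    print("no scaling:", baseline, "best alpha:", alpha, "error:", err)
```

Without scaling, the small weight in the salient channel rounds to zero and its large activation turns that into a large output error. Scaling it up before quantization keeps it on the grid. The search over alpha on calibration data is one reason this family of methods takes longer than GPTQ-style calibration.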

SmoothQuant

SmoothQuant is a post-training quantization algorithm designed to improve W8A8 quantization of LLMs, and is a typical act-and-weight quantization approach. It is generally accepted that activation values are harder to quantize than model weights, and that outliers are the main difficulty in quantizing activation values. SmoothQuant builds on the observation that activation outliers in LLMs tend to appear in a fixed set of channels regardless of the token. Based on this, SmoothQuant applies a mathematically equivalent transformation that shifts quantization difficulty from activation values to weights, which smooths the outliers in activations.

This algorithm requires a certain amount of calibration data, and in most cases has better quantization accuracy than the MinMax algorithm. Currently, it does not support block-wise quantization.
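The equivalent transformation can be written down directly. The sketch below follows the per-channel smoothing factor from the SmoothQuant paper, $s_j = \max|X_j|^{\alpha} / \max|W_j|^{1-\alpha}$, divides the activations by $s_j$ and multiplies the matching weight column by $s_j$. The function names and the default alpha of 0.5 are assumptions for illustration, not BladeLLM settings.

```python
def smoothing_factors(x, w, alpha=0.5):
    """x: tokens x in_features, w: out_channels x in_features."""
    factors = []
    for j in range(len(x[0])):
        act_max = max(abs(tok[j]) for tok in x)   # from calibration activations
        w_max = max(abs(row[j]) for row in w)
        factors.append(max(act_max, 1e-8) ** alpha
                       / max(w_max, 1e-8) ** (1 - alpha))
    return factors


def smooth(x, w, factors):
    """Divide activations and multiply weights by the per-channel factors."""
    x_s = [[v / s for v, s in zip(tok, factors)] for tok in x]
    w_s = [[v * s for v, s in zip(row, factors)] for row in w]
    return x_s, w_s


def matmul(x, w):
    """Compute x @ w^T for lists of rows."""
    return [[sum(a * b for a, b in zip(tok, row)) for row in w] for tok in x]


if __name__ == "__main__":
    # Input channel 1 carries an outlier in every token.
    x = [[0.5, 40.0, -0.2], [-0.4, 36.0, 0.3]]
    w = [[0.2, 0.1, -0.3], [-0.1, 0.05, 0.4]]
    factors = smoothing_factors(x, w)
    x_s, w_s = smooth(x, w, factors)
    print("max |activation| before:", max(abs(v) for tok in x for v in tok))
    print("max |activation| after: ", max(abs(v) for tok in x_s for v in tok))
    print(matmul(x, w))
    print(matmul(x_s, w_s))
```

The two products agree up to floating-point rounding, while the activation range shrinks considerably. After smoothing, both the activations and the weights can be quantized with a standard W8A8 scheme.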

SmoothQuant+

SmoothQuant+ is a weight-only quantization algorithm that reduces quantization loss by smoothing activation outliers. It is based on the observation that activation outliers amplify the quantization error of model weights. SmoothQuant+ therefore first smooths activation outliers along the channel dimension and adjusts the corresponding weights to keep the computation equivalent, and then performs standard weight-only quantization.

This algorithm requires a certain amount of calibration data and supports block-wise quantization.

SmoothQuant-GPTQ

SmoothQuant-GPTQ involves applying the GPTQ algorithm to quantize model parameters following activation outlier smoothing through SmoothQuant principles. This hybrid approach effectively combines the respective advantages of both SmoothQuant and GPTQ methodologies.

The following table describes the basic support for various quantization algorithms.

| Quantization algorithm | weight_only_quant INT8 | weight_only_quant INT4 | weight_only_quant FP8 | act_and_weight_quant INT8 | act_and_weight_quant FP8 | Calibration data dependency |
| --- | --- | --- | --- | --- | --- | --- |
| minmax | Y | Y | N | Y | Y | N |
| gptq | Y | Y | N | N | N | Y |
| awq | Y | Y | N | N | N | Y |
| smoothquant | N | N | N | Y | Y | Y |
| smoothquant+ | Y | Y | N | N | N | Y |
| smoothquant_gptq | N | N | N | Y | Y | Y |

weight_only_quant supports block-wise quantization. act_and_weight_quant does not support block-wise quantization.
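For scripting, the support table above can be encoded as a small lookup. The sketch below copies the Y/N entries from the table into a dictionary and lists the algorithms available for a given quantization mode and data type. The structure and function name are illustrative only and are not part of the BladeLLM toolkit.

```python
# Support matrix transcribed from the table: algorithm -> supported data types
# per quantization mode, and whether calibration data is required.
SUPPORT = {
    "minmax": {
        "modes": {"weight_only_quant": {"int8", "int4"},
                  "act_and_weight_quant": {"int8", "fp8"}},
        "needs_calibration": False,
    },
    "gptq": {
        "modes": {"weight_only_quant": {"int8", "int4"}},
        "needs_calibration": True,
    },
    "awq": {
        "modes": {"weight_only_quant": {"int8", "int4"}},
        "needs_calibration": True,
    },
    "smoothquant": {
        "modes": {"act_and_weight_quant": {"int8", "fp8"}},
        "needs_calibration": True,
    },
    "smoothquant+": {
        "modes": {"weight_only_quant": {"int8", "int4"}},
        "needs_calibration": True,
    },
    "smoothquant_gptq": {
        "modes": {"act_and_weight_quant": {"int8", "fp8"}},
        "needs_calibration": True,
    },
}


def candidate_algorithms(quant_mode, dtype):
    """Return the algorithms that support the given mode and data type."""
    return [name for name, info in SUPPORT.items()
            if dtype in info["modes"].get(quant_mode, set())]


if __name__ == "__main__":
    print(candidate_algorithms("weight_only_quant", "int4"))
    print(candidate_algorithms("act_and_weight_quant", "fp8"))
```

Hardware support is a separate constraint: a combination listed here still needs a GPU architecture that supports the chosen mode and data type.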

Algorithm selection recommendations:

For rapid experimentation, we recommend the MinMax algorithm, which requires no calibration data and quantizes quickly.

If the MinMax quantization accuracy does not meet your requirements:

For weight-only quantization, you can further try gptq, awq, or smoothquant+.

For act_and_weight quantization, you can further try smoothquant or smoothquant_gptq.

Of these, AWQ and SmoothQuant+ take longer to quantize than GPTQ, but may lose less accuracy in some scenarios. GPTQ, AWQ, SmoothQuant, SmoothQuant+, and SmoothQuant-GPTQ all require calibration data.

To enhance quantization accuracy preservation, we recommend enabling block-wise quantization. While block-wise quantization may introduce minor performance reductions in quantized models, it typically delivers substantial quantization accuracy improvements in 4-bit weight-only quantization contexts.

Should the aforementioned quantization accuracy prove insufficient, consider enabling automatic mixed precision quantization by configuring the fallback_ratio parameter to designate the proportion of layers reverting to floating-point computation. This mechanism automatically evaluates quantization sensitivity across all layers and selectively reverts specified computational layers to enhance overall quantization accuracy.
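The selection step behind a fallback ratio can be pictured as ranking layers by sensitivity and keeping the most sensitive fraction in floating point. The sketch below is only a conceptual illustration: how BladeLLM measures sensitivity and how it rounds the layer count are not described here, so the sensitivity scores, the truncating rounding, and the function name are all assumptions.

```python
def select_fallback_layers(sensitivity, fallback_ratio):
    """Pick the most sensitive layers to keep in floating point.

    sensitivity: dict mapping layer name -> score (higher = more sensitive).
    fallback_ratio: fraction of layers to fall back, between 0 and 1.
    """
    count = int(len(sensitivity) * fallback_ratio)  # assumed rounding: truncate
    ranked = sorted(sensitivity, key=sensitivity.get, reverse=True)
    return set(ranked[:count])


if __name__ == "__main__":
    # Hypothetical per-layer scores, e.g. output error when only that layer
    # is quantized.
    scores = {"layer0": 0.10, "layer1": 0.90, "layer2": 0.30, "layer3": 0.05}
    print(select_fallback_layers(scores, 0.25))  # {'layer1'}
    print(select_fallback_layers(scores, 0.0))   # set()
```

A larger ratio recovers more accuracy but gives up more of the memory and speed benefit, so it is usually worth starting with a small value.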