This topic describes how to use BladeLLM, the inference engine developed by Platform for AI (PAI), to deploy a large language model (LLM) inference service with low latency and high throughput.

Prerequisites

PAI is activated and a default workspace is created. For more information, see Activate PAI and create a default workspace.

If you want to deploy a custom model, make sure the following prerequisites are met:

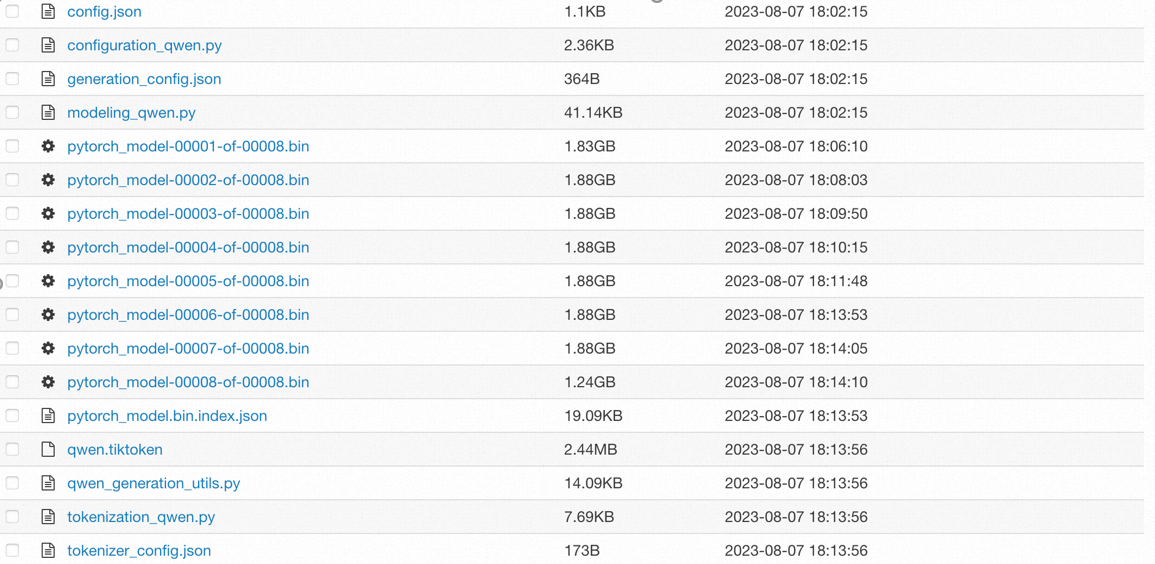

Model files and related configuration files are prepared. The following figure shows sample model files.

Note: Only Qwen and Llama text models can be deployed, and the model structure cannot be changed.

The model files must include a config.json configuration file that follows the Hugging Face model format. For a sample file, see config.json.

An Object Storage Service (OSS) bucket is created and custom model files are uploaded. For more information, see Get started with the OSS console.
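Before you upload custom model files to OSS, you may want to confirm that the local model directory contains config.json and that its model type looks like a Qwen or Llama model. The following Python sketch is a hypothetical helper (inspect_model_dir is not a PAI tool). It reads the standard Hugging Face model_type and architectures fields, and the Qwen/Llama check is only a prefix heuristic: it cannot, for example, tell a text model from other variants of the same family.

```python
import json
from pathlib import Path

# Heuristic only: derived from the "Qwen and Llama text models" limitation.
SUPPORTED_PREFIXES = ("qwen", "llama")


def inspect_model_dir(model_dir: str) -> dict:
    """Read config.json from a local model directory and report basic fields."""
    config_path = Path(model_dir) / "config.json"
    if not config_path.is_file():
        raise FileNotFoundError(f"{config_path} not found; config.json is required")
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    model_type = str(config.get("model_type", "")).lower()
    return {
        "model_type": model_type,
        "architectures": config.get("architectures", []),
        # str.startswith accepts a tuple of prefixes.
        "looks_supported": model_type.startswith(SUPPORTED_PREFIXES),
    }


if __name__ == "__main__":
    import sys

    if len(sys.argv) != 2:
        print("usage: python check_model.py <local_model_dir>")
    else:
        print(inspect_model_dir(sys.argv[1]))
```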

Limitations

The following table describes the limitations that apply when you use the BladeLLM engine to deploy services.

| Type | Description |
| --- | --- |
| Model limit | Only Qwen and Llama text models can be deployed, including the open source, fine-tuned, and quantized versions. |
| Resource limit | |

Deploy a service

PAI provides three methods for deploying a service with the BladeLLM engine. Scenario-based model deployment lets you quickly deploy a preset public model or your own fine-tuned model with a few clicks. Custom deployment and JSON deployment are available if you have special configuration requirements.

Scenario-based model deployment (recommended)

Log on to the PAI console. In the top navigation bar, select the desired region. On the page that appears, select the desired workspace and click Enter Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Scenario-based Model Deployment section of the Deploy Service page, click LLM Deployment.

On the LLM Deployment page, configure the parameters described in the following table.

Parameter

Description

Example

Basic Information

Service Name

Specify a name for the service.

blade_llm_qwen_server

Inference Engine

If you set this parameter to BladeLLM, the self-developed BladeLLM engine of PAI is used for quick deployment.

High-performance Deployment

Image Version

Select the BladeLLM engine version.

blade-llm:0.9.0

Model Settings

The source of the model. Valid values:

Public Model: PAI provides various common models. Click the icon in the drop-down list to view the details of each model.

Custom Model: If the public models do not meet your business requirements, you can select a fine-tuned model. The following mount methods are supported: OSS, Standard File Storage NAS (NAS), Extreme NAS, Cloud Parallel File System (CPFS) for Lingjun, and PAI Model.

Resource Information

Resource Type

You can use the following resource types:

Public Resources: suitable for scenarios that involve light workloads and low timeliness requirements.

EAS Resource Group: suitable for scenarios that require resource isolation or have high security requirements. For information about how to purchase Elastic Algorithm Service (EAS) resource groups, see Use EAS resource groups.

Resource Quota: suitable for scenarios that require powerful computing and efficient data processing capabilities. For information about how to prepare a resource quota, see Create resource quotas.

Public Resources

Deployment Resources

Select an instance type for deploying the service. For more information, see Limitations.

ecs.gn7i-c16g1.4xlarge

Features

Shared Memory

Shared memory lets processes in the instance read and write the same memory region directly, without copying or transferring data between them. By default, the shared memory is set to 64 GB if you select an instance type that has multiple GPUs, and to 0 otherwise. You can also modify the shared memory size manually.

Default settings

Advanced Settings

Tensor Parallelism

BladeLLM supports distributed inference across multiple GPUs. In most cases, you can set this parameter to the number of GPUs in the instance type.

1

Port Number

The local HTTP port on which the model service listens after the image is started.

Note: You cannot use port 8080 because EAS listens on port 8080.

8001

Maximum GPU Memory Usage

The fraction of the remaining GPU memory (total GPU memory minus the memory occupied by the model) that is allocated to the KV cache. Default value: 0.85. If out-of-memory errors occur, decrease the value. If sufficient memory is available, you can increase the value.

0.85

Chunk Size for Prefill

A built-in BladeLLM optimization that splits the input into multiple chunks during the prefill phase, which improves the utilization of computing resources and reduces latency. The chunk size is the number of input tokens that are fed to the model at a time. Unit: tokens.

2048

Speculative Decoding

Specifies whether to enable speculative decoding, an acceleration strategy that uses a smaller, faster draft model to speed up inference of the target model. In each step, the draft model proposes several future tokens, and the target model verifies the proposed tokens in parallel, keeps the accepted ones, and discards the rest. This accelerates token generation without compromising accuracy.

Important: Speculative decoding deploys an additional draft model and therefore requires an instance type with more GPU memory. If you enable this feature, recalculate the required memory based on the number of parameters of both the target model and the draft model.

Draft Model: a small-scale model whose architecture is similar to that of the target model. You can use a built-in public model in PAI or a model that you fine-tuned for a specific task or dataset.

Speculation Step Size: the number of tokens that the draft model proposes in each step. Default value: 4. The target model then verifies these tokens.

Disabled

Other Parameters

Add Option Parameter: an option parameter specifies a configuration through its value. Enter both a parameter name and a value.

Add Flag Parameter: a flag parameter is a Boolean switch that enables or disables a feature. Enter only a parameter name.

None

Command Preview

The startup command that is automatically generated from the image and parameter configuration. By default, the command cannot be edited. To modify it, click Switch to Free Edit Mode.

Default command, used without modification

After you configure the parameters, click Deploy.
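The Maximum GPU Memory Usage parameter can be turned into a rough capacity estimate. The following Python sketch assumes the definition given in the parameter table (KV cache memory = ratio × (total GPU memory − model memory)) and the common transformer estimate of 2 × layers × KV heads × head dimension × bytes per element for the keys and values of one token. The function names and model dimensions are hypothetical illustrations, not BladeLLM internals; a real engine also needs memory for activations and runtime buffers, so treat the result as an approximation.

```python
GIB = 1024 ** 3


def kv_cache_budget_bytes(total_gpu_bytes: int, model_bytes: int, ratio: float = 0.85) -> int:
    """Bytes reserved for the KV cache: ratio * (total GPU memory - model memory)."""
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must be in (0, 1]")
    remaining = total_gpu_bytes - model_bytes
    if remaining <= 0:
        raise ValueError("the model weights do not fit in GPU memory")
    return int(ratio * remaining)


def kv_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int, dtype_bytes: int = 2) -> int:
    """One key and one value vector (factor 2) per layer and KV head; FP16 by default."""
    return 2 * num_layers * num_kv_heads * head_dim * dtype_bytes


def max_cached_tokens(total_gpu_bytes: int, model_bytes: int, ratio: float,
                      num_layers: int, num_kv_heads: int, head_dim: int,
                      dtype_bytes: int = 2) -> int:
    """Approximate number of tokens whose keys and values fit in the KV cache."""
    budget = kv_cache_budget_bytes(total_gpu_bytes, model_bytes, ratio)
    return budget // kv_bytes_per_token(num_layers, num_kv_heads, head_dim, dtype_bytes)


if __name__ == "__main__":
    # Hypothetical numbers: a 24 GiB GPU, 15 GiB of model weights, and a
    # 32-layer model with 32 KV heads of dimension 128 stored in FP16.
    tokens = max_cached_tokens(24 * GIB, 15 * GIB, 0.85, 32, 32, 128)
    print(f"KV cache budget: {kv_cache_budget_bytes(24 * GIB, 15 * GIB) / GIB:.2f} GiB")
    print(f"Tokens that fit: {tokens}")  # 15667
```

Dividing the token count by the context length you expect per request gives a rough idea of how many such requests can keep their KV cache on the GPU at the same time.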
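To illustrate what Chunk Size for Prefill controls, the following Python sketch splits a prompt's token IDs into consecutive chunks of at most the configured size (2048 in the example in the parameter table). It only shows the partitioning. How BladeLLM schedules the chunks internally is not described in this topic and is not modeled here, and split_prefill is a hypothetical name.

```python
from typing import List, Sequence


def split_prefill(token_ids: Sequence[int], chunk_size: int = 2048) -> List[List[int]]:
    """Split prompt token IDs into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [list(token_ids[i:i + chunk_size]) for i in range(0, len(token_ids), chunk_size)]


if __name__ == "__main__":
    prompt = list(range(5000))  # a hypothetical 5,000-token prompt
    chunks = split_prefill(prompt, chunk_size=2048)
    print([len(c) for c in chunks])  # [2048, 2048, 904]
```

A smaller chunk size produces more, shorter prefill steps for the same prompt; a larger one produces fewer, longer steps.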
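The draft-then-verify loop behind Speculative Decoding can be sketched in its simplest greedy form. In the following Python sketch, the draft and destination (target) "models" are hypothetical toy functions that map a token sequence to the next token, and step_size plays the role of Speculation Step Size. A real engine scores all proposed positions in one forward pass of the target model and uses probabilistic acceptance when sampling; neither is modeled here. Because every kept token is one the target model would have produced itself, the output equals plain greedy decoding with the target model.

```python
from typing import Callable, List

# A "model" here is any function that returns the next token for a token sequence.
NextToken = Callable[[List[int]], int]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       max_new_tokens: int, step_size: int = 4) -> List[int]:
    """Greedy draft-then-verify decoding; the result equals greedy decoding with target."""
    tokens = list(prompt)
    while len(tokens) - len(prompt) < max_new_tokens:
        # Draft phase: the small model proposes step_size tokens one by one.
        proposal: List[int] = []
        for _ in range(step_size):
            proposal.append(draft(tokens + proposal))
        # Verify phase: the target model checks every proposed position.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)  # the target's own token is always kept
            if tok != expected:
                break  # discard the remaining draft tokens after the first mismatch
        else:
            # Every draft token was accepted, so the target adds one bonus token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new_tokens]


if __name__ == "__main__":
    # Hypothetical toy models over the digits 0-9.
    def toy_target(seq: List[int]) -> int:
        return (seq[-1] * 3 + 1) % 10

    def toy_draft(seq: List[int]) -> int:
        # Agrees with the target except after tokens divisible by 4.
        return toy_target(seq) if seq[-1] % 4 else 0

    print(speculative_decode(toy_target, toy_draft, [1], max_new_tokens=8))  # [1, 4, 3, 0, 1, 4, 3, 0, 1]
```

The more often the draft model agrees with the target model, the more tokens are accepted per verification step, which is where the speedup comes from.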

Custom deployment

Log on to the PAI console. In the top navigation bar, select the desired region. On the page that appears, select the desired workspace and click Enter Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Custom Model Deployment section of the Deploy Service page, click Custom Deployment.

On the Custom Deployment page, configure the parameters. The following table describes the parameters. For information about other parameters, see Custom deployment.

Parameter

Description

Basic Information

Service Name

Specify a name for the service.

Environment Information

Deployment Method

Set this parameter to Image-based Deployment.

Image Configuration

Select the BladeLLM image (blade-llm) and a valid image version.

Mount storage

Click OSS to mount the fine-tuned model files and configure the following parameters:

OSS: Select the OSS directory in which the model files are stored. Example: oss://examplebucket/bloom_7b.

Mount Path: the path in the service instance to which the model files are mounted. Example: /mnt/model/bloom_7b.

Command

Set this parameter to the following command: blade_llm_server --port 8081 --model /mnt/model/bloom_7b/

Port Number

Set this parameter to 8081. The value must match the port specified in the command.

Resource Information

Deployment Resources

Select an instance type for deploying the service. For more information, see Limitations.

Shared Memory

When using multiple GPUs for inference, you must configure the shared memory size. By default, when you select an instance type with multiple GPUs, the shared memory is set to 64 GB. Otherwise, it is set to 0. You can also manually adjust the shared memory size.

After configuring the parameters, click Deploy.

JSON deployment

Log on to the PAI console. In the top navigation bar, select the desired region. On the page that appears, select the desired workspace and click Enter Elastic Algorithm Service (EAS).

On the Elastic Algorithm Service (EAS) page, click Deploy Service. In the Custom Model Deployment section of the Deploy Service page, click JSON Deployment.

On the JSON Deployment page, specify the following content in the JSON editor and click Deploy:

```json
{
  "name": "blade_llm_bloom_server",
  "containers": [
    {
      "image": "eas-registry-vpc.<region_id>.cr.aliyuncs.com/pai-eas/blade-llm:0.8.0",
      "command": "blade_llm_server --port 8081 --model /mnt/model/bloom_7b/",
      "port": 8081
    }
  ],
  "storage": [
    {
      "mount_path": "/mnt/model/bloom_7b/",
      "oss": {
        "endpoint": "oss-<region_id>-internal.aliyuncs.com",
        "path": "oss://examplebucket/bloom_7b/"
      }
    },
    {
      "empty_dir": {
        "medium": "memory",
        "size_limit": 64
      },
      "mount_path": "/dev/shm"
    }
  ],
  "metadata": {
    "instance": 1,
    "memory": 64000,
    "cpu": 16,
    "gpu": 1,
    "resource": "eas-r-xxxxxx"
  }
}
```

The fields in this configuration are used as follows:

name: the name of the service.

image: use an image URL that contains the -vpc suffix. In the URL, <region_id> indicates the region ID, such as cn-hangzhou. Specify a valid image version.

port: must match the value of the --port parameter in command.

storage: the first entry mounts the OSS directory that contains the model files (path) to mount_path. The second entry configures shared memory at /dev/shm, which you must configure when you use multiple GPUs for inference.

metadata: memory is specified in MB, and resource is the ID of the EAS resource group.
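If you generate or edit the JSON configuration with a script, you can check a few consistency rules from this topic before you paste it into the editor: port must equal the --port value in command, port 8080 is used by EAS, the --model path should lie inside an OSS mount_path, and multi-GPU services need shared memory at /dev/shm. The following Python sketch is a hypothetical helper, not part of any PAI SDK. It uses only the fields shown in the sample configuration and only understands the "--option value" form of command-line options.

```python
import json
import shlex
from typing import List


def check_config(config: dict) -> List[str]:
    """Return consistency problems in an EAS JSON service config (empty list if none)."""
    problems: List[str] = []
    storage = config.get("storage", [])
    oss_mounts = [s["mount_path"].rstrip("/") for s in storage if "oss" in s]
    has_shm = any(s.get("mount_path") == "/dev/shm" for s in storage)
    for container in config.get("containers", []):
        args = shlex.split(container.get("command", ""))
        # Map every "--option value" pair in the command to its value.
        opts = {args[i]: args[i + 1] for i in range(len(args) - 1) if args[i].startswith("--")}
        port = container.get("port")
        if opts.get("--port") != str(port):
            problems.append(f"port {port} does not match --port {opts.get('--port')}")
        if port == 8080:
            problems.append("port 8080 is used by EAS itself")
        model = opts.get("--model", "").rstrip("/")
        if not any(model == m or model.startswith(m + "/") for m in oss_mounts):
            problems.append(f"--model {model or '(missing)'} is not inside an OSS mount_path")
    if config.get("metadata", {}).get("gpu", 1) > 1 and not has_shm:
        problems.append("multiple GPUs requested but no shared memory mounted at /dev/shm")
    return problems


if __name__ == "__main__":
    sample = {
        "name": "blade_llm_bloom_server",
        "containers": [{
            "image": "eas-registry-vpc.<region_id>.cr.aliyuncs.com/pai-eas/blade-llm:0.8.0",
            "command": "blade_llm_server --port 8081 --model /mnt/model/bloom_7b/",
            "port": 8081,
        }],
        "storage": [
            {"mount_path": "/mnt/model/bloom_7b/",
             "oss": {"endpoint": "oss-<region_id>-internal.aliyuncs.com",
                     "path": "oss://examplebucket/bloom_7b/"}},
            {"empty_dir": {"medium": "memory", "size_limit": 64}, "mount_path": "/dev/shm"},
        ],
        "metadata": {"instance": 1, "memory": 64000, "cpu": 16, "gpu": 1,
                     "resource": "eas-r-xxxxxx"},
    }
    print(check_config(sample) or "no problems found")
    print(json.dumps(sample, indent=2))  # valid JSON that can be pasted into the editor
```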

Call a service

After the service is deployed and enters the Running state, you can call it to perform model inference.

View the access address and token of the service.

On the Elastic Algorithm Service (EAS) page, find the desired service and click Invocation Method in the Service Type column.

In the Invocation Method dialog box, you can view the access address and token of the service.

Run the following command in your terminal to call the service and receive generated text in streaming mode:

```bash
# Call the EAS service and receive the generated text in streaming mode.
curl -X POST \
  -H "Content-Type: application/json" \
  -H "Authorization: AUTH_TOKEN_FOR_EAS" \
  -d '{"prompt":"What is the capital of Canada?", "stream":"true"}' \
  "<service_url>/v1/completions"
```

Replace the following placeholders:

AUTH_TOKEN_FOR_EAS: the token of the service.

<service_url>: the access address of the service.

Sample response:

```text
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" The"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":1,"total_tokens":8},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" capital"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":2,"total_tokens":9},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" of"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":3,"total_tokens":10},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" Canada"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":4,"total_tokens":11},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" is"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":5,"total_tokens":12},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":" Ottawa"}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":6,"total_tokens":13},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"","index":0,"logprobs":null,"text":"."}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":7,"total_tokens":14},"error_info":null}
data: {"id":"91f9a28a-f949-40fb-b720-08ceeeb2****","choices":[{"finish_reason":"stop","index":0,"logprobs":null,"text":""}],"object":"text_completion","usage":{"prompt_tokens":7,"completion_tokens":8,"total_tokens":15},"error_info":null}
data: [DONE]
```
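To consume the stream from code instead of curl, you can read the response line by line and decode each data: event. The following Python sketch uses only the standard library and assumes the request body and event format shown in this section: data: lines that carry JSON with choices[].text, followed by a final data: [DONE] sentinel. The function names are hypothetical, and the service URL and token are the placeholders from the Invocation Method dialog box.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text fragment of each data: event until the [DONE] sentinel."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines between events
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            return
        event = json.loads(payload)
        for choice in event.get("choices", []):
            yield choice.get("text", "")


def stream_completion(service_url: str, token: str, prompt: str) -> Iterator[str]:
    """Send the same request as the curl example and yield text fragments as they arrive."""
    body = json.dumps({"prompt": prompt, "stream": "true"}).encode("utf-8")
    request = urllib.request.Request(
        service_url.rstrip("/") + "/v1/completions",
        data=body,
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The response is a byte stream; iterating over it yields raw lines.
        yield from iter_stream_text(line.decode("utf-8") for line in response)


if __name__ == "__main__":
    # Offline demo on a shortened copy of the sample response.
    sample = [
        'data: {"choices":[{"index":0,"text":" The"}]}',
        '',
        'data: {"choices":[{"index":0,"text":" capital"}]}',
        'data: {"choices":[{"finish_reason":"stop","index":0,"text":""}]}',
        'data: [DONE]',
    ]
    print("".join(iter_stream_text(sample)))
```

To call a running service, iterate over stream_completion("<service_url>", "AUTH_TOKEN_FOR_EAS", "What is the capital of Canada?") with the placeholders replaced, and print each fragment as it arrives.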