ESS(EMR Remote Shuffle Service)是E-MapReduce(简称EMR)在优化计算引擎的Shuffle操作上,推出的扩展组件。

背景信息

目前Shuffle方案缺点如下:

Shuffle Write在大数据量场景下会溢出,导致写放大。

Shuffle Read过程中有大量的网络小包导致Connection reset问题。

Shuffle Read过程中存在大量小数据量的IO请求和随机读,对磁盘和CPU造成高负载。

对于M*N次的连接数,在M和N数千的规模下,作业基本无法完成。

NodeManager和Spark Shuffle Service是同一进程,当Shuffle的数据量特别大时,通常会导致NodeManager重启,从而影响YARN调度的稳定性。

EMR推出的基于Shuffle的ESS服务,可以优化目前Shuffle方案的问题。ESS优势如下:

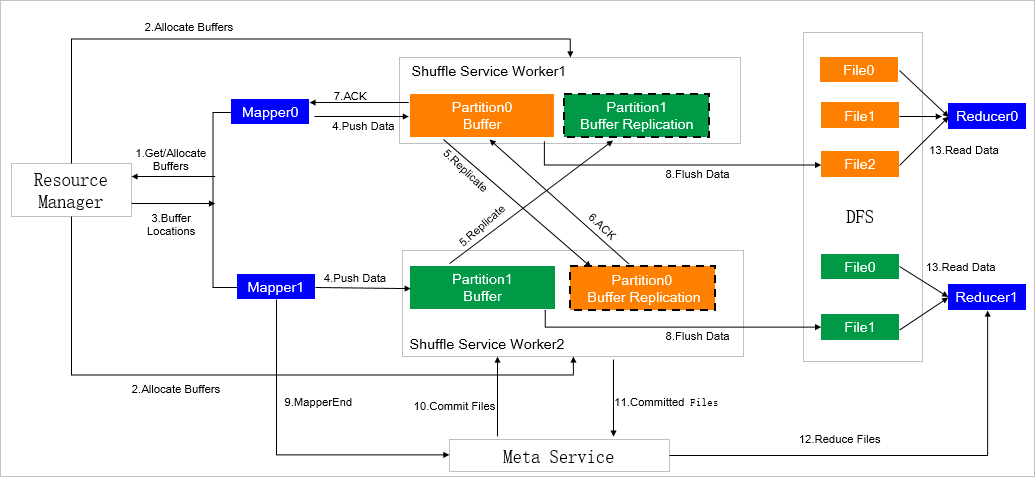

使用Push-Style Shuffle代替Pull-Style,减少Mapper的内存压力。

支持IO聚合,Shuffle Read的连接数从M*N降到N,同时更改随机读为顺序读。

支持两副本机制,降低Fetch Fail概率。

支持计算与存储分离架构,可以部署Shuffle Service至特殊硬件环境中,与计算集群分离。

解决Spark on Kubernetes时对本地磁盘的依赖。

ESS设计架构图如下。

使用限制

此文档仅适用于EMR-3.39.1之前、EMR-4.x系列版本和EMR-5.5.0之前版本。EMR-3.39.1及之后版本,EMR-5.5.0及之后版本,详情请参见RSS。

创建集群

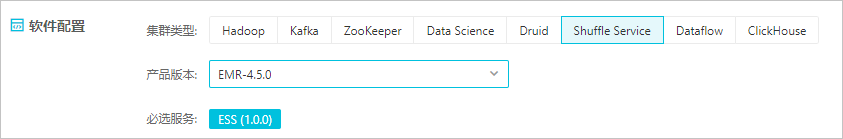

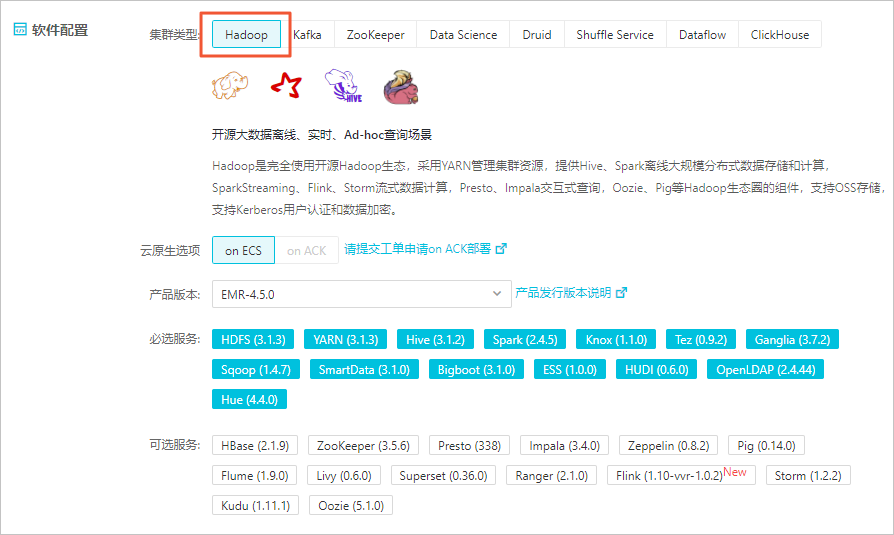

以EMR-4.5.0版本为例,您可以通过以下两种方式创建ESS的集群:

创建E-MapReduce的Shuffle Service集群。

创建E-MapReduce的Hadoop集群。

集群创建详情请参见创建集群。

使用ESS

Spark使用ESS时,需在提交Spark作业时添加以下配置项,配置详情请参见作业编辑。

Spark相关的参数,请参见Spark Configuration。

参数 | 描述 |

spark.shuffle.manager | 固定值org.apache.spark.shuffle.ess.EssShuffleManager。 |

spark.ess.master.address | 填写格式<ess-master-ip>:<ess-master-port>。 涉及参数如下:

|

spark.shuffle.service.enabled | 设置为false。 使用EMR的Remote Shuffle Service时需要关闭原有的External Shuffle Service。 |

spark.shuffle.useOldFetchProtocol | 设置为true。 兼容旧的Shuffle协议。 |

spark.sql.adaptive.enabled | 设置为false。 EMR的Remote Shuffle Service暂不支持Adaptive Execution。 |

spark.sql.adaptive.skewJoin.enabled |

配置项说明

您可以在ESS服务配置页面,查看ESS所有的配置项。

参数 | 描述 | 默认值 |

ess.push.data.replicate | 是否开启两副本。取值包含:

说明 建议生产环境开启两副本。 | true |

ess.worker.flush.queue.capacity | 每个目录的Flush buffer数量。 说明 为了提升性能,您可以配置多块磁盘。为了提升整体的读写吞吐量,建议一块磁盘不多于2个目录。 每个目录的Flush buffer所消耗堆内的内存为ess.worker.flush.buffer.size * ess.worker.flush.queue.capacity,即 | 512 |

ess.flush.timeout | Flush到存储层的超时时间。 | 240s |

ess.application.timeout | Application心跳超时时间,超时会清理Application相关资源。 | 240s |

ess.worker.flush.buffer.size | Flush buffer大小,超过最大值会触发刷盘。 | 256k |

ess.metrics.system.enable | 是否打开监控。取值包含:

| false |

ess_worker_offheap_memory | Worker堆外内存大小。 | 4g |

ess_worker_memory | Worker堆内内存大小。 | 4g |

ess_master_memory | Master堆内内存大小。 | 4g |