This topic describes how to use the task orchestration feature of Data Management (DMS) to train a machine learning model.

Prerequisites

- An Alibaba Cloud account is created.

- DMS is activated.

- Data Lake Analytics (DLA) is activated. For more information, see Activate DLA.

- Object Storage Service (OSS) is activated. For more information, see Activate OSS.

Background information

Advances in big data technologies and computing power in recent years have led to the wide application of machine learning and deep learning, for example in personalized recommendation systems, facial recognition payments, and autonomous driving. MLlib is the machine learning library of Apache Spark. It provides algorithms for classification, regression, clustering, collaborative filtering, and dimensionality reduction. This topic uses the k-means clustering algorithm and shows how to use the task orchestration feature of DMS to create a DLA Serverless Spark task that trains the model.

Upload data and code to OSS

- Log on to the OSS console.

- Create a data file. In this example, create a file named data.txt and add the following content to the file:

0.0 0.0 0.0

0.1 0.1 0.1

0.2 0.2 0.2

9.0 9.0 9.0

9.1 9.1 9.1

9.2 9.2 9.2

- Write the Spark MLlib code and package the code into a fat JAR file.

Note In this example, the following code reads data from the data.txt file and trains a machine learning model by using the k-means clustering algorithm.

package com.aliyun.spark

import org.apache.spark.SparkConf
import org.apache.spark.mllib.clustering.KMeans
import org.apache.spark.mllib.linalg.Vectors
import org.apache.spark.sql.SparkSession

object SparkMLlib {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("Spark MLlib Kmeans Demo")
    val spark = SparkSession
      .builder()
      .config(conf)
      .getOrCreate()

    // args(0): path of the input data file (data.txt)
    val rawDataPath = args(0)
    val data = spark.sparkContext.textFile(rawDataPath)
    // Each line holds space-separated numbers that form one dense vector.
    val parsedData = data.map(s => Vectors.dense(s.split(' ').map(_.toDouble)))

    // Train a k-means model with 2 clusters and at most 20 iterations.
    val numClusters = 2
    val numIterations = 20
    val model = KMeans.train(parsedData, numClusters, numIterations)

    for (c <- model.clusterCenters) {
      println(s"cluster center: ${c.toString}")
    }

    // args(1): path to which the trained model is saved
    val modelOutputPath = args(1)
    model.save(spark.sparkContext, modelOutputPath)
  }
}

- Upload the data.txt file and the fat JAR file to OSS. For more information, see Upload objects.
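Before you upload the files, it can help to check locally what result to expect from data.txt. The following is a minimal plain-Scala sketch of k-means (Lloyd's iterations) on the six sample points. It is not the MLlib implementation: the object name `LocalKMeansSketch` and its helper functions are hypothetical, and the initial centers are simply assumed to be the first and last points, whereas MLlib chooses its own initialization.

```scala
object LocalKMeansSketch {
  type Point = Vector[Double]

  // Parse one whitespace-separated line, for example "0.1 0.1 0.1".
  def parseLine(line: String): Point =
    line.trim.split("\\s+").map(_.toDouble).toVector

  def squaredDistance(a: Point, b: Point): Double =
    a.zip(b).map { case (x, y) => (x - y) * (x - y) }.sum

  // Component-wise mean of a non-empty group of points.
  def mean(points: Seq[Point]): Point =
    points.transpose.map(col => col.sum / col.size).toVector

  // Run a fixed number of assign-then-recompute rounds.
  // A center that attracts no points keeps its previous position.
  def kMeans(points: Seq[Point], initial: Seq[Point], iterations: Int): Seq[Point] =
    (1 to iterations).foldLeft(initial) { (centers, _) =>
      val groups = points.groupBy { p =>
        centers.indices.minBy(i => squaredDistance(p, centers(i)))
      }
      centers.indices.map(i => groups.get(i).map(mean).getOrElse(centers(i)))
    }

  def main(args: Array[String]): Unit = {
    // Same content as the data.txt file in this example.
    val lines = Seq(
      "0.0 0.0 0.0", "0.1 0.1 0.1", "0.2 0.2 0.2",
      "9.0 9.0 9.0", "9.1 9.1 9.1", "9.2 9.2 9.2")
    val points = lines.map(parseLine)
    // Assumption: seed with the first and last point (not what MLlib does).
    val centers = kMeans(points, Seq(points.head, points.last), iterations = 20)
    centers.foreach(c => println(s"cluster center: ${c.mkString("[", ",", "]")}"))
  }
}
```

With this seeding, the two centers settle at approximately (0.1, 0.1, 0.1) and (9.1, 9.1, 9.1), up to floating-point rounding. The Spark job should report centers close to these values, possibly in a different order.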

Create a DLA Serverless Spark task in DMS

- Log on to the DMS console V5.0.

- In the top navigation bar, click DTS. In the left-side navigation pane, go to the Task Orchestration page.

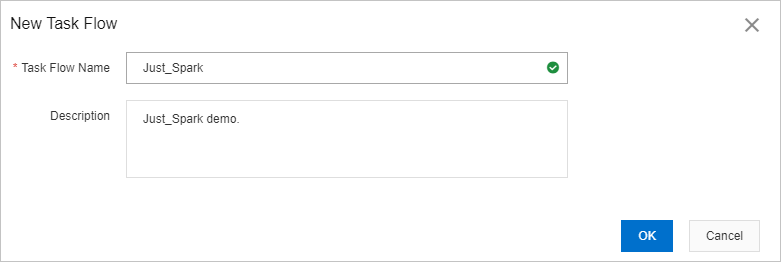

- On the page that appears, click Create Task Flow.

- In the Create Task Flow dialog box, enter relevant information in the Task Flow Name and Description fields and click OK. In this example, set the task flow name to Just_Spark and enter Just_Spark demo. in the Description field.

- On the Task Orchestration page, find the DLA Serverless Spark task node in the left-side Task Type navigation tree and drag the task node to the canvas.

- Double-click the DLA Serverless Spark node on the canvas and complete the following configuration.

- Select the region of the Spark cluster that you created from the Region drop-down list.

- Select the Spark cluster from the Spark cluster drop-down list.

- Write code in the Job configuration field. The code is used to train the machine learning model on the Spark cluster.

In this example, write the following code:

{
    "name": "spark-mllib-test",
    "file": "oss://oss-bucket-name/kmeans_demo/spark-mllib-1.0.0-SNAPSHOT.jar",
    "className": "com.aliyun.spark.SparkMLlib",
    "args": [
        "oss://oss-bucket-name/kmeans_demo/data.txt",
        "oss://oss-bucket-name/kmeans_demo/model/"
    ],
    "conf": {
        "spark.driver.resourceSpec": "medium",
        "spark.executor.instances": 2,
        "spark.executor.resourceSpec": "medium",
        "spark.dla.connectors": "oss"
    }
}

Note

file: specifies the absolute path of the fat JAR file in OSS.

args: specifies, in order, the absolute OSS path of the data.txt file and the absolute OSS path to which the trained machine learning model is saved.

- After you complete the configuration, click Save.

- Click Try Run in the upper-left corner to test the DLA Serverless Spark task.

- If status SUCCEEDED appears in the last line of the logs, the test run is successful.

- If status FAILED appears in the last line of the logs, the test run fails. In this case, view the node on which the failure occurs and the reason for the failure in the logs. Then, modify the configuration of the node and try again.

- Publish the task flow. For more information about how to publish a task flow, see Publish a task flow.
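The job code reads args(0) and args(1) without checking them, so a misconfigured args list in the job configuration only surfaces as an exception in the run logs. The following sketch shows one way such a guard could look. The object `JobArgs` and its `parse` method are hypothetical and not part of DMS, DLA, or Spark, and the check that both paths start with oss:// is an assumption based on the example configuration.

```scala
object JobArgs {
  // Returns (data path, model output path) on success,
  // or a human-readable error message on failure.
  def parse(args: Array[String]): Either[String, (String, String)] =
    args match {
      // Assumption: both paths are absolute OSS paths, as in the example.
      case Array(input, output)
          if Seq(input, output).forall(_.startsWith("oss://")) =>
        Right((input, output))
      case Array(_, _) =>
        Left("both arguments must be absolute OSS paths that start with oss://")
      case _ =>
        Left(s"expected 2 arguments (data path, model output path), got ${args.length}")
    }
}
```

In the main method, the result could be matched before the SparkSession is created, so that a failed test run reports the configuration problem directly instead of an ArrayIndexOutOfBoundsException.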

View the execution status of the task flow

- Log on to the DMS console V5.0.

- In the top navigation bar, click DTS. In the left-side navigation pane, go to the Task Orchestration page.

- Click the name of the task flow that you want to manage to go to its details page.

- Click Go to O&M in the upper-right corner of the canvas to go to the task flow O&M page.
