You can containerize the software environment required for AI training jobs and then run the training jobs on elastic container instances. This approach simplifies environment deployment, and because you pay only for the resources that you use, it reduces costs and improves efficiency. This topic describes how to run a GPU-based TensorFlow training job on elastic container instances in a Container Service for Kubernetes (ACK) Serverless cluster. The training code used in this example is available on GitHub.

Background information

In recent years, AI and machine learning have been widely used in many fields, and various training models have been developed. More and more training jobs run on the cloud. However, running training jobs continuously in a cloud environment is not easy. You may encounter the following difficulties:

Difficulties in deploying environments: You must purchase a GPU-accelerated instance and install a GPU driver on the instance. After you prepare a containerized environment for training jobs, you must install the GPU runtime hook.

Lack of scalability: After you deploy an environment and run the training jobs, you may need to release idle resources to save costs. The next time you want to run training jobs, you must deploy an environment and create instances again. If compute nodes are insufficient, you must scale out the compute nodes. In this case, you must create instances and deploy the environment again.

To resolve the preceding difficulties, we recommend that you use ACK Serverless clusters and elastic container instances to run training jobs. The solution has the following benefits:

You are charged on a pay-as-you-go basis and do not need to manage resources.

You need to prepare the configurations only once, and you can then reuse them as often as you like.

The image cache feature speeds up instance creation and the startup of training jobs.

Training data is decoupled from training models. Training data can be persisted.

Preparations

Prepare training data and an image.

In this example, a TensorFlow training job available on GitHub is used. For more information, see TensorFlow Model Garden.

An image for this example has been prepared and uploaded to Alibaba Cloud Container Registry (ACR). You can use it directly or use it as a base for your own development.

Internal address of the image: registry-vpc.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0

Public address of the image: registry.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0

Create an ACK Serverless cluster.

Create an ACK Serverless cluster in the ACK console. For more information, see Create an ACK Serverless cluster.

Important: If you need to pull an image over the Internet or your training jobs need to access the Internet, you must configure an Internet NAT gateway.

You can use kubectl and one of the following methods to manage and access the ACK Serverless cluster:

If you want to manage the cluster from an on-premises machine, install and configure the kubectl client. For more information, see Connect to an ACK cluster by using kubectl.

Use kubectl to manage the ACK Serverless cluster on Cloud Shell. For more information, see Use kubectl to manage ACK clusters on Cloud Shell.

Create a File Storage NAS (NAS) file system and add a mount target.

Create a NAS file system and add a mount target in the File Storage NAS console. The NAS file system and the ACK Serverless cluster must be in the same virtual private cloud (VPC). For more information, see Create a file system and Manage mount targets.

Procedure

Create an image cache

ACK Serverless clusters provide the image cache feature through a Kubernetes CustomResourceDefinition (CRD), which you can use to accelerate image pulling.

Create the YAML file that is used to create the image cache.

The following code shows an example of the imagecache.yaml file.

Note: If your cluster is deployed in the China (Hangzhou) region, we recommend that you pull the image from its internal address. If your cluster is deployed in another region, use the public address of the image.

```yaml
apiVersion: eci.alibabacloud.com/v1
kind: ImageCache
metadata:
  name: tensorflow
spec:
  images:
  - registry.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0
```

Create an image cache.

kubectl create -f imagecache.yamlYou must pull an image when you create an image cache. The amount of time required to pull an image is related to the image size and network conditions. You can run the following command to view the creation progress of the image cache:

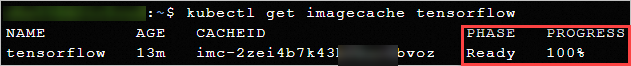

kubectl get imagecache tensorflowA command output similar to the following one indicates that the image cache is created.
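The note above ties the choice of image address to the region of the cluster. If you generate manifests from a script instead of editing YAML by hand, that rule can be expressed as a small Python sketch. It builds a dictionary with the same fields as imagecache.yaml and prints it as JSON, a format that `kubectl create -f` also accepts. The helper names are hypothetical and not part of any Alibaba Cloud SDK, and the sketch assumes you pass a region ID such as `cn-hangzhou`.

```python
import json

# Addresses of the prepared image, as listed in the Preparations section.
INTERNAL_IMAGE = "registry-vpc.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0"
PUBLIC_IMAGE = "registry.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0"


def image_address(region_id: str) -> str:
    """Pick the image address for a cluster in the given region.

    Assumption: region_id is an Alibaba Cloud region ID such as "cn-hangzhou".
    Clusters in China (Hangzhou) use the internal (VPC) address; all other
    regions fall back to the public address.
    """
    return INTERNAL_IMAGE if region_id == "cn-hangzhou" else PUBLIC_IMAGE


def image_cache_manifest(region_id: str, name: str = "tensorflow") -> dict:
    """Return an ImageCache object with the same fields as imagecache.yaml."""
    return {
        "apiVersion": "eci.alibabacloud.com/v1",
        "kind": "ImageCache",
        "metadata": {"name": name},
        "spec": {"images": [image_address(region_id)]},
    }


if __name__ == "__main__":
    # Redirect the output to a file, then run: kubectl create -f imagecache.json
    print(json.dumps(image_cache_manifest("cn-hangzhou"), indent=2))
```

Whichever address you cache, the pod that you create later should use the image address that corresponds to the image cache.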

Create a training job

Create a persistent volume (PV) and a persistent volume claim (PVC) for the NAS file system.

Prepare the YAML file.

The following code shows an example of the nas.yaml file:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nas
  labels:
    alicloud-pvname: pv-nas
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteMany
  csi:
    driver: nasplugin.csi.alibabacloud.com
    volumeHandle: pv-nas
    volumeAttributes:
      server: 15e1d4****-gt***.cn-beijing.nas.aliyuncs.com  # Mount target of the NAS file system. Replace it with your own.
      path: /
  mountOptions:
    - nolock,tcp,noresvport
    - vers=3
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-nas
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Gi
  selector:
    matchLabels:
      alicloud-pvname: pv-nas
```

Create a PV and a PVC.

```shell
kubectl create -f nas.yaml
```
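The PVC in nas.yaml is matched to the PV through its label selector (`alicloud-pvname: pv-nas`), its access mode, and its storage request. The following Python sketch checks those three conditions on dictionaries that mirror nas.yaml, which can help when you rename the volume or change its size. It is a simplified model, not the Kubernetes binder: the real binder also compares fields such as the storage class and volume mode, and the quantity parser here handles only plain byte counts and the Ki, Mi, Gi, and Ti suffixes. The function names are hypothetical.

```python
# Simplified model of static PV/PVC matching for the objects in nas.yaml.
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

PV = {
    "metadata": {"name": "pv-nas", "labels": {"alicloud-pvname": "pv-nas"}},
    "spec": {"capacity": {"storage": "100Gi"}, "accessModes": ["ReadWriteMany"]},
}
PVC = {
    "metadata": {"name": "pvc-nas"},
    "spec": {
        "accessModes": ["ReadWriteMany"],
        "resources": {"requests": {"storage": "100Gi"}},
        "selector": {"matchLabels": {"alicloud-pvname": "pv-nas"}},
    },
}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as "100Gi" to bytes (binary suffixes only)."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # Plain byte count, for example "1024".


def pvc_can_bind(pv: dict, pvc: dict) -> bool:
    """Return True if pv satisfies the selector, access modes, and size of pvc."""
    labels = pv["metadata"].get("labels", {})
    wanted = pvc["spec"].get("selector", {}).get("matchLabels", {})
    if any(labels.get(key) != value for key, value in wanted.items()):
        return False  # The label selector does not match this PV.
    if not set(pvc["spec"]["accessModes"]) <= set(pv["spec"]["accessModes"]):
        return False  # The PV does not offer every requested access mode.
    requested = to_bytes(pvc["spec"]["resources"]["requests"]["storage"])
    return requested <= to_bytes(pv["spec"]["capacity"]["storage"])


if __name__ == "__main__":
    print(pvc_can_bind(PV, PVC))  # prints True
```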

Create a pod to run the training job.

Prepare the YAML file.

The following code shows an example of the tensorflow.yaml file:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: tensorflow
  labels:
    app: tensorflow
    alibabacloud.com/eci: "true"
  annotations:
    k8s.aliyun.com/eci-use-specs: "ecs.gn6i-c4g1.xlarge"  # Specify GPU specifications.
    k8s.aliyun.com/eci-auto-imc: "true"  # Enable automatic matching of image caches.
spec:
  restartPolicy: OnFailure
  containers:
  - name: tensorflow
    image: registry.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0  # Use the image address that corresponds to the image cache.
    command:
    - python
    args:
    - /home/classify_image/classify_image.py  # Execute the training script after the container is started.
    resources:
      limits:
        nvidia.com/gpu: "1"  # The number of GPUs requested by the container.
    volumeMounts:  # Mount the NAS file system to provide persistent storage for training results.
    - name: pvc-nas
      mountPath: /tmp/classify_image_model
  volumes:
  - name: pvc-nas
    persistentVolumeClaim:
      claimName: pvc-nas
```

Create a pod.

```shell
kubectl create -f tensorflow.yaml
```
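If you run the job repeatedly with different instance types or GPU counts, you might generate tensorflow.yaml instead of editing it by hand. The following Python sketch builds the same Pod object as a dictionary and prints it as JSON for `kubectl create -f`. The function and its parameters are hypothetical; the annotation keys and field values are copied from tensorflow.yaml. If you change the GPU count, make sure that the instance type in the `k8s.aliyun.com/eci-use-specs` annotation actually provides that many GPUs.

```python
import json


def training_pod_manifest(
    image: str,
    instance_type: str = "ecs.gn6i-c4g1.xlarge",
    gpus: int = 1,
    claim_name: str = "pvc-nas",
    model_dir: str = "/tmp/classify_image_model",
) -> dict:
    """Return a Pod object with the same fields as tensorflow.yaml.

    All parameters are hypothetical knobs; their defaults reproduce the YAML.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": "tensorflow",
            "labels": {"app": "tensorflow", "alibabacloud.com/eci": "true"},
            "annotations": {
                # GPU-accelerated instance type of the elastic container instance.
                "k8s.aliyun.com/eci-use-specs": instance_type,
                # Automatically match an existing image cache.
                "k8s.aliyun.com/eci-auto-imc": "true",
            },
        },
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [
                {
                    "name": "tensorflow",
                    "image": image,  # Same address as the image cache.
                    "command": ["python"],
                    "args": ["/home/classify_image/classify_image.py"],
                    "resources": {"limits": {"nvidia.com/gpu": str(gpus)}},
                    # Training results are written to the NAS-backed volume.
                    "volumeMounts": [{"name": "pvc-nas", "mountPath": model_dir}],
                }
            ],
            "volumes": [
                {"name": "pvc-nas", "persistentVolumeClaim": {"claimName": claim_name}}
            ],
        },
    }


if __name__ == "__main__":
    image = "registry.cn-hangzhou.aliyuncs.com/eci_open/tensorflow:1.0"
    # Redirect the output to a file, then run: kubectl create -f tensorflow.json
    print(json.dumps(training_pod_manifest(image), indent=2))
```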

View the execution status of the training job.

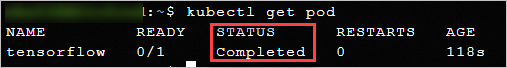

kubectl get podIf the pod is in the Completed state, the training job is complete.

Note: You can also run the `kubectl describe pod <pod name>` command to view the details of the pod, or run the `kubectl logs <pod name>` command to view its logs.
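Instead of rerunning `kubectl get pod` by hand, you can poll the pod from a script. A pod that kubectl reports as Completed has the phase `Succeeded` in the Kubernetes API, so the Python sketch below reads `status.phase` through kubectl's JSONPath output and waits for `Succeeded` or `Failed`. The helper names, poll interval, and timeout are assumptions. Because `restartPolicy` is `OnFailure`, a failing container is restarted and the pod normally stays in the `Running` phase instead of moving to `Failed`, so in that case the timeout is what ends the wait.

```python
import subprocess
import time
from typing import Callable

TERMINAL_PHASES = {"Succeeded", "Failed"}


def kubectl_pod_phase(name: str = "tensorflow") -> str:
    """Return status.phase of the named pod by calling kubectl."""
    result = subprocess.run(
        ["kubectl", "get", "pod", name, "-o", "jsonpath={.status.phase}"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


def wait_for_pod(
    get_phase: Callable[[], str],
    interval: float = 30.0,
    timeout: float = 6 * 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_phase() until the pod reaches a terminal phase, then return it.

    Raises TimeoutError if no terminal phase is seen within `timeout` seconds
    of accumulated sleep time.
    """
    waited = 0.0
    while True:
        phase = get_phase()
        if phase in TERMINAL_PHASES:
            return phase
        if waited >= timeout:
            raise TimeoutError(f"pod still in phase {phase!r} after {waited:.0f} s")
        sleep(interval)
        waited += interval
```

For example, `wait_for_pod(kubectl_pod_phase)` blocks until the training pod finishes and returns `"Succeeded"` on success. If it returns `"Failed"` or times out, use `kubectl describe pod` and `kubectl logs` to investigate.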

View the results

You can view the results of the training job in the console.

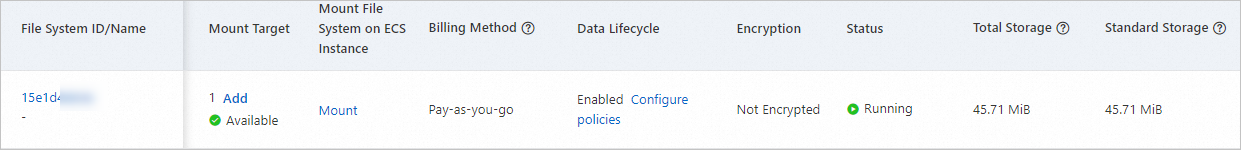

In the File Storage NAS console, you can view that the training results are stored in the NAS file system and the storage space occupied by the training results. After the NAS file system is remounted, you can obtain the results in the corresponding path.
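If you mount the NAS file system on another machine, such as an ECS instance in the same VPC, a short Python sketch can list the result files and add up the space that they use. Because the PV in nas.yaml maps the root of the NAS file system (`path: /`) to /tmp/classify_image_model in the pod, the results should appear at the root of that mount. The mount point /mnt/nas is hypothetical, and the total is a sum of apparent file sizes, so it can differ slightly from the usage that the console reports.

```python
import os
from typing import List, Tuple


def summarize_results(root: str) -> Tuple[List[str], int]:
    """Walk root and return (sorted relative file paths, total size in bytes).

    A root that does not exist yields ([], 0) because os.walk reports no
    entries for it.
    """
    files: List[str] = []
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            files.append(os.path.relpath(path, root))
            total += os.path.getsize(path)
    return sorted(files), total


if __name__ == "__main__":
    # Hypothetical mount point of the NAS file system on an ECS instance.
    result_files, size = summarize_results("/mnt/nas")
    for name in result_files:
        print(name)
    print(f"{len(result_files)} files, {size / 2**20:.1f} MiB")
```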

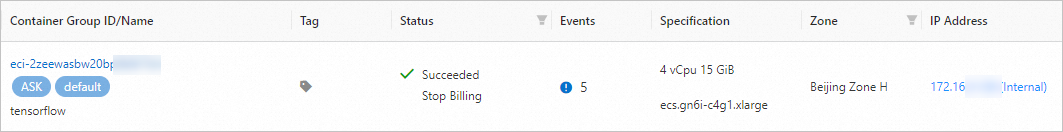

You can view the elastic container instance that corresponds to the pod in the Elastic Container Instance console.

After the training job finishes successfully, the containers in the elastic container instance stop. The system then reclaims the underlying computing resources, and billing for the pod stops.

References

This tutorial uses the image cache feature to accelerate image pulling and uses a NAS file system for persistent storage. For more information, see: