Stream output is widely known at Alibaba as a powerful performance tool for improving business transformation. However, its definition, underlying technical principles, and application scenarios are still unclear to many. This article introduces these concepts in detail.

Stream, also known as Reactive, is an R&D approach based on asynchronous data streams. It is a concept and a programming model rather than a technical architecture. Today, reactive frameworks are common across technology stacks, such as React.js and RxJS on the frontend, RxJava and Reactor on the server, and RxJava on Android. Together, these frameworks gave rise to reactive programming.

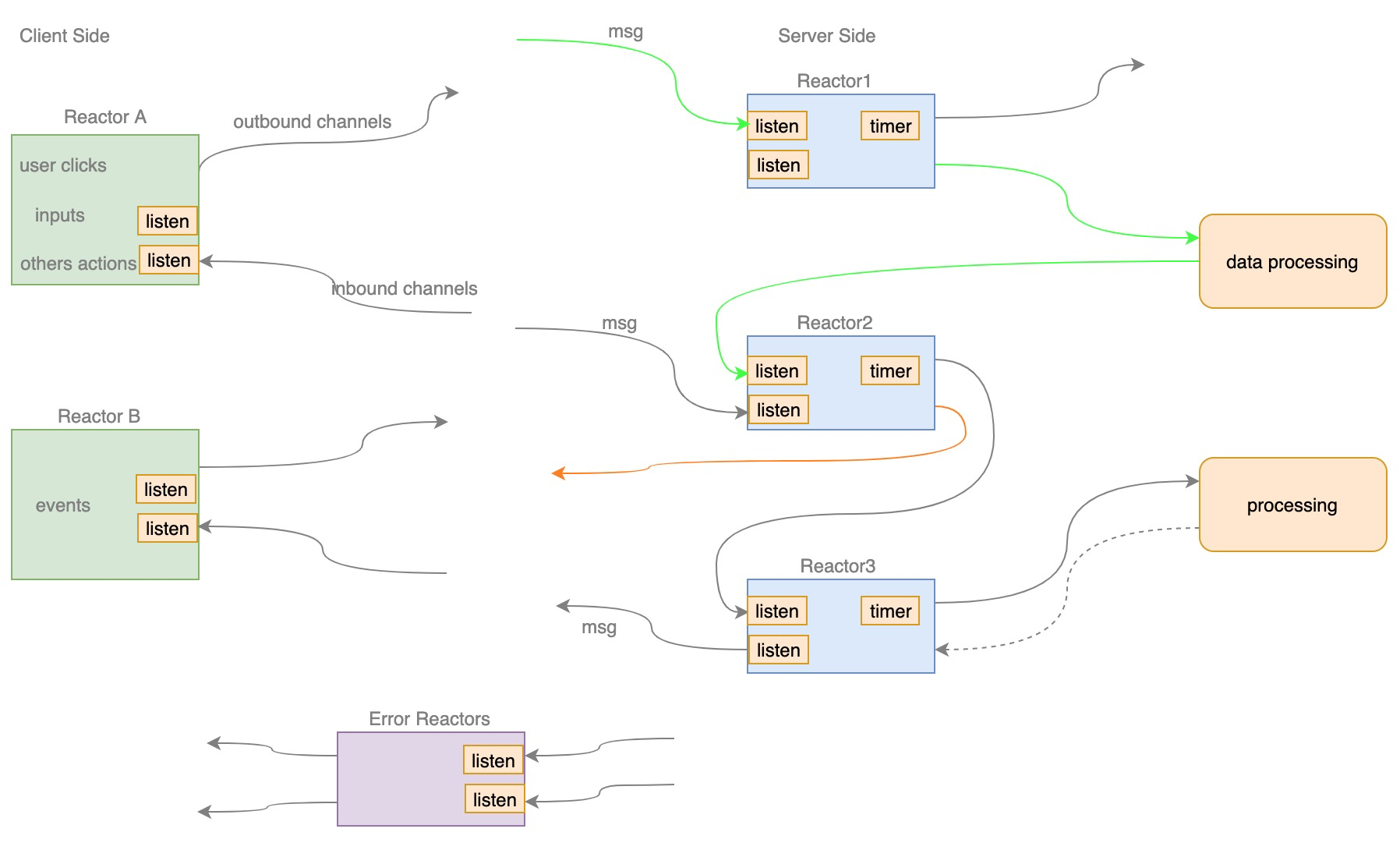

Reactive programming is a programming paradigm based on an event model. In asynchronous programming, there are two ways to obtain the result of the previous task: proactive polling, called Proactive mode, and passively receiving feedback, called Reactive mode. In short, in Reactive mode, the feedback from the previous task is an event that triggers the execution of the next task.

This is the essence of Reactive. The entity that processes and sends events is called a reactor. After it finishes processing one event, it sends the next event to other reactors.
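The two modes can be contrasted with a minimal Go sketch. The names `pollResult`, `reactor`, and `runChain` are illustrative assumptions, not part of any framework mentioned in this article. `pollResult` keeps checking for a result (Proactive mode), while each `reactor` is triggered by an incoming event and emits a new event to the next reactor (Reactive mode).

```go
package main

import (
	"fmt"
	"time"
)

// pollResult models Proactive mode: the caller repeatedly checks whether
// the previous task has produced a result yet.
func pollResult(resultCh <-chan int) (result, polls int) {
	for {
		polls++
		select {
		case r := <-resultCh:
			return r, polls
		default:
			time.Sleep(time.Millisecond) // nothing yet, try again later
		}
	}
}

// reactor models Reactive mode: every incoming event triggers the handler,
// and the handler's output is sent on as the next event.
func reactor(in <-chan int, out chan<- int, handle func(int) int) {
	for ev := range in {
		out <- handle(ev)
	}
	close(out)
}

// runChain wires two reactors together and collects the final events.
func runChain(inputs []int) []int {
	a, b, c := make(chan int), make(chan int), make(chan int)
	go reactor(a, b, func(v int) int { return v + 1 })
	go reactor(b, c, func(v int) int { return v * 2 })
	go func() {
		for _, v := range inputs {
			a <- v
		}
		close(a)
	}()
	var results []int
	for v := range c {
		results = append(results, v)
	}
	return results
}

func main() {
	resultCh := make(chan int, 1)
	go func() {
		time.Sleep(5 * time.Millisecond)
		resultCh <- 42
	}()
	r, _ := pollResult(resultCh)
	fmt.Println("polled result:", r)
	fmt.Println("reactive chain:", runChain([]int{1, 2, 3}))
}
```

In the reactive chain, no stage ever asks whether data is ready. Work happens only when an event arrives, which is where the savings in wasted checks come from.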

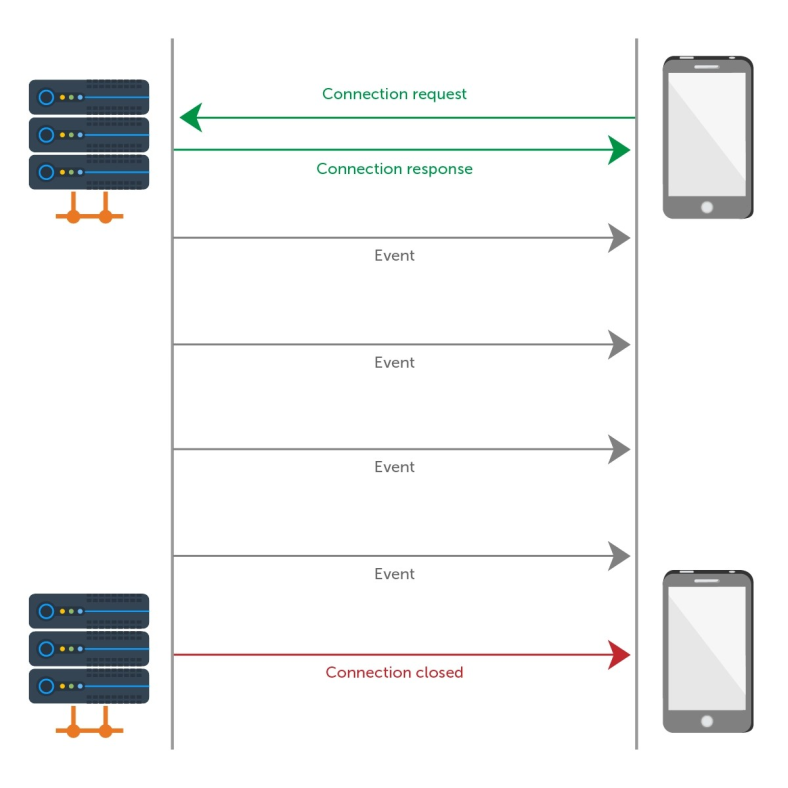

The following diagram shows a Reactive model:

At runtime, this coding mode brings less context switching, higher performance, and lower memory consumption. However, it also lowers code maintainability. Weigh its strengths and weaknesses against the benefits in each scenario.

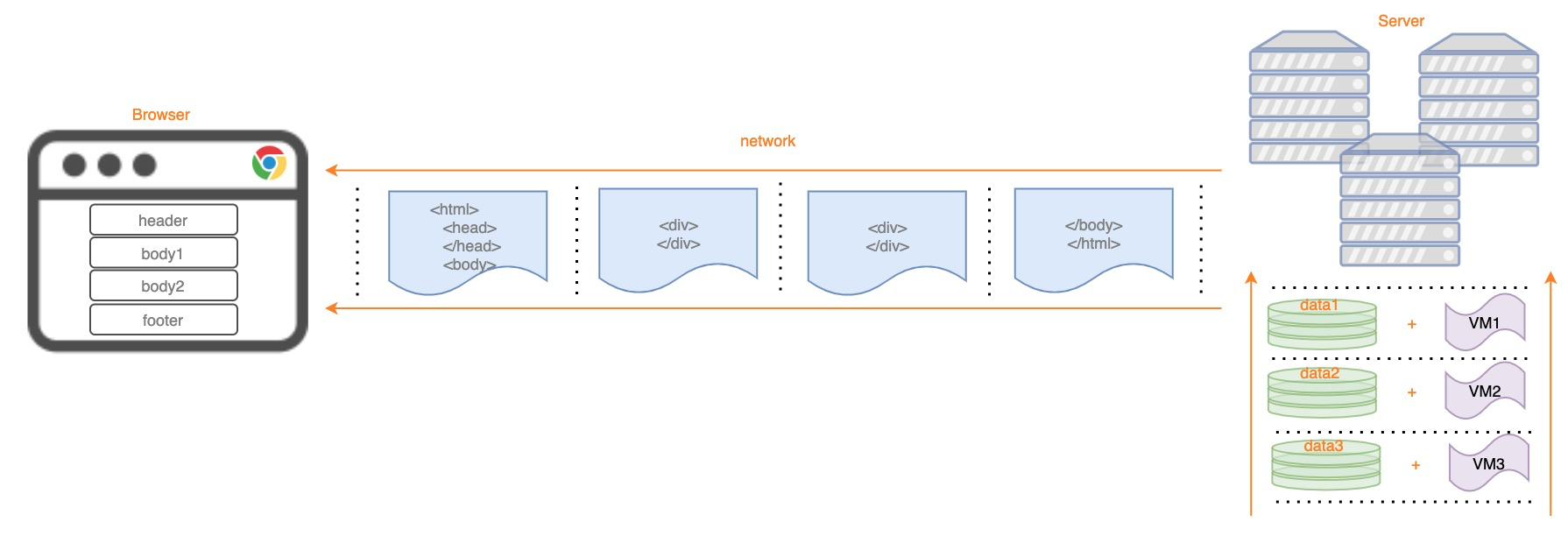

The stream output concept was introduced in an internal wrap-up meeting after a performance competition. It applies stream processing theory to page rendering and to the network transmission of the rendered HTML, so it is also called stream rendering. More specifically, the page is split into several independent modules, each with its own data source and page template. On the server, each module is processed as a stream: the business logic runs, and the page template is rendered. The rendered HTML is then written to the network as a stream and transmitted in chunks, and the browser renders and displays the chunks one by one as they arrive. The detailed procedure is shown in the diagram below:

HTML can be output as a stream as described above, and the same approach applies to JSON data. The process is generally the same, only without the rendering logic, so the data stream output resembles the figure above. The request from the client can be considered a reactive event. To sum up, stream output is the process in which the server returns data as a stream after the client proactively initiates a request.

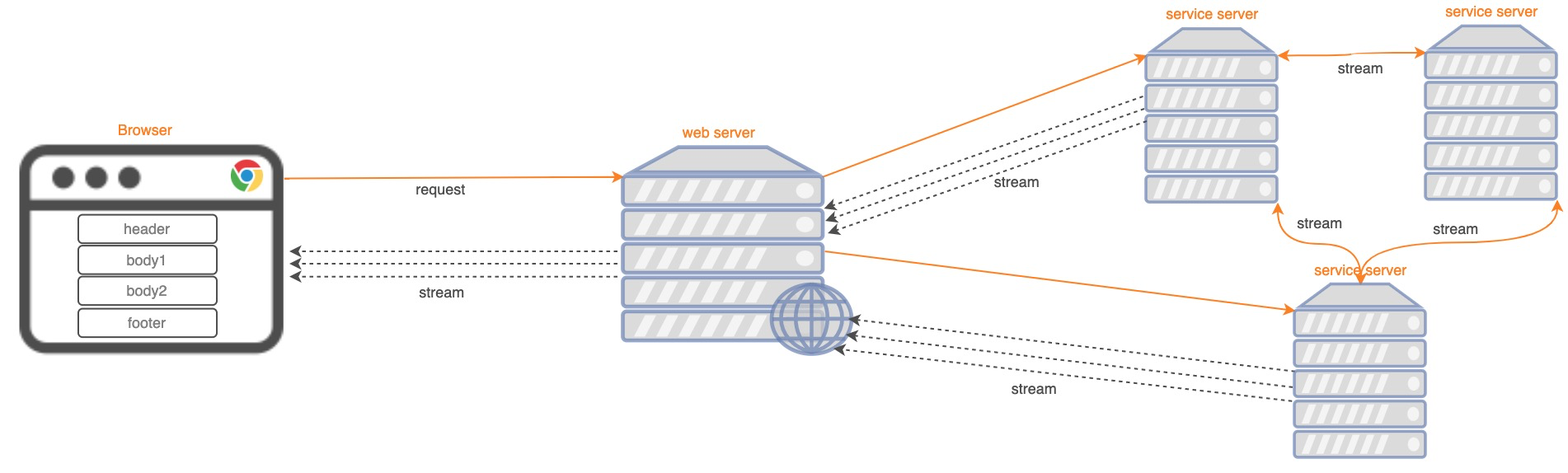

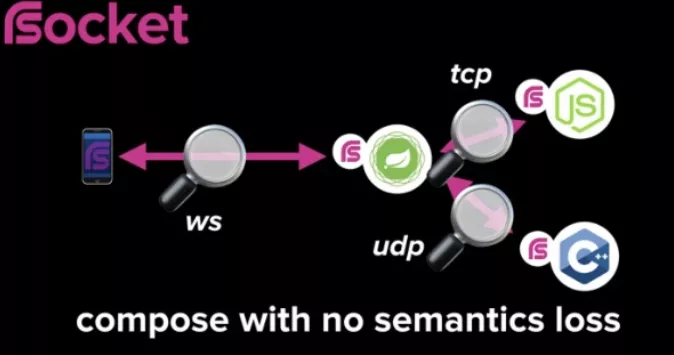

Data stream output over the network applies to the data exchanged between the client and the web server and between the web server and the various microservice servers, as shown in the following figure:

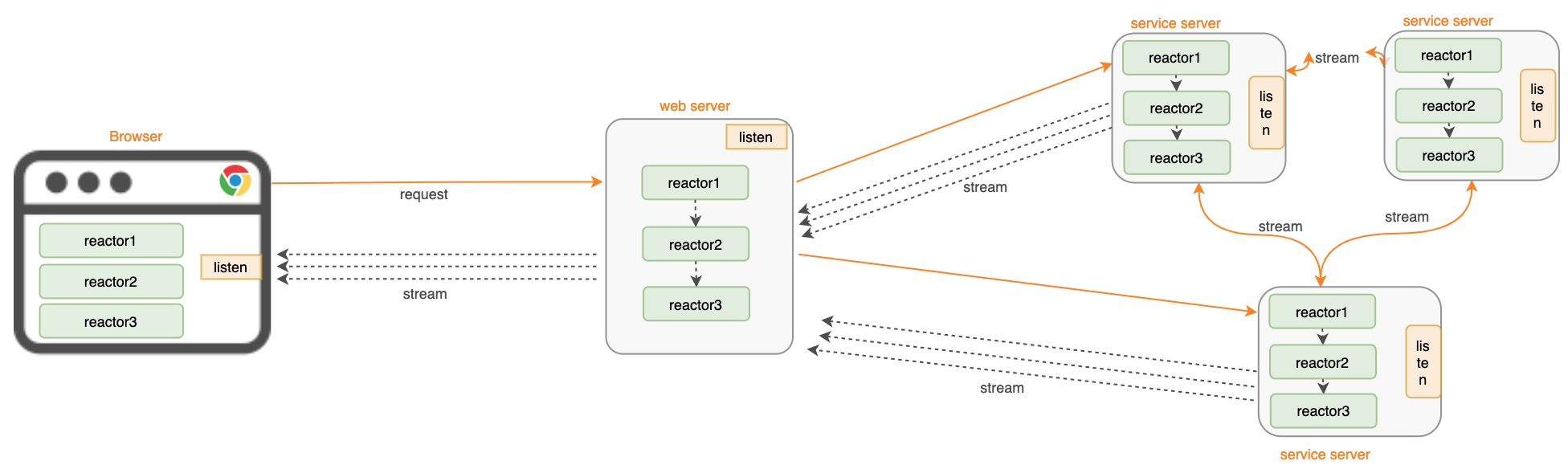

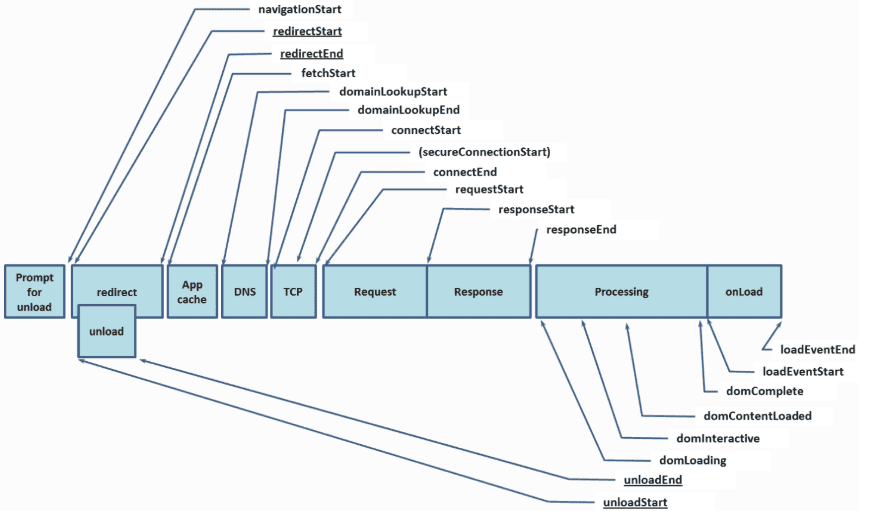

Data can be transmitted between networks. The following figure shows the data stream output on the entire request response procedure:

To sum up, end-to-end Reactive refers to stream output + reactive programming.

The following explains several core technologies, including the basic technical theory that supports the data stream output and receiving shown above.

Chunked transfer encoding is a data transmission mechanism of HTTP. With this mechanism, the HTTP data sent by a web server to a client application (usually a web browser) can be divided into multiple parts. Chunked transfer encoding is only available in HTTP/1.1.
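As a rough illustration of how a body is divided into parts, the Go sketch below frames each part as a hexadecimal length line followed by the data, and ends the body with a zero-length chunk. The helper names `encodeChunks` and `decodeChunks` are assumptions made for this example. Decoding relies on `httputil.NewChunkedReader` from the Go standard library.

```go
package main

import (
	"fmt"
	"io"
	"net/http/httputil"
	"strings"
)

// encodeChunks frames every part as "<hex length>\r\n<data>\r\n" and appends
// the terminating zero-length chunk. Empty parts are skipped because a
// zero-length chunk would end the body early.
func encodeChunks(parts []string) string {
	var b strings.Builder
	for _, p := range parts {
		if p == "" {
			continue
		}
		fmt.Fprintf(&b, "%x\r\n%s\r\n", len(p), p)
	}
	b.WriteString("0\r\n\r\n")
	return b.String()
}

// decodeChunks reassembles the original payload from a chunked body.
func decodeChunks(wire string) (string, error) {
	body, err := io.ReadAll(httputil.NewChunkedReader(strings.NewReader(wire)))
	return string(body), err
}

func main() {
	wire := encodeChunks([]string{"hello,", "chunked!"})
	fmt.Printf("%q\n", wire)

	body, err := decodeChunks(wire)
	fmt.Println(body, err)
}
```

Because each chunk carries its own length, the sender does not need to know the total size of the response before it starts transmitting, which is what makes stream output possible.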

To use chunked transfer encoding, the HTTP response must carry the header Transfer-Encoding: chunked. The detailed transfer format is listed below:

Note: In this format, every line ends with \r\n (CRLF), and each chunk length is written in hexadecimal.

    HTTP/1.1 200 OK\r\n
    Transfer-Encoding: chunked\r\n
    ...\r\n
    \r\n
    <chunk 1 length>\r\n
    <chunk 1 content>\r\n
    <chunk 2 length>\r\n
    <chunk 2 content>\r\n
    ...\r\n
    0\r\n
    \r\n

For more information, please see the interpretation section. Examples of chunked transfer encoding are listed below:

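    import (
        "fmt"
        "log"
        "net"
    )

    // handleChunkedHttpResp reads one request from conn and answers with a
    // chunked HTTP response made of two chunks.
    func handleChunkedHttpResp(conn net.Conn) {
        buffer := make([]byte, 1024)
        n, err := conn.Read(buffer)
        if err != nil {
            // Log and give up on this connection instead of exiting the process.
            log.Println(err)
            return
        }
        // Print only the bytes that were actually read.
        fmt.Println(n, string(buffer[:n]))

        conn.Write([]byte("HTTP/1.1 200 OK\r\n"))
        conn.Write([]byte("Transfer-Encoding: chunked\r\n"))
        conn.Write([]byte("\r\n"))
        conn.Write([]byte("6\r\n")) // length of "hello," in hex
        conn.Write([]byte("hello,\r\n"))
        conn.Write([]byte("8\r\n")) // length of "chunked!" in hex
        conn.Write([]byte("chunked!\r\n"))
        conn.Write([]byte("0\r\n")) // zero-length chunk ends the body
        conn.Write([]byte("\r\n"))
    }

From the browser's perspective, HTTP chunked transfer is better suited to synchronous HTML output. Many web pages need SEO, and the TDK elements (title, description, and keywords) must be output synchronously, so chunked transfer fits this case. For the stream output of JSON data, SSE is the better fit, as shown below: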

Server-Sent Events (SSE) is a web standard built on top of HTTP. It is used when a server sends events to a client as a stream. The client binds listener functions to event types to process its business logic. SSE is one-way only: only the server can send events to the client. The specific process is shown in the diagram below:

SSE stipulates the following field types:

1) Event

The event type. If the event field is specified, an event of that type is dispatched on the current EventSource object when the client receives the message. The client can listen for named events of any type on this object through addEventListener(). If the message has no event field, the onmessage event handler is triggered.

2) Data

The data field of the message. If the message contains multiple data fields, the client concatenates them into a single string, separated by line breaks, and uses it as the field value.

3) ID

The event ID. It becomes the value of the internal "last event ID" property of the current EventSource object.

4) Retry

An integer that specifies the reconnection time in milliseconds. The field is ignored if its value is not an integer.
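The field rules above can be sketched as a small parser. This is a simplified illustration in Go, not a complete implementation of the event stream format: the `Event` type and the `parseEvents` function are names invented for this example, and edge cases such as byte order marks are ignored.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// Event is a hypothetical container for one dispatched SSE message.
type Event struct {
	Type  string // value of "event", or "message" when the field is absent
	Data  string // all "data" lines joined with line breaks
	ID    string // last event ID seen so far
	Retry int    // reconnection time in milliseconds, 0 if never set
}

// parseEvents splits a raw event stream into events. A blank line ends
// the current event, and lines starting with ":" are comments.
func parseEvents(stream string) []Event {
	var events []Event
	var dataLines []string
	eventType, lastID, retry := "", "", 0

	sc := bufio.NewScanner(strings.NewReader(stream))
	for sc.Scan() {
		line := sc.Text()
		if line == "" {
			if len(dataLines) > 0 { // events without data are not dispatched
				t := eventType
				if t == "" {
					t = "message"
				}
				events = append(events, Event{t, strings.Join(dataLines, "\n"), lastID, retry})
			}
			dataLines, eventType = nil, ""
			continue
		}
		if strings.HasPrefix(line, ":") {
			continue
		}
		field, value, _ := strings.Cut(line, ":")
		value = strings.TrimPrefix(value, " ")
		switch field {
		case "event":
			eventType = value
		case "data":
			dataLines = append(dataLines, value)
		case "id":
			lastID = value
		case "retry":
			// Non-integer values are ignored, as described above.
			if ms, err := strconv.Atoi(value); err == nil && ms >= 0 {
				retry = ms
			}
		}
	}
	return events
}

func main() {
	stream := "event: ping\ndata: first\n\ndata: line one\ndata: line two\nid: 7\nretry: 3000\n\n"
	for _, ev := range parseEvents(stream) {
		fmt.Printf("%+v\n", ev)
	}
}
```

Note that the ID and retry values persist across events in this sketch, mirroring how the client remembers the last event ID and the reconnection time.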

Client Code Sample:

    // Initialize the event source on the client
    const evtSource = new EventSource("//api.example.com/ssedemo.php", { withCredentials: true });

    // Add a handler for message events to listen for messages sent from the server
    evtSource.onmessage = function (event) {
      const newElement = document.createElement("li");
      const eventList = document.getElementById("list");

      // Use textContent so server data is never interpreted as HTML
      newElement.textContent = "message: " + event.data;
      eventList.appendChild(newElement);
    };

Server Code Sample:

    date_default_timezone_set("America/New_York");
    header("Cache-Control: no-cache");
    header("Content-Type: text/event-stream");

    $counter = rand(1, 10);
    while (true) {
        // Every second, send a "ping" event.
        echo "event: ping\n";
        $curDate = date(DATE_ISO8601);
        echo 'data: {"time": "' . $curDate . '"}';
        echo "\n\n";

        // Send a simple message at random intervals.
        $counter--;
        if (!$counter) {
            echo 'data: This is a message at time ' . $curDate . "\n\n";
            $counter = rand(1, 10);
        }

        // Flush the output buffer, if one is active, so the events are sent immediately.
        if (ob_get_level() > 0) {
            ob_end_flush();
        }
        flush();

        // Stop the loop once the client has disconnected.
        if (connection_aborted()) {
            break;
        }
        sleep(1);
    }

Example of an event stream:

    event: userconnect
    data: {"username": "bobby", "time": "02:33:48"}

    event: usermessage
    data: {"username": "bobby", "time": "02:34:11", "text": "Hi everyone."}

    event: userdisconnect
    data: {"username": "bobby", "time": "02:34:23"}

    event: usermessage
    data: {"username": "sean", "time": "02:34:36", "text": "Bye, bobby."}

Note: When SSE is used without HTTP/2, the number of SSE connections is limited. The default maximum is 6, which means that only six SSE connections can be open at a time. This restriction applies per domain name, so six more SSE connections can be established to a different domain. When SSE is used over HTTP/2, the default maximum number of connections is 100.

SSE is now supported in Spring 5. Spring WebFlux in Spring Boot 2 can perform data stream output through SSE.

In a microservices architecture, data is transmitted between services through application protocols. Typical transfer methods include REST or SOAP APIs based on HTTP and RPC based on TCP byte streams. However, HTTP supports only the request-response mode. The client must therefore poll to obtain the latest pushed messages, which wastes resources. Furthermore, if a request takes too long to respond, the processing of subsequent requests is blocked. SSE can be used to push messages, but it is only a simple text protocol with limited functions. WebSocket supports two-way data transfer, but it does not define an application-layer protocol. RSocket was designed to solve these problems of the existing protocols.

RSocket is a new application network protocol for reactive applications. It works at layers five and six of the Open Systems Interconnection (OSI) model and is an application-layer protocol above TCP/IP. RSocket can run over different underlying transports, including TCP, WebSocket, and Aeron. TCP suits interaction between components in a distributed system, WebSocket suits interaction between browsers and servers, and Aeron is a UDP-based transport. This allows RSocket to be used in different scenarios. In addition, RSocket uses a binary format to ensure efficient transmission and save bandwidth. Moreover, through reactive flow control, neither side of a message exchange collapses under excessive request pressure. For more information, please see RSocket [1] or alibaba-rsocket-broker [2], open-sourced by Leijuan.
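The idea behind reactive flow control can be sketched in Go with a simple credit scheme: the receiver tells the sender how many messages it is ready for, and the sender never emits more than that. This is an illustration only, not the RSocket wire protocol or the API of any RSocket library. The `producer` and `consume` functions are assumptions made for this example.

```go
package main

import "fmt"

// producer emits 1, 2, 3, ... up to total, but only after the consumer has
// granted credit on the demand channel. It never sends more than requested.
func producer(demand <-chan int, out chan<- int, total int) {
	defer close(out)
	next := 1
	for n := range demand {
		for i := 0; i < n && next <= total; i++ {
			out <- next
			next++
		}
		if next > total {
			return
		}
	}
}

// consume requests items in batches of batchSize and returns the batches
// exactly as they were received.
func consume(total, batchSize int) [][]int {
	demand := make(chan int)
	out := make(chan int)
	go producer(demand, out, total)

	var batches [][]int
	received := 0
	for received < total {
		demand <- batchSize // grant credit for the next batch
		var batch []int
		for i := 0; i < batchSize && received < total; i++ {
			batch = append(batch, <-out)
			received++
		}
		batches = append(batches, batch)
	}
	close(demand)
	return batches
}

func main() {
	fmt.Println(consume(5, 2))
}
```

Because the pace is set by the receiver's requests, a slow consumer slows the producer down instead of being flooded by it.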

RSocket provides four interaction modes to cover different scenarios:

| Mode | Description |
| --- | --- |
| request/response | This is the most typical and common pattern. After sending a message to the receiver, the sender waits for the corresponding response message. |
| request/stream | Each request message from the sender is answered by a stream of messages from the receiver. |
| fire-and-forget | The request message sent by the sender has no response. |
| channel | Establishes a bidirectional transmission channel between the sender and the receiver. |
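To make the differences between the four modes concrete, the sketch below models them as a Go interface. The `Responder` interface and the `echo` type are hypothetical and exist only for this illustration. They do not correspond to the API of any RSocket library, and everything runs in memory without a network.

```go
package main

import (
	"fmt"
	"strings"
)

// Responder is a hypothetical interface mirroring the four interaction modes.
type Responder interface {
	RequestResponse(msg string) string      // one request, one response
	RequestStream(msg string) <-chan string // one request, a stream of responses
	FireAndForget(msg string)               // one request, no response
	Channel(in <-chan string) <-chan string // streams in both directions
}

// echo is a toy in-memory responder.
type echo struct{ log []string }

var _ Responder = (*echo)(nil)

func (e *echo) RequestResponse(msg string) string { return "ECHO >> " + msg }

// RequestStream answers a single request with one message per word.
func (e *echo) RequestStream(msg string) <-chan string {
	words := strings.Fields(msg)
	out := make(chan string, len(words))
	for _, w := range words {
		out <- w
	}
	close(out)
	return out
}

// FireAndForget records the message and returns nothing to the caller.
func (e *echo) FireAndForget(msg string) { e.log = append(e.log, msg) }

// Channel upper-cases every incoming message for as long as the input is open.
func (e *echo) Channel(in <-chan string) <-chan string {
	out := make(chan string)
	go func() {
		defer close(out)
		for m := range in {
			out <- strings.ToUpper(m)
		}
	}()
	return out
}

func main() {
	e := &echo{}
	fmt.Println(e.RequestResponse("hello"))
	for w := range e.RequestStream("one two three") {
		fmt.Println("stream item:", w)
	}
	e.FireAndForget("audit record")

	in := make(chan string)
	out := e.Channel(in)
	go func() {
		in <- "ping"
		close(in)
	}()
	fmt.Println(<-out)
}
```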

RSocket is available in different languages, including Java, Kotlin, JavaScript, Go, .NET, and C++. The Java implementation is listed below:

    import io.rsocket.AbstractRSocket;
    import io.rsocket.Payload;
    import io.rsocket.RSocket;
    import io.rsocket.RSocketFactory;
    import io.rsocket.transport.netty.client.TcpClientTransport;
    import io.rsocket.transport.netty.server.TcpServerTransport;
    import io.rsocket.util.DefaultPayload;
    import reactor.core.publisher.Mono;

    public class RequestResponseExample {

        public static void main(String[] args) {
            RSocketFactory.receive()
                .acceptor(((setup, sendingSocket) -> Mono.just(
                    new AbstractRSocket() {
                        @Override
                        public Mono<Payload> requestResponse(Payload payload) {
                            return Mono.just(DefaultPayload.create("ECHO >> " + payload.getDataUtf8()));
                        }
                    }
                )))
                .transport(TcpServerTransport.create("localhost", 7000)) // Specify the transport layer implementation
                .start() // Start the server
                .subscribe();

            RSocket socket = RSocketFactory.connect()
                .transport(TcpClientTransport.create("localhost", 7000)) // Specify the transport layer implementation
                .start() // Start the client
                .block();

            socket.requestResponse(DefaultPayload.create("hello"))
                .map(Payload::getDataUtf8)
                .doOnNext(System.out::println)
                .block();

            socket.dispose();
        }
    }

Reactive Programming Framework

The reactive programming framework is the core supporting technology for implementing the reactive procedure. It is a revolution in programming style for developers, because programming with asynchronous data streams still differs in many ways from the traditional procedural programming mode.

For Example:

    @Override
    public Single<Integer> remaining() {
        return Flowable.fromIterable(LotteryEnum.EFFECTIVE_LOTTERY_TYPE_LIST)
            .flatMap(lotteryType -> tairMCReactive.get(generateLotteryKey(lotteryType)))
            .filter(Result::isSuccess)
            .filter(result -> !ResultCode.DATANOTEXSITS.equals(result.getRc()))
            .map(result -> (Integer) result.getValue().getValue())
            .reduce((acc, lotteryRemaining) -> acc + lotteryRemaining)
            .toSingle(0);
    }

In general, stream output can be performed through HTTP chunked transfer, SSE over HTTP, and RSocket. The end-to-end reactive mode, in turn, can only be implemented on top of stream output.

Performance, experience, and data are the three major concerns in the daily work of developers, and performance has always been the core one. Stream output was created to address performance issues. The scenarios that suit stream output are shown in the diagram below:

The figure above describes the main stages in the Resource Timing API request lifecycle.

Compared with a static page, a dynamic page is mainly composed of the page style, the JavaScript for page interaction, and the dynamic page data. Apart from the time consumed in the lifecycle stages above, this scenario also includes page rendering. When the browser receives HTML, it constructs the DOM tree, runs the preload scanner, constructs the CSSOM tree, and compiles and executes JavaScript. Along the way, loading CSS files and JS files blocks page rendering. If we split the page and stream the data in the following ways, performance improves significantly.

Single-Interface Dynamic Page

In some scenarios, such as SEO, pages need to be rendered synchronously. In most cases, these are single-interface dynamic pages, which can be split into the part above the body and the part from the body down. For example:

    <!-- Module 1 -->
    <html>
      <head>
        <meta />
        <link />
        <style></style>
        <script src=""></script>
      </head>
      <body>
        <!-- Module 2 -->
        <div>xxx</div>
        <div>yyy</div>
        <div>zzz</div>
      </body>
    </html>

When Module 1 has arrived at the browser and Module 2 has not, the browser can render Module 1 and load its CSS and JS while waiting for Module 2. This improves performance in several aspects.

Single-Interface Multi-Floor Page:

    <!-- Module 1 -->
    <html>
      <head>
        <meta />
        <link />
        <style></style>
        <script src=""></script>
      </head>
      <body>
        <!-- Module 2 -->
        <div>xxx1</div>
        <div>yyy1</div>
        <div>zzz1</div>
        <!-- Module 3 -->
        <div>xxx2</div>
        <div>yyy2</div>
        <div>zzz2</div>
        <!-- Module 4 -->
        <div>xxx3</div>
        <div>yyy3</div>
        <div>zzz3</div>
      </body>
    </html>

Many scenarios involve a page with multiple floors, such as the main parts of a homepage (content, structure, performance, and behavior), various shopping guide floors, and detailed information floors. In some cases, a later floor depends on the data of an earlier floor. In these cases, the page floors can be split into multiple modules for output. Apart from the improvements in the aspects above, the time spent processing data dependencies between floors is also reduced.
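The module-by-module output described above can be sketched with Go's `net/http` package. The `pageModules` fragments and the `streamPage` handler are hypothetical names for this example. The sketch uses `httptest.NewRecorder` so that it runs without a network. On a real HTTP/1.1 connection with no Content-Length set, Go's server sends flushed writes using chunked transfer encoding.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
)

// pageModules holds hypothetical page fragments: the head first, then one
// fragment per floor, then the closing tags.
var pageModules = []string{
	"<html><head><title>demo</title></head><body>",
	"<div>floor 1</div>",
	"<div>floor 2</div>",
	"</body></html>",
}

// streamPage writes each module and flushes right away, so the browser can
// start loading CSS and JS from the head while later floors are still
// being produced.
func streamPage(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	flusher, canFlush := w.(http.Flusher)
	for _, m := range pageModules {
		io.WriteString(w, m)
		if canFlush {
			flusher.Flush()
		}
	}
}

// renderWithRecorder runs the handler in memory and reports whether it
// flushed, along with the complete body it produced.
func renderWithRecorder() (flushed bool, body string) {
	rec := httptest.NewRecorder()
	streamPage(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Flushed, rec.Body.String()
}

func main() {
	flushed, body := renderWithRecorder()
	fmt.Println("flushed:", flushed)
	fmt.Println(body)
}
```

In a real page, each floor would be rendered from its own data source before being written, so a slow floor delays only the modules that come after it.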

Multi-Floor Page with Multiple Interfaces

Most pages combine synchronous server-side rendering (SSR) with asynchronous client-side rendering (CSR), in which the asynchronous floors depend on asynchronously loaded JS. If the synchronously rendered part is split in the same way as the single-interface multi-floor page, the JS for the asynchronous floors is loaded and run earlier, so those floors render sooner.

In general, stream output based on HTTP chunked transfer covers almost all page scenarios and improves both performance and user experience.

Single-Interface Big Data

Apps and single-page systems render pages through asynchronous data loading. Using a single interface results in a longer response time, and the large response body incurs the overhead of packet splitting and reassembly on the network. If multiple asynchronous interfaces are used instead, the latency of data requests and the CPU usage caused by network I/O increase because network bandwidth is limited. Therefore, based on an analysis of the business scenario, a single interface can be split into multiple independent or coupled business modules, and the data of these modules can be output as a stream. This improves performance in several aspects.

Interfaces with Great Interdependency

However, most business scenarios are coupled with each other. For example, the list module needs the new arrival module data for its business logic processing. In this case, the server processes and outputs the new arrival module data first and then the list module data. This saves the time otherwise spent waiting on the dependency.
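The ordering described above can be sketched in Go as a stream of JSON lines. The `module` type and the `loadNewArrivals`, `buildList`, and `streamModules` functions are hypothetical stand-ins for real business logic. The new arrival module is written as soon as it is ready, and the list module, which depends on it, follows.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
)

// module is a hypothetical unit of page data that is sent as one JSON line.
type module struct {
	Name  string   `json:"name"`
	Items []string `json:"items"`
}

// loadNewArrivals stands in for the query behind the new arrival module.
func loadNewArrivals() []string { return []string{"sku-1", "sku-2"} }

// buildList stands in for list logic that needs the new arrival data.
func buildList(newArrivals []string) []string {
	list := append([]string{}, newArrivals...)
	return append(list, "sku-3")
}

// streamModules writes each module as soon as it is ready. The new arrival
// data is computed once, sent immediately, and then reused for the list.
func streamModules(w io.Writer) error {
	enc := json.NewEncoder(w) // Encode ends every value with a newline
	arrivals := loadNewArrivals()
	if err := enc.Encode(module{Name: "newArrival", Items: arrivals}); err != nil {
		return err
	}
	return enc.Encode(module{Name: "list", Items: buildList(arrivals)})
}

// render collects the stream in memory so that it can be inspected.
func render() (string, error) {
	var buf bytes.Buffer
	err := streamModules(&buf)
	return buf.String(), err
}

func main() {
	out, err := render()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Print(out)
}
```

With an HTTP response writer in place of the buffer, the client could start rendering the new arrival module while the server is still computing the list.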

Although daily business scenarios are more complex, stream output can improve page performance and user experience.
