Server Load Balancer or Server Load Balancing (SLB) is a service that distributes the traffic of high-traffic sites among several servers. Server Load Balancer intercepts traffic for a website and reroutes that traffic to backend servers.

Server Load Balancer distributes inbound network traffic across multiple Elastic Compute Service (ECS) instances that act as backend servers based on forwarding rules. You can use Server Load Balancer to improve the responsiveness and availability of your applications.

After you add ECS instances that reside in the same region to a Server Load Balancer instance, Server Load Balancer uses virtual IP addresses (VIPs) to virtualize these ECS instances into backend servers in a high-performance server pool that ensures high availability. Client requests are distributed to the ECS instances based on forwarding rules.

Server Load Balancer checks the health status of the ECS instances and automatically removes unhealthy ones from the server pool to eliminate single points of failure (SPOFs). This enhances the resilience of your applications. You can also use Server Load Balancer to defend your applications against distributed denial of service (DDoS) attacks.
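To make the health-check behavior concrete, here is a minimal Python sketch of a server pool that stops routing to an instance after several consecutive failed probes and readmits it after several consecutive successes. The class `BackendPool`, its threshold parameters, and the simple round-robin selection are hypothetical illustrations, not the SLB API, and the probe is any caller-supplied function.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Backend:
    name: str
    healthy: bool = True
    fail_streak: int = 0  # consecutive failed probes
    ok_streak: int = 0    # consecutive successful probes


class BackendPool:
    """Hypothetical server pool that round-robins over healthy backends only."""

    def __init__(self, names: List[str], unhealthy_threshold: int = 3,
                 healthy_threshold: int = 3) -> None:
        self.backends = [Backend(n) for n in names]
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self._counter = 0

    def run_health_checks(self, probe: Callable[[str], bool]) -> None:
        """Probe every backend once and update its state.

        A backend changes state only after a run of consecutive results reaches
        the threshold, so a single lost probe does not evict a working server.
        """
        for b in self.backends:
            if probe(b.name):
                b.ok_streak += 1
                b.fail_streak = 0
                if not b.healthy and b.ok_streak >= self.healthy_threshold:
                    b.healthy = True   # recovered: put it back in the pool
            else:
                b.fail_streak += 1
                b.ok_streak = 0
                if b.healthy and b.fail_streak >= self.unhealthy_threshold:
                    b.healthy = False  # unhealthy: stop sending it traffic

    def healthy_names(self) -> List[str]:
        return [b.name for b in self.backends if b.healthy]

    def pick(self) -> str:
        """Choose the next healthy backend in round-robin order."""
        healthy = self.healthy_names()
        if not healthy:
            raise RuntimeError("no healthy backend servers available")
        choice = healthy[self._counter % len(healthy)]
        self._counter += 1
        return choice


if __name__ == "__main__":
    pool = BackendPool(["ecs-1", "ecs-2", "ecs-3"])
    down = {"ecs-2"}  # simulate a failed instance
    for _ in range(3):
        pool.run_health_checks(lambda name: name not in down)
    print(pool.healthy_names())             # ['ecs-1', 'ecs-3']
    print([pool.pick() for _ in range(4)])  # ['ecs-1', 'ecs-3', 'ecs-1', 'ecs-3']
```

Requiring a run of consecutive results before changing state trades detection speed for stability: a single dropped probe does not remove a healthy server, at the cost of keeping a failed one in rotation for a few more check intervals.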

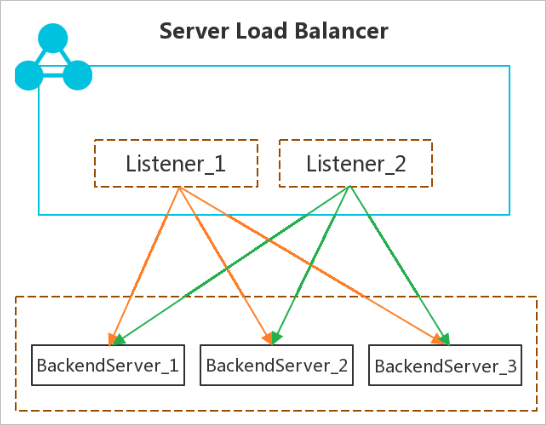

Server Load Balancer consists of three components:

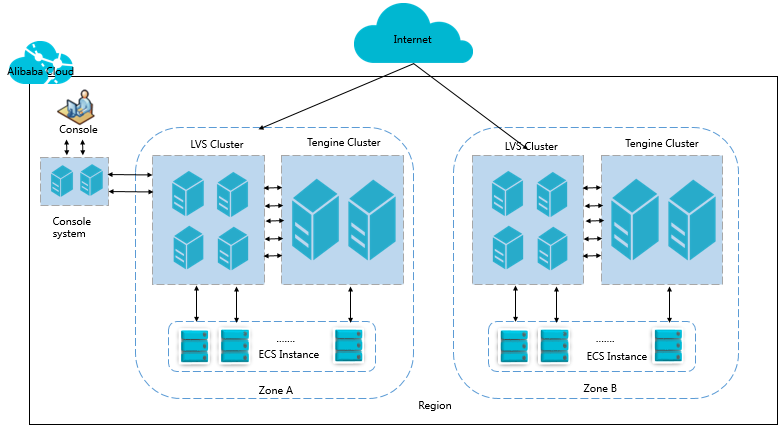

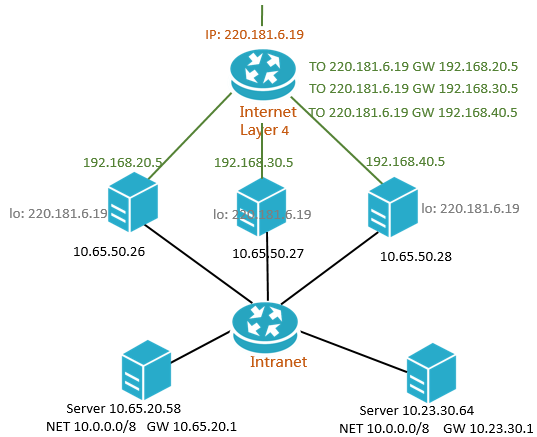

Server Load Balancer instances are deployed in clusters to synchronize sessions and protect backend servers from SPOFs, which improves redundancy and ensures service stability. Layer-4 Server Load Balancer uses the open-source Linux Virtual Server (LVS) and Keepalived software to balance loads, whereas Layer-7 Server Load Balancer uses Tengine. Tengine, a web server project launched by Taobao, is based on NGINX and adds advanced features for high-traffic websites.

Requests from the Internet reach an LVS cluster along Equal-Cost Multi-Path (ECMP) routes. In the LVS cluster, each machine uses multicast packets to synchronize sessions with the other machines. At the same time, the LVS cluster performs health checks on the Tengine cluster and removes unhealthy machines from the Tengine cluster to ensure the availability of the Layer-7 Server Load Balancer.
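ECMP spreads flows over several equal-cost paths by hashing fields of each packet, so all packets of one connection normally reach the same LVS machine. The sketch below assumes hashing over the 5-tuple with simple modulo-N selection and uses SHA-256 only as a stand-in for a router's own hash function. It also hints at why session synchronization matters: with modulo-N selection, removing one machine remaps many flows, including some that were not on the removed machine.

```python
import hashlib
from typing import List, Tuple

# (source IP, source port, destination IP, destination port, protocol)
FiveTuple = Tuple[str, int, str, int, str]


def ecmp_next_hop(flow: FiveTuple, next_hops: List[str]) -> str:
    """Map a flow to one of several equal-cost next hops.

    Every packet of the same flow hashes to the same value, so it reaches the
    same LVS machine as long as the set of next hops does not change.
    SHA-256 is only a stand-in for the router's own hash function.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    return next_hops[int.from_bytes(digest[:8], "big") % len(next_hops)]


if __name__ == "__main__":
    lvs = ["lvs-1", "lvs-2", "lvs-3", "lvs-4"]
    flows = [("203.0.113.7", 40000 + i, "198.51.100.10", 80, "tcp") for i in range(1000)]
    before = {f: ecmp_next_hop(f, lvs) for f in flows}
    after = {f: ecmp_next_hop(f, lvs[:-1]) for f in flows}  # lvs-4 taken out of service
    moved = sum(before[f] != after[f] for f in flows)
    print(f"{moved} of {len(flows)} flows now reach a different LVS machine")
```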

Server Load Balancer best practice:

You can use session synchronization to prevent persistent connections from being affected by server failures within a cluster. However, for short-lived connections, or for connections that have not triggered the session synchronization rule (because the three-way handshake has not completed), server failures in the cluster may still affect user requests. To reduce the impact of such failures on user access, you can add a retry mechanism to the service logic.
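A minimal client-side retry sketch in Python follows, assuming that the failure surfaces as a `ConnectionError` or `TimeoutError` and that the request is idempotent (safe to send twice). The function name `call_with_retry` and its defaults are hypothetical. Exponential backoff with random jitter keeps many clients from retrying in lockstep after the same failure.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retry(
    request: Callable[[], T],
    attempts: int = 3,
    base_delay_s: float = 0.2,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call `request`, retrying transient failures with exponential backoff.

    Only use this for idempotent requests: a retried call may reach a
    different backend server after the original connection was lost.
    """
    for attempt in range(attempts):
        try:
            return request()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: sleep a random time in [0, base * 2**attempt]
            time.sleep(random.uniform(0, base_delay_s * 2 ** attempt))
    raise ValueError("attempts must be at least 1")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_request() -> str:
        # Simulates a request that fails twice, e.g. because the node holding
        # the connection failed before its session was synchronized.
        calls["n"] += 1
        if calls["n"] < 3:
            raise ConnectionResetError("connection reset by peer")
        return "200 OK"

    print(call_with_retry(flaky_request), "after", calls["n"], "attempts")
```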

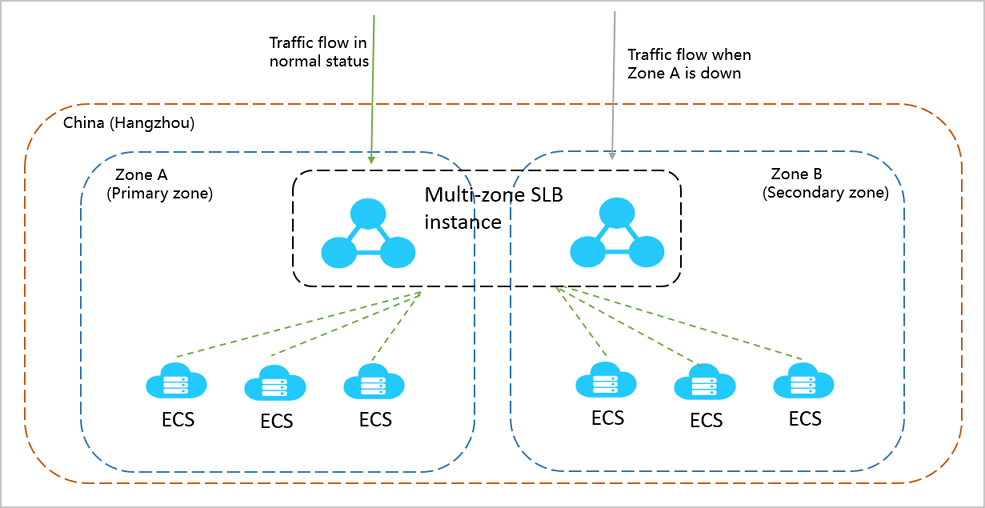

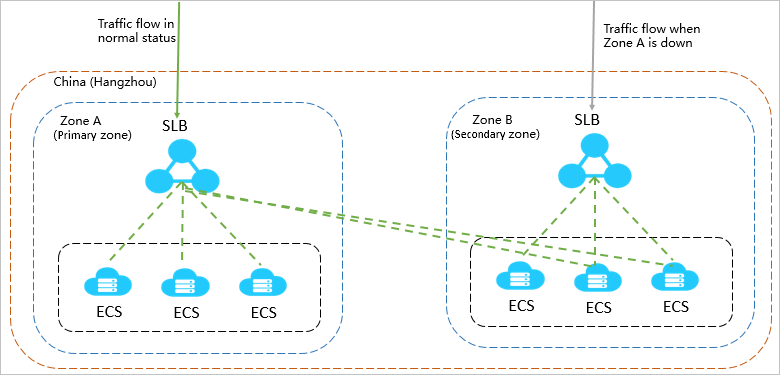

To provide more stable and reliable load balancing services, you can deploy Server Load Balancer instances across multiple zones in most regions to achieve cross-data-center disaster recovery. Specifically, you can deploy a Server Load Balancer instance in two zones within the same region, with one zone acting as the primary zone and the other as the secondary zone. If the primary zone suffers an outage, failover is triggered and requests are redirected to the servers in the secondary zone within approximately 30 seconds. After the primary zone is restored, traffic is automatically switched back to the servers in the primary zone.
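The primary/secondary behavior can be modeled as a small state machine. This Python sketch assumes that failover is declared after a fixed number of consecutive failed checks of the primary zone and that the first successful check switches traffic back; how SLB actually detects a zone outage is not described here, and the class `ZoneFailover` and the zone names are hypothetical. With an assumed 10-second check interval, three failures correspond roughly to the 30-second failover window mentioned above.

```python
class ZoneFailover:
    """Hypothetical primary/secondary zone selector for one SLB instance.

    Failover happens only after `failures_to_failover` consecutive failed
    checks of the primary zone; the first successful check switches back.
    """

    def __init__(self, primary: str, secondary: str, failures_to_failover: int = 3) -> None:
        self.primary = primary
        self.secondary = secondary
        self.failures_to_failover = failures_to_failover
        self.active = primary
        self._consecutive_failures = 0

    def observe(self, primary_healthy: bool) -> str:
        """Record one health check of the primary zone; return the serving zone."""
        if primary_healthy:
            self._consecutive_failures = 0
            self.active = self.primary  # normal operation, or switch back after recovery
        else:
            self._consecutive_failures += 1
            if self._consecutive_failures >= self.failures_to_failover:
                self.active = self.secondary  # primary outage confirmed: fail over
        return self.active


if __name__ == "__main__":
    slb = ZoneFailover("zone-a", "zone-b")
    checks = [True, False, False, False, False, True]  # one check every ~10 s (assumed)
    print([slb.observe(ok) for ok in checks])
    # ['zone-a', 'zone-a', 'zone-a', 'zone-b', 'zone-b', 'zone-a']
```

A production implementation would likely also require several successful checks before switching back, to avoid flapping between zones.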

Server Load Balancer best practice:

We recommend that you create Server Load Balancer instances in regions that support primary/secondary deployment for zone-disaster recovery.

You can choose the primary zone for your Server Load Balancer instance based on the distribution of your ECS instances: to minimize latency, select the zone where most of the ECS instances are located as the primary zone.

However, we recommend that you do not deploy all ECS instances in the primary zone. When you develop a failover solution, you must deploy several ECS instances in the secondary zone to ensure that requests can still be distributed to backend servers in the secondary zone for processing when the primary zone experiences downtime.

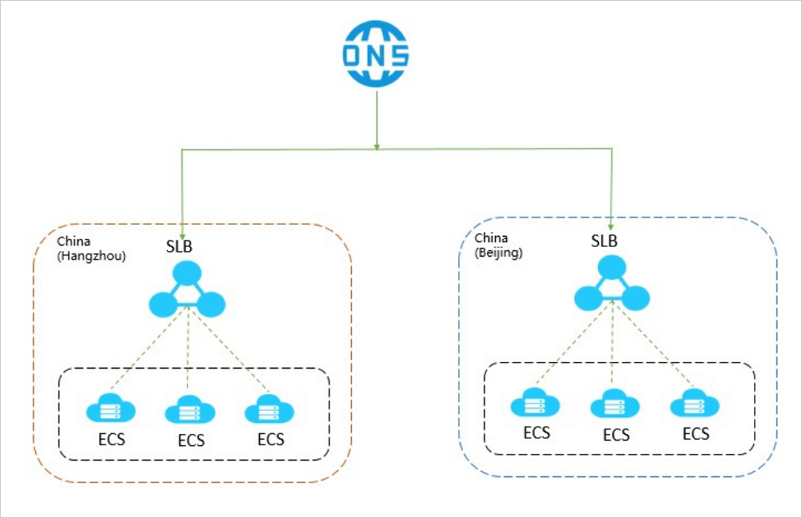

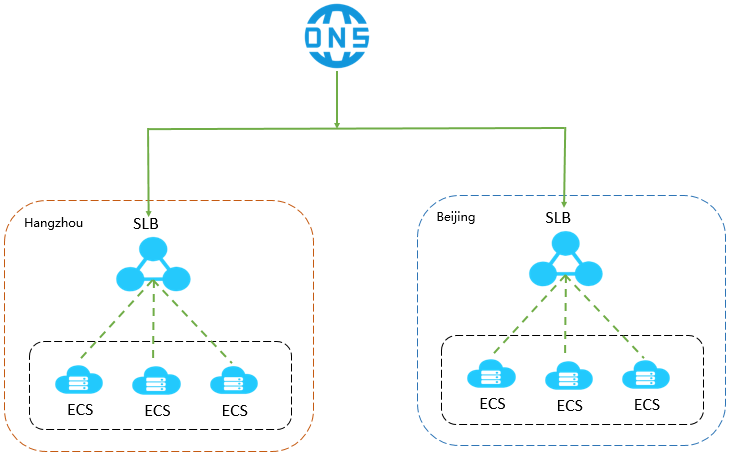

With only one Server Load Balancer instance, traffic distribution for your applications can still be compromised by network attacks or invalid Server Load Balancer configurations, because such events do not trigger failover between the primary zone and the secondary zone. As a result, load-balancing performance suffers. To avoid this situation, you can create multiple Server Load Balancer instances to form a global load-balancing solution and achieve cross-region backup and disaster recovery. You can also use these instances together with DNS to schedule requests and ensure service continuity.

Server Load Balancer best practice:

You can deploy Server Load Balancer instances and ECS instances in multiple zones within the same region or across different regions, and then use DNS to schedule requests.

Server Load Balancer supports Layer-4 load balancing of Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) traffic and Layer-7 load balancing of HTTP and HTTPS traffic.

Server Load Balancer forwards client requests to backend servers by using Server Load Balancer clusters and receives responses from backend servers over internal networks.

Alibaba Cloud provides Layer-4 (TCP and UDP) and Layer-7 (HTTP and HTTPS) load balancing.

Layer-4 Server Load Balancer runs in a cluster of LVS machines for higher availability, stability, and scalability of load balancing in abnormal cases.

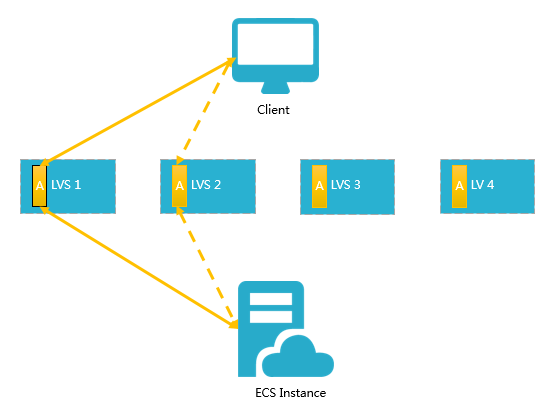

In an LVS cluster, each machine synchronizes sessions with the other machines via multicast packets. As shown in the figure, Session A is established on LVS1 and is synchronized to the other LVS machines after the client transfers three data packets to the server. Solid lines indicate currently active connections, while dotted lines indicate that session requests are sent to the other machines that are working normally if LVS1 fails or is under maintenance. In this way, you can perform hot updates, machine maintenance, and cluster maintenance without affecting business applications.
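The following Python sketch simulates the synchronization rule described above: a session is copied to peer machines once it has carried three packets, and a loop over peers stands in for one multicast message. The class `LvsNode` and its fields are hypothetical; real LVS machines keep connection state in the Linux kernel's IPVS module, not in Python dictionaries.

```python
from typing import Dict, List, Optional, Tuple

Flow = Tuple[str, int]  # (client IP, client port), simplified flow key


class LvsNode:
    """Hypothetical LVS machine that shares sessions with its peers.

    A session is pushed to the peers once it has carried `sync_after`
    packets; the loop over peers stands in for one multicast message.
    """

    def __init__(self, name: str, sync_after: int = 3) -> None:
        self.name = name
        self.sync_after = sync_after
        self.sessions: Dict[Flow, str] = {}  # flow -> backend server
        self.packet_counts: Dict[Flow, int] = {}
        self.peers: List["LvsNode"] = []

    def handle_packet(self, flow: Flow, backend_if_new: str) -> str:
        """Forward one packet, creating the session on first sight."""
        backend = self.sessions.setdefault(flow, backend_if_new)
        self.packet_counts[flow] = self.packet_counts.get(flow, 0) + 1
        if self.packet_counts[flow] == self.sync_after:
            for peer in self.peers:
                peer.sessions.setdefault(flow, backend)
        return backend

    def lookup(self, flow: Flow) -> Optional[str]:
        return self.sessions.get(flow)


if __name__ == "__main__":
    lvs1, lvs2 = LvsNode("lvs-1"), LvsNode("lvs-2")
    lvs1.peers, lvs2.peers = [lvs2], [lvs1]

    session_a = ("203.0.113.7", 50001)
    for _ in range(3):                      # three packets: session A is synchronized
        lvs1.handle_packet(session_a, "ecs-1")
    session_b = ("203.0.113.8", 50002)
    lvs1.handle_packet(session_b, "ecs-2")  # one packet: not yet synchronized

    # lvs-1 fails; traffic for both sessions now reaches lvs-2
    print(lvs2.lookup(session_a))  # ecs-1: the connection survives
    print(lvs2.lookup(session_b))  # None: this connection is affected
```

Session B shows the gap noted in the earlier best practice: a connection that fails before its session is synchronized cannot be taken over by another machine, which is why a client-side retry is still useful.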

Server Load Balancer (SLB) can be used to improve the availability and reliability of applications with high access traffic.

Balance loads of your applications

You can configure listening rules to distribute heavy traffic among ECS instances that are attached as backend servers to SLB instances. You can also use the session persistence feature to forward all of the requests from the same client to the same backend ECS instance to enhance access efficiency.
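One common way to implement session persistence at Layer 4 is to pin each client IP address to the backend it was first assigned, until the client has been idle for a timeout. This Python sketch assumes that approach; the class `StickyBalancer`, the `timeout_s` value, and the injectable clock are hypothetical and are not SLB configuration parameters. It also ignores backend health, which a real balancer would check before reusing a pinned server.

```python
import time
from itertools import cycle
from typing import Callable, Dict, List, Tuple


class StickyBalancer:
    """Round-robin balancer with source-IP session persistence (hypothetical).

    A client's first request is assigned round-robin; later requests from the
    same IP go to the same backend until `timeout_s` passes without traffic.
    """

    def __init__(self, backends: List[str], timeout_s: float = 1000.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._round_robin = cycle(backends)
        self._table: Dict[str, Tuple[str, float]] = {}  # client IP -> (backend, last seen)
        self.timeout_s = timeout_s
        self.clock = clock

    def route(self, client_ip: str) -> str:
        now = self.clock()
        entry = self._table.get(client_ip)
        if entry is not None and now - entry[1] < self.timeout_s:
            backend = entry[0]                 # persistent session still valid
        else:
            backend = next(self._round_robin)  # new or expired session
        self._table[client_ip] = (backend, now)  # refresh the persistence timer
        return backend


if __name__ == "__main__":
    slb = StickyBalancer(["ecs-1", "ecs-2", "ecs-3"])
    for ip in ["198.51.100.1", "198.51.100.2", "198.51.100.1", "198.51.100.3"]:
        print(ip, "->", slb.route(ip))
```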

Scale your applications

You can extend the service capability of your applications by adding or removing backend ECS instances to suit your business needs. Server Load Balancer can be used for both web servers and application servers.

Eliminate single points of failure (SPOFs)

You can attach multiple ECS instances to a Server Load Balancer instance. When an ECS instance malfunctions, Server Load Balancer automatically isolates this ECS instance and distributes inbound requests to other healthy ECS instances, ensuring that your applications continue to run properly.

Implement zone-disaster recovery (multi-zone disaster recovery)

To provide more stable and reliable load balancing services, Alibaba Cloud allows you to deploy Server Load Balancer instances across multiple zones in most regions for disaster recovery. Specifically, you can deploy a Server Load Balancer instance in two zones within the same region. One zone is the primary zone, while the other zone is the secondary zone. If the primary zone fails or becomes unavailable, the Server Load Balancer instance fails over to the secondary zone in about 30 seconds. When the primary zone recovers, the Server Load Balancer instance automatically switches back to the primary zone.

We recommend that you create a Server Load Balancer instance in a region that has multiple zones for zone-disaster recovery, plan the deployment of backend servers based on your business needs, and add at least one backend server in each zone to achieve the highest load balancing efficiency.

As shown in the following figure, ECS instances in different zones are attached to a single Server Load Balancer instance. In normal cases, the Server Load Balancer instance distributes inbound traffic to ECS instances both in the primary zone (Zone A) and in the secondary zone (Zone B). If Zone A fails, the Server Load Balancer instance distributes inbound traffic only to Zone B. This deployment mode helps avoid service interruptions caused by zone-level failures and reduce latency.

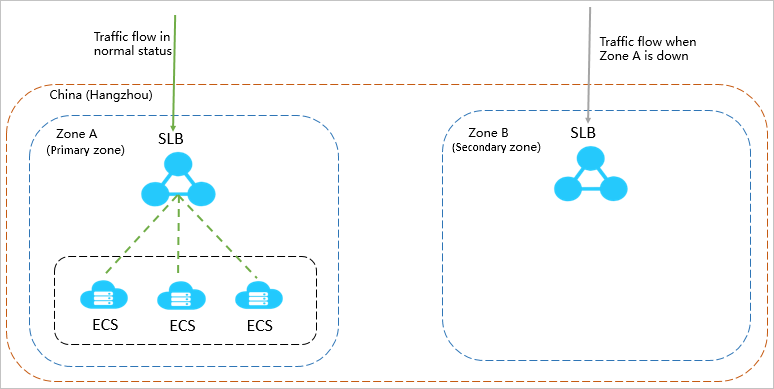

Assume that you deploy all ECS instances in the primary zone (Zone A) and no ECS instances in the secondary zone (Zone B) as shown in the following figure. If Zone A fails, your services will be interrupted because no ECS instances are available in Zone B. This deployment mode achieves low latency at the cost of high availability.
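The difference between the two deployment modes can be checked mechanically by listing the backends that remain after a zone fails. The helper names and zone names below are hypothetical; the second function encodes the recommendation to keep at least one backend server in each zone.

```python
from typing import Dict, Iterable, List


def serving_backends(backends_by_zone: Dict[str, List[str]],
                     failed_zones: Iterable[str]) -> List[str]:
    """Backends that can still receive traffic after the given zones fail."""
    failed = set(failed_zones)
    return [server
            for zone, servers in backends_by_zone.items() if zone not in failed
            for server in servers]


def survives_any_single_zone_failure(backends_by_zone: Dict[str, List[str]]) -> bool:
    """True if losing any one zone still leaves at least one backend."""
    return all(serving_backends(backends_by_zone, [zone]) for zone in backends_by_zone)


if __name__ == "__main__":
    cross_zone = {"zone-a": ["ecs-1", "ecs-2"], "zone-b": ["ecs-3"]}
    all_in_a = {"zone-a": ["ecs-1", "ecs-2", "ecs-3"], "zone-b": []}
    print(serving_backends(cross_zone, ["zone-a"]))  # ['ecs-3']
    print(serving_backends(all_in_a, ["zone-a"]))    # []
    print(survives_any_single_zone_failure(cross_zone),
          survives_any_single_zone_failure(all_in_a))  # True False
```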

Geo-disaster recovery

You can deploy Server Load Balancer instances in different regions and attach ECS instances from different zones within the same region to each Server Load Balancer instance. You can then use DNS to resolve domain names to the service addresses of Server Load Balancer instances in different regions for global load balancing. When a region becomes unavailable, you can temporarily stop resolving the domain name to that region's addresses without affecting user access.
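A simplified model of DNS-based scheduling across regions: the resolver answers with the SLB service addresses of the regions that are still enabled, putting the client's own region first. The region IDs, the documentation-range IP addresses, and the `resolve` function are illustrative assumptions, not a real DNS service API. In practice, resolvers and clients cache answers for the record's TTL, so disabling a region takes effect only as cached records expire.

```python
from typing import Dict, List, Set


def resolve(client_region: str, slb_address_by_region: Dict[str, str],
            disabled_regions: Set[str]) -> List[str]:
    """Hypothetical DNS answer: SLB addresses of enabled regions, nearest first."""
    enabled = [r for r in slb_address_by_region if r not in disabled_regions]
    # sorted() is stable: the client's own region moves to the front and the
    # remaining regions keep their configured order.
    enabled = sorted(enabled, key=lambda region: region != client_region)
    return [slb_address_by_region[r] for r in enabled]


if __name__ == "__main__":
    slbs = {"cn-hangzhou": "203.0.113.10", "ap-southeast-1": "198.51.100.20"}
    print(resolve("ap-southeast-1", slbs, set()))               # ['198.51.100.20', '203.0.113.10']
    print(resolve("ap-southeast-1", slbs, {"ap-southeast-1"}))  # ['203.0.113.10']
```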

Alibaba Cloud Server Load Balancer (SLB) distributes traffic among multiple instances to improve the service capabilities of your applications. You can use SLB to prevent single points of failure (SPOFs) and improve the availability and fault tolerance of your applications.

This article shares what I have learned about Alibaba Cloud Server Load Balancer (SLB) from Alibaba Cloud Academy. Although this is not a new topic to me, I took the ACA course to get my fundamentals right, which I believe is very important. I also learned a lot of new things, especially about Alibaba Cloud-specific technologies, and gained a deeper understanding of how Alibaba Cloud can help my organization increase its profit by reducing operating costs.

A Server Load Balancer is a hardware or virtual software appliance that distributes the application workload across an array of servers, ensuring application availability, enabling elastic scale-out of server resources, and supporting health management of backend servers and application systems.

Traditionally, we need a web server to provide and deliver services to our customers. Ideally, we would have one very powerful web server that can do anything we want, such as providing any service and serving as many customers as possible.

However, relying on only one web server raises two major concerns. The first is that every server has a capacity limit. If your business is booming and lots of new users visit your website, your website will eventually reach that limit and deliver a very unsatisfying experience to your users.

Second, a single web server is a single point of failure. For example, a power outage or network connection issue may affect your server. If that server goes down, your customers are completely cut off and you can no longer provide your service. You face this risk whenever you have only one web server, no matter how powerful it is.
