This article introduces the technical challenges brought by cloud technology to traditional enterprises, and explains in depth the best practices of cloud architecture. It was first published in Lingyun, a magazine jointly sponsored by Alibaba Cloud and CSDN's Programmer magazine.

Authors: Wang Yude and Zhang Wensheng

Cloud computing has received much attention since its birth as an innovative application model in the field of information technology. Thanks to features such as low costs, elasticity, ease of use, high reliability, and service on demand, cloud computing has been regarded as the core of next-generation information technological reform. Cloud computing has been actively embraced by many businesses, and is transforming industries such as the Internet, gaming, Internet of Things, and other emerging industries. Most enterprise users, however, are often restricted by traditional IT technical architecture and lack the motivation as well as the technical expertise for migration to the cloud.

Typically, the most important element in an enterprise is a database management system that can meet the needs for real-time transactions and analytics. Traditional stand-alone databases use a "scale-up" approach, which usually only supports storage and processing of several terabytes of data, far from being sufficient to satisfy actual needs.

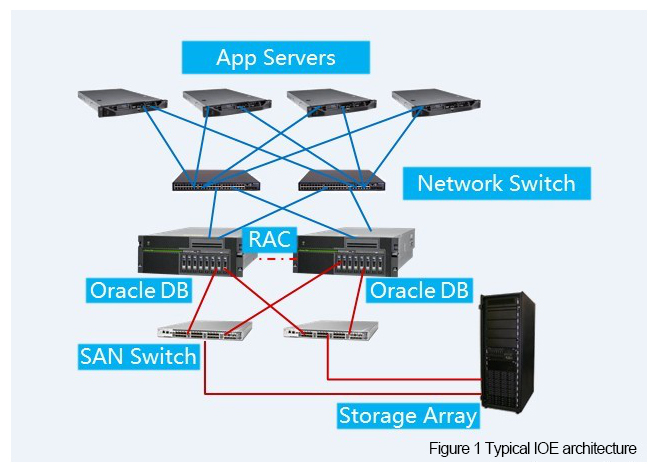

The online transaction processing (OLTP) cluster-based system is gradually becoming the default approach for achieving higher performance and larger data storage capacity. As shown in Figure 1, common enterprise database clusters such as Oracle RAC usually use the Share-Everything (Share-Disk) mode. Database servers share resources such as disks and caches.

When performance fails to meet requirements, database servers (usually minicomputers) have to be upgraded in terms of CPU, memory, and disk to improve the performance of single-node databases. Alternatively, the number of database server nodes can be increased, improving performance and overall system availability by running multiple nodes in parallel behind server load balancing. However, as the number of database server nodes increases, communication between nodes becomes a bottleneck, and data access control on each node is constrained by the consistency requirements of transaction processing. Actual case studies show that an RAC with more than four nodes is very rare.

In addition, according to the popular formulation of Moore's Law, processor performance doubles roughly every 18 months, while DRAM performance doubles only about once every 10 years, creating a widening gap between processor performance and memory performance. Although processor performance is rising rapidly, disk storage performance improves slowly because of the physical limitations of disks. Factors such as mechanical rotational speed and the seek time of the disk arm limit the IOPS of hard disks. There has been essentially no improvement in hard disk performance during the past 10 years; HDD rotation speed has been stuck between 7,200 and 15,000 RPM. HDD-based disk array storage is increasingly becoming a performance bottleneck for centralized storage architecture. Full-flash arrays, on the other hand, are limited by high costs and a short rewrite service life, and are far from meeting the requirements of large-scale commercial applications.
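As a rough illustration of the gap described above, the following sketch compounds the two doubling rates over a decade. The doubling periods are the ones quoted in the text; the function name and the 10-year horizon are assumptions chosen for illustration only.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return the performance multiple reached after `years` of steady doubling."""
    return 2 ** (years / doubling_period_years)


YEARS = 10
cpu_gain = growth_factor(YEARS, 1.5)    # processor: doubles every 18 months
dram_gain = growth_factor(YEARS, 10.0)  # DRAM: doubles every 10 years
gap = cpu_gain / dram_gain              # how far the processor pulls ahead

print(f"CPU x{cpu_gain:.0f}, DRAM x{dram_gain:.0f}, gap x{gap:.0f}")
# prints: CPU x102, DRAM x2, gap x51
```

Under these assumptions the processor ends the decade roughly 50 times further ahead of memory than it started, which is why the text treats memory and storage, not the CPU, as the limiting factor.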

Therefore, IoE's centralized storage (Share-Everything) approach is costly and has restrictions in performance, capacity and scalability. The high concurrency and big data processing requirements brought about by the Internet, the rapid development of x86 and open source database technology, and the increasing maturity of NoSQL, Hadoop and other distributed system technologies drive the evolution from the centralized scale-up system architecture to the distributed scale-out architecture.

Gartner's IT experts placed Web-scale IT as one of the top 10 IT trends in 2015 that would have a significant impact on the industry over the next three years. It is predicted that more and more companies will adopt Web-scale IT by building apps and architecture similar to those of Amazon, Google, and Facebook. This will enable Web-scale IT to become a commercial hardware platform, introducing new development models, cloud optimization methods, and software-defined methods to existing infrastructure. Development-operation collaboration models such as DevOps are the first step towards the development of Web-scale IT. Despite the potential benefits of Web-scale IT, traditional IT systems still face technical constraints in the following aspects.

■ Performance

User experience is an important factor influencing conversion rate. According to statistics, if a website fails to load within four seconds, around 60% of customers will be lost. Poor user experience will cause customers to abandon the service or purchase services from competitors. Therefore, it is vital to work out a solution that improves user experience by ensuring low-latency responses in highly concurrent access scenarios.

■ Scalability

The access behavior of Internet/mobile Internet users is dynamic. Traffic at hot spots may surge to more than 10 times the average. It is vital to work out a solution that can respond quickly to the resource demands of bursty traffic and provide a consistent user experience.

■ Fault tolerance and maximum availability

Internet services are deployed on a distributed computing architecture built from a large number of x86 servers and general-purpose network devices. Even though these devices are designed to be reliable, the probability that some device malfunctions is high due to the sheer number of devices. In addition to mechanical faults, software bugs are also inevitable.

How should we automate the handling when hardware malfunctions?

How should we perform systematic damage control?

How should we limit traffic to the server and client based on standalone server QPS and concurrency to achieve dynamic traffic allocation?

How can we identify chain-dependent risks between services and important functional point dependencies of the system?

How can we assess the maximum possible risk points, detect the maximum availability faults of a distributed system, isolate faulty modules, and implement rollback for unfinished transactions?

How do we ensure that core functionality remains available by sacrificing non-critical features through graceful degradation?
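Two of the questions above, limiting traffic by QPS and falling back to a reduced response, can be sketched together. The following is a minimal token-bucket limiter, one common technique for this purpose and not necessarily the one used at Taobao. The class name, the `handle` function, and the injectable `clock` parameter are all hypothetical names introduced for illustration.

```python
import time


class TokenBucket:
    """Allow about `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity      # start with a full bucket
        self.clock = clock          # injectable so the limiter can be tested
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to the elapsed time, capped at the capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def handle(limiter: TokenBucket) -> str:
    """Serve the full page when under the limit, a degraded page otherwise."""
    # "degraded" stands for a response that keeps core features only.
    return "full" if limiter.allow() else "degraded"
```

A per-server limiter like this would typically be configured from the measured standalone QPS of that server, so that excess requests receive a cheaper response instead of overloading the node.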

■ Capacity management

As the business expands, system performance will inevitably reach a bottleneck. How can we assess and expand capacity more scientifically, and automate the calculation of the relationship between front-end requests and the number of back-end servers to predict hardware and software capacity requirements?
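The relationship between front-end requests and back-end servers can be approximated with simple arithmetic. The sketch below is one possible first-order estimate, not a complete capacity model; the function name, the 70% target utilization, the N+1 spare, and the sample traffic figures are all illustrative assumptions.

```python
import math


def servers_needed(peak_qps: float, qps_per_server: float,
                   target_utilization: float = 0.7, redundancy: int = 1) -> int:
    """Estimate the number of back-end servers for a given front-end peak load.

    target_utilization keeps headroom on each server;
    redundancy adds spare nodes (N+1 by default).
    """
    if qps_per_server <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("invalid capacity parameters")
    usable_qps = qps_per_server * target_utilization
    return math.ceil(peak_qps / usable_qps) + redundancy


# Assumed figures: 2,000 QPS on average, a 10x hot-spot surge,
# and servers benchmarked at 1,500 QPS each.
print(servers_needed(peak_qps=2000 * 10, qps_per_server=1500))  # prints 21
```

In practice the per-server figure would come from load testing, and the estimate would be recomputed as traffic and software change.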

■ Service-orientation

How can we abstract business logic functionality into atomic services to encapsulate and assemble services, and deploy services in a distributed system environment, increasing business flexibility? How can we clarify the relationships between these services from a business perspective? How can we trace and present single service call chains in a large-scale distributed system to discover service call exceptions in a timely manner?

■ Cost

As the system's performance targets continue to evolve, how can we ensure that specific access traffic requirements are met at minimum cost?

■ Automated O&M management

Evolving large-scale systems require constant maintenance, rapid iteration, and optimization. How can we deal with thousands or even tens of thousands of servers for O&M? How can we use automation tools and processes to manage large-scale hardware and software clusters and deploy, upgrade, expand, and maintain systems quickly?

Since its inception in 2003, Taobao has witnessed fast business development at a growth rate of almost 100% every year. In the beginning, Taobao selected the then-popular LAMP architecture for a quicker launch to seize the market, with PHP as the website development language, Linux as the operating system, Apache as the web server, and MySQL as the database. Taobao went online in less than three months. At that time, the entire website had only around 10 app servers, and the MySQL database used read/write splitting, deployed as one master database and two slave databases.

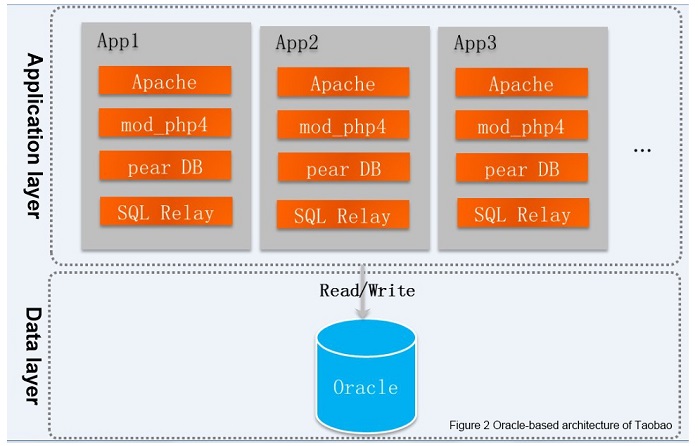

In 2004, driven by the growth of Taobao, we drew on the experience of enterprise solutions used by telecom operators and banks to transform the LAMP architecture into an architecture based on Oracle databases, IBM minicomputers, and EMC storage (see Figure 2). Although the solution cost was high, the performance was superb. However, as website traffic increased, the system became overwhelmed. What worried us most at that point was how to design a website system architecture that could cope with the rise in website traffic and transaction volume. How should we select the database? How should we select the cache? How should we build the business system?

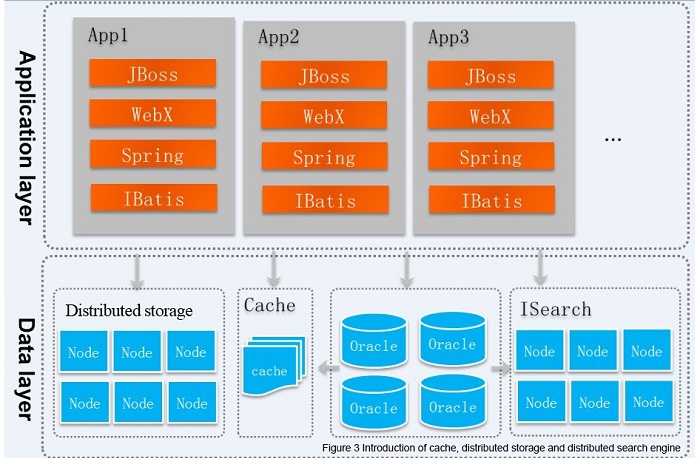

We eventually referred to eBay's Internet architecture and designed a Java technical solution using many Java open-source products. For example, we chose the then-popular JBoss as the app server and used the open-source IoC container Spring to manage business classes. We also encapsulated the database access tool IBatis as the mapping tool between database tables and Java objects. For product search, however, we used our independently developed ISearch search engine to replace searches in Oracle databases, reducing the pressure on database servers. The implementation was relatively simple - we dumped the full data from the Oracle minicomputer every night and built ISearch indexes. Back then, this was feasible since the number of products was not large and a server with an ordinary configuration could basically store all the indexes. We did not split the data and built a peer-to-peer cluster directly.

From 2006 onwards, Taobao began to build its own CDN nodes to improve user experience. Because Taobao's traffic mainly comes from a variety of product images, product descriptions, and other static data, self-built CDN nodes can speed up access and improve the website user experience by bringing these resources closer to users.

In 2007, the annual transaction volume on Taobao exceeded 40 billion yuan, roughly 100 million yuan per day on average, with more than 1 million transactions performed every day. One major problem at the time was the very high traffic to systems such as the product details system. If access requests had been sent to the database directly, the database would have been under very high pressure. For example, every request to a page that shows user information involves querying the buyer information, the seller information, the buyer credit information, and the seller service star rating. At that time, Taobao used the distributed cache TDBM (predecessor of Tair) to cache this hot static data in memory to improve access performance. In addition, we deployed our independently developed distributed file system TFS on multiple x86 servers to replace commercial NAS storage devices. This storage system was used for storing various files for Taobao, such as product photos, product descriptions, and transaction snapshots. Using this method, we were able to cut costs and improve overall system capacity and performance while achieving higher scalability. Around 200 TFS servers were launched in the first phase. We also changed the ISearch search engine to a distributed architecture to support horizontal expansion, with 48 nodes deployed. Figure 3 illustrates this architectural approach.

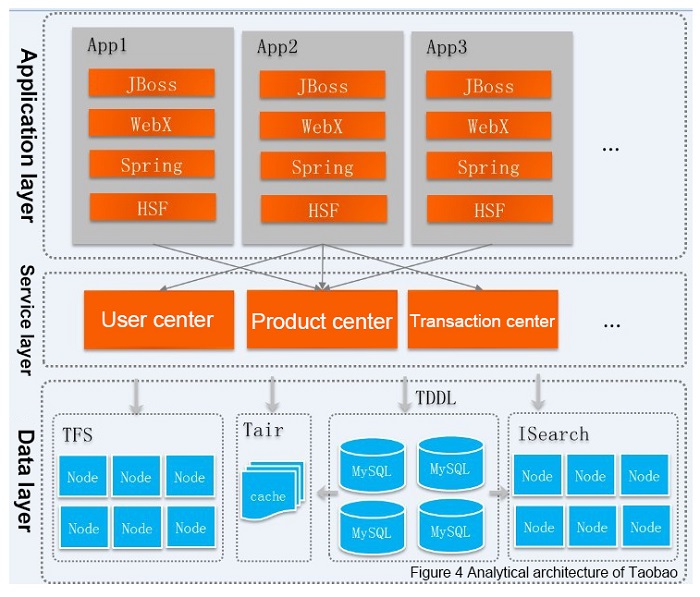

In early 2008, we split the system by user domain, product domain, transaction domain, shop domain, and other business domains to relieve the bottlenecks of the centralized Oracle database architecture (connection limits and I/O performance). We established more than 20 business centers, including a product center, user center, and transaction center. All systems with user access demands had to use the remote interfaces provided by the business centers and were not allowed to access the underlying MySQL databases directly. Service interfaces of business centers were called through HSF remote communication, and calls between business systems were made asynchronously through the Notify message middleware. Figure 4 shows the distributed architecture of Taobao.

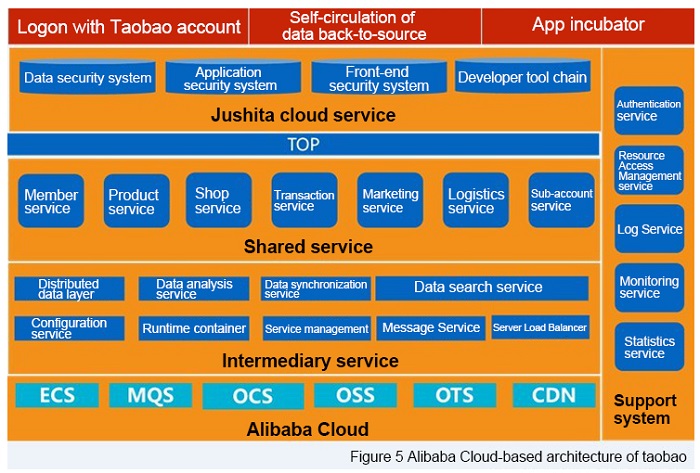

In 2010, Taobao focused on developing a unified architecture system. Taobao considered requirements from an overall system level, such as development efficiency, O&M standardization, performance, scalability, availability, and cost requirements. Taobao adopted the Alibaba Cloud computing platform for a more uniform underlying infrastructure (see Figure 5), and used Server Load Balancer, ECS, RDS, OSS, CDN, and other Alibaba Cloud computing services. Leveraging the high availability features of our services, Alibaba Cloud has achieved dual-data-room disaster tolerance and unitized deployment of remote data rooms, providing stable, efficient, and easy-to-maintain infrastructure support for Taobao.

The following technical challenges emerged during the transition from the IOE architecture to the cloud computing platform technical architecture.

■ Availability

Can a cloud computing platform with a distributed architecture based on PC servers achieve high availability after dropping high-end storage and the high-redundancy mechanisms of minicomputers?

■ Consistency

Oracle relies on RAC and shared storage to achieve physical-level consistency. Can RDS for MySQL achieve the same level of consistency?

■ High performance

High-end storage boasts high I/O capability. Can RDS based on PC servers provide the same or even higher I/O processing capability? Is the performance of MySQL and Oracle the same for SQL processing?

■ Scalability

How should we split the business logic and achieve service-orientation? How many databases and tables should the data be split to, and in which dimension? And how should we perform the secondary split to facilitate the operation?

Based on the Alibaba Cloud computing platform, we adopted various technical best practices, including stateless apps, effective use of caches (browser cache, reverse proxy cache, page cache, local page cache, object cache, and read/write splitting), service atomization, database segmentation, asynchronous resolution of performance problems, minimization of transaction units, and appropriate waivers of consistency. We also used automated monitoring and O&M measures, including monitoring and early warning, unified configuration management, basic server monitoring, URL monitoring, network monitoring, inter-module call monitoring, intelligent monitoring analysis, an integrated fault management platform, and capacity management. These solutions helped us solve the above problems by achieving higher scalability, lower costs, and higher performance and availability for the entire system.

Taobao's technical architecture evolves along with the gradual development of businesses, accumulating many valuable architectural best practices during the process. Most enterprise customers can select appropriate technical architecture based on their own business requirements to achieve a networked design for the overall IT system. The cloud architecture in different application scenarios includes Network Attached Storage, app services, OLTP database, and OLAP database.

For file storage, you can directly replace the EMC storage with OSS to store massive amounts of data files. OSS offers a storage capacity of up to 40 PB. Because OSS uses a distributed storage mode, it can improve data access performance through parallel reading and writing across multiple nodes. In particular, you can split large files into blocks for concurrent transmission and storage through Multipart Upload to achieve high performance.
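The idea behind splitting a large file into blocks and transmitting them concurrently can be sketched without any storage SDK. In the example below, `fake_upload_part` is a hypothetical stand-in for a real storage client call, and the part size and worker count are arbitrary assumptions; the sketch shows only the splitting and parallel dispatch, not the actual OSS Multipart Upload API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def split_into_parts(total_size: int, part_size: int) -> List[Tuple[int, int, int]]:
    """Return (part_number, offset, length) tuples that cover total_size bytes."""
    if part_size <= 0:
        raise ValueError("part_size must be positive")
    parts = []
    offset, number = 0, 1
    while offset < total_size:
        length = min(part_size, total_size - offset)  # last part may be shorter
        parts.append((number, offset, length))
        offset += length
        number += 1
    return parts


def upload_concurrently(data: bytes, part_size: int,
                        upload_part: Callable[[int, bytes], str],
                        workers: int = 4) -> List[Tuple[int, str]]:
    """Upload all parts in parallel; return (part_number, receipt) in part order."""
    parts = split_into_parts(len(data), part_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [(number, pool.submit(upload_part, number, data[offset:offset + length]))
                   for number, offset, length in parts]
        # Collect results in submission order so the receipts stay sorted.
        return [(number, future.result()) for number, future in futures]


def fake_upload_part(part_number: int, chunk: bytes) -> str:
    """Hypothetical uploader: only reports which part it received and its size."""
    return f"part-{part_number}:{len(chunk)}B"


print(upload_concurrently(b"x" * 25, part_size=10, upload_part=fake_upload_part))
# prints: [(1, 'part-1:10B'), (2, 'part-2:10B'), (3, 'part-3:5B')]
```

With a real client, the receipts would be whatever the service returns per part, and a final call would ask the service to assemble the parts into one object.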

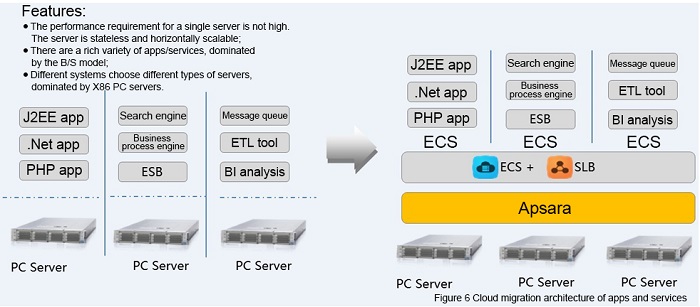

For app services, you can replace IBM minicomputers with combinations of Server Load Balancer + multiple ECS instances (see Figure 6). You can also deploy your services directly based on ACE, ONS, OpenSearch, and other Alibaba Cloud middleware cloud services.

OLTP app migration is relatively complex. Currently, Alibaba Cloud RDS instances support a maximum configuration of 48 GB of memory, 14,000 IOPS, and 1 TB of storage capacity (SSD storage), and support MySQL and SQL Server. Used as a single database server, this configuration can meet database app requirements in many scenarios and can directly replace the IBM minicomputer + Oracle database + EMC storage architecture in most scenarios.

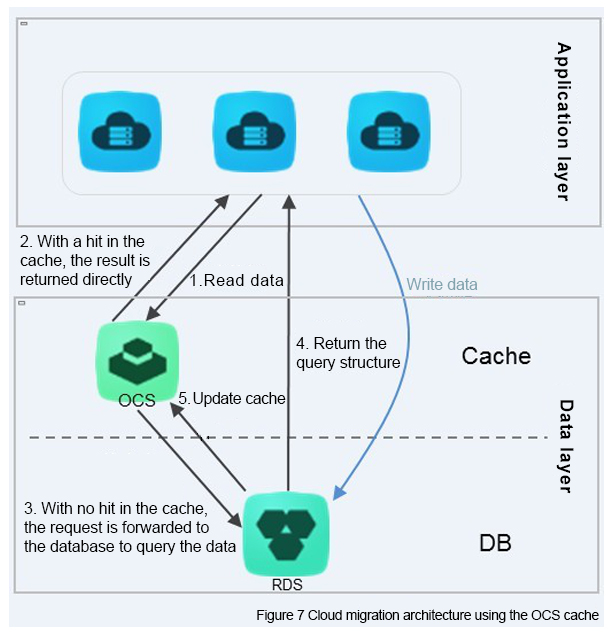

For apps with higher performance requirements, you can consider introducing Open Cache Service (OCS) and loading part of the query data into the distributed cache. This reduces the number of RDS queries, improves the system's query concurrency, and speeds up responses, as shown in Figure 7.
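One common way to put a cache in front of a database is the cache-aside pattern: read from the cache first, fall back to the database on a miss, and invalidate on writes. The sketch below uses an in-process dictionary as a stand-in for a distributed cache; the class name, the `db_queries` counter, and the sample user record are hypothetical and serve only to show the reduction in database queries.

```python
from typing import Callable, Dict, Optional


class CacheAside:
    """Cache-aside lookup: try the cache first, query the database on a miss."""

    def __init__(self, load_from_db: Callable[[str], Optional[dict]]):
        self._cache: Dict[str, dict] = {}   # stand-in for a distributed cache
        self._load_from_db = load_from_db
        self.db_queries = 0                 # counts how often the database is hit

    def get(self, key: str) -> Optional[dict]:
        if key in self._cache:
            return self._cache[key]
        self.db_queries += 1
        row = self._load_from_db(key)
        if row is not None:
            self._cache[key] = row          # populate the cache for later reads
        return row

    def invalidate(self, key: str) -> None:
        """Call after a write so the next read reloads fresh data."""
        self._cache.pop(key, None)


# Assumed sample data; a dict lookup plays the role of the database query.
users = {"u1": {"name": "buyer", "credit": 5}}
store = CacheAside(users.get)
store.get("u1")
store.get("u1")
print(store.db_queries)  # prints 1: the second read was served from the cache
```

A real deployment would also set expiry times on cached entries, since explicit invalidation alone can miss updates made by other writers.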

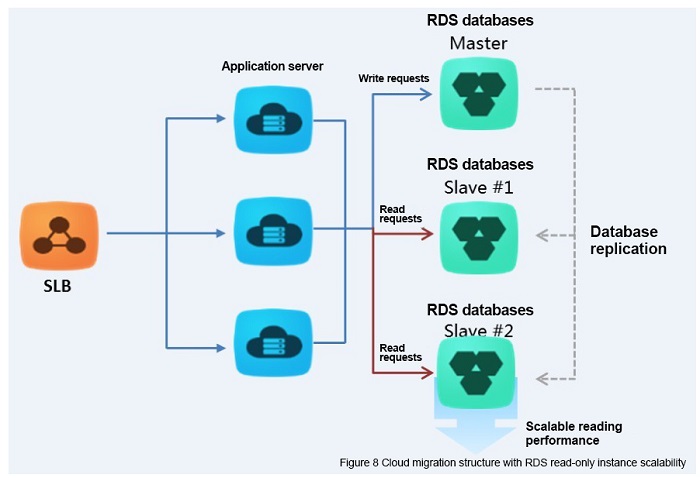

For scenarios where read requests far exceed write requests, you can consider using multiple RDS databases and implementing read/write splitting through a distributed structure in which write transactions mainly occur in the primary database and read requests access the backup databases. You can also add read databases on demand to improve overall request performance. Figure 8 shows the cloud architecture with read-only instance scalability.
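Read/write splitting is often implemented as a thin routing layer in front of the database connections. The following is a minimal sketch under simplifying assumptions: statements are classified only by their first keyword, replicas are chosen round-robin, and the instance names are placeholders. The class and method names are hypothetical.

```python
import itertools
from typing import List


class ReadWriteRouter:
    """Send writes to the primary and spread plain reads across read replicas."""

    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        # Fall back to the primary if no replica is configured.
        self._replicas = itertools.cycle(replicas or [primary])

    def route(self, sql: str, in_transaction: bool = False) -> str:
        words = sql.split(None, 1)
        verb = words[0].upper() if words else ""
        # Only plain SELECTs outside a transaction are safe to send to a replica;
        # everything else (writes, DDL, transactional reads) goes to the primary.
        if verb == "SELECT" and not in_transaction:
            return next(self._replicas)
        return self.primary


router = ReadWriteRouter("primary", ["ro-1", "ro-2"])
print(router.route("UPDATE items SET price = 9 WHERE id = 1"))  # prints primary
print(router.route("SELECT * FROM items WHERE id = 1"))         # prints ro-1
```

One design consideration: with asynchronous replication, a read issued right after a write may not yet see that write on a replica, so reads that must be current are usually routed to the primary as well.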

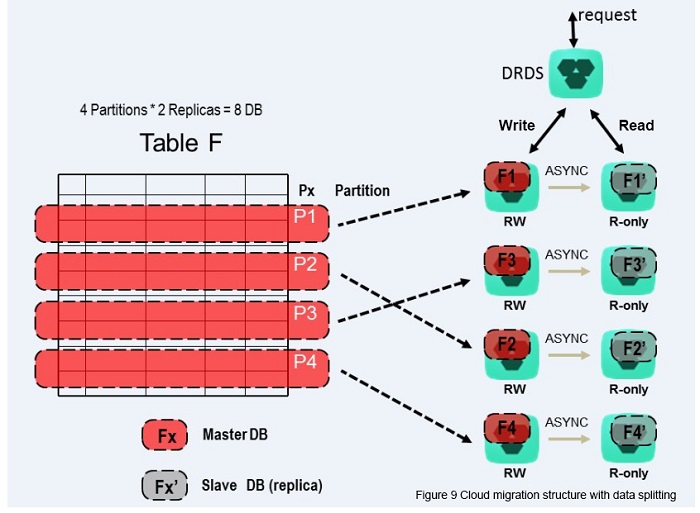

For database tables with a large data size, you can split them horizontally to distribute the data on multiple RDS instances and improve the performance and capacity through distributed database operations in parallel. Figure 9 shows the cloud architecture with data splitting.
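Horizontal splitting needs a rule that maps each row to one instance. A simple and widely used rule is hashing the sharding key modulo the number of instances, sketched below. The function names and instance names are hypothetical, and the choice of `user_id`-style keys is an assumption; the article does not state which splitting dimension or hash was used.

```python
import zlib
from typing import Dict, List


def shard_for(key: str, shard_count: int) -> int:
    """Map a sharding key to a shard index using a stable hash."""
    # zlib.crc32 gives the same value in every process, unlike the built-in hash().
    return zlib.crc32(key.encode("utf-8")) % shard_count


def group_by_shard(keys: List[str], instances: List[str]) -> Dict[str, List[str]]:
    """Group keys by owning instance so each instance can be queried in parallel."""
    plan: Dict[str, List[str]] = {name: [] for name in instances}
    for key in keys:
        plan[instances[shard_for(key, len(instances))]].append(key)
    return plan


# Assumed instance names and keys, for illustration only.
instances = ["rds-0", "rds-1", "rds-2", "rds-3"]
plan = group_by_shard([f"user{i}" for i in range(100)], instances)
for name, owned in plan.items():
    print(name, len(owned))
```

Plain modulo hashing moves most keys when the number of instances changes, which is one reason the later "secondary split" has to be planned up front, for example by starting with more logical shards than physical instances.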

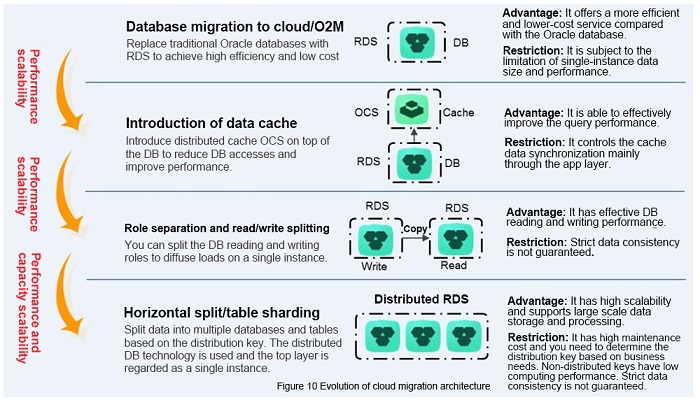

In general, through migration to RDS, the introduction of data caching, database- and table-based data splitting, read/write splitting, and other approaches, the original IOE architecture can be replaced with a scale-out approach that achieves better performance and scalability. Figure 10 shows the evolution process of cloud architecture.

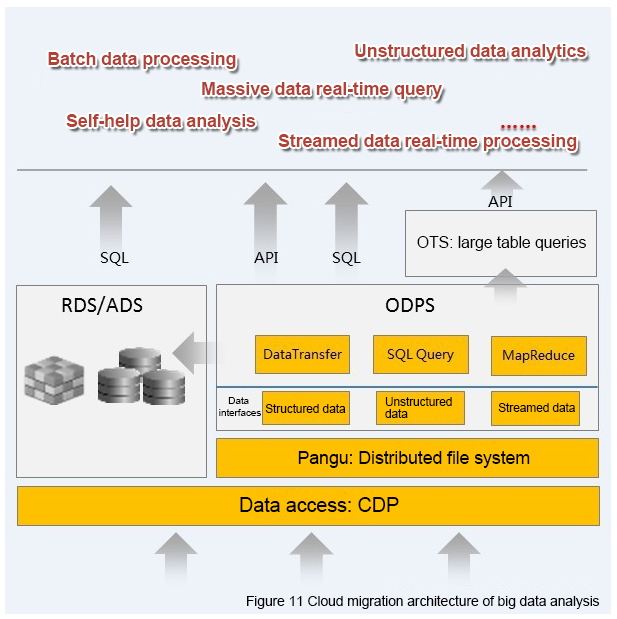

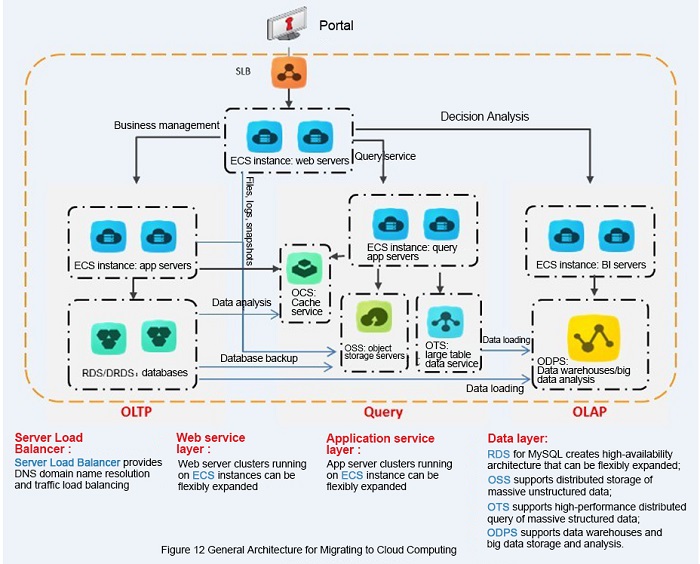

For OLAP apps, you can adopt ODPS + OTS + RDS/ADS solutions in place of the minicomputer + Oracle DB + OLAP + RAC + EMC storage solution, as shown in Figure 11. The universal cloud architecture solution is shown in Figure 12. You are advised to perform analysis and make a proper choice based on your needs to finalize a cloud migration scheme for your specific business system.

Different cloud architecture solutions enable you to migrate traditional IT systems to the cloud based on the requirements of different implementation scenarios. By following the best practices for cloud product architecture, you can experience the full potential of Alibaba Cloud product portfolios. Alibaba Cloud products and services help your organization achieve an elastic, low-cost, stable, secure, and easy-to-use IT architecture.

