Abstract: Nowadays, more and more businesses are incorporating big data analysis to improve competitiveness. For many enterprises, data analysis is of critical importance, and is necessary for marketing and product optimization. This article mainly describes some Hadoop processing scenarios and focuses on some best practices of Hadoop on the cloud.

This article is based on Alibaba Cloud E-MapReduce and the broader Alibaba Cloud platform.

This article does not cover every scenario; it focuses on the most important ones. Each scenario is described only briefly, and in practice a service system typically combines several of them.

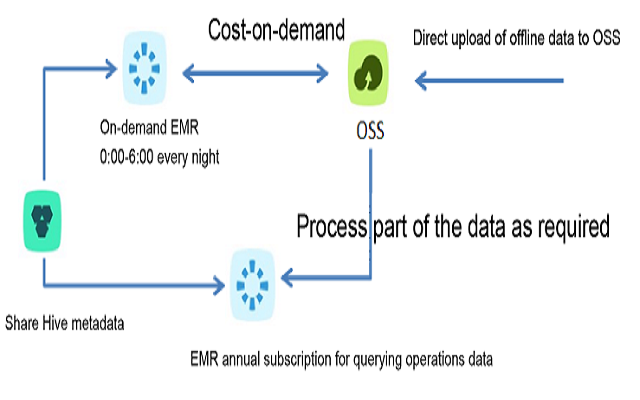

• Data can be divided into cold data and hot data. We generally recommend storing cold data in OSS and hot data in local HDFS.

• To reduce costs, create clusters on demand to run jobs between 00:00 and 06:00, and release them when they are no longer needed. ECS load tends to be lower during these hours, so large clusters are usually easier to obtain then.
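The two guidelines above can be expressed as simple policy checks. This is a minimal sketch, not an E-MapReduce feature: the 30-day cold threshold and the function names `choose_tier` and `in_batch_window` are assumptions, and actually moving data between OSS and HDFS or creating and releasing clusters is left out.

```python
from datetime import datetime, time, timedelta

# Assumption: data not accessed for 30 days counts as cold (tune per workload).
COLD_AFTER = timedelta(days=30)

# Batch window from the guideline: temporary clusters run between 00:00 and 06:00.
WINDOW_START = time(0, 0)
WINDOW_END = time(6, 0)


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Return "oss" for cold data and "hdfs" for hot data."""
    return "oss" if now - last_access >= COLD_AFTER else "hdfs"


def in_batch_window(t: datetime) -> bool:
    """True if t falls in [00:00, 06:00), when a temporary cluster may run."""
    return WINDOW_START <= t.time() < WINDOW_END


now = datetime(2024, 1, 31, 12, 0)
print(choose_tier(datetime(2024, 1, 1), now))   # oss
print(choose_tier(datetime(2024, 1, 30), now))  # hdfs
print(in_batch_window(datetime(2024, 1, 31, 3, 15)))  # True
```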

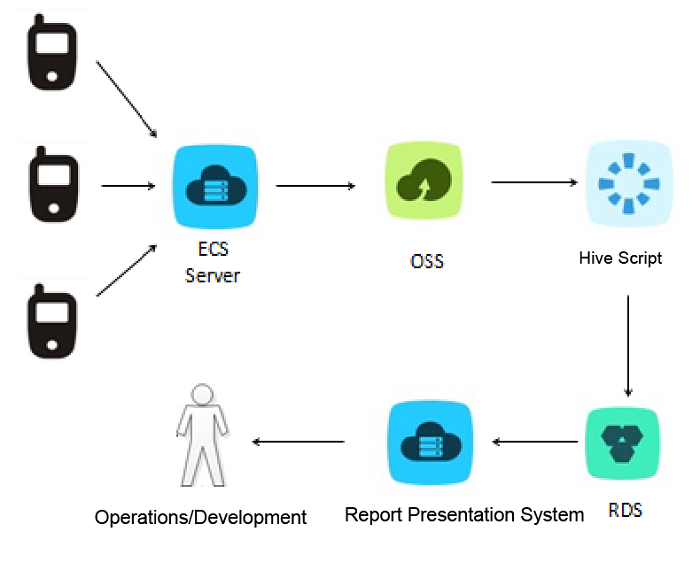

Live video streaming is extremely popular today, and live streams generate large amounts of user behavior data, such as page browsing records and button click records.

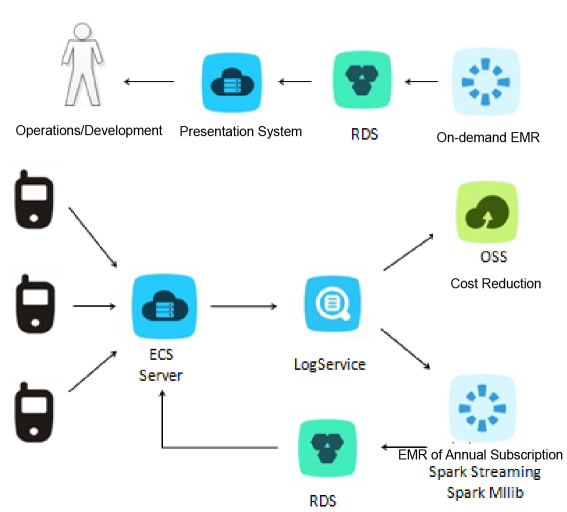

In this scenario, the servers store the data in OSS. E-MapReduce runs a Hive script to analyze the data (for example, to generate PV and UV statistics), the access records for each link are stored in RDS, and the report system presents the results to operations personnel.
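The PV and UV statistics in this scenario would normally come from the Hive script, conceptually a GROUP BY on the link with a count of all accesses and a count of distinct users. The sketch below shows the same logic in plain Python, assuming each access record is a hypothetical `(user_id, link)` pair; it is only meant to make the two definitions concrete.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def pv_uv(records: Iterable[Tuple[str, str]]) -> Dict[str, Tuple[int, int]]:
    """Compute (PV, UV) per link from (user_id, link) access records.

    PV counts every access; UV counts distinct users.
    """
    pv: Dict[str, int] = defaultdict(int)
    visitors: Dict[str, Set[str]] = defaultdict(set)
    for user_id, link in records:
        pv[link] += 1
        visitors[link].add(user_id)
    return {link: (pv[link], len(visitors[link])) for link in pv}


records = [("u1", "/live/1"), ("u2", "/live/1"), ("u1", "/live/1"), ("u3", "/live/2")]
print(pv_uv(records))  # {'/live/1': (3, 2), '/live/2': (1, 1)}
```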

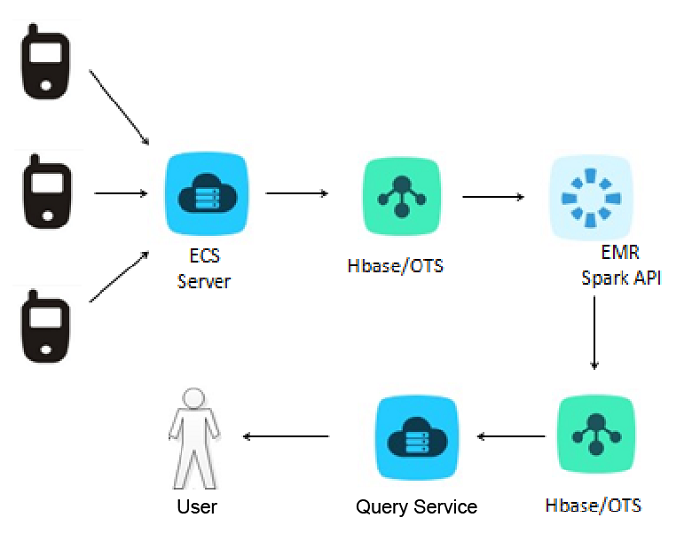

Online data can be collected continuously and analyzed offline. For example, a transportation app can upload a vehicle's physical indicators, including speed, engine power, and battery level, to HBase in real time, and Hive, MapReduce, or Spark can then analyze these indicators offline. The analysis, performed by period and by city, produces metrics such as average mileage, average speed, miles per gallon, and average idle time. The results are stored in HBase, where vehicle manufacturers can use them. In addition, metrics such as the number and proportion of vehicles on the road and driver behavior can be used to analyze traffic conditions.
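A rough sketch of the per-city, per-period aggregation follows. The `Reading` fields, the meaning of `idle_minutes`, and the choice to average speed over readings but idle time over vehicles are assumptions for illustration; in the scenario above, Hive, MapReduce, or Spark would run the equivalent aggregation over the data in HBase.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Reading:
    vehicle_id: str
    city: str
    period: str          # time bucket, e.g. an hour such as "2024-05-01T08"
    speed_kmh: float
    idle_minutes: float  # idle time reported since the vehicle's previous reading


def aggregate(readings: List[Reading]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Aggregate readings per (city, period)."""
    acc = defaultdict(lambda: {"speed": 0.0, "idle": 0.0, "n": 0, "vehicles": set()})
    for r in readings:
        a = acc[(r.city, r.period)]
        a["speed"] += r.speed_kmh
        a["idle"] += r.idle_minutes
        a["n"] += 1
        a["vehicles"].add(r.vehicle_id)
    return {
        key: {
            "avg_speed_kmh": a["speed"] / a["n"],                # mean over readings
            "avg_idle_minutes": a["idle"] / len(a["vehicles"]),  # mean per vehicle
            "vehicles_on_road": len(a["vehicles"]),
        }
        for key, a in acc.items()
    }


readings = [
    Reading("v1", "Hangzhou", "08", 40, 2),
    Reading("v1", "Hangzhou", "08", 60, 0),
    Reading("v2", "Hangzhou", "08", 50, 4),
    Reading("v3", "Beijing", "08", 30, 1),
]
print(aggregate(readings)[("Hangzhou", "08")])
```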

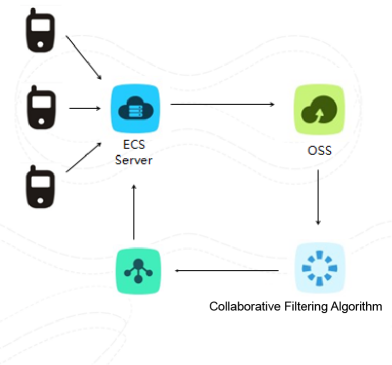

When users stream a video online, they perform operations such as liking, saving, and sharing. Users who perform the same operation on the same video are treated as related, and these associations are used to build a model. When another user watches that video, the system recommends videos that the related users engaged with. This process is based on collaborative filtering.

A Spark MLlib job analyzes the user logs stored in OSS and stores the results in RDS.
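Spark MLlib's built-in collaborative filtering uses alternating least squares (ALS). The sketch below swaps in a simpler item co-occurrence approach that mirrors the description above: videos that the same users acted on are counted as related, and a viewer is shown the most related videos they have not seen yet. The input format (one operation type, such as likes, as `(user_id, video_id)` pairs) and the function names are assumptions.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, Iterable, List, Set, Tuple


def build_cooccurrence(interactions: Iterable[Tuple[str, str]]) -> Dict[str, Counter]:
    """Count, for each pair of videos, how many users acted on both."""
    videos_by_user: Dict[str, Set[str]] = defaultdict(set)
    for user, video in interactions:
        videos_by_user[user].add(video)
    co: Dict[str, Counter] = defaultdict(Counter)
    for videos in videos_by_user.values():
        for a, b in combinations(sorted(videos), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(co: Dict[str, Counter], watched: str, seen: Set[str], k: int = 3) -> List[str]:
    """Videos most often co-liked with `watched`, excluding ones already seen."""
    ranked = sorted(co.get(watched, Counter()).items(), key=lambda kv: (-kv[1], kv[0]))
    return [video for video, _ in ranked if video not in seen][:k]


likes = [("u1", "A"), ("u1", "B"), ("u2", "A"), ("u2", "B"), ("u2", "C"), ("u3", "A"), ("u3", "C")]
co = build_cooccurrence(likes)
print(recommend(co, "A", seen={"A"}))  # ['B', 'C']
```

Counting pairs per user grows quadratically with the number of videos a user touched, which is one reason a distributed job (or ALS) is used at scale.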

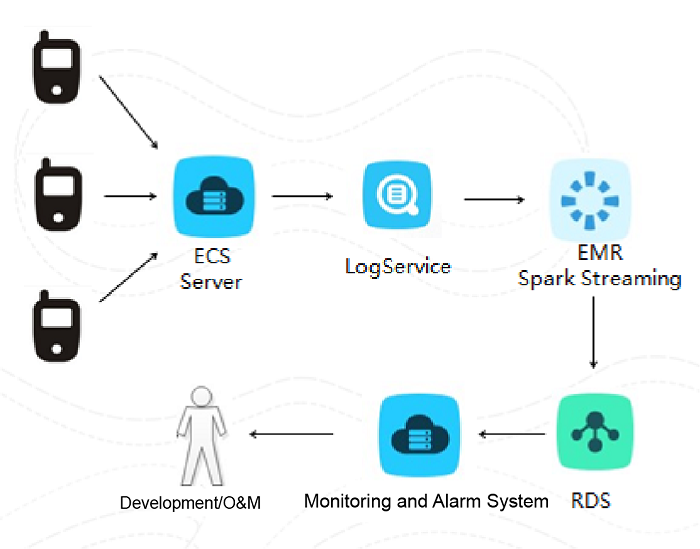

Real-time processing can be used to monitor a network or a service. The resulting statistics show the current service quality across several dimensions, including the proportion of each status code by request type, the proportion of requests per interface, the distribution of request latencies, and latency proportions by request type. The results can be presented to O&M or R&D personnel to help them maintain service quality and optimize performance. If an exception occurs, an alarm notifies O&M or R&D personnel.

The architecture employs Spark Streaming to receive logs sent from LogService in real time, analyze the logs and store the analysis results in RDS. In case of an exception, the monitoring and alarm system triggers an alarm.
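The statistics and alarm rule described above can be sketched for a single batch of log entries. In this architecture, Spark Streaming would apply this kind of logic to each micro-batch; here it is plain Python. The `LogEntry` fields, the nearest-rank percentile, and the 5% server-error threshold are assumptions, not values from the original system.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, NamedTuple


class LogEntry(NamedTuple):
    request_type: str  # e.g. "GET" or "POST"
    status: int        # HTTP status code
    latency_ms: float


def status_ratios(batch: List[LogEntry]) -> Dict[str, Dict[int, float]]:
    """Proportion of each status code within each request type."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for e in batch:
        counts[e.request_type][e.status] += 1
    return {
        rtype: {code: n / sum(c.values()) for code, n in c.items()}
        for rtype, c in counts.items()
    }


def latency_percentile(batch: List[LogEntry], p: float) -> float:
    """Nearest-rank percentile of latency; p in (0, 100], batch non-empty."""
    values = sorted(e.latency_ms for e in batch)
    rank = math.ceil(p / 100 * len(values))
    return values[rank - 1]


def should_alarm(batch: List[LogEntry], max_error_ratio: float = 0.05) -> bool:
    """Alarm when the share of 5xx responses in the batch exceeds the threshold."""
    if not batch:
        return False
    errors = sum(1 for e in batch if e.status >= 500)
    return errors / len(batch) > max_error_ratio
```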

Suppose you have a website with many visitors. User logs are received by LogService and stored in OSS. At night, E-MapReduce runs offline log analysis, for example to compute page UV and the number of redirections from Page A to Page B. The analysis results support operations.
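Counting redirections such as Page A to Page B amounts to counting consecutive page pairs within each visitor session. The sketch assumes hypothetical `(session_id, timestamp, page)` records; how sessions are identified from LogService logs is not covered here.

```python
from collections import Counter
from itertools import groupby
from typing import Iterable, Tuple


def transition_counts(views: Iterable[Tuple[str, int, str]]) -> Counter:
    """Count page-to-page transitions from (session_id, timestamp, page) records.

    Records are sorted by session and time; each pair of consecutive pages
    within a session counts as one transition (for example A -> B).
    """
    ordered = sorted(views, key=lambda v: (v[0], v[1]))
    transitions: Counter = Counter()
    for _, session_views in groupby(ordered, key=lambda v: v[0]):
        pages = [page for _, _, page in session_views]
        for src, dst in zip(pages, pages[1:]):
            transitions[(src, dst)] += 1
    return transitions


views = [("s1", 1, "A"), ("s1", 2, "B"), ("s1", 3, "C"), ("s2", 7, "B"), ("s2", 5, "A")]
print(transition_counts(views)[("A", "B")])  # 2
```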

When users browse the website, content recommendations are made in real time based on the content they view. E-MapReduce Spark Streaming receives data from LogService in real time, uses Spark MLlib algorithms to determine which content to recommend, and stores the recommendations in RDS. The recommendations change in real time as users navigate the website.

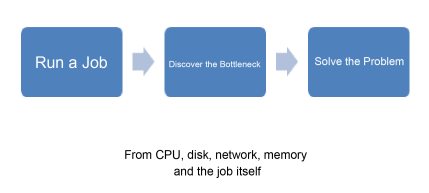

• Monitoring tools such as Ganglia can be used to check the CPU, disk, network, and memory information of running jobs. If a bottleneck is identified, you can easily upgrade the hardware on the cloud to eliminate the bottleneck. For example, you can select an ECS instance with an SSD cloud disk, better processing power, and larger memory capacity.

• Run the jstack command several times against a job's process to sample its thread stacks; methods that repeatedly appear at the top of the stacks indicate where the bottleneck is.
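The jstack tip can be partly automated: take several thread dumps a few seconds apart and count which method sits at the top of each RUNNABLE thread's stack. Frames that keep showing up are likely hot spots. This is a sketch; the sample count, the interval, and the function names are assumptions. `jstack <pid>` must be on the PATH and usually has to run as the same user as the target JVM.

```python
import subprocess
import time
from collections import Counter
from typing import List


def sample_jstack(pid: int, samples: int = 5, interval_s: float = 2.0) -> List[str]:
    """Collect `samples` thread dumps of a JVM process, `interval_s` seconds apart."""
    dumps = []
    for i in range(samples):
        result = subprocess.run(["jstack", str(pid)], capture_output=True, text=True, check=True)
        dumps.append(result.stdout)
        if i < samples - 1:
            time.sleep(interval_s)
    return dumps


def top_frames(dumps: List[str]) -> Counter:
    """Count the top stack frame of every RUNNABLE thread across all dumps."""
    counts: Counter = Counter()
    for dump in dumps:
        runnable = False
        took_top = False
        for line in dump.splitlines():
            stripped = line.strip()
            if line.startswith('"'):  # thread header line starts a new thread
                runnable = False
                took_top = False
            elif stripped.startswith("java.lang.Thread.State:"):
                runnable = stripped.endswith("RUNNABLE")
            elif stripped.startswith("at ") and runnable and not took_top:
                counts[stripped[3:]] += 1  # first "at" line is the top frame
                took_top = True
    return counts


# Usage (requires a running JVM): print(top_frames(sample_jstack(12345)).most_common(5))
```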

The following are some common measures for optimizing Hadoop jobs:

• Avoid small files. We recommend keeping each file stored in OSS or HDFS at about 1 GB. A task typically takes about 60 seconds and processes data at about 20-30 MB/s, so one task handles roughly 1.2-1.8 GB (60 s × 20-30 MB/s). A file size of about 1 GB therefore maps well to a single task.

• Increase job parallelism to make full use of cluster resources.

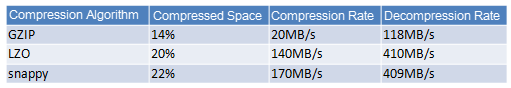

• Compress data to save storage space, reduce the storage cost, speed up data transmission, and lower disk I/O workload.

• Use Hive on Tez.

• Use newer computing engines such as Spark, and choose memory-optimized instances.
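The small-file guideline can be turned into a merge plan: group many small files into batches of roughly 1 GB, which a separate job (for example a Hive or Spark job, not shown) would then rewrite as one file each. The sketch below uses first-fit decreasing packing, which is one reasonable heuristic rather than a prescribed method; the `(path, size_bytes)` input and the function name are assumptions.

```python
from typing import List, Tuple

MB = 1024 * 1024
TARGET_BYTES = 1024 * MB  # ~1 GB target per merged file


def plan_merges(files: List[Tuple[str, int]], target: int = TARGET_BYTES) -> List[List[str]]:
    """Group (path, size_bytes) files into batches of at most about `target` bytes.

    First-fit decreasing: place each file, largest first, into the first batch
    that still has room; a file larger than `target` gets a batch of its own.
    """
    batches: List[Tuple[int, List[str]]] = []  # (bytes used, paths)
    for path, size in sorted(files, key=lambda f: -f[1]):
        for i, (used, paths) in enumerate(batches):
            if used + size <= target:
                batches[i] = (used + size, paths + [path])
                break
        else:
            batches.append((size, [path]))
    return [paths for _, paths in batches]


files = [("a", 600 * MB), ("b", 500 * MB), ("c", 400 * MB), ("d", 300 * MB)]
print(plan_merges(files))  # [['a', 'c'], ['b', 'd']]
```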

If you have any feedback or suggestions, please feel free to share them with me. You can find me on my microblog: Alifengshen.
