This article shows you how you can deploy microservices on Alibaba Cloud with Function Compute by using Cloud Customer Service as an example.

Alibaba Cloud Function Compute is a fully managed, serverless runtime environment that removes the need to manage infrastructure so that businesses can focus on software development. In this article, we deploy a microservice to Function Compute by using the Cloud Customer Service product as an example.

Note: At the time of writing, Cloud Customer Service is only available for Mainland China Alibaba Cloud accounts.

Alibaba Cloud's Cloud Customer Service is a complete intelligent service system that can be easily integrated into websites, applications, public accounts, and other systems. It provides complete hotline and online service functions and can easily connect to other systems such as CRM. It dynamically manages the centralized knowledge bases and knowledge documents used by customers and agents. Based on Alibaba Cloud's intelligent algorithms, its chatbots can understand user intentions and answer common questions. In addition, it collects and analyzes customer service center data in real time, helping enterprise decision-makers identify the most common issues and service bottlenecks from a global perspective.

Visitor Card, a feature of Cloud Customer Service, associates Cloud Customer Service users with the corresponding users in a CRM system, so that customer service personnel can see customers' basic information and provide better support.

The Visitor Card Integration Guide provided by Cloud Customer Service is a web project implemented with Spring MVC. Users whose core business is implemented in Node.js can migrate this Java code to Function Compute and expose it as a function that their Node.js services call.
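Function Compute's Java runtime invokes a handler that reads the request from an input stream and writes the response to an output stream; in the `fc-java-core` library this is the `StreamRequestHandler` interface, whose method also receives a `Context`. The following is a minimal sketch, under that assumption, of what a migrated visitor-card function might look like. To stay self-contained, it declares its own `FcStreamHandler` stand-in without the `Context` parameter. `VisitorCardFunction`, its JSON fields, and the `invoke` helper are hypothetical and are not taken from the Visitor Card Integration Guide.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Local stand-in for the stream handler interface of the Function Compute Java
// runtime, declared here so the sketch compiles without extra dependencies.
interface FcStreamHandler {
    void handleRequest(InputStream input, OutputStream output) throws IOException;
}

// Hypothetical visitor-card function: reads a visitor ID from the request body
// and writes a JSON response that a Node.js caller can parse.
class VisitorCardFunction implements FcStreamHandler {

    @Override
    public void handleRequest(InputStream input, OutputStream output) throws IOException {
        String visitorId = new String(input.readAllBytes(), StandardCharsets.UTF_8).trim();
        String body;
        if (visitorId.matches("[A-Za-z0-9_-]+")) {
            // A real implementation would decrypt the visitor data with the
            // Cloud Customer Service crypto JAR and query the CRM system here.
            body = "{\"visitorId\":\"" + visitorId + "\",\"found\":true}";
        } else {
            // Reject IDs that could break the hand-built JSON string.
            body = "{\"error\":\"invalid visitorId\"}";
        }
        output.write(body.getBytes(StandardCharsets.UTF_8));
    }

    // Local test helper: runs the handler against an in-memory request body.
    static String invoke(String requestBody) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            new VisitorCardFunction().handleRequest(
                    new ByteArrayInputStream(requestBody.getBytes(StandardCharsets.UTF_8)), out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }
}
```

Returning a small JSON document keeps the contract with the Node.js caller language-neutral: the caller only needs to parse JSON, not know anything about the Java implementation.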

Users who tried to migrate the Java code themselves encountered the following technical challenges:

· The JAR provided by Cloud Customer Service is hosted in a private Maven repository that cannot be accessed from the external network. Therefore, the JAR must be copied into the project and integrated with Maven locally.

· How should the Maven plug-in be properly configured to generate a JAR package that is supported by Function Compute?

fccsplatform-common-crypto-1.0.0.20161108.jar is a package in a Maven repository on Alibaba Cloud's internal network, and external customers can only obtain it through Cloud Customer Service. The following XML fragment shows a common way to make Maven depend on a local JAR package. Because this is not a typical scenario, additional configuration is required to complete the setup.

```xml
<!-- Depend on the crypto JAR copied into the project's lib/ directory -->
<dependency>
    <groupId>com.alipay.fc.csplatform</groupId>
    <artifactId>fccsplatform-common-crypto</artifactId>
    <version>1.0.0.20161108</version>
    <!-- system scope: Maven resolves the JAR from systemPath instead of a repository -->
    <scope>system</scope>
    <systemPath>${project.basedir}/lib/fccsplatform-common-crypto-1.0.0.20161108.jar</systemPath>
</dependency>
```

Microservices help to make application delivery more agile and nimble. Solid-state disk (SSD) technology makes applications faster. This you already know.

But have you thought about how you can leverage microservices and SSD together to double down on speed and agility benefits? If not, this article is for you. It explains how to pair a microservices architecture with SSD storage to optimize application performance, specifically for cloud-based deployments.

Microservices are an approach to application architecture that involves breaking complex applications into multiple discrete parts, or services.

In a microservices architecture, your application’s storage process might run as one service, while the frontend runs as another—or possibly even multiple services, each covering a specific frontend component. Authentication, security, logging and so on can also be performed by distinct services.

The main advantage of microservices is that they provide more modularity and flexibility for your application as a whole. You can deploy each service in a different location in order to optimize cost and performance on a per-service basis. You can also update a service without having to disrupt other parts of the app. You can generally track performance or security issues more easily because you can isolate a problem to a particular service, rather than having to slog through your entire application to locate a root cause. These (and more) are the benefits of microservices.

SSD, meanwhile, refers to a type of storage device in which data is stored in flash memory. This makes SSD different from conventional hard disk drives, which rely on magnetic storage.

The main advantage of SSD is speed. While SSD data transfer rates vary depending on which specific SSD devices you use and how your software accesses them, you can generally expect I/O rates to be about 20 times faster with SSD than they are on traditional hard disks, while overall data transfer rates are between 8 and 16 times faster.

SSD has been a popular solution for about a decade for personal computers, where SSD devices offer the additional advantage of being more resistant to physical damage. However, SSD is also an increasingly popular storage option for cloud-based applications, for reasons that we discuss below.

Microservices and SSD each offer distinct advantages on their own.

When you use microservices and SSD together in the cloud, however, you multiply the benefits of each.

The main reason is that a microservices architecture allows you to choose specific components of an application to run with SSD storage. Rather than having to deploy the entire application on SSD (as you would if you did not have a microservices architecture), you can take advantage of SSD for the specific services that will benefit from it the most. The rest can run with standard storage, or no storage at all, depending on their needs.

This approach lets you leverage the performance of SSD cost-efficiently: you pay for SSD storage only for the services that need it, not for your entire application.
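The cost argument can be made concrete with a small calculation. The sketch below assumes four hypothetical services and made-up prices (10 cents per GB for SSD and 3 cents per GB for standard storage, which are not real Alibaba Cloud prices), and compares putting everything on SSD with putting only the storage and authentication services on SSD. Amounts are kept in integer cents to avoid floating-point rounding.

```java
import java.util.List;

// Hypothetical per-service storage assignment used to compare costs.
class StorageCostSketch {

    // Illustrative monthly prices in cents per GB; NOT real Alibaba Cloud pricing.
    static final long SSD_CENTS_PER_GB = 10;
    static final long STANDARD_CENTS_PER_GB = 3;

    static final class Service {
        final String name;
        final long storageGb;
        final boolean needsSsd;

        Service(String name, long storageGb, boolean needsSsd) {
            this.name = name;
            this.storageGb = storageGb;
            this.needsSsd = needsSsd;
        }
    }

    // Monolith-style choice: all data lands on SSD.
    static long allSsdCents(List<Service> services) {
        long total = 0;
        for (Service s : services) {
            total += s.storageGb * SSD_CENTS_PER_GB;
        }
        return total;
    }

    // Microservices choice: only services flagged as needing SSD pay the SSD price.
    static long selectiveCents(List<Service> services) {
        long total = 0;
        for (Service s : services) {
            total += s.storageGb * (s.needsSsd ? SSD_CENTS_PER_GB : STANDARD_CENTS_PER_GB);
        }
        return total;
    }

    // Hypothetical example workload.
    static List<Service> example() {
        return List.of(
                new Service("storage", 500, true),
                new Service("auth", 20, true),
                new Service("frontend", 10, false),
                new Service("logging", 1000, false));
    }
}
```

With these illustrative numbers, the all-SSD layout costs 15,300 cents while the selective layout costs 8,230 cents; the actual savings depend entirely on real prices and data volumes.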

The microservices that handle the storage for your application are often the most obvious candidates for an SSD hosting solution, particularly if your application will benefit significantly from faster throughput. For example, this could be the case for a web server with a heavy traffic load that needs to avoid bottlenecking at the storage level.

In other cases, you may not want to run your main storage service on SSD, but still take advantage of SSD for other specific parts of the application. For instance, you might host the microservice and database that handle authentication on an SSD-enabled cloud instance. This approach would improve the speed of logins for your users.

As a third example, your application might include a caching microservice. One way to enable fast access for this data is to store it in memory. But in some cases, such as those where you need to cache a large amount of data, it could be more cost-effective or scalable to use SSD storage for the caching instead. SSD is not as fast as in-memory data storage, of course, but for caching application or user data, it is likely sufficient.
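A caching microservice of this kind is often built as two tiers: a small, fast in-memory tier in front of a larger SSD tier. The sketch below is one way to express that idea, assuming a least-recently-used (LRU) policy for the memory tier. The SSD tier is only simulated with a `HashMap`; a real implementation would read and write files or use an embedded store on an SSD volume. `TieredCache` and its methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Two-tier cache sketch: a small in-memory LRU tier in front of a larger "SSD" tier.
// The SSD tier is simulated with a HashMap; null values are not supported.
class TieredCache<K, V> {

    private final Map<K, V> ssdTier = new HashMap<>();
    private final LinkedHashMap<K, V> memoryTier;
    private int memoryHits;
    private int ssdHits;

    TieredCache(int memoryCapacity) {
        // accessOrder = true keeps the least recently used entry first.
        this.memoryTier = new LinkedHashMap<K, V>(16, 0.75f, true) {
            @Override
            protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
                if (size() > memoryCapacity) {
                    // Demote the least recently used entry instead of dropping it.
                    ssdTier.put(eldest.getKey(), eldest.getValue());
                    return true;
                }
                return false;
            }
        };
    }

    void put(K key, V value) {
        ssdTier.remove(key);
        memoryTier.put(key, value);
    }

    V get(K key) {
        V value = memoryTier.get(key);
        if (value != null) {
            memoryHits++;
            return value;
        }
        value = ssdTier.remove(key);
        if (value != null) {
            ssdHits++;
            memoryTier.put(key, value); // promote back to memory
        }
        return value;
    }

    boolean isOnSsd(K key) {
        return ssdTier.containsKey(key);
    }

    int memoryHits() {
        return memoryHits;
    }

    int ssdHits() {
        return ssdHits;
    }
}
```

Demoting evicted entries instead of discarding them is what lets the SSD tier hold far more data than memory allows, at the price of slower reads for entries that have fallen out of the memory tier.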

Practices for solving data consistency problems in a microservices architecture

With rapid business development, monolithic architectures reveal many problems, such as low code maintainability, low fault tolerance, difficult testing, and poor agile delivery capabilities. Microservices emerged to solve these problems. However, although microservices solve the problems above, they also bring new ones, one of which is how to ensure business data consistency between microservices.

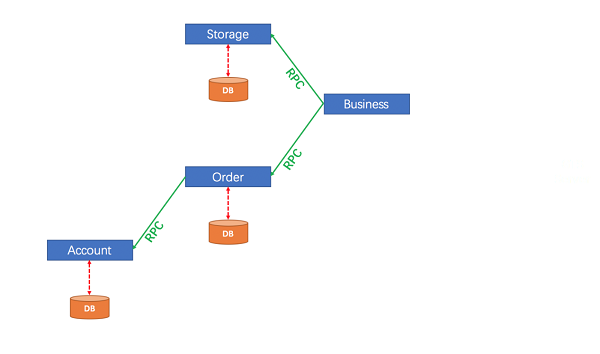

This article uses a commodity purchase case to explain how Fescar ensures business data consistency in a microservices architecture built on Dubbo. In this example, Nacos serves as the registration, configuration, and service center for both Dubbo and Fescar. Fescar versions later than 0.2.1 support Nacos as the registration, configuration, and service center.
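To see why consistency is hard, consider what a purchase must do without a distributed transaction framework. The sketch below does not use Fescar or its API. It hand-codes a compensating action: if order creation fails after stock has been deducted, the deduction is undone. The in-memory services are hypothetical stand-ins whose method names follow the `deduct` and `create` operations of the sample's `StorageService` and `OrderService`.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class PurchaseSketch {

    // Hypothetical in-memory stand-in for the storage microservice.
    static class InMemoryStorage {
        final Map<String, Integer> stock = new HashMap<>();

        void deduct(String commodityCode, int count) {
            int current = stock.getOrDefault(commodityCode, 0);
            if (current < count) {
                throw new IllegalStateException("insufficient stock for " + commodityCode);
            }
            stock.put(commodityCode, current - count);
        }

        // Compensating action that undoes a previous deduct.
        void restore(String commodityCode, int count) {
            stock.merge(commodityCode, count, Integer::sum);
        }
    }

    // Hypothetical in-memory stand-in for the order microservice; can fail on demand.
    static class InMemoryOrders {
        final List<String> orders = new ArrayList<>();
        boolean failNext = false;

        String create(String userId, String commodityCode, int orderCount) {
            if (failNext) {
                throw new IllegalStateException("order service unavailable");
            }
            String id = "order-" + (orders.size() + 1);
            orders.add(id);
            return id;
        }
    }

    // Deducts stock, then creates the order; if order creation fails,
    // the deduction is compensated so both services stay consistent.
    static String purchase(InMemoryStorage storage, InMemoryOrders orders,
                           String userId, String commodityCode, int count) {
        storage.deduct(commodityCode, count);
        try {
            return orders.create(userId, commodityCode, count);
        } catch (RuntimeException e) {
            storage.restore(commodityCode, count);
            throw e;
        }
    }
}
```

This manual approach breaks down if the process crashes between the deduction and the compensation, or if the compensation itself fails; closing those gaps is the job of a transaction coordinator such as Fescar.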

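The commodity purchase business includes three microservices:

· Storage service: deducts the storage count of a given commodity.

· Order service: creates orders according to purchase requests.

· Account service: debits the balance of the user's account.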

The business structure is as follows:

StorageService

```java
public interface StorageService {

    /**
     * Deduct storage count.
     */
    void deduct(String commodityCode, int count);
}
```

OrderService

```java
public interface OrderService {

    /**
     * Create order.
     */
    Order create(String userId, String commodityCode, int orderCount);
}
```

This blog explores how architectural visualization can help us identify problems that exist in our architecture and ensure our system is highly available.

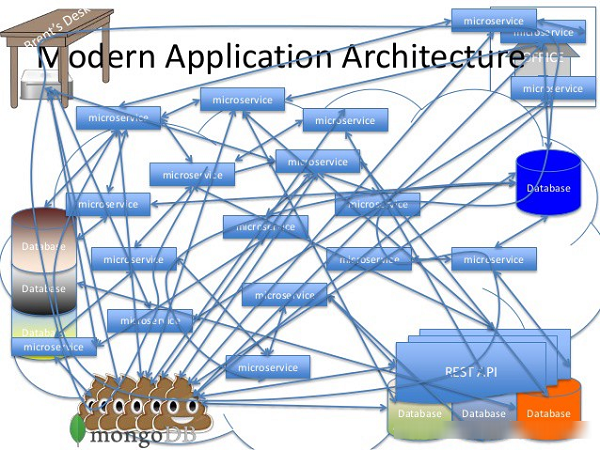

Every time a microservice architecture is changed, it becomes more complex. Frequent architectural changes may lead to huge differences between the actual architecture and the expectations. Because of these changes, it is difficult for architects or system operations and maintenance (O&M) personnel to accurately remember the composition and interaction of all resources and instances.

In addition, undesirable characteristics such as strong dependencies, insufficient local capacity, and over-coupling may be introduced as the system architecture evolves. These factors pose significant threats to system stability. Therefore, before performing system transformation, business expansion, or stability management, we need to map out the system architecture and the interactions between its components. Architectural visualization can help us clearly identify problems in our architecture and ensure that our system is highly available.
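One piece of architectural visualization can be automated: deriving the dependency graph from observed calls and checking it for warning signs. The sketch below assumes each call is recorded as a hypothetical `Call(caller, callee)` pair, for example extracted from distributed tracing data. It builds an adjacency map and uses a depth-first search to detect circular dependencies, one common symptom of over-coupling.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Builds a service dependency graph from observed calls and checks it for cycles.
class DependencyGraphSketch {

    // One observed call (hypothetical record shape, e.g. from a trace span).
    static final class Call {
        final String caller;
        final String callee;

        Call(String caller, String callee) {
            this.caller = caller;
            this.callee = callee;
        }
    }

    // Adjacency map: service -> services it calls, sorted for stable output.
    static Map<String, Set<String>> build(List<Call> calls) {
        Map<String, Set<String>> graph = new TreeMap<>();
        for (Call c : calls) {
            graph.computeIfAbsent(c.caller, k -> new TreeSet<>()).add(c.callee);
            graph.computeIfAbsent(c.callee, k -> new TreeSet<>());
        }
        return graph;
    }

    // Depth-first search; reaching a node that is still in progress means a cycle.
    static boolean hasCycle(Map<String, Set<String>> graph) {
        Map<String, Integer> state = new HashMap<>(); // absent = unvisited, 1 = in progress, 2 = done
        for (String node : graph.keySet()) {
            if (!state.containsKey(node) && visit(node, graph, state)) {
                return true;
            }
        }
        return false;
    }

    private static boolean visit(String node, Map<String, Set<String>> graph,
                                 Map<String, Integer> state) {
        state.put(node, 1);
        for (String next : graph.get(node)) {
            Integer s = state.get(next);
            if (s != null && s == 1) {
                return true;
            }
            if (s == null && visit(next, graph, state)) {
                return true;
            }
        }
        state.put(node, 2);
        return false;
    }
}
```

Services that appear as callees of many others are also worth inspecting as potential strong dependencies.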

An architecture diagram shared by Daniel Woods when he talked about microservices

Architectural visualization brings about advantages in many aspects, including:

Many familiar architecture diagrams live in static PowerPoint slides. We may still be using these legacy diagrams, but they are often outdated after significant architectural changes, and using an outdated diagram can lead to a misunderstanding of the online architecture. We need to constantly update our mental view of the system architecture to stay aware of architectural changes. Big promotions and major system transformations each year give us opportunities to map out and re-learn the system architecture. We can then use various tools to view how system components are distributed and what internal and external dependencies they have. This is the most commonly used way to draw an architecture diagram, which we may call the "manual drafting method".

People may want to use technology to automate this manual work, for example with the commonly used event-tracking-based microservice visualization solutions. These types of solutions are mainly used in monitoring fields such as distributed tracing and application performance management (APM). An architectural visualization solution for an ARM product in the app dimension is as follows.


With the proliferation of online applications, their architectures need to evolve continuously so that they stand out among the multitude of similar apps. Applications must be highly available and resilient when running in cloud environments. To ensure a consistent user experience, organizations need to roll out updates frequently, sometimes multiple times a day. However, monolithic web applications that serve HTML to desktop browsers cannot cope with such frequent updates and hamper developer productivity.

This led to the need for a new architecture: Microservices.

Let’s look at what the term means.

Microservices is a way of breaking large software projects into loosely coupled modules that communicate with each other through simple APIs.
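As a minimal illustration of services talking through a simple API, the sketch below starts a hypothetical inventory service with the JDK's built-in `com.sun.net.httpserver.HttpServer` and calls it from another component with `java.net.http.HttpClient` (Java 11 or later). The endpoint path, JSON fields, and class names are assumptions for illustration only.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

class HttpJsonSketch {

    // Hypothetical inventory service: answers GET /inventory with a fixed JSON document.
    static HttpServer startInventoryService() throws IOException {
        // Port 0 asks the operating system for any free port.
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/inventory", exchange -> {
            byte[] body = "{\"sku\":\"C001\",\"available\":8}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(body);
            }
        });
        server.start();
        return server;
    }

    // A second component calls the inventory service over HTTP and returns the JSON body.
    static String fetchInventory(int port) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1) // the JDK's built-in server speaks HTTP/1.1
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create("http://127.0.0.1:" + port + "/inventory"))
                .GET()
                .build();
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() != 200) {
            throw new IOException("unexpected status " + response.statusCode());
        }
        return response.body();
    }
}
```

Binding to port 0 lets the operating system pick a free port, which the caller reads back from `server.getAddress().getPort()`.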

Microservices architecture involves developing applications as a suite of multiple independent modular services with each of them serving a unique purpose. Each of these services runs a particular process and communicates internally with other modules to serve a business goal.

Let’s dig deeper and understand why microservices architecture is needed.

A monolithic application may contain different modules that serve different business or technical needs. For a small application with low complexity, processing all tasks is not difficult when all modules are in the same application. In fact, this arrangement speeds up debugging and ensures a high level of execution efficiency.

However, as a monolithic application grows, these benefits soon fade. With business expansion and changing needs, the application backend gradually becomes too complex for developers to handle and scale manually. You need to be able to restructure the application internally as requirements change by adding, removing, or changing modules, but dead code accumulates and restructuring becomes more difficult. Minor changes often affect the entire application: to resize or upgrade any one module, you have to resize or upgrade all modules in the application. The program's external dependencies become increasingly complex, and automated test coverage shrinks.

Microservice architecture was developed to address these shortcomings. Let’s see how it brings value to your development process and application efficiency.

An application is made up of multiple services, each of which handles one set of tasks. In a microservice architecture, these services communicate with each other over web protocols such as HTTP, typically exchanging data in formats such as JSON. The advantages of microservices are:

Microservices can certainly make your life easier, but they have some drawbacks too.

Despite the qualifier "micro" in the name, implementing microservices requires considerable manual effort. If you do not fully understand the business domain and its real needs, managing multiple services and their many related processes becomes a daunting task. Moreover, the number of processes grows when load balancing and messaging middleware are added, making the management, operation, and orchestration of all these services cumbersome. You also need DevOps expertise to implement and manage these architectures, which adds to the overall overhead.

Because a microservice architecture is a distributed system, it adds a certain level of complexity and can lead to issues such as network latency, fault tolerance, message serialization, and asynchronicity.
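Network latency and partial failures are usually handled with timeouts and retries at each call site. The sketch below shows only the retry half: a hypothetical `callWithRetry` helper that retries a failing call with exponential backoff. It assumes the wrapped call is idempotent, because retrying a non-idempotent operation (such as creating an order) can apply it twice.

```java
import java.util.function.Supplier;

// Retries a remote call with exponential backoff (hypothetical helper).
class RetrySketch {

    static <T> T callWithRetry(Supplier<T> call, int maxAttempts, long initialBackoffMillis) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        long backoff = initialBackoffMillis;
        RuntimeException lastFailure = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return call.get();
            } catch (RuntimeException e) {
                lastFailure = e;
                if (attempt < maxAttempts) {
                    sleep(backoff);
                    backoff *= 2; // double the wait after each failure
                }
            }
        }
        // All attempts failed: surface the last error to the caller.
        throw lastFailure;
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while backing off", e);
        }
    }
}
```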

Managing ultra-large-scale applications poses many challenges, such as application management and monitoring, high availability, performance optimization, and system expansion. This course shows you how to manage large applications on Alibaba Cloud using EDAS, the core product that supports 99 percent of Alibaba Cloud's large-scale application systems.

This course introduces the concepts of resource configuration and orchestration automation, and the installation and configuration of Terraform, a popular resource automation tool. Through hands-on demos, you will learn how to use Terraform to automatically configure and orchestrate application resources on the Alibaba Cloud platform.

Through this course, you can fully understand what a microservice architecture is and how to implement one based on Alibaba Cloud Container Service.

Through this course, you will learn the basic knowledge and common tools for diagnosing and monitoring problems in containerized applications, and gain an understanding of Alibaba Cloud Container Service.

This course is intended for IT companies that want to containerize their business applications, and for cloud computing engineers and enthusiasts who want to learn container technology and Swarm. In this course, you will learn what Swarm is, its key concepts and basic architecture, why we need it, and the basic use of Alibaba Cloud Container Service for Swarm, providing a reference for evaluating, designing, and implementing application containerization.

This topic describes the basic operations in Function Compute. Function Compute allows you to quickly build an application by writing function code, without managing any servers. Function Compute also supports elastic scaling, and you pay for services on a Pay-As-You-Go basis.

Function Compute supports the following tools: the fcli command-line tool, the fun command-line tool, and the console. You can use fcli or the console to build services or query logs.

Functions are segments of application code that perform a specific task, and they are the units that the system schedules and runs. You write code using the handlers provided by Function Compute and then deploy that code as functions. Services in Function Compute correspond to microservices in a software application architecture. When you build an application in Function Compute, you abstract the service logic into microservices as needed and then implement each microservice as a Function Compute service.

You can create multiple functions in one service and set a different memory size, environment variables, or other attributes for each function. This layered abstraction of services and functions balances system abstraction against implementation flexibility. For example, suppose a microservice uses Alibaba Cloud Intelligent Speech Interaction to convert text into speech and then combines the speech with images to form a video. Text-to-speech conversion mainly calls other services, so you can usually configure a small memory size for it. Video synthesis, however, is compute-intensive and requires a large memory size. To reduce cost, you can combine functions of different specifications to implement the microservice.
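The cost effect of mixing function specifications can be checked with simple arithmetic. Assuming resource usage is billed as memory size multiplied by execution time (GB-seconds), the sketch below compares giving both steps the memory that video synthesis needs with sizing each function separately. The memory sizes and durations are made up, and the sketch assumes durations do not change with memory size, which may not hold in practice.

```java
import java.util.List;

// Compares resource usage in GB-seconds for two ways of sizing the functions
// of one service. All sizes and durations are illustrative assumptions.
class FunctionCostSketch {

    static final class Invocation {
        final String function;
        final long memoryMb;
        final long durationMs;

        Invocation(String function, long memoryMb, long durationMs) {
            this.function = function;
            this.memoryMb = memoryMb;
            this.durationMs = durationMs;
        }
    }

    // Sum memory x duration in MB-milliseconds, then convert: 1 GB = 1024 MB, 1 s = 1000 ms.
    static double gbSeconds(List<Invocation> invocations) {
        long mbMillis = 0;
        for (Invocation inv : invocations) {
            mbMillis += inv.memoryMb * inv.durationMs;
        }
        return mbMillis / (1024.0 * 1000.0);
    }

    // Both steps run with the memory size that video synthesis needs.
    static List<Invocation> uniform() {
        return List.of(
                new Invocation("text-to-speech", 3072, 2_000),
                new Invocation("video-synthesis", 3072, 10_000));
    }

    // Each step gets its own memory size.
    static List<Invocation> mixed() {
        return List.of(
                new Invocation("text-to-speech", 128, 2_000),
                new Invocation("video-synthesis", 3072, 10_000));
    }
}
```

With these numbers, uniform sizing uses 36 GB-seconds per run and mixed sizing uses 30.25 GB-seconds per run.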

Istio is an open platform for connecting, protecting, controlling, and monitoring microservices.

More and more IT enterprises value microservices. Microservices are multiple services split out of a complicated application, and each service can be developed, deployed, and scaled independently. Combining microservices with container technology simplifies the delivery of microservices and improves the reliability and scalability of applications.

As microservices become widely used, distributed application architectures composed of microservices become more complicated to operate, maintain, debug, and secure. Developers face greater challenges, such as service discovery, load balancing, failure recovery, metric collection and monitoring, A/B testing, gray release, blue-green release, traffic limiting, access control, and end-to-end authentication.

Istio emerged to meet these challenges. It provides a simple way to create a network of microservices with capabilities such as load balancing, inter-service authentication, and monitoring, and it provides these functions without requiring changes to the services themselves.

Application Configuration Management (ACM) lets you centrally manage application configurations. This makes configurations more convenient to manage and strengthens service capabilities in scenarios such as microservices, DevOps, and big data. ACM's predecessor was Diamond, the internal configuration center of Alibaba's Taobao.
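The core idea of centralized configuration is one store with many subscribers that are notified when a value changes, so applications pick up new settings without redeploying. The sketch below is a hypothetical in-process model of that idea, not the ACM SDK; `ConfigCenterSketch`, `publish`, and `addListener` are illustrative names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Minimal centralized-configuration sketch: one store, many subscribers.
class ConfigCenterSketch {

    private final Map<String, String> values = new HashMap<>();
    private final Map<String, List<Consumer<String>>> listeners = new HashMap<>();

    synchronized String get(String dataId) {
        return values.get(dataId);
    }

    synchronized void addListener(String dataId, Consumer<String> listener) {
        listeners.computeIfAbsent(dataId, k -> new ArrayList<>()).add(listener);
    }

    // Stores new content and notifies subscribers only when the value actually changes.
    void publish(String dataId, String content) {
        List<Consumer<String>> toNotify;
        synchronized (this) {
            String previous = values.put(dataId, content);
            if (content.equals(previous)) {
                return;
            }
            toNotify = new ArrayList<>(listeners.getOrDefault(dataId, List.of()));
        }
        // Call listeners outside the lock so a slow listener cannot block publishers.
        for (Consumer<String> listener : toNotify) {
            listener.accept(content);
        }
    }
}
```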

EDAS is a PaaS platform that offers a variety of application deployment options and microservices solutions to help you monitor, diagnose, operate, and maintain your applications.
