By Lu Feng.

The tool Kettle, which is more formally known as Pentaho Data Integration (or PDI for short), is a very popular open-source ETL tool that is mainly used for data integration, transformation, and migration operations. Kettle supports a variety of relational databases and NoSQL data sources such as HBase and MongoDB, as well as other small data sources such as Excel and Access. Overall, thanks to plugin extensions, Kettle can support a large variety of data sources.

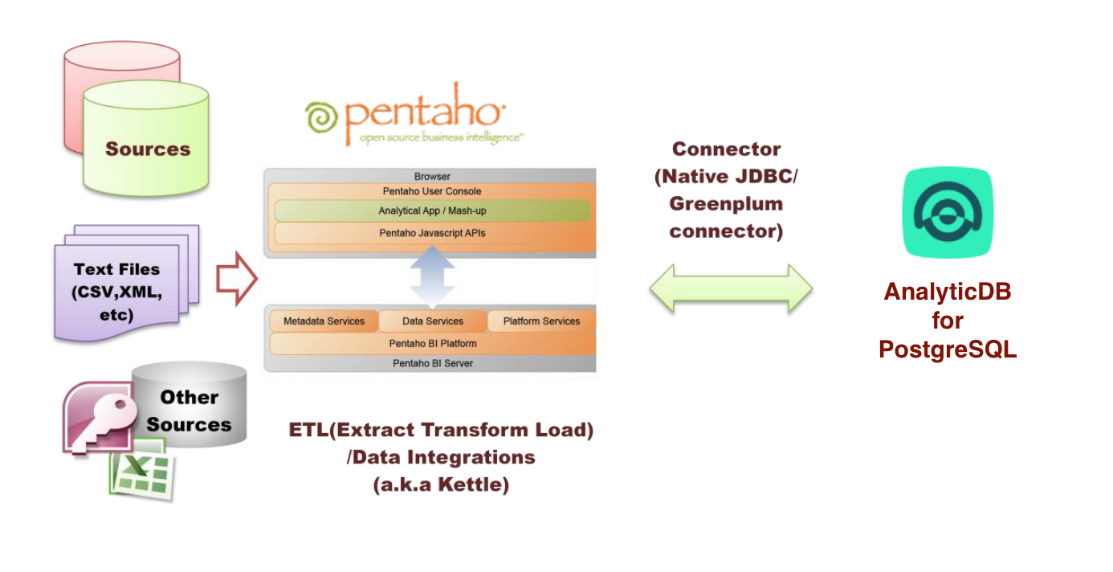

The following figure shows the relationship between Kettle and Alibaba Cloud's AnalyticDB for PostgreSQL. Essentially, after data goes through ETL and other data integration operations through Kettle, these data sources can then interact with AnalyticDB for PostgreSQL:

Kettle supports a variety of data sources, which can be divided into the following major types: table inputs, text file inputs, generate records and customize constant inputs, get system info inputs, JSON file inpus, and various other format files and other input types not mentioned here.

You can learn more about input types in Core Objects > Input in the console.

Table inputs supported by Kettle are obtained from database connections with SQL statements. The Database connection supports many connection methods, including: Native Java Database Connectivity (JDBC), Open Database Connectivity (ODBC), Oracle Call Interface (OCI), and Java Naming and Directory Interface (JNDI) connections.

With these connection methods available, Kettle can connect to the vast majority of the mainstream databases, including Oracle, SQL Server, MySQL, DB2, PostgreSQL, Sybase, and Teradata database. You can read this document for more information about database connections.

Currently, Kettle supports the following methods to import data to AnalyticDB for PostgreSQL:

| Import method | Description |

| Table output (INSERT) |

Use JDBC to import data The batch insert method using JDBC is supported |

| Bulk load (COPY) |

Use COPY to import data For large tables, COPY performance is about 10 times higher than batch insert |

When the table output (INSERT) method is used to import data, data is distributed to the corresponding segment nodes after data flows through the master node and the parsing is performed. This method is relatively slow and therefore best for importing small amounts of data. The bulk load (COPY) method, however, given its better performance, is suitable for importing large amounts of data at one time.

Following this, in the rest of this blog, we will be looking at how to migrate external data to AnalyticDB for PostgreSQL by using these two methods.

Before you use Kettle to import external data to AnalyticDB for PostgreSQL, you need to have the following completed first:

In this section, we will look at how to import data to AnalyticDB for PostgreSQL using the Table Output method. Kettle provides the table output method and import data from different data sources to ADB for PostgreSQL using general-purpose JDBC interfaces. In the following example, MySQL is used to show how to use the JDBC interface to import data to ADB for PostgreSQL. To do this, you will need to do the following:

1. Create a new transformation in Kettle.

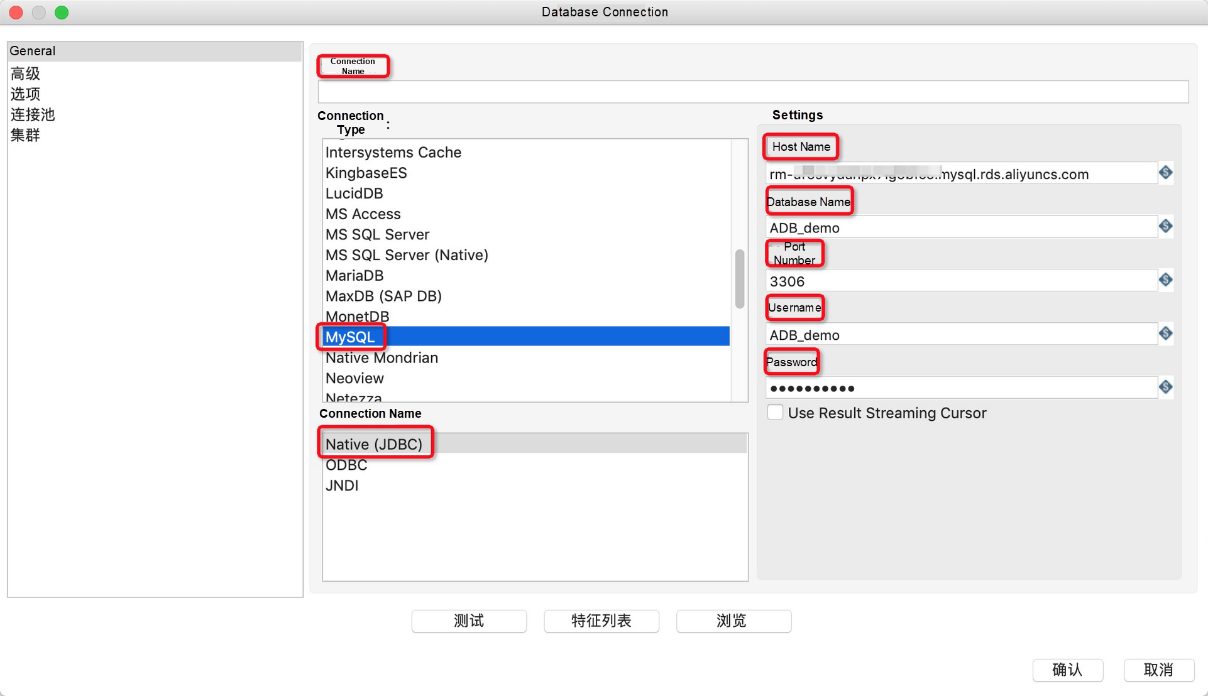

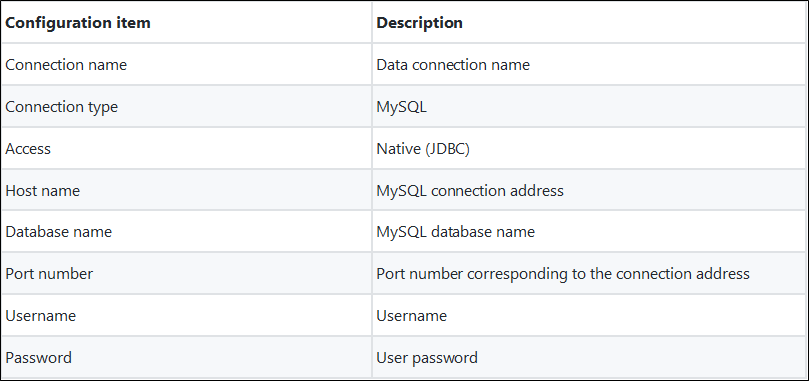

2. In the transformation, create a MySQL database as the output source with the following parameter configurations (see in the figure below). Do not check use Result Streaming Cursor when configuring parameters.

3. After configuring the preceding parameters, click Test to test the connectivity. Then, click OK after a successful test.

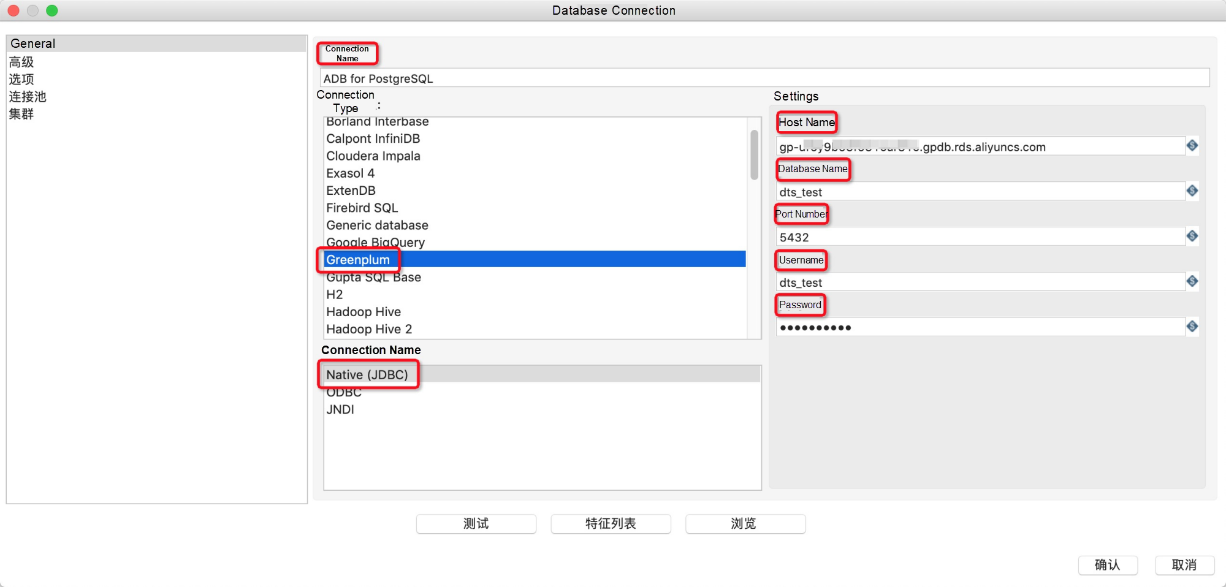

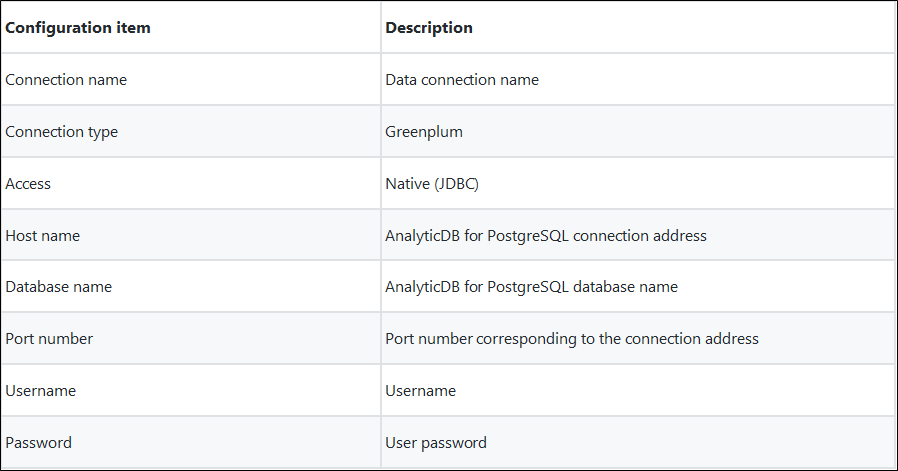

4. In the transformation, create a new Greenplum database as the input source, with the following parameter configurations.

5. After configuring the preceding parameters, click Test to test the connectivity. Then, click OK after a successful test.

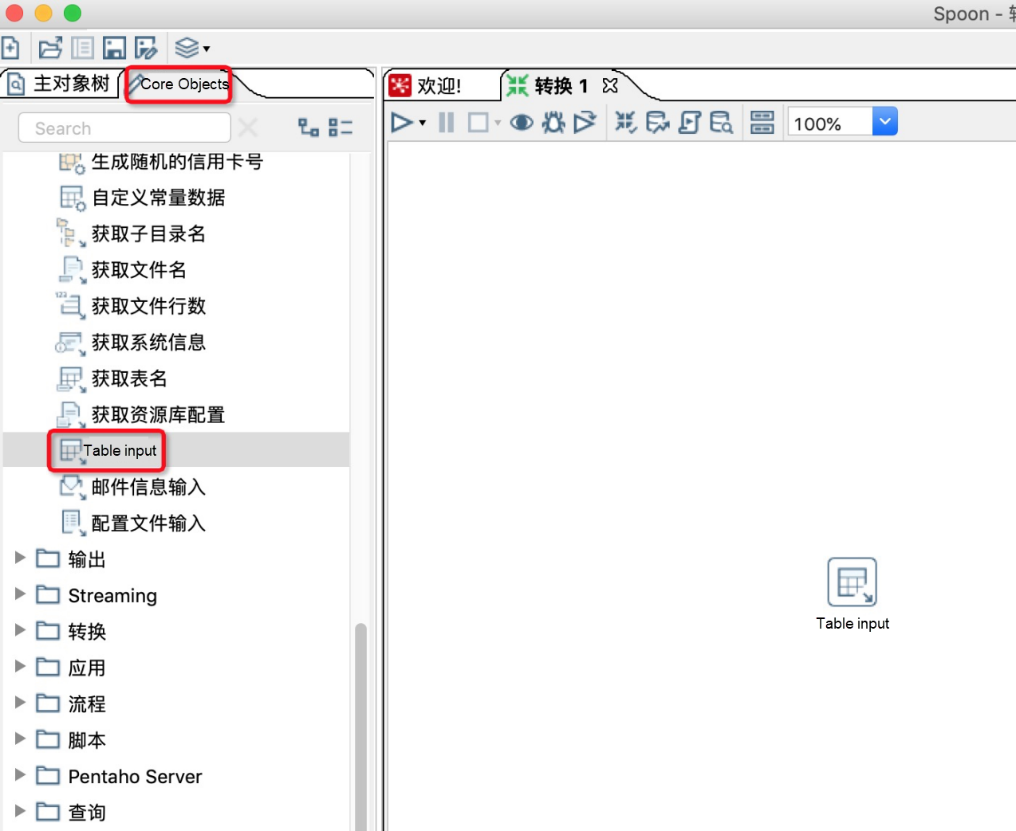

6. In Kettle, navigate to Table input in the left-side pane under the Core Objects tab and drag it into the workspace.

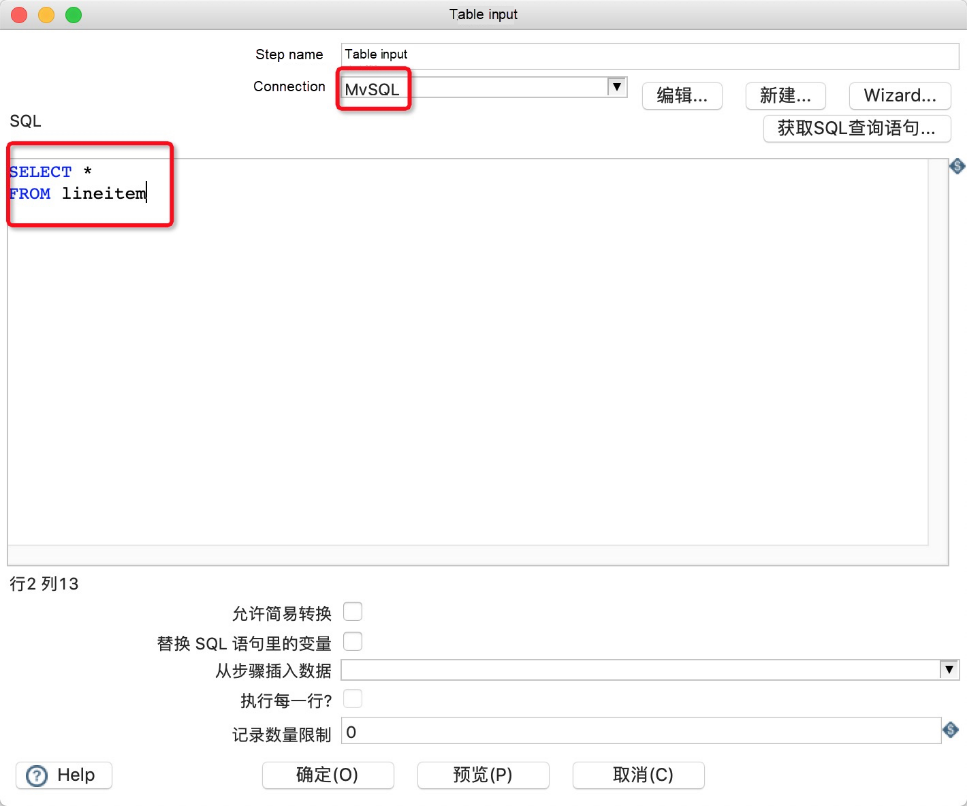

7. Double-click Table Input in the workspace area and make parameter configurations in the Table Input dialog box.

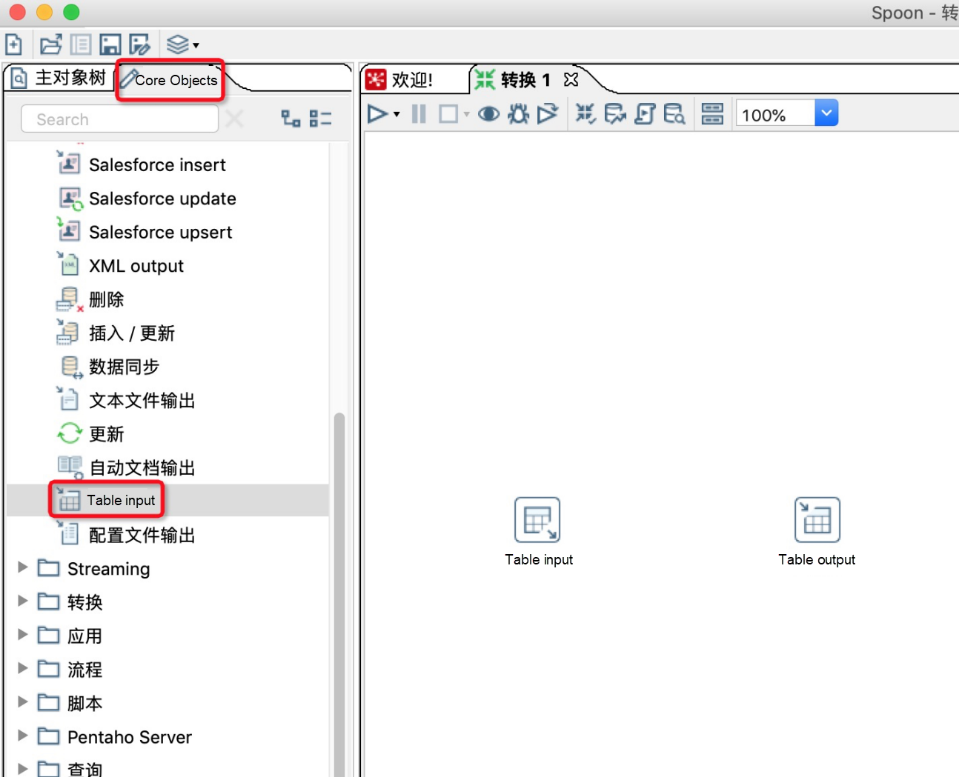

8. Navigate to Table output under Output in the left-side under the Core Objects tab and drag it into the workspace.

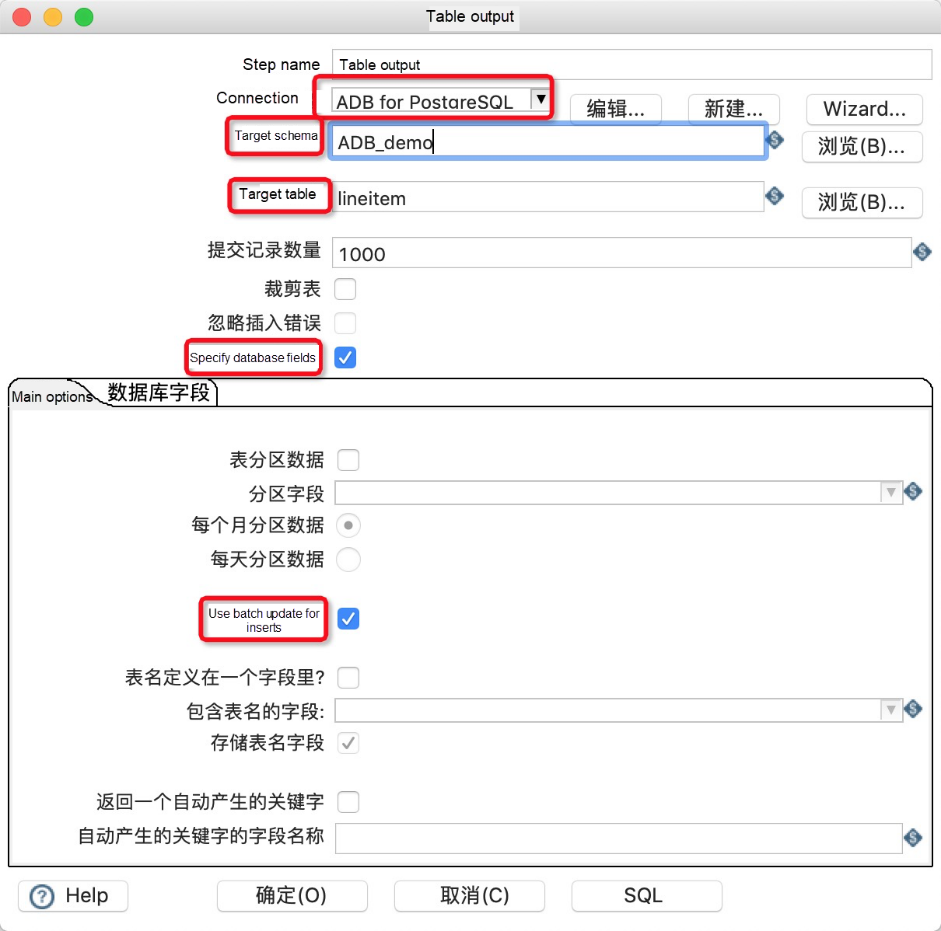

9. Double click Table output in the workspace area and make parameter configurations in the Table output dialog box.

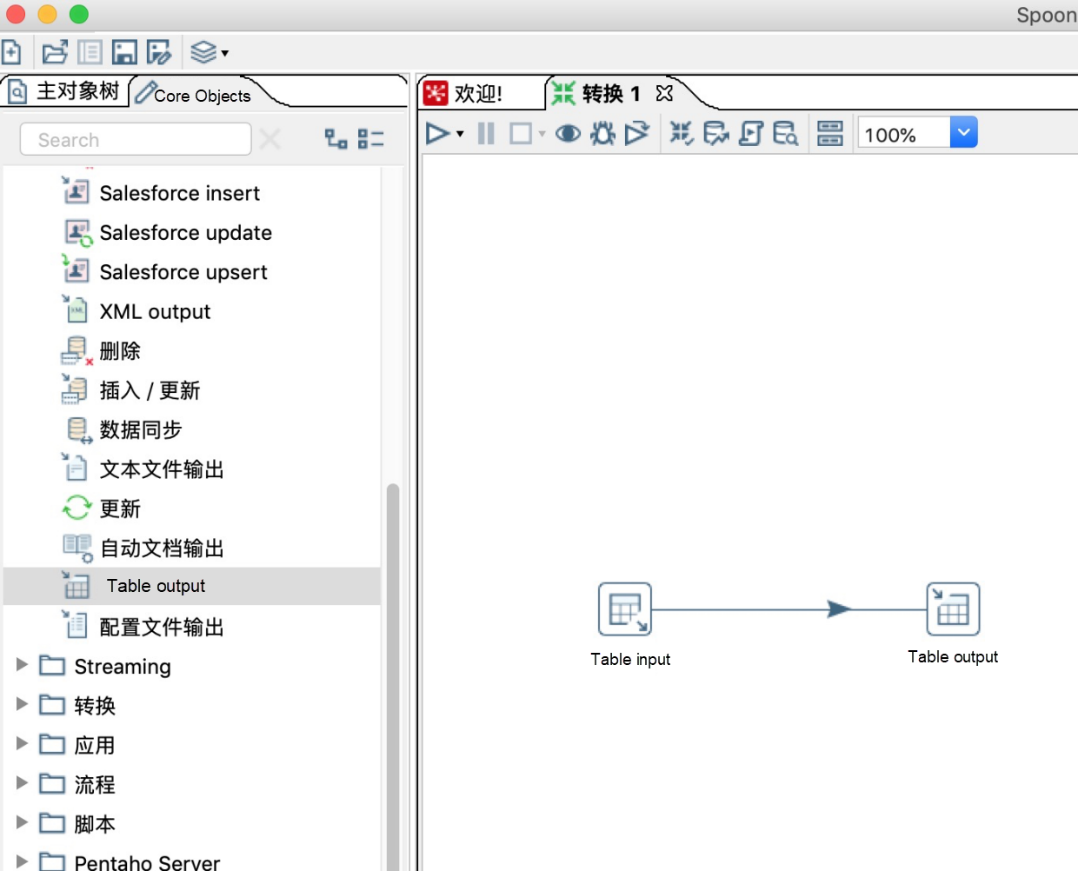

10. Create a connection line from table input to table output.

11. Click the white arrow to run the transformation and check the operation log and operation status.

After MySQL data is imported into AnalyticDB for PostgreSQL, you can use AnalyticDB for PostgreSQL for data analysis.

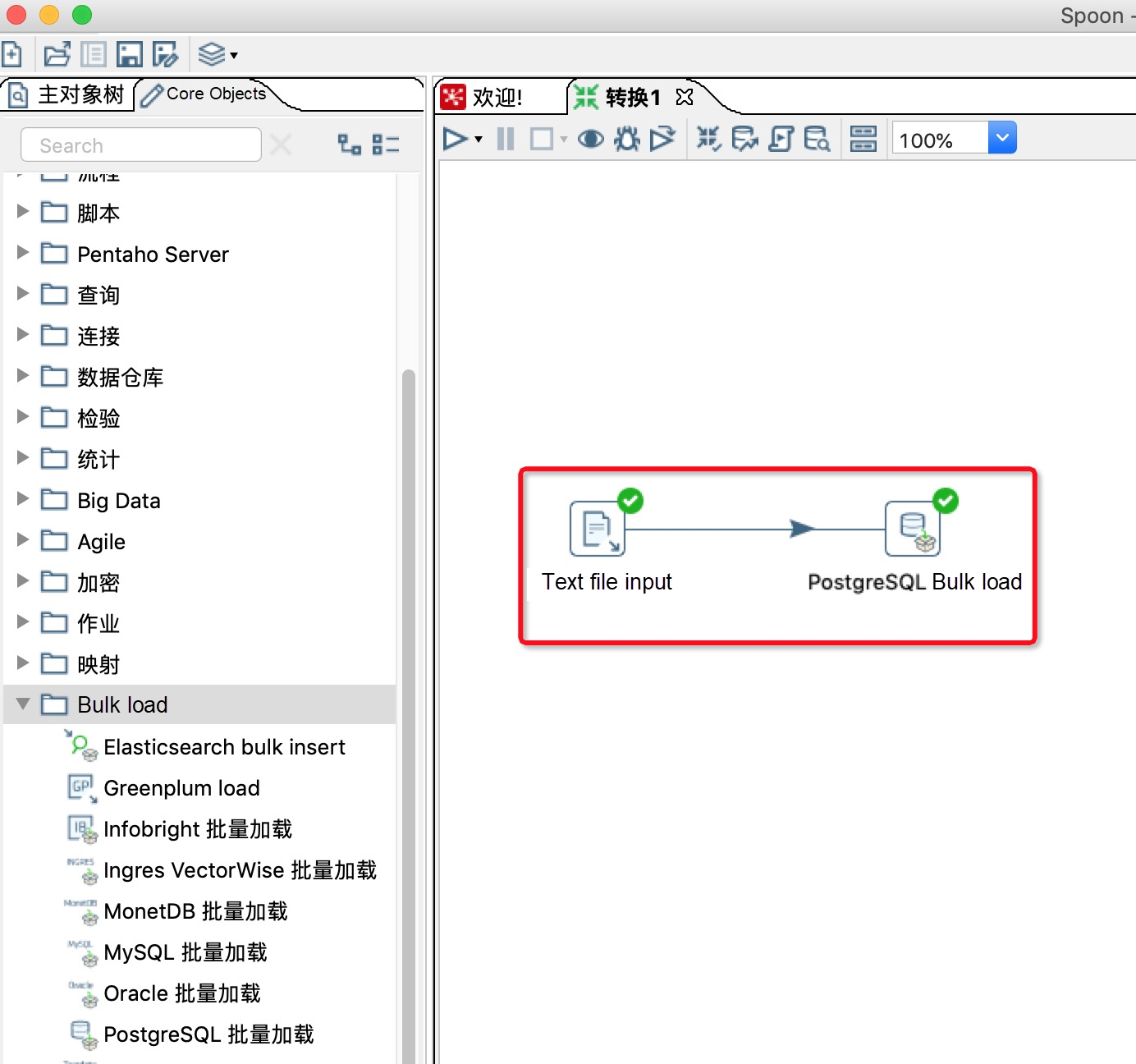

In this section, we will look at how to import data to AnalyticDB for PostgreSQL using the Bulk Load method. Kettle supports importing data to ADB for PostgreSQL by using bulk load (COPY). The following example shows detailed steps to bulk load data from an external file to ADB for PostgreSQL.

1. Create a new transformation in Kettle.

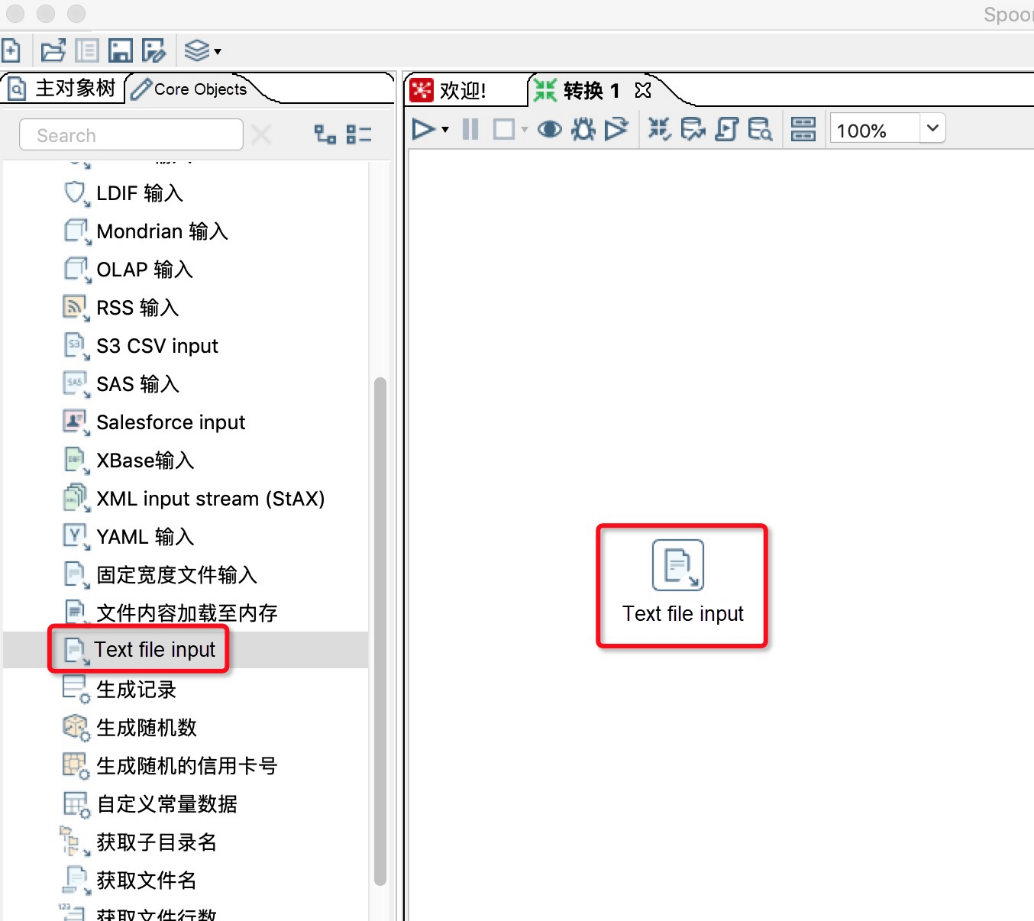

2. In the transformation, create a new text file as the output source.

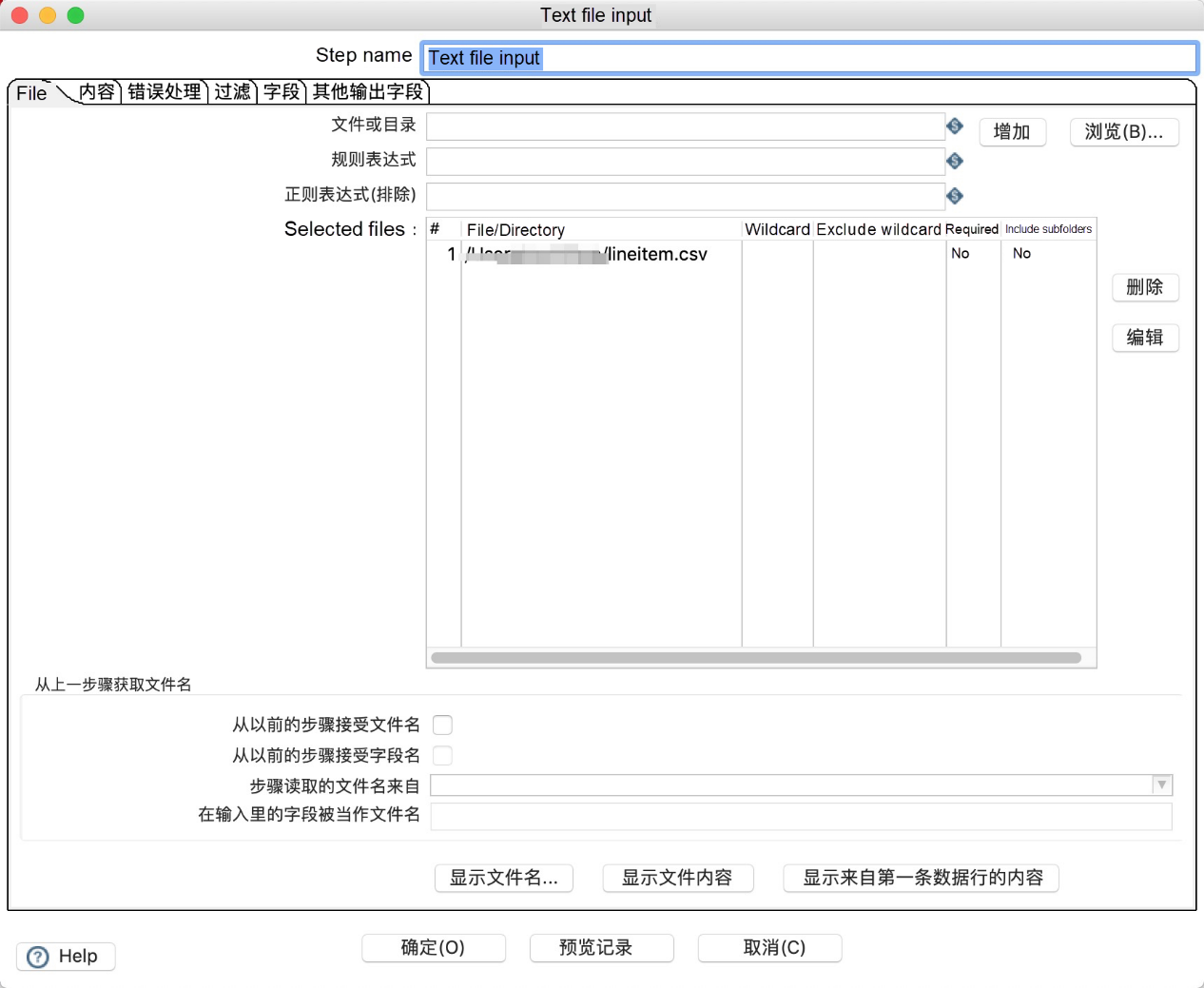

3. Double-click the text file input icon and select the target input text file.

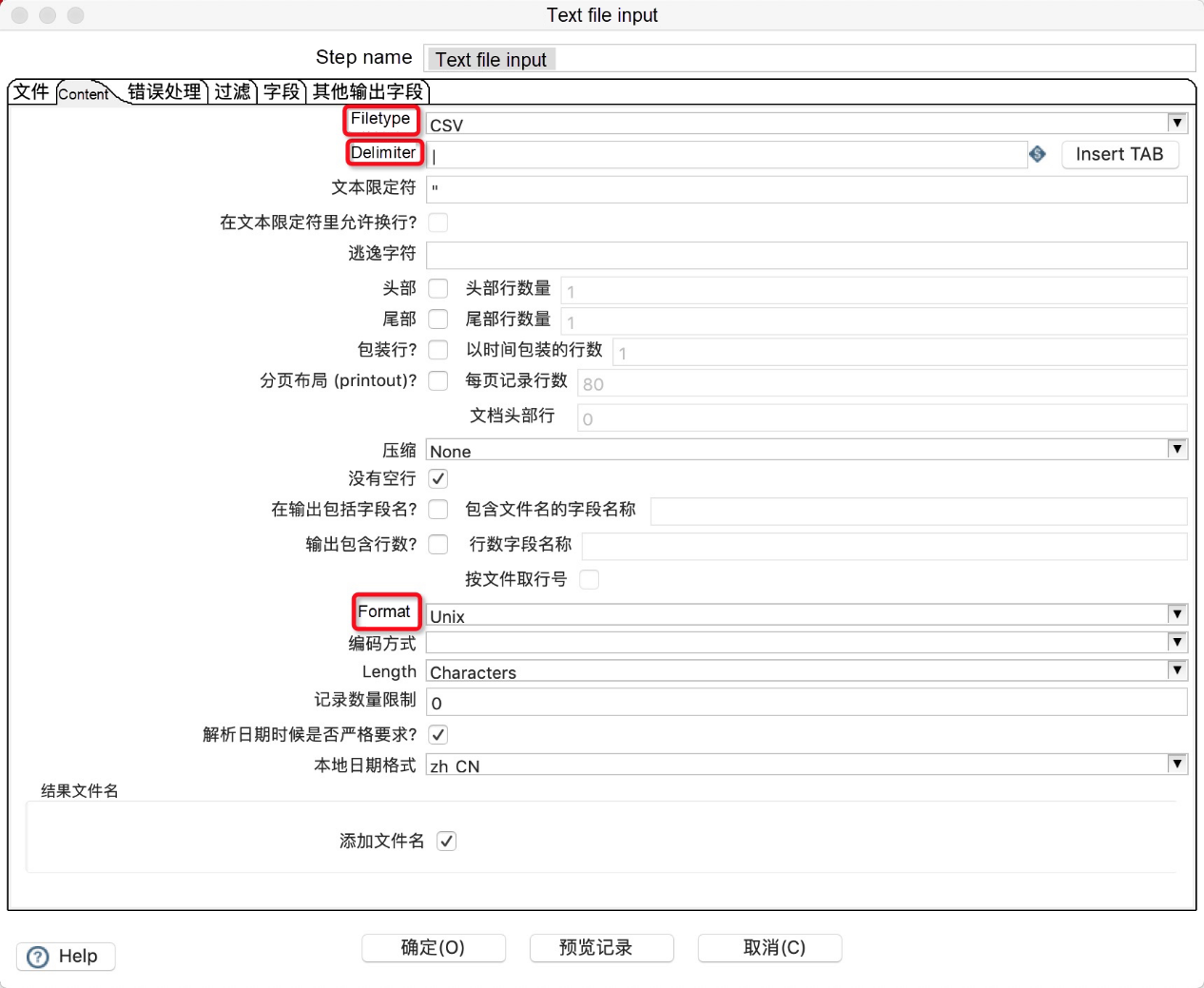

4. In the Content tab, configure a delimiter for the input file.

5. In the Fields tab, define fields in the input file.

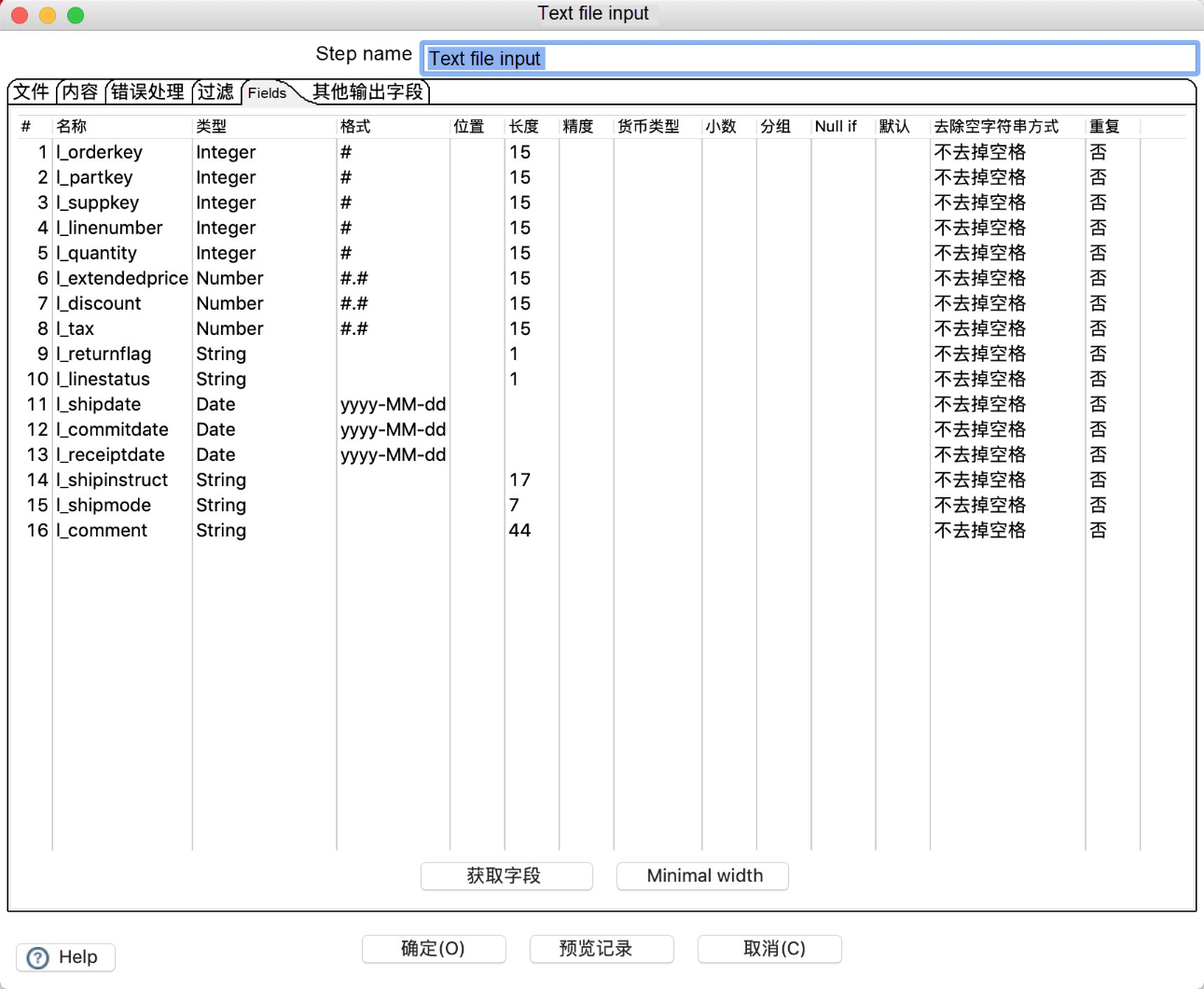

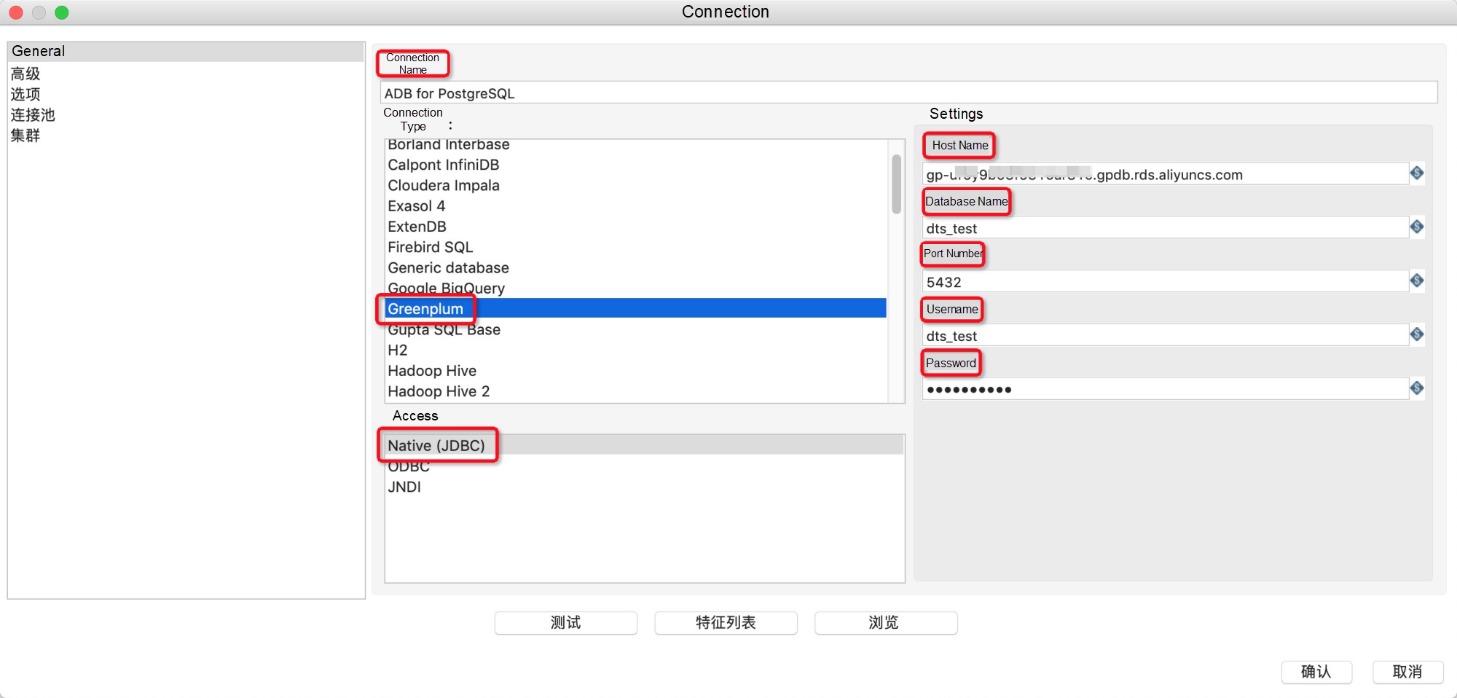

6. In the transformation, create a new Greenplum database as the input source, with the following parameter configurations.

7. After configuring the preceding parameters, click Test to test the connectivity. Click OK after a successful test.

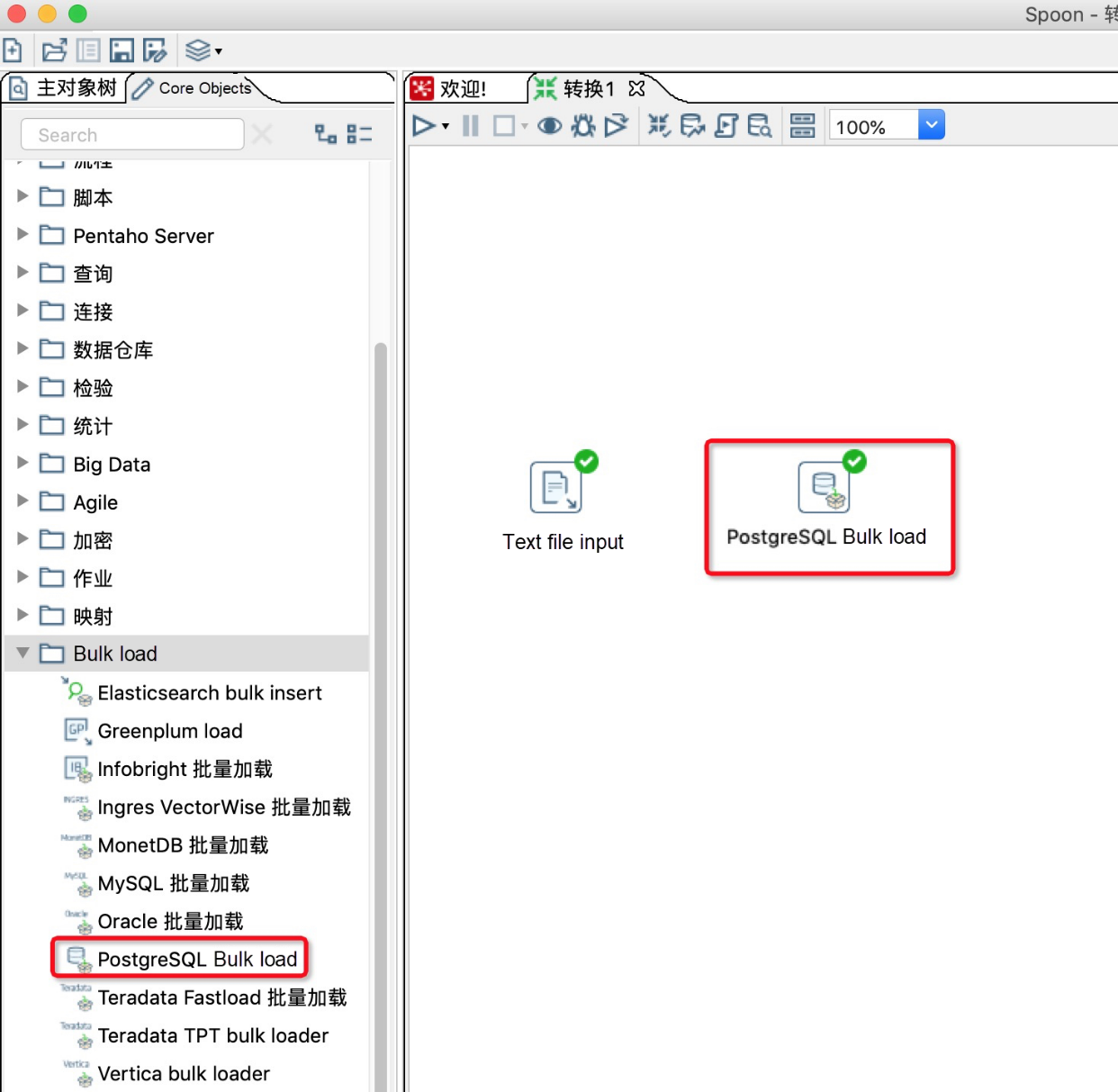

8. Navigate to PostgreSQL bulk load under Bulk load in the left-side Core Objects section.

9. Create a new connection line from file input to PostgreSQL bulk load.

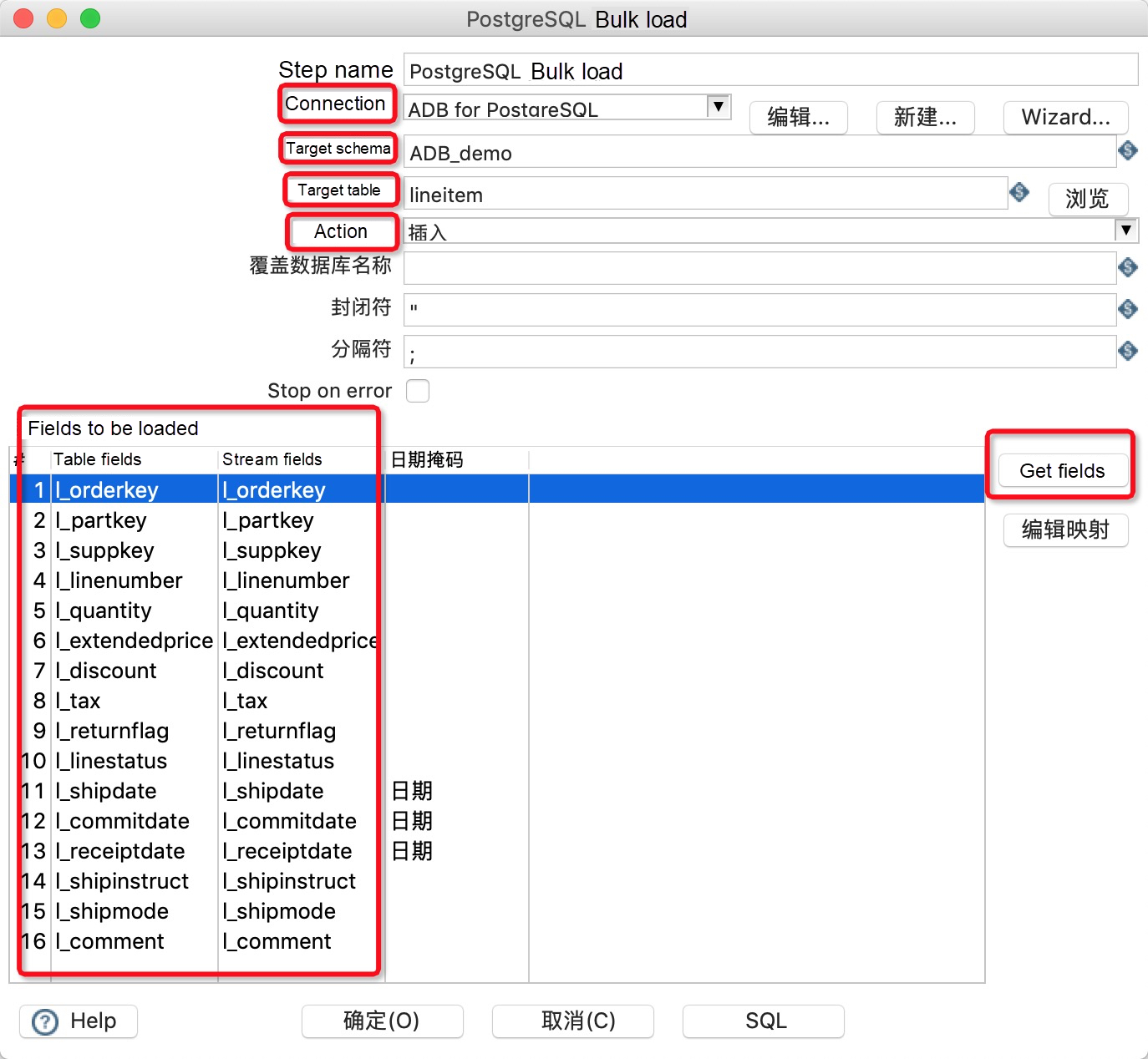

10. Double click the PostgreSQL bulk load icon in the workspace and make parameter configurations in the Bulk load workspace:

11. Click the white arrow to run the transformation and check the operation log and operation status.

After data is imported into AnalyticDB for PostgreSQL, you can use AnalyticDB for PostgreSQL for data analysis.

2 posts | 0 followers

FollowApsaraDB - October 29, 2024

ApsaraDB - July 14, 2025

ApsaraDB - August 8, 2024

ApsaraDB - July 13, 2023

ApsaraDB - April 28, 2020

ApsaraDB - October 30, 2020

2 posts | 0 followers

Follow AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More PolarDB for PostgreSQL

PolarDB for PostgreSQL

Alibaba Cloud PolarDB for PostgreSQL is an in-house relational database service 100% compatible with PostgreSQL and highly compatible with the Oracle syntax.

Learn MoreMore Posts by Michael Peng