Much like how you can use relational cache to accelerate EMR spark in data analysis, you can also use EMR Spark relational cache to synchronize data across clusters. This article shows you how.

Before we get too deep into things, let's first discuss a few things. First of all, let's look into what Relational Cache actually is. Well, it works as an important feature in EMR Spark that accelerates data analysis mainly by pre-organizing and pre-computing data and provides functionality similar to the materialized view in traditional data warehousing. In addition to speeding up data processing, Relational Cache can also be applied in many other scenarios. This article will mainly describe how to use Relational Cache to synchronize data tables.

Managing all data through a unified data lake is a goal for many companies. However, the existence of different data centers, network regions, and departments in reality inevitably leads to different big data clusters, and data synchronization across different clusters is a common need. In addition, synchronization of older and newer data after cluster migration and site migration is also a common problem. Data synchronization is very complicated and a laborious task. Lots of customized development and manual intervention are required for tasks. This includes migration tool development, incremental data processing, synchronous read and write, and subsequent data comparison. With all of these problems and needs considered, Relational Cache can simplify data synchronization and allow you to implement cross-cluster data synchronization at a relatively low cost.

In the following section, a specific example is given to show how to use EMR Spark Relational Cache to implement cross-cluster data synchronization.

Assume that we have two clusters (for convenience purposes, let's call them Cluster A and B) and you need to synchronize the data in the activity_log table from Cluster A to Cluster B. Also, another thing is that, during the migration process, new data is continuously inserted into the activity_log table. Create an activity_log table in Cluster A:

CREATE TABLE activity_log (

user_id STRING,

act_type STRING,

module_id INT,

d_year INT)

USING JSON

PARTITIONED BY (d_year)Now, insert two entries as historical information:

INSERT INTO TABLE activity_log PARTITION (d_year = 2017) VALUES("user_001", "NOTIFICATION", 10), ("user_101", "SCAN", 2)Then, create a Relational Cache for activity_log:

CACHE TABLE activity_log_sync

REFRESH ON COMMIT

DISABLE REWRITE

USING JSON

PARTITIONED BY (d_year)

LOCATION "hdfs://192.168.1.36:9000/user/hive/data/activity_log"

AS SELECT user_id, act_type, module_id, d_year FROM activity_logIn the above code, note that REFRESH ON COMMIT indicates that the cache data will be automatically updated when the source table is updated. Next, LOCATION specifies the storage location of the cache data. We point the cache location to HDFS in Cluster B to synchronize data from Cluster A to Cluster B. The Cache field and Partition information remain the same as the source table.

In Cluster B, we also create an activity_log table:

CREATE TABLE activity_log (

user_id STRING,

act_type STRING,

module_id INT,

d_year INT)

USING JSON

PARTITIONED BY (d_year)

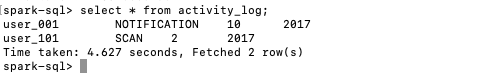

LOCATION "hdfs:///user/hive/data/activity_log"Following this, run MSCK REPAIR TABLE activity_log to automatically repair the corresponding meta information, and then run the query statement. We can see that the two entries inserted into the table in Cluster A can be queried in Cluster B.

Continue to insert new data in Cluster A:

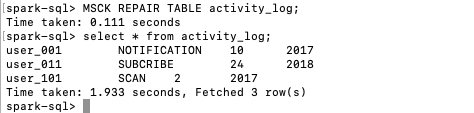

INSERT INTO TABLE activity_log PARTITION (d_year = 2018) VALUES("user_011", "SUBCRIBE", 24);Run MSCK REPAIR TABLE activity_log in Cluster B, and then query the activity_log table again. The result shows that the newly inserted data has been automatically synchronized to the activity_log table in Cluster B. For a partition table, when new partition table data is inserted, Relational Cache can synchronize new partition table data incrementally instead of synchronizing all the data again.

If the new data in activity_log of Cluster A is added by using Hive or other means to import to Hive tables externally instead of being inserted through Spark, you can run the REFRESH TABLE activity_log_sync statement to trigger data synchronization manually or through scripts. If new data is bulk exported by partitions, you can also use statements like REFRESH TABLE activity_log_sync WITH TABLE activity_log PARTITION (d_year=2018) to synchronize partition table data incrementally.

Relational Cache can ensure the data consistency of the activity_log tables in Cluster A and Cluster B. Downstream tasks or applications that are dependent on the activity_log table in Cluster A can be switched to Cluster B at any time. At the same time, you can suspend applications or services that write data to the activity_log table in Cluster A at any time, point them to the activity_log table in Cluster B and restart them to complete migration of upper-layer applications or services. After the migration is completed, you only need to clear activity_log and activity_log_sync in Cluster A.

In this article, we have described how to use Relational Cache to easily synchronize data in data tables of different big data clusters. In addition to this application scenario, Relational Cache can be applied in many other scenarios, for example, creating OLAP platforms with response in seconds, interactive BI, as well as Dashboard applications and accelerating ETL. In the future, we will share the Relational Cache best practices as it applies to more scenarios.

Use Relational Cache to Accelerate EMR Spark in Data Analysis

62 posts | 7 followers

FollowAlibaba Clouder - July 21, 2020

Alibaba EMR - December 2, 2019

Alibaba EMR - March 16, 2021

Alibaba EMR - December 2, 2019

Alibaba EMR - August 28, 2019

Alibaba Clouder - April 14, 2021

62 posts | 7 followers

Follow Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Alibaba Cloud provides big data consulting services to help enterprises leverage advanced data technology.

Learn More Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

Alibaba Cloud experts provide retailers with a lightweight and customized big data consulting service to help you assess your big data maturity and plan your big data journey.

Learn More Quick BI

Quick BI

A new generation of business Intelligence services on the cloud

Learn More ApsaraDB for HBase

ApsaraDB for HBase

ApsaraDB for HBase is a NoSQL database engine that is highly optimized and 100% compatible with the community edition of HBase.

Learn MoreMore Posts by Alibaba EMR