When Tesla launched its electric vehicles and Apple launched its iPhone X with Face ID, the market recognized the vast business opportunities that Artificial Intelligence (AI) chips bring. AI is at the core of the Internet of Things (IoT) and Industry 4.0. As data volumes keep growing, Big Data analysis can be expected to keep improving. We are now only seeing the tip of the iceberg for predictive analytics.

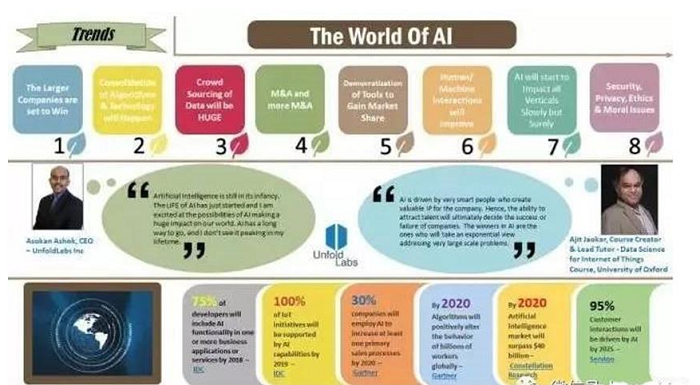

Below are some statistics that suggest AI will dominate the future:

• By 2018, 75% of developers will employ AI technologies in one or more business applications or services. Source: IDC

• IDC also predicts that by 2019, one can use AI technology on 100% of IoT devices.

• Based on a survey it conducted, Gartner predicts that by 2020, 30% of companies will introduce AI to at least one major sales process.

• Additionally, Gartner believes that by 2020, algorithms will actively change the behaviors of millions of workers worldwide.

• Constellation Research predicts that by 2020, the AI market will surpass US$40 billion.

According to Servion, AI will drive 95% of customer interactions by 2025. Moving on, let us look at the top eight AI trends for 2018.

Figure 1. Top AI Trends for 2018

AI has immense potential for vertical applications in industries such as retail, transportation, automation, manufacturing, and agriculture. The primary market drivers are the increasing use of AI technologies in different end-user verticals and the resulting service improvements for end consumers.

One must remember that the rise of the AI market depends on the adoption of IT infrastructure, smartphones, and smart wearable devices. Moreover, Natural Language Processing (NLP) applications account for a large part of the AI market. As NLP technologies evolve, they keep driving consumer service growth. Automotive infotainment systems, AI robots, and AI-enabled smartphones are also seeing significant growth globally.

The extensive use of Big Data and AI in the healthcare industry has improved disease diagnosis, enhanced the balance between medical professionals and patients, and reduced medical costs. This has been backed by growing cross-industry cooperation. Moreover, AI is widely used in clinical trials, large-scale medical programs, and medical advice, as well as in promotion and sales development. AI is expected to play an increasingly significant role in the healthcare industry from 2016 to 2022. The market is estimated to reach US$7.9888 billion in 2022, up from US$667.1 million in 2016, at a CAGR of 52.68%.
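To make the growth figure concrete, here is a minimal Python sketch of the standard compound annual growth rate (CAGR) formula applied to the two market sizes quoted above. The function name `cagr` and the choice of six compounding periods (2016 to 2022) are assumptions made for illustration. Under that assumption the implied rate comes out at roughly 51%, a little below the quoted 52.68%. One possible explanation, which the article does not confirm, is that the source forecast uses a different base year.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.5 = 50% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the article, in millions of US dollars.
market_2016 = 667.1
market_2022 = 7988.8

# Assumption: six compounding periods between 2016 and 2022.
implied_rate = cagr(market_2016, market_2022, years=6)
print(f"Implied CAGR, 2016-2022: {implied_rate:.2%}")
```

Changing `years` shows how sensitive the headline rate is to the base year chosen for the forecast period.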

Since the beginning of the era of PCs and mobile phones, users have interacted with their devices through monitors and keyboards. However, as smart speakers, Virtual Reality (VR)/Augmented Reality (AR), and autopilot systems enter our daily lives, we can communicate with computing systems smoothly without traditional monitors. AI makes technologies more intuitive and easier to operate through NLP and machine learning. It can also perform more complex tasks behind technical interfaces. For example, computer vision makes autonomous driving possible, and artificial neural networks enable real-time translation. In other words, AI makes interfaces simpler and smarter, and therefore sets a high standard for future user interactions.

Currently, mainstream ARM-architecture processors are not fast enough to handle large volumes of image computing. Thus, future mobile phone chips will come with a built-in AI computing core. Just as Apple introduced 3D sensing technology to iPhones, Android smartphone manufacturers will follow by introducing 3D sensing applications next year.

The heart of AI chips consists of semiconductors and algorithms. AI hardware, which includes GPUs, DSPs, ASICs, FPGAs, and neuron chips, requires shorter instruction cycles and lower power consumption. Deep learning algorithms must be integrated with this hardware, and the key to successful integration is advanced packaging technology. Generally, GPUs are faster than FPGAs but less power efficient. As a result, the choice of AI hardware depends on each manufacturer's needs.

AI algorithms "get smarter" by progressing from machine learning to deep learning, and ultimately to autonomous learning. Currently, AI is still in the stage of machine learning and deep learning. To achieve autonomous learning, we must solve these four key issues:

• Creation of an AI platform for autonomous machines.

• Ensuring a virtual environment that allows autonomous machines to learn independently. This environment must obey the laws of physics, such as collision and pressure, so that it produces the same effects as the real world.

• Setting the AI "brains" into the frameworks of autonomous machines.

• Building a portal to the VR world. For example, NVIDIA has launched Xavier, an autonomous machine processor, in preparation for the commercialization and popularization of autonomous machines.

In the future, many specialized fields will require super-powerful processors. CPUs, however, are common to all kinds of devices and can be used in any scenario. Therefore, the ideal architecture will combine a CPU with a GPU (or other processors). For example, NVIDIA has launched the CUDA computing architecture, which combines specialized processors (GPUs) with a general-purpose programming model so that developers can implement many different algorithms.

In the time to come, AI and AR will depend on each other. AR can be considered the eyes of AI, while the virtual world created for robot learning is itself virtual reality. However, additional technologies are required to bring people into the virtual environment to train the robots.

The future of IoT is dependent on its integration with AI. We hope this article gave you an insight into the top AI trends to watch out for in 2018.
